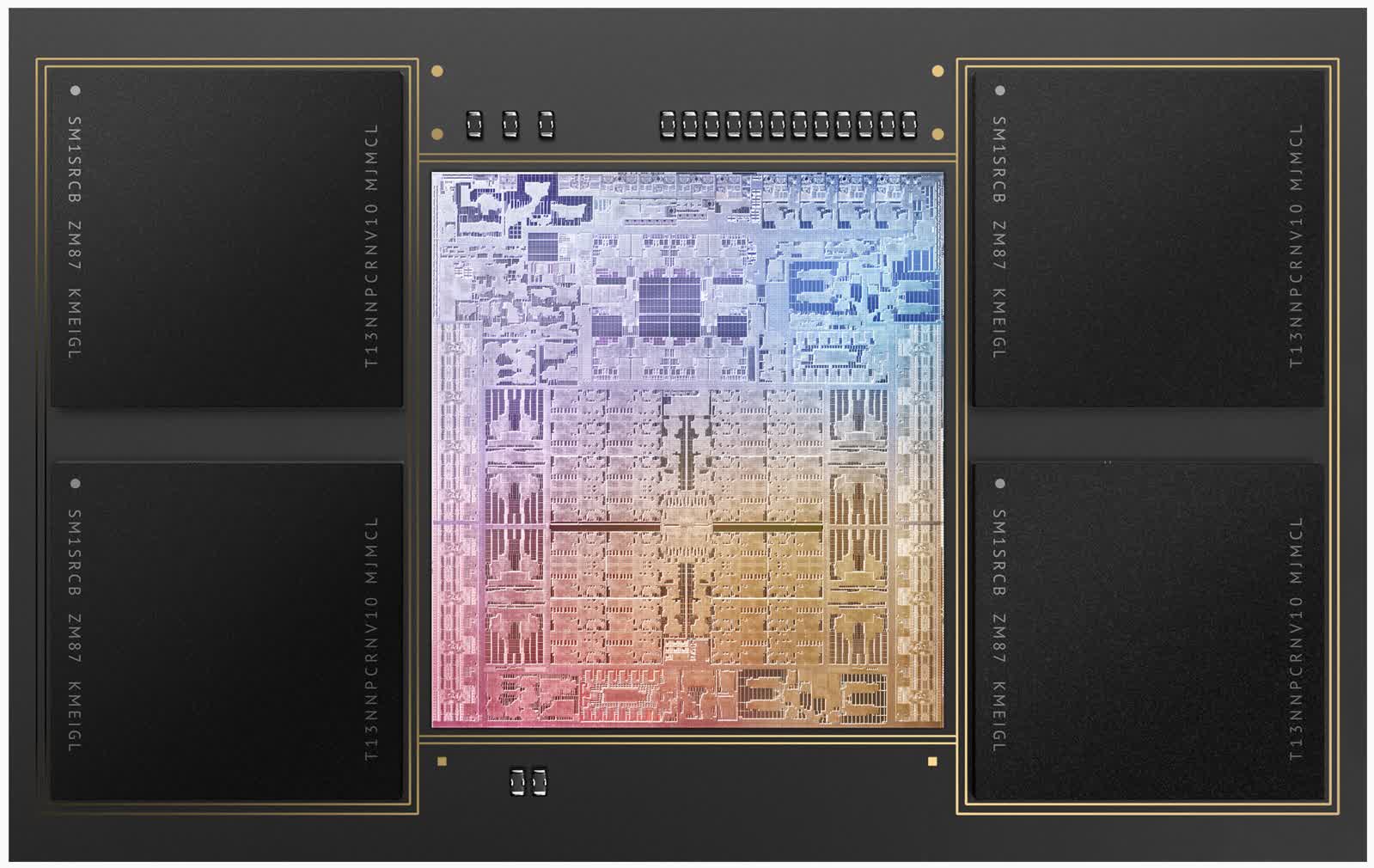

Why it matters: When Apple announced the M1 Pro and M1 Max-based MacBook Pros, it made a number of claims about the performance of the new chipsets when compared to existing solutions from the PC world. As more people get their hands on the new devices, those claims are being validated one by one in various tests. The most impressive finding by far is that Apple has managed to create power-efficient mobile chips that can compete in certain productivity tasks with workstation-grade hardware.

Apple’s M1 Max chipset has already shown its teeth in Adobe Premiere Pro, where it scored higher than 11th-generation Intel CPUs paired with Nvidia RTX 3000 series laptop GPUs. This is no small feat, especially since it does so with much lower power consumption than those setups.

Lead Affinity Photo developer Andy Somerfield was curious to see what the new SoC is capable of, so he created a battery of tests to stress its GPU. Somerfield also wrote a Twitter thread where he details how the Affinity team has been gradually building GPU support into the architecture of the app over the past 12 years.

Somerfield was careful to note there’s no single measure of GPU performance, which is why the benchmark results he observed can only be taken as an indication of how well Affinity Photo will run on Apple’s latest silicon. The best GPU for software like Affinity Photo and Affinity Designer would be one that has high compute performance, fast on-chip bandwidth, and fast transfer on and off the chip.

The fastest GPU the Affinity team had previously tested was the AMD Radeon Pro W6900X, which is sold by Apple as an MPX module for the Mac Pro and coincidentally costs as much as a fully-specced 14-inch MacBook Pro.

It turns out the M1 Max outperforms it in every one of the Affinity tests, despite having only 400 GB/s of memory bandwidth that is shared with a CPU, a Neural Engine, and a Media Engine. By comparison, the Radeon Pro W6900X has 32 gigabytes of GDDR6 that can deliver up to 512 GB/s of memory bandwidth.

The only result where the Radeon Pro W6900X comes close to the M1 Max is the Raster (Single GPU) test where it manages a score of 32,580 points, while the Apple chipset stretches that to 32,891 points. It’s also worth noting the AMD card can draw up to 300 watts of power, while the M1 Max will draw considerably less in all scenarios.
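To put those figures in proportion, here is a quick back-of-the-envelope sketch using only the numbers quoted in this article. The helper name `percent_lead` and the variable names are illustrative, not part of any Affinity benchmark tooling. It suggests the M1 Max leads the Raster (Single GPU) test by roughly 1%, while working with about 22% less peak memory bandwidth than the Radeon Pro W6900X.

```python
def percent_lead(a: float, b: float) -> float:
    """Return how far a exceeds b, expressed as a percentage of b."""
    return (a - b) / b * 100.0


# Raster (Single GPU) scores quoted in the article.
m1_max_raster = 32_891
w6900x_raster = 32_580

# Peak memory bandwidth in GB/s, as quoted in the article.
m1_max_bandwidth = 400
w6900x_bandwidth = 512

raster_lead = percent_lead(m1_max_raster, w6900x_raster)
# A negative lead is a deficit, so flip the sign for readability.
bandwidth_deficit = -percent_lead(m1_max_bandwidth, w6900x_bandwidth)

print(f"M1 Max raster lead over W6900X: {raster_lead:.2f}%")
print(f"M1 Max bandwidth deficit vs W6900X: {bandwidth_deficit:.1f}%")
```

This compares only the one test where the two scores are published side by side here, so it says nothing about the margins in the other Affinity tests.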

Overall, Apple’s latest silicon seems to be built with power users in mind, and builds on the strong foundation of the M1 chipset. The only workload where the M1 Pro and M1 Max don’t seem to excel is gaming, with the former being slower than an Nvidia RTX 3060 laptop GPU and the latter being easily surpassed by an RTX 3080 laptop GPU or an AMD Radeon 6800M.

Of course, this shouldn’t come as a surprise, as the Mac isn’t the go-to platform for gamers. If you’re looking for an in-depth analysis of the new Apple chips, AnandTech’s Andrei Frumusanu has a great write-up.

https://www.techspot.com/news/91941-apple-m1-max-faster-than-6000-amd-radeon.html