In context: In recent years, delidding has gradually become a lost art due to improvements in modern IHS design, the use of better thermal interface materials, and idiot-proof tools for the delidding process. However, it's always a pleasant surprise when a processor like the Ryzen 7 5800X3D that marks the end of an era gets delidded by an enthusiast who believes the reward is well worth the risks.

Earlier this month, an anonymous overclocker delidded an engineering sample from the upcoming Ryzen 7000 CPU lineup, revealing the bare dies in all their glory along with the first major change to AMD's integrated heat spreader (IHS) design in years.

Normally, this procedure is only done by enthusiasts looking to reduce operating temperatures without resorting to exotic cooling hardware. For obvious reasons, the Ryzen 7000 series delid was only a tease meant to showcase how AMD is working around some of the problems of moving to an LGA socket for the new CPUs. At the same time, it suggests that delidding Ryzen 7000 chips will be a more daunting task than on any previous CPU due to the way capacitors are arranged on the interposer.

This week, another overclocker going by @Madness7771 on Twitter revealed he had delidded the Ryzen 7 5800X3D, the last processor of the AM4 era. There are no surprises in terms of what's under the IHS — a core complex die and SoC die are present, and one can also observe some nonconductive protective goop where a second core complex die would have been if AMD had decided to make a Ryzen 9 5900X3D.

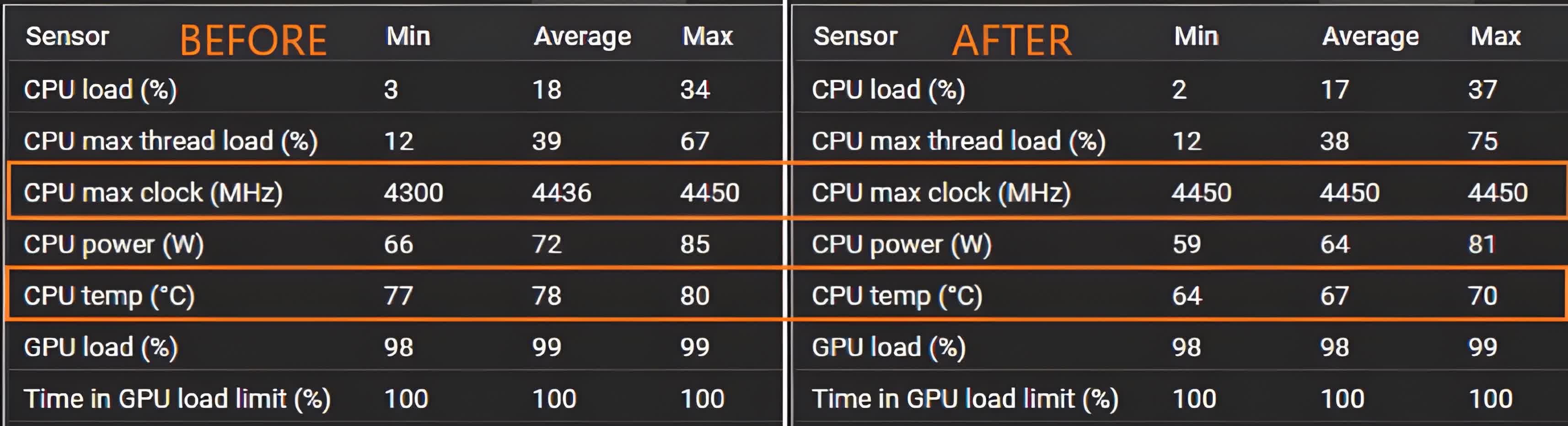

What's interesting about this delid is that replacing the factory solder thermal interface material with Thermal Grizzly's Conductonaut liquid metal led to the CPU running 10 degrees Celsius cooler and sustaining higher clocks during gaming workloads. Madness says this was a worthwhile improvement, as the 5800X3D previously reached temperatures of up to 90 degrees Celsius during long gaming sessions in titles like Forza Horizon 5.

That said, delidding a modern processor produces more modest results than it used to while requiring a great deal of patience and finesse. Madness used classic tools like razor blades and a heat gun to do the job, and it goes without saying this procedure carries a high risk of damaging the delicate core complex die with the stacked 3D V-Cache.

Overall, the results are impressive, and there's little else one can do to improve how the Ryzen 7 5800X3D performs. Overclocking isn't officially supported due to design limitations, but there are signs that manufacturers like MSI may soon add limited overclocking support on select high-end AM4 motherboards.

https://www.techspot.com/news/95078-ryzen-7-5800x3d-cpu-gets-delid-improved-temperatures.html