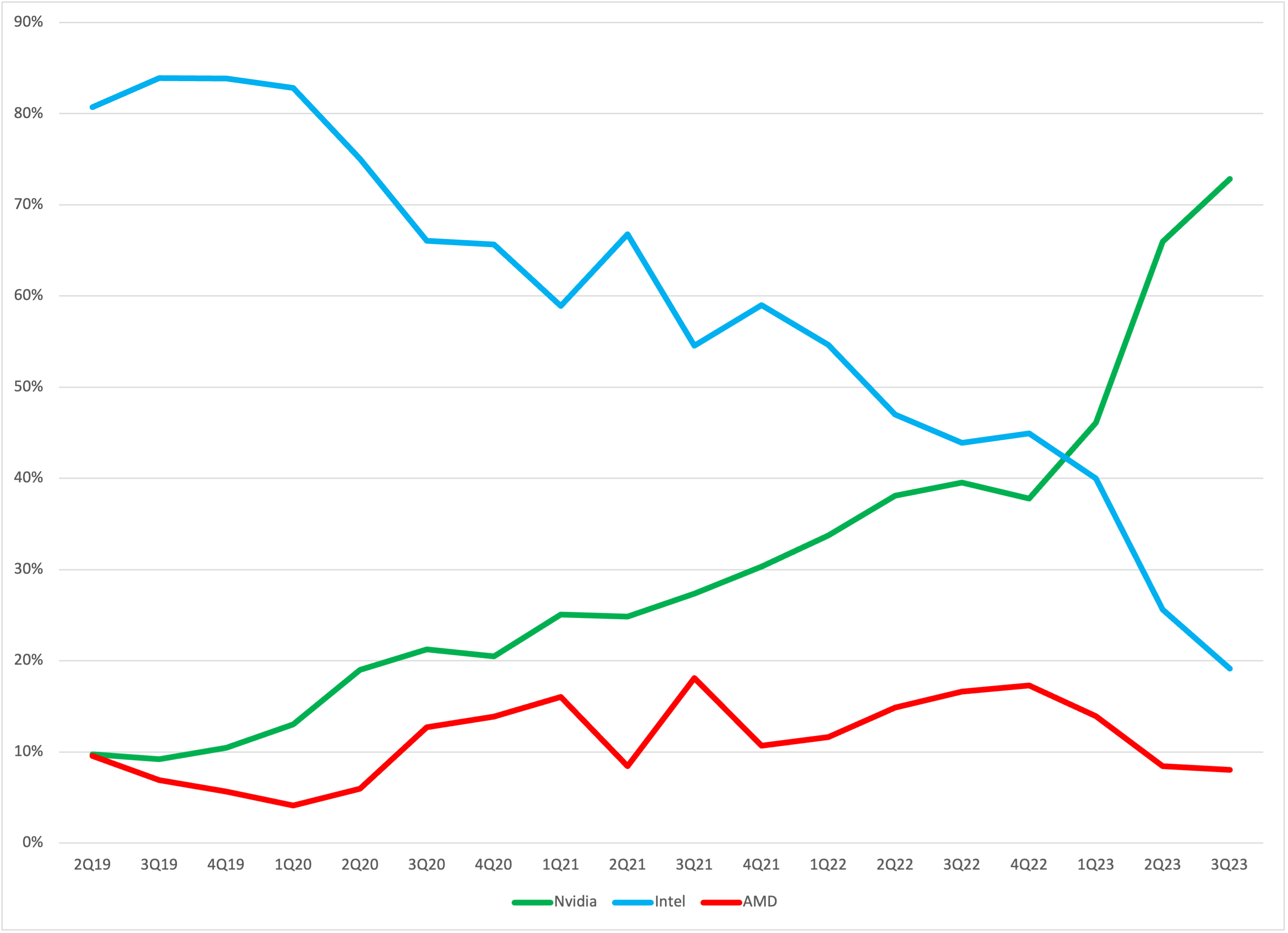

Why it matters: The revenue share figures for data center processors present a clear picture. Nvidia has experienced robust growth for years and currently leads the market. The primary question now is: what is the new normal for their market share?

A week ago, we came across a chart from HPC Guru on Twitter that was so striking we had to make our own version to really believe it. No slight to HPC Guru, it's just that the numbers were eye-opening, and in the end our numbers matched theirs.

Editor's Note:

Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries.

The chart below shows data center processor revenue market share by quarter going back to 2Q19. It shows the collapse of Intel and the incredible rise of Nvidia. And of course, what really stands out in all of this is just the scale of Nvidia's surge. In the latest quarter, they claimed 73% market share. We knew they were doing well, but as they say, a picture is worth 1,000 words, or $14 billion a quarter.

Data Center Revenue Share – AMD, Intel and Nvidia

In the replies to that thread, Ian Cutress asked the reasonable question of how much the whole data center market has grown in that time. The answer is that the market grew at a 30% CAGR (compound annual growth rate) over this period: Intel shrank at a 6% rate, AMD grew at 27%, and Nvidia grew at 103% a year.

Also clear from this data is that Nvidia's truly spectacular growth came in the last year since the unveiling of ChatGPT wowed the world. But if we strip out those last 12 months, they were already growing at a 67% CAGR.
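For readers who want to check growth rates like these against their own numbers, here is a minimal sketch of the CAGR arithmetic for quarterly data. The function name `cagr` and the sample figures are our own illustration, not the data behind the charts.

```python
def cagr(start_value: float, end_value: float, quarters: int) -> float:
    """Compound annual growth rate between two quarterly data points.

    `quarters` is the number of quarters elapsed between the two points.
    """
    if start_value <= 0 or end_value < 0 or quarters <= 0:
        raise ValueError("need a positive start, a non-negative end, and at least one quarter")
    years = quarters / 4  # convert the quarterly span into years
    return (end_value / start_value) ** (1 / years) - 1


# Made-up figures for illustration only (NOT the article's data):
# revenue that goes from 1.0 to 16.0 over 16 quarters doubles every year.
example = cagr(1.0, 16.0, 16)
print(f"{example:.0%}")
```

To strip out the most recent 12 months, as in the comparison above, the same function can be called with the data point from four quarters earlier as `end_value` and four fewer quarters in the span.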

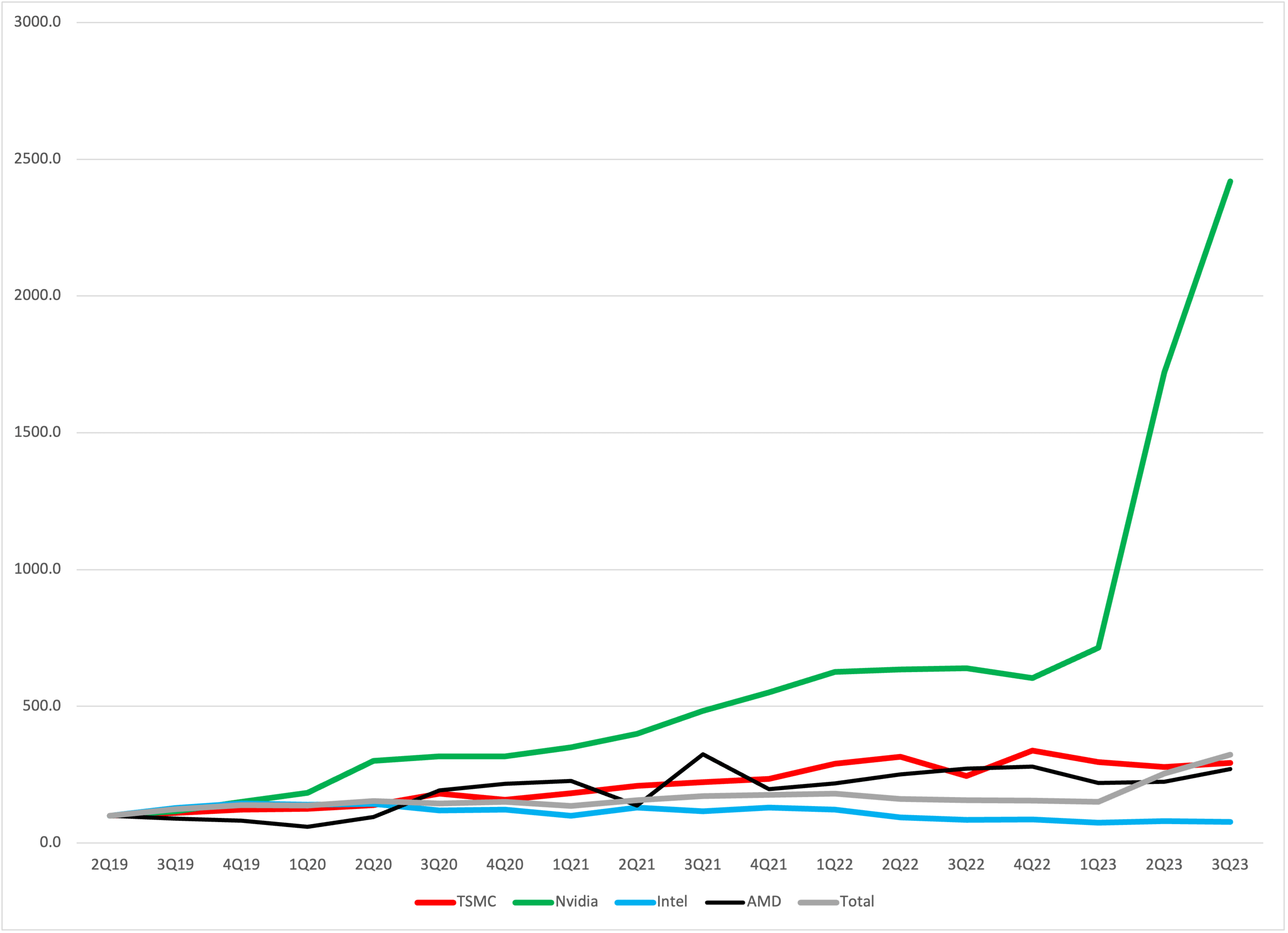

We tried plotting this as a common size graph with 2Q19 set to 100, which is a handy way to compare growth rates.
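The indexing behind a common size graph is simple: divide every quarter by the first quarter and multiply by 100. A rough sketch follows, with a hypothetical helper `common_size` and placeholder company names and values rather than the real segment revenue.

```python
def common_size(series: list[float], base: float = 100.0) -> list[float]:
    """Rescale a revenue series so that its first data point equals `base`."""
    if not series or series[0] <= 0:
        raise ValueError("series must start with a positive value")
    first = series[0]
    return [base * value / first for value in series]


# Placeholder numbers for illustration only (NOT the article's data).
revenue_by_quarter = {
    "Company A": [50.0, 75.0, 100.0],
    "Company B": [200.0, 210.0, 220.0],
}

# Every series now starts at 100, so relative growth is directly comparable.
indexed = {name: common_size(values) for name, values in revenue_by_quarter.items()}
for name, values in indexed.items():
    print(name, [round(v, 1) for v in values])
```

Because each series starts at the same level, a company with small absolute revenue but fast growth is not visually flattened by a larger one.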

Common Size Growth in Data Center Revenue

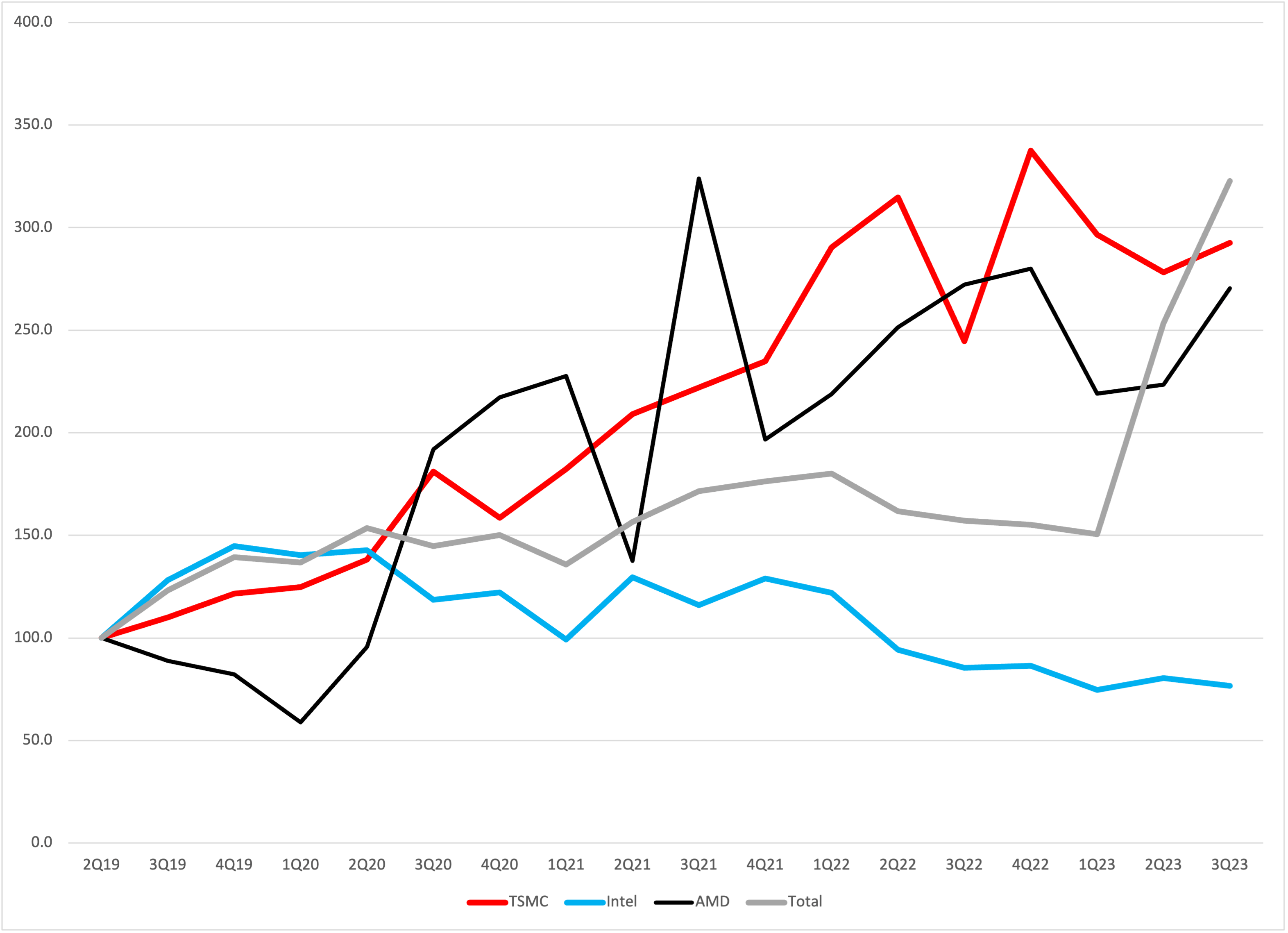

But Nvidia's growth is so strong that it drowns out the signal from all the others. So we plotted it again, without Nvidia. This time, we added TSMC to the mix as another proxy for the growth of the market.

Common Size Growth in Data Center Revenue ex-Nvidia

For those interested in recreating their own charts, this is the raw data. Our sources for the data are company presentations, quarterly reports and SEC filings. We looked at Data Center segment revenue, but during this period, both Intel and AMD reclassified how they segment their data, so the early quarters are a bit different from the more recent quarters.

For TSMC we used their "HPC" segment reports, which they only began breaking out in 2Q19, so that is where we start the series. Finally, it is worth keeping in mind that all of these segments include more than just CPUs and GPUs, as each company defines the segment a bit differently. This probably means that Intel's early revenue is inflated, as it included networking, memory and a bunch of other products, so their decline is not quite as steep as it may appear. That all being said, the overall trend is still very clear.

We can draw two important conclusions from this. First, after years of effort and some serious share gains, AMD is still a distant third in this market with less than 10% share. They are still half of Intel's size, which is not a surprise, but stands out starkly in the light of Nvidia's rise.

The second, more important conclusion is that this market is now permanently changed. Again, this is one of those things that everyone knows, but not everyone realizes. It is unlikely that Nvidia can retain 70% of wallet forever, but by the same token Intel had 90% share for over a decade. As much as we talk about the rise of heterogeneous compute, we are now entering a period where Nvidia is the common factor in the data center.

As much as the tech world is excited about the bold future of AI, another way to understand the rise of Large Language Models (LLMs) and AI in general is that the market is undergoing one of its periodic shifts. Just as the rise of Linux and Intel in the data center in the 1990s heralded a seismic shift in the market to a new paradigm of computing which today we call the cloud, the rise of LLMs seems to mark the rise of a new compute paradigm based on Nvidia silicon.

The question for the foreseeable future is not "Can Intel reclaim its data center crown?" (it cannot). Instead, the question is just how dominant Nvidia will be. Can they maintain a 70% share (probably not), or is 50% the long-term status quo (very possibly)?

https://www.techspot.com/news/101082-there-no-going-back-new-data-center-dominated.html