AMD reported its quarterly earnings this week with a lot of moving parts, but most importantly they came in a little better than expected, which is exactly what the company needs.

Over its history, AMD has gone from the company that couldn't shoot straight to the leader in its field and probably one of the best at execution. As we highlighted a few months back, the company now needs to continue building its roadmap and find pieces of the market to claim. The company does not need to report blowout earnings; it just needs to continue building on what appears to be a solid head of momentum.

Editor's Note:

Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries.

By raw numbers, the quarter went just fine. They reported revenue of $5.4 billion, just ahead of consensus of $5.3 billion, and EPS of $0.58 versus expectations of $0.57. The stock was trading up after hours, but we would not be surprised if that's only temporary. As we said, not exciting, just steady progress.

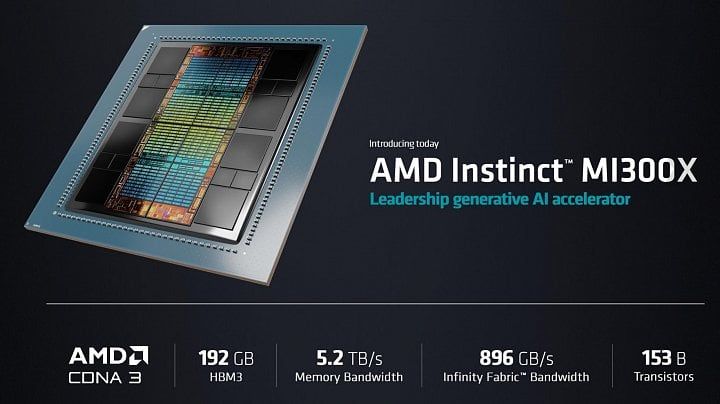

Beyond the numbers, the one thing everyone wanted to know was how well the company is progressing in the data center, specifically around AI. And here the news was generally good. They have attracted a lot of interest from the usual suspects for their newly launched MI-series GPUs.

They only announced these a month ago, and the product has just begun sampling, but judging from executive commentary, they have gained a lot of traction in lining up demos. This is still early days, both for AMD's new products and for "AI", so it's too soon to expect a huge change in AMD's results from the new products, but they are in a good position.

Put another way, Nvidia is streets ahead of everyone in the AI market, due to their foresight and no small amount of luck, but Nvidia will not, cannot be the only provider of data center AI semis, and AMD can still do great business as the leading fast follower in the market. Not for nothing, AMD is leading the way for Windows PC CPUs with built-in AI blocks, so they have not been asleep at the AI wheel like certain others.

We also think there are some important takeaways for the broader market. A key trend that has emerged is that the hyperscalers are, for the most part, scrambling with their AI strategies. Some of them are increasing their capex budgets for 2023 to accommodate AI needs; others are waiting until next year.

This means that AI is cannibalizing some market share this year from CPUs, but not all of it. And it seems clear that next year's budgets will be big for all hyperscalers, both for AI and for traditional workloads. AMD expects AI inference to become a $150 billion opportunity, which is a really big number. Perhaps most interesting was the increased focus on enterprise (i.e., non-hyperscale customers). This has been a somnolent market for a decade, steadily seeping into the cloud, but it has now reignited to the point that AMD (among others) is investing in tooling up its sales effort to support the market.

Sifting through all of this, AMD's quarter had a lot of moving parts. The data center and AI stories were important and sounded good, but everything else was mixed.

Gross margins were good enough but not particularly inspiring; supply challenges seem to have been worked through but are now a concern for the new products; consumer demand for PCs is still recovering; a fair portion of their data center build this year rests on a single order for a supercomputer; the 5G buildout helped, but is now tapering. The list goes on. Over the next few quarters, AMD will remain a battleground stock, but the company itself is in a great position longer term.

https://www.techspot.com/news/99678-what-next-amd-building-momentum.html