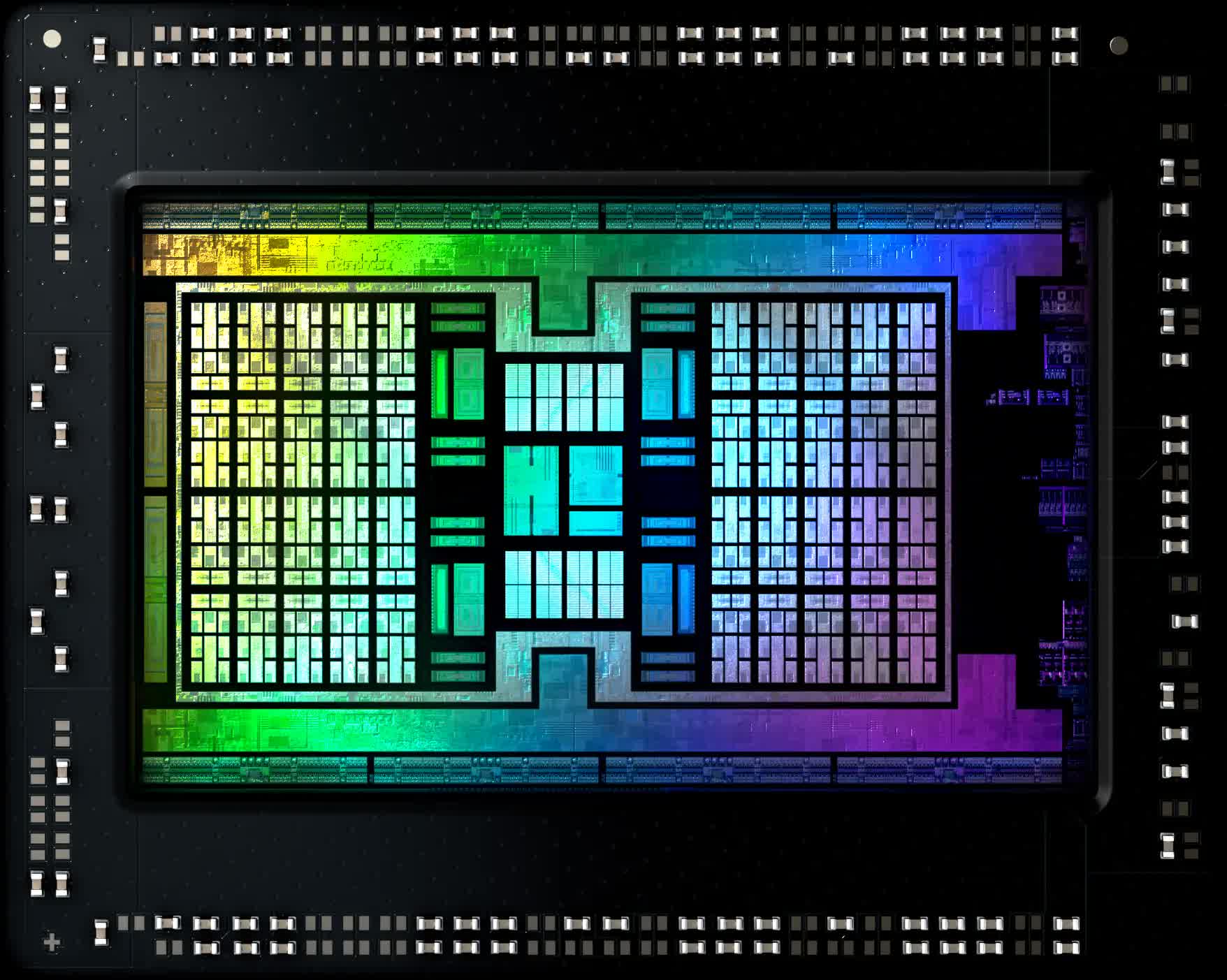

In the space of four years, GPUs have gone from having a few megabytes of cache to over tenfold that figure. Why did this happen and what benefits has it brought us?

Why GPUs are the New Kings of Cache. Explained.

- Thread starter neeyik

Theinsanegamer

Posts: 5,450 +10,228

I'm still waiting for AMD to combine their iGPU with 3D cache and give us something great. The upcoming 16 CU Strix Point would be a great opportunity.

WhiteLeaff

Posts: 471 +856

Wouldn't 3D stacking, as used in HBM, be a way to solve the problem of caches no longer shrinking as processes advance?

Kn0xx

Posts: 46 +51

Hasn't NVIDIA started to boost some of its enterprise line, with the CPU and GPU sharing a massive amount of VRAM/cache, like AMD's "type" but overboosted?

Is it possible that, at some point, CPUs with integrated RAM shared with the GPU will start to reduce the need for large "middleware" caches?

Feng Lengshun

Posts: 69 +29

I'm wondering if we'll eventually just see a general APU that combines all of these together, much like the shared SDRAM in consoles. Right now it doesn't seem great, but there are experiments that substitute expensive GPUs with an APU fed with a bunch of DRAM that's converted into VRAM.

It's starting to feel like APU can become one giant SoC at some point, with integrated CPU, GPU, SDRAM working closely together. And it is rather appealing given that you can just grab a MOBO, an APU, and a PSU, and done, that's a computer and it works great...

...but I bet that they'll price it something stupid and that makes replacing APU really annoying.

Which raises a tangential question: with more graphics cards sporting larger GDDR6XXX RAM on the order of 8GB-24GB, GDDR prices must have become somewhat more "palatable" than before. Why hasn't anyone attempted to replace "ordinary" DRAM for the CPU with the G (greater?) ones?
