Image recognition software has come a long way over the past several years, but the latest work from Google is really mind-blowing. The search giant has come up with a new machine-learning system that not only recognizes the subject of a photo but also describes the entire scene.

In a blog post on the matter, Google research scientists Oriol Vinyals, Alexander Toshev, Samy Bengio and Dumitru Erhan point out that recent research has greatly improved object detection, classification and labeling. Even so, that's not enough for a computer to accurately describe a complex scene.

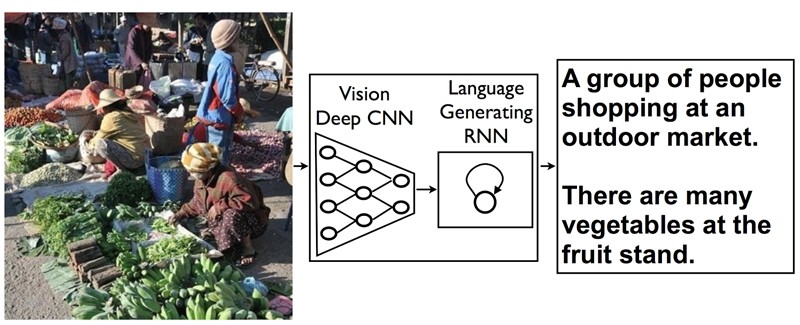

The idea for the new system comes from recent advances in machine translation. In those systems, a Recurrent Neural Network (RNN) converts a sentence in the source language into a vector representation, while a second RNN uses that vector to build a sentence in the target language.

What Google has done is replace the first RNN and its input with a deep Convolutional Neural Network (CNN) that is trained to classify objects in images. The CNN's output is then fed into an RNN designed to produce phrases.
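To make that pipeline a little more concrete, here is a toy sketch in plain Python. It is not Google's code: the tiny vocabulary, the 4x4 "image", the random untrained weights and every function name (`cnn_encoder`, `rnn_step`, `generate_caption`, `init_weights`) are assumptions made for illustration, and the "CNN" is just a single linear projection standing in for a real deep network. It only shows the shape of the idea, where an image becomes a fixed-length vector and that vector seeds an RNN that emits one word at a time.

```python
import math
import random

# Hypothetical toy vocabulary; a real system learns thousands of words.
VOCAB = ["<start>", "<end>", "a", "dog", "on", "grass"]
HIDDEN = 8  # length of the vector passed from the encoder to the decoder


def make_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def init_weights(seed, n_pixels):
    """Random, untrained weights (assumption: real weights come from training)."""
    rng = random.Random(seed)
    w_img = make_matrix(HIDDEN, n_pixels, rng)     # image -> vector
    w_hh = make_matrix(HIDDEN, HIDDEN, rng)        # previous state -> state
    w_emb = make_matrix(len(VOCAB), HIDDEN, rng)   # one embedding row per word
    w_out = make_matrix(len(VOCAB), HIDDEN, rng)   # state -> word scores
    return w_img, (w_hh, w_emb, w_out)


def cnn_encoder(image, w_img):
    """Stand-in for the CNN: flatten the pixels and project to a vector."""
    pixels = [p for row in image for p in row]
    return [math.tanh(x) for x in matvec(w_img, pixels)]


def rnn_step(state, word_id, params):
    """One decoder step: mix the old state with the last word, score next words."""
    w_hh, w_emb, w_out = params
    mixed = [a + b for a, b in zip(matvec(w_hh, state), w_emb[word_id])]
    new_state = [math.tanh(m) for m in mixed]
    return new_state, matvec(w_out, new_state)


def generate_caption(image, weights, max_len=10):
    """Greedy decoding: always pick the highest-scoring next word."""
    w_img, params = weights
    state = cnn_encoder(image, w_img)  # the image vector seeds the RNN
    word_id = VOCAB.index("<start>")
    caption = []
    for _ in range(max_len):
        state, scores = rnn_step(state, word_id, params)
        # Skip index 0 so the decoder never emits "<start>" itself.
        word_id = max(range(1, len(VOCAB)), key=scores.__getitem__)
        if VOCAB[word_id] == "<end>":
            break
        caption.append(VOCAB[word_id])
    return caption


if __name__ == "__main__":
    toy_image = [[0.1 * (r + c) for c in range(4)] for r in range(4)]
    toy_weights = init_weights(seed=0, n_pixels=16)
    print(" ".join(generate_caption(toy_image, toy_weights)))
```

Because the weights here are random, the printed words are meaningless. In a real system, both networks would be trained on images paired with human-written captions so that sensible sentences score highest.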

Sure, it's a little complicated, but once you get the concept, it makes a lot of sense.

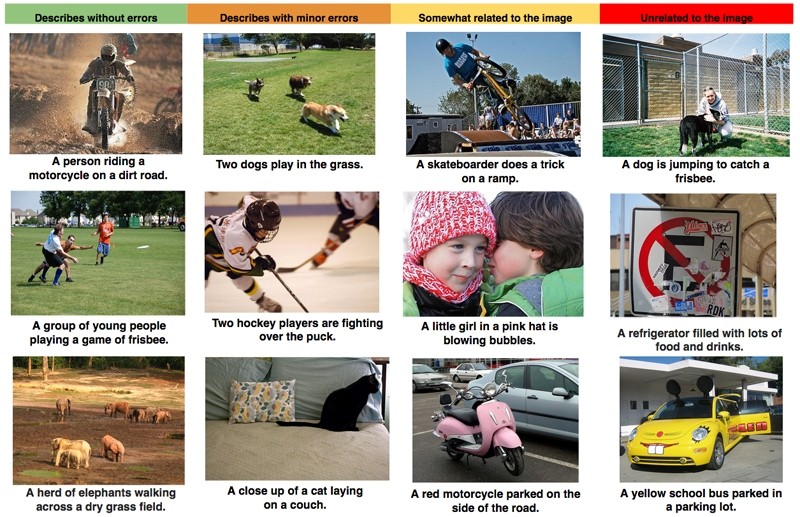

The scientists said their experiments on several openly published data sets have generated sentences that are quite reasonable. It's far from perfect, but we also have to remember that it's still at an early stage of development.

The hope is that one day the system may be able to help visually impaired people understand pictures, provide alternative text for images in regions of the world where mobile connections are slow and, of course, make it easier to search for images on Google.