MSRP is irrelevant if you cannot buy at MSRP. What matters is what I am getting for my money right now. I would have bought a 3080 at $700 to replace my 1080. I had planned for it and had the money, and $700 was a good price for that GPU.

There were none available at that price. It's as simple as that.

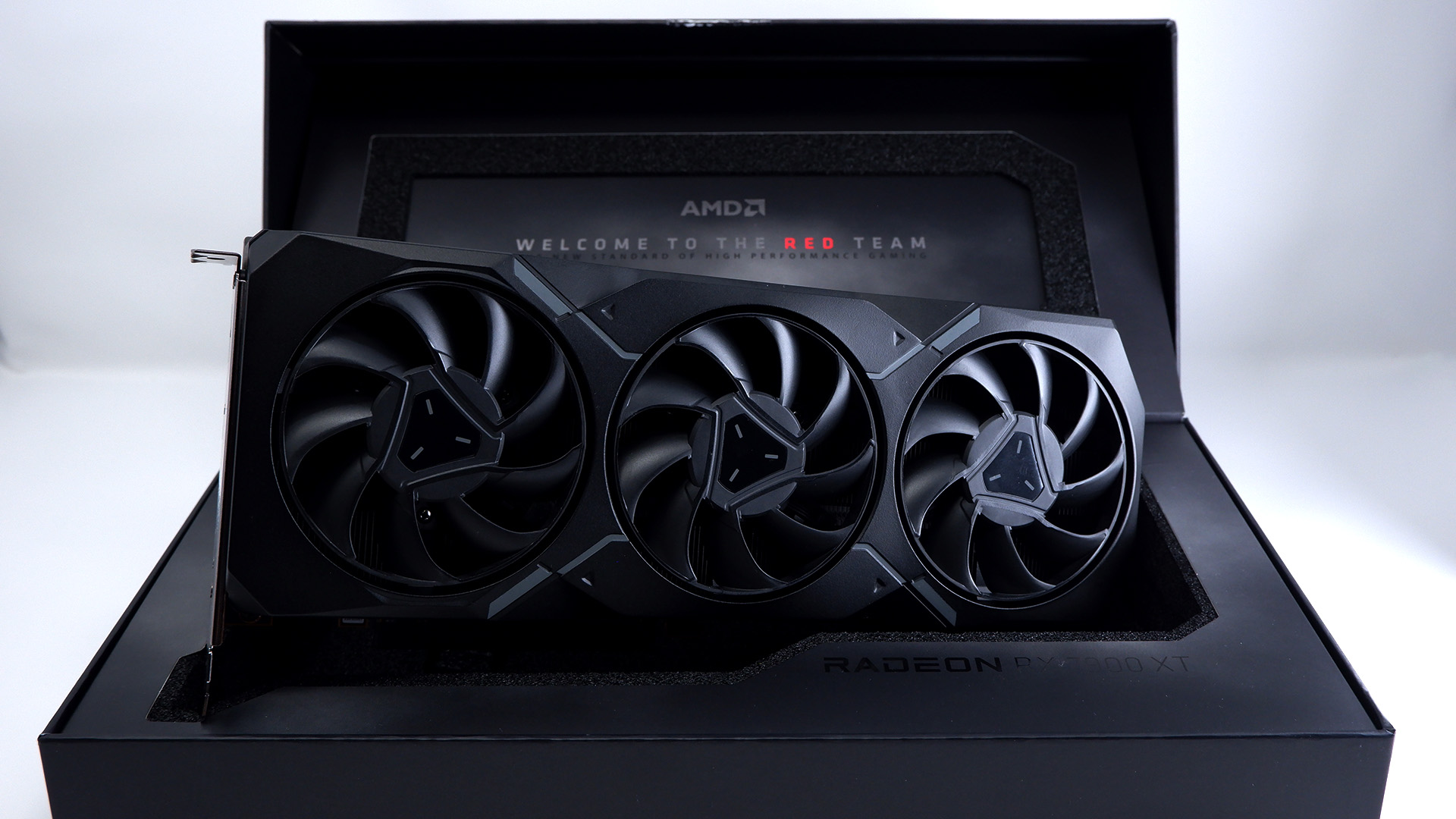

I waited and eventually got a 6800 XT at $560, when its price competitor was the 3070. The 3080 was between $900 and $1,000. Pretty easy decision there.

Did you forget that both GPUs were released during the cryptocrapfest? Nvidia's MSRPs were lower because they were set as crypto took off, and AMD's were higher because their cards were released and priced later, during the worst of crypto. That is why these MSRPs are useless: neither reflects what buyers actually paid.

All the rest of the market share and enterprise blah blah is irrelevant and deviating from the point.

I did not forget anything. I bought several 3070s at MSRP on release for people: I ordered 3 on release day and got them 1 day later, for 498-510 dollars each. I also bought about 5 3080s on release for clients, plus a few 3090s. Not a problem.

The 6700XT released much later and was caught in the crypto boom, just like every other GPU. This happened after the RTX 3000 release, and Nvidia took a huge market share by being first to market and by using Samsung instead of TSMC for massive output.

The 6700XT launched about 6 months after the 3070 and was priced nearly the same, just 20 dollars lower, yet it still lost by 12-15% and had lackluster features and RT performance.

The 3070 was never priced on par with the 6800XT, unless you compared the cheapest 6800XT with the most expensive 3070, which is pointless.

That was when AMD tried to sell off their huge post-mining GPU stock. That's why the 6700, 6800 and 6900 series were massively discounted, and why many 7000 series GPUs were heavily delayed (7700XT and 7800XT especially). AMD wanted to sell old tech rather than push new, because of HUGE INVENTORY.

No, Nvidia's prices were low because they used Samsung 8nm, which is much cheaper than TSMC. It was enough to beat AMD anyway.

I have bought hundreds of GPUs over the last two generations; I think I know what the prices have been.

If what you are saying were true (for the entire world), then AMD would have tons of GPU market share. Yet they don't.

If you are in doubt, go check Steam HW Survey or these links:

Though it's worth noting overall more than 2.3 billion graphics cards have been sold since 2000, worth a staggering $482 billion.

www.pcgamer.com

Jon Peddie Research has published the market shares of graphics chips for desktop graphics cards for the fourth quarter of 2023; along with these come the market shares, supplied only late (and therefore unfortunately overlooked here), for

www.3dcenter.org

AMD was in full panic mode trying to sell 6700, 6800 and 6900 inventory, with massive discounts. Yet the cards still did not really sell well.

Second-hand prices on AMD hardware are also WAY LOWER, so the money you "save" is not really saved in the end. Nvidia cards hold their value much better. Just like iPhones vs Android.

Why? Demand is MUCH higher, and Nvidia/Apple don't cut prices all the time like AMD does. AMD is all about pricing, and every single piece of hardware from them gets lowered in price multiple times over a generation or two, making old AMD hardware close to unsellable when you are done with it. This is a fact and has been true for all CPU and GPU generations from AMD.

AMD = launch MSRP is set high, allowing for price reductions, which are bound to happen.