Anti-aliasing, oversimplified, is upscaling that is then downsampled to smooth things out. Those jaggies are referred to as "aliasing".

Yes, I know, and I'm specifically talking about TAA, Temporal Anti-Aliasing. It works the same way DLSS and FSR do, by combining information from past frames to smooth things over.

DLSS and FSR go a step further by reconstructing the image at a higher resolution.
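To make the "combining information from past frames" bit concrete, here's a minimal sketch of the accumulation step, assuming a plain exponential blend. The function name `taa_accumulate` and the `alpha` weight are made up for illustration; real TAA (and DLSS/FSR) also reprojects the history with motion vectors and rejects stale samples, which is left out here.

```python
def taa_accumulate(history, current, alpha=0.1):
    """Blend the current frame into the running history (exponential moving average).

    history, current: lists of pixel values for the same pixels.
    alpha: weight of the new frame (hypothetical default, engines tune this).
    """
    return [(1.0 - alpha) * h + alpha * c for h, c in zip(history, current)]


# One pixel sitting on an edge: with sub-pixel jitter it flips between
# covered (1.0) and uncovered (0.0) from frame to frame.
frames = [[1.0], [0.0], [1.0], [0.0], [1.0], [0.0], [1.0], [0.0]]

history = frames[0]
for frame in frames[1:]:
    history = taa_accumulate(history, frame, alpha=0.1)

# The accumulated value ends up as a blend rather than a hard 0 or 1,
# which is the smoothing you see on screen.
print(history[0])
```

The upscalers feed the same kind of history into a smarter reconstruction step and output at a higher resolution than the game rendered at.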

Make no mistake, TAA has been used for years to hide how low-res games are rendered. It's forced on by default in a fair few games (worth saying, some game engines call their tech something different, like TSR (Unreal Engine) or TSAA (CryEngine)).

At the end of the day, TAA takes practically the same inputs as DLSS and FSR; those two just do a much better job of not only anti-aliasing but also up-sampling the image.

If you want a laugh, you can edit an .ini file for Cyberpunk that disables the built-in TAA, then turn all upscaling off in the settings, and man, it is jaggy heaven.

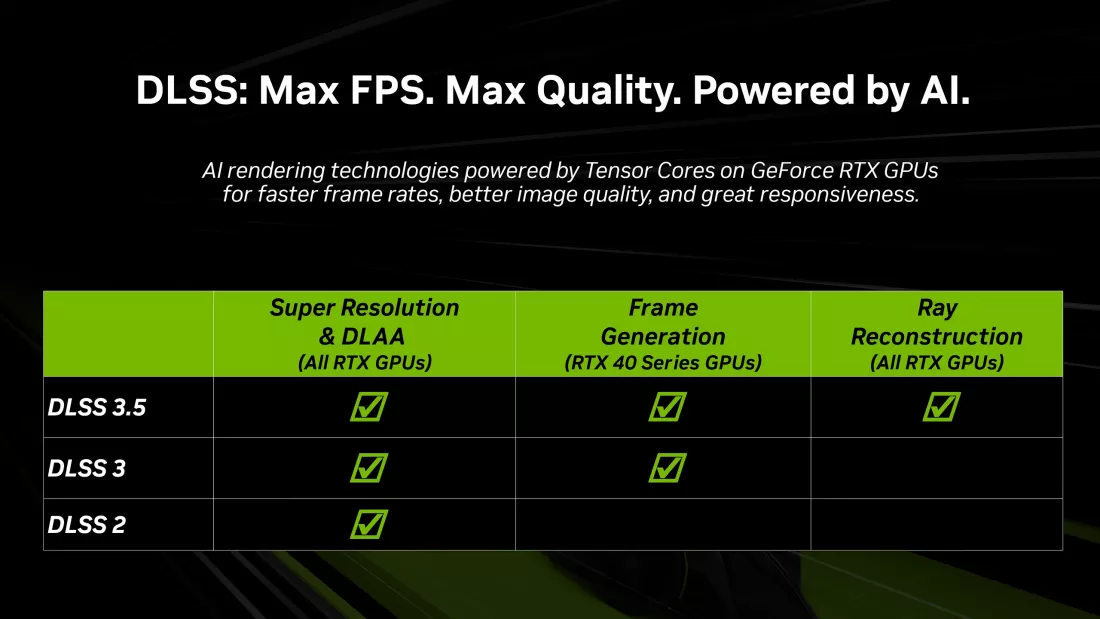

Nvidia isn't even fully supporting DLSS across its generations of GPUs. I say this constantly: you're sacrificing quality for compatibility. If I have a 3090, I don't want to buy a 40 series card just to get access to all of DLSS. DLSS made around the 50 series will likely not fully support the 40 series.

So, maybe 85% of gamers have Nvidia cards, but 85% of those gamers don't have full-on DLSS support.

I completely understand the DLSS naming scheme is rubbish, but the 20 series can use DLSS to upscale like it always has. As new cards have come out, they have more and better tensor cores, so Nvidia are pushing new technology to take advantage of the hardware. DLSS 3.5, which is Ray Reconstruction, actually works fine on the 20 series.

You're basically arguing that they shouldn't improve the hardware and add features, to keep people who bought the previous gen happy. In my mind, that's the opposite of progress.

Yeah, if you put them in performance mode. Realistically, quality or higher is identical at 4K for me. To be perfectly honest, my TV does a better job of upscaling than either FSR or DLSS.

So sorry, you're saying, if you lower the resolution of the game to 1440p, leaving everything default in the engine, your TV is doing a better job of upscaling and you're getting the same performance as using DLSS? Because you'd be the first to point this out I think?

Nvidia is also starting to piss people off, and I'm one of them. I've been buying the green team's GPUs for 19 years; I bought my first AMD card this year.

I really wanted to build a computer for someone with a 7800XT this year, but everyone who's come to me to build a computer has requested an Nvidia card, annoyingly.

To be perfectly fair, aside from frame generation, FSR 3.0 doesn't really offer much over 2.0. It's been my experience that frame gen adds 1.5-2 frames of input lag, and that's noticeable at lower FPS.
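For a rough sense of why that stings more at low framerates, here's the arithmetic as a quick sketch. It assumes the 1.5-2 frames are counted in rendered (pre-frame-gen) frames, which is my reading rather than a measured figure; `added_latency_ms` is just an illustrative helper.

```python
def added_latency_ms(base_fps, frames_of_lag):
    """Convert a lag measured in frames into milliseconds at a given framerate."""
    frame_time_ms = 1000.0 / base_fps
    return frames_of_lag * frame_time_ms


# Same 1.5-2 frames of lag, very different cost depending on base framerate.
for fps in (40, 60, 120):
    low = added_latency_ms(fps, 1.5)
    high = added_latency_ms(fps, 2.0)
    print(f"{fps} fps: +{low:.1f} to +{high:.1f} ms")
```

The lag in frames is the same in every row, but each frame lasts three times longer at 40 fps than at 120 fps, so the added milliseconds scale up accordingly.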

Yeah, I'm with you there. Even on a 4090, I don't like frame-gen in first-person games at all, even at high framerates. In third-person games like The Witcher 3, though, as long as the framerate is nice and high, I can get some benefit out of it. It's a rarely used feature, to be honest.

On one final note, Starfield is a garbage game. I dumped around 70 hours into it thinking "it'll get better" and it just didn't. Bethesda's last few releases have honestly sucked. Fallout 4 was atrocious unless you had a brain tumor. Skyrim had the "level, loot and leave" combo down, but the game didn't offer anything groundbreaking and the story was lackluster at best.

Then we have Starfield which, frankly, feels incomplete.

You aren't the first person to say this to me. I had quite a few friends who were really looking forward to Starfield, pre-ordered it and all, convinced it would be amazing. After a month of playing, they've all said the same thing: "I really wanted to like it, I really wanted to love it, but it's just crap".

When I was watching people play it, I was surprised at how often you're quick travelling or in a load screen. Why they haven't figured out how to go into buildings or large rooms without a load screen yet is baffling.

I will say that a BIG REASON Bethesda decided to take AMD's money was that Microsoft was pushing them to release Starfield. On top of that, both Xbox and PlayStation use AMD GPUs, so it didn't really make sense. I wouldn't be surprised if they got an order to cut DLSS after it was already implemented, although I personally doubt that. I will say I bet they did put money on FSR 2.0, because it is compatible with consoles and would be required for smooth play on Xbox. So more PC users may use DLSS, but more gamers use AMD GPUs.

Oh, I don't doubt any of that. My issue, really, is that DLSS uses the exact same inputs from the game engine as FSR. If you've developed the game for FSR, DLSS and XeSS are a doddle to implement as an extra option; there's almost no reason not to include the other options if you've developed the game for one of them.
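Roughly what I mean by "the same inputs", as a hedged sketch: the temporal upscalers all want the rendered colour, depth, motion vectors and the camera jitter for the frame. The `UpscalerInputs` class, its field names and the `make_backend` helper below are hypothetical and are not the real FSR, DLSS or XeSS SDK calls; the real SDKs also differ in optional extras and setup.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class UpscalerInputs:
    """Per-frame data a temporal upscaler needs (illustrative field names)."""
    color: object                  # low-res rendered frame
    depth: object                  # depth buffer
    motion_vectors: object         # per-pixel motion since the last frame
    jitter: Tuple[float, float]    # sub-pixel camera offset for this frame
    render_size: Tuple[int, int]
    output_size: Tuple[int, int]


def make_backend(name):
    """Stand-in for a vendor SDK call; it only reports what it would do."""
    def dispatch(inputs):
        return f"{name}: {inputs.render_size} -> {inputs.output_size}"
    return dispatch


# Once the engine produces UpscalerInputs for one upscaler,
# the others can be fed the very same bundle.
BACKENDS = {name: make_backend(name) for name in ("FSR 2", "DLSS", "XeSS")}

inputs = UpscalerInputs(
    color=None, depth=None, motion_vectors=None,  # placeholders for GPU textures
    jitter=(0.25, -0.25),
    render_size=(2560, 1440),
    output_size=(3840, 2160),
)

results = [backend(inputs) for backend in BACKENDS.values()]
for line in results:
    print(line)
```

The hard part is getting the engine to output clean motion vectors and jitter in the first place; after that, each extra upscaler is mostly another entry in the table.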