In context: Remedy Entertainment is known for pushing hardware to its limits, as most recently demonstrated with their intensive use of ray tracing. However, even in the absence of this resource-intensive feature, the system requirements for Alan Wake II are expected to rank it among the most demanding titles of 2023.

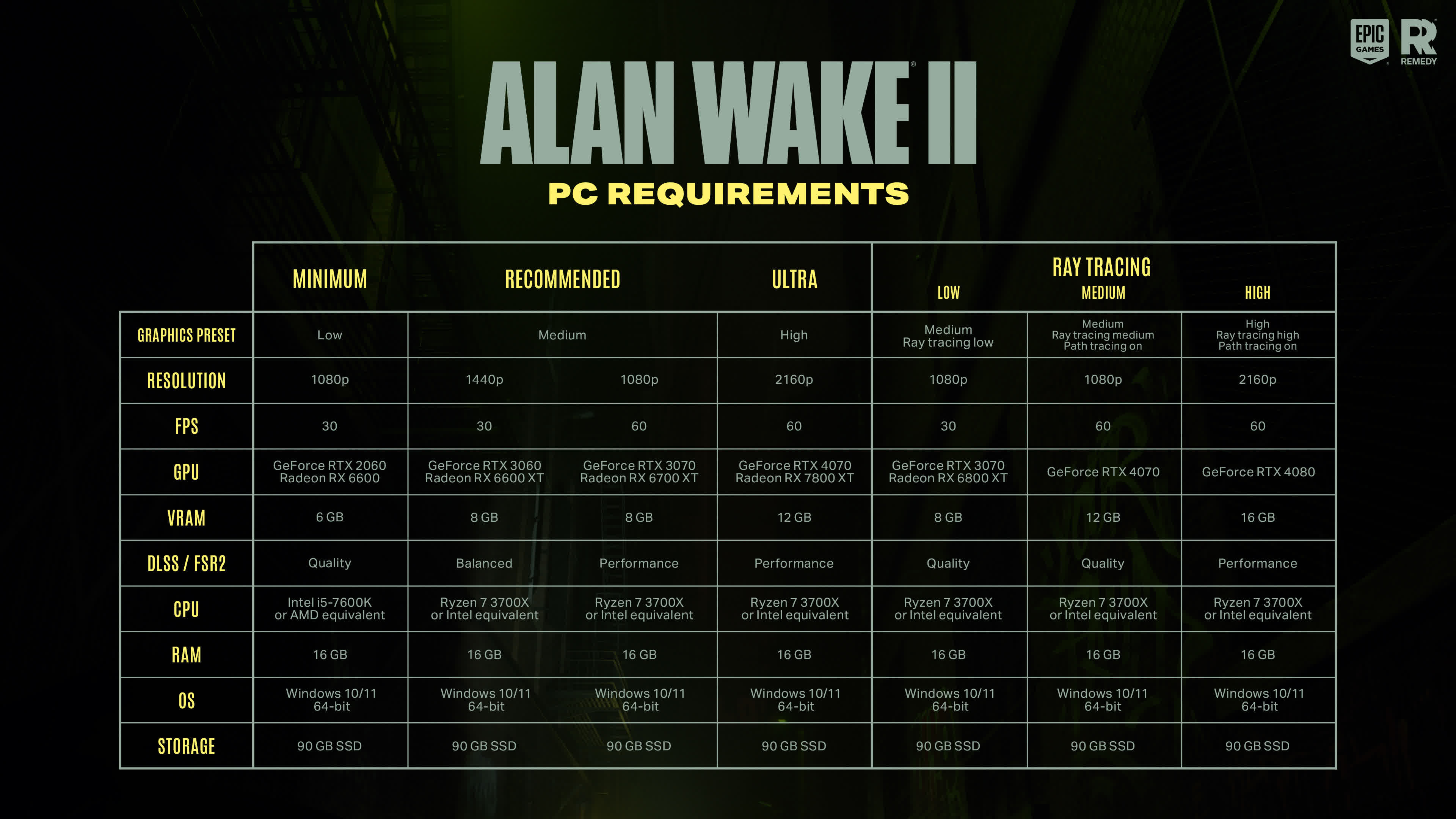

Remedy has unveiled an exhaustive system requirements sheet for "Alan Wake II," which will surely intimidate many. The sheet completely omits GPUs predating Turing and RDNA 2, signaling the end of the Pascal and RDNA 1 era. Notably, upscaling technologies like DLSS and FSR are listed as requirements.

Update (10/23): Over the weekend, Redditors caught a Twitter comment from a Remedy developer who said the minimum graphics card requirements are explained by the need for mesh shader support, which is absent from previous-generation GPUs (GeForce GTX 1000 and Radeon RX 5000 series). The somewhat controversial tweet was later deleted, but it read "I'm not sure it will run without it [mesh shader]. In theory the vertex shader path is implemented but had lots of perfs issues and bugs, we just dropped it. Meaning it might be possible to bring it back with a mod but don't expect miracles."

While the game's specifications seem high, they align with recent high-end releases such as A Plague Tale: Requiem, Lords of the Fallen, Immortals of Aveum, and Forspoken. To achieve gameplay at 1080p and 60 frames per second, somewhat beefy graphics cards like the GeForce RTX 3070 or Radeon RX 6700 XT are necessary. However, a notable point of concern is Remedy's apparent assumption that players won't be running Alan Wake II at native resolution.

Remnant II previously raised eyebrows when its developers disclosed the game was optimized for DLSS or FSR. However, Alan Wake II goes a step further, dedicating an entire system requirements section to upscaling. Every one of the six performance tiers includes upscaling modes, even for a 1080p output resolution.


The 1080p 60fps spec indicates medium graphics and upscaling at performance mode, meaning half the output resolution on each axis, or a quarter of the pixels. The setting is typically intended for 4K displays, where it upscales from a 1080p internal resolution, but engaging performance mode with a 1080p output means rendering Alan Wake II at 960 x 540. That's without ray tracing.
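The arithmetic behind the 960 x 540 figure can be sketched in a few lines of Python. The 0.5 per-axis scale for performance mode follows from the numbers above; the function names and the other mode factors are illustrative assumptions based on commonly cited DLSS 2 and FSR 2 ratios, not values taken from Remedy's spec sheet.

```python
# Per-axis render scale for each upscaler mode.
# "performance" (0.5) matches the figures in the article; "quality" and
# "balanced" are commonly cited DLSS 2 / FSR 2 values and are assumptions here.
SCALE_BY_MODE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
}


def internal_resolution(out_w, out_h, mode):
    """Return the (width, height) the game renders at before upscaling."""
    scale = SCALE_BY_MODE[mode]
    return round(out_w * scale), round(out_h * scale)


def pixel_fraction(mode):
    """Fraction of output pixels actually rendered (scale applies to both axes)."""
    return SCALE_BY_MODE[mode] ** 2


if __name__ == "__main__":
    for label, (w, h) in {"1080p": (1920, 1080), "4K": (3840, 2160)}.items():
        print(label, "performance mode renders at", internal_resolution(w, h, "performance"))
```

Because the scale applies to both width and height, performance mode renders only a quarter of the output pixels, which is why a 1080p target ends up with a 540p internal image.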

The game's recommendation for the lowest of three ray tracing profiles is similar to the 1080p 60fps spec, but with the frame rate expectation lowered to 30. Alan Wake II's cutting-edge path-tracing mode emerges in the two highest performance profiles, where AMD GPUs disappear in favor of high-end RTX 4000 series cards. By the way, Nvidia is bundling the game with high-end RTX 4000 GPUs until November.

On the bright side, aside from GPU performance, all other system specs are relatively standard. The 90GB storage space requirement isn't modest but aligns with contemporary standards for AAA games. All performance tiers require 16GB of RAM, unlike some recent releases that recommended 32GB for 4K gameplay.

Alan Wake II is set to debut exclusively on the Epic Games Store on October 27. Remedy has not indicated a release date for Steam yet.

https://www.techspot.com/news/100574-alan-wake-ii-assumes-everyone-use-upscaling-even.html