The AMD Radeon R9 Fury is expected to launch shortly, which might explain why we're suddenly seeing a whole bunch of leaks relating to the graphics card. The latest collection of information on the Fury, leaked by VideoCardz, includes both detailed specifications and images of a Sapphire Tri-X model.

The R9 Fury's Fiji-based GPU core will be cut down as expected, with 56 active compute units (CUs) for 3,584 stream processors (SPs), compared to 64 CUs and 4,096 SPs on the fully enabled Fury X. Core clock speeds appear to be slightly lower as well: the Fury reportedly has a reference maximum clock speed of 1,000 MHz, down from 1,050 MHz on the Fury X.
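The shader counts follow directly from Fiji's layout, which groups 64 stream processors into each compute unit. A quick sanity check of the leaked figures might look like this (the function name is purely illustrative):

```python
# Fiji groups 64 stream processors (SPs) into each compute unit (CU).
SP_PER_CU = 64

def stream_processors(compute_units: int) -> int:
    """Total stream processors for a given number of active compute units."""
    return compute_units * SP_PER_CU

fury = stream_processors(56)    # cut-down R9 Fury
fury_x = stream_processors(64)  # fully enabled R9 Fury X

print(fury, fury_x)                              # 3584 4096
print(f"{fury / fury_x:.1%} of the full chip")   # 87.5% of the full chip
```

In other words, the Fury keeps seven-eighths of the Fury X's shader hardware.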

Even so, there is a good chance that many Fury cards will ship with factory-overclocked cores. One Tri-X model reportedly runs its core at 1,040 MHz, while earlier rumors suggested the Fury might be clocked at 1,050 MHz to match the Fury X.

Like the Fury X, the Fury will feature 4 GB of HBM clocked at 500 MHz, providing 512 GB/s of memory bandwidth. Overclocking HBM is currently impossible, so don't expect any overclocked Fury cards to feature higher memory clock speeds.
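The 512 GB/s figure can be reproduced from the memory clock, assuming details not stated in the leak itself: four HBM stacks with a 1,024-bit interface each (4,096 bits in total), transferring data on both clock edges. A minimal sketch of that arithmetic:

```python
# Assumptions (not from the leak): four HBM stacks x 1,024-bit interface each,
# double data rate (two transfers per clock cycle).
BUS_WIDTH_BITS = 4 * 1024
TRANSFERS_PER_CLOCK = 2

def hbm_bandwidth_gb_s(clock_mhz: float) -> float:
    """Peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    transfers_per_second = clock_mhz * 1e6 * TRANSFERS_PER_CLOCK
    bytes_per_transfer = BUS_WIDTH_BITS / 8
    return transfers_per_second * bytes_per_transfer / 1e9

print(hbm_bandwidth_gb_s(500))  # 512.0
```

Because bandwidth scales linearly with the memory clock, the locked 500 MHz HBM clock means every Fury card should top out at the same 512 GB/s.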

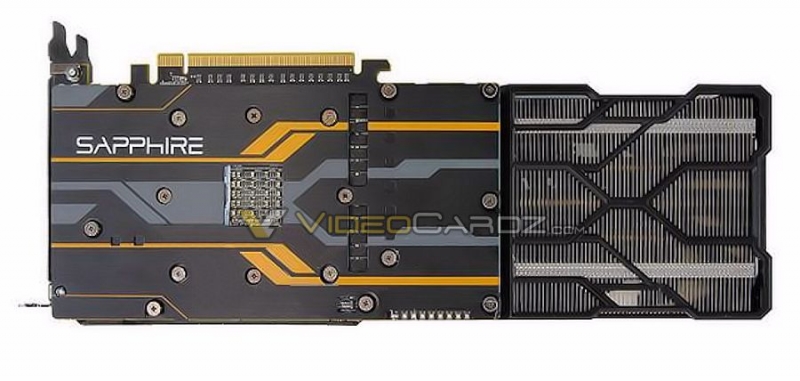

As for the card itself, images of the Sapphire Tri-X variant show a short PCB with a three-fan air cooler that overhangs the board. The cooler isn't AMD's reference design, but the short PCB is a product of the HBM stacks sitting on the GPU package, alongside the die on a silicon interposer, rather than on the board itself.

AMD previously stated the $549 Radeon R9 Fury would launch mid-July, so it shouldn't be too long before these graphics cards start to hit the market (and our test bench).

https://www.techspot.com/news/61268-amd-radeon-r9-fury-specs-allegedly-confirmed-ahead.html