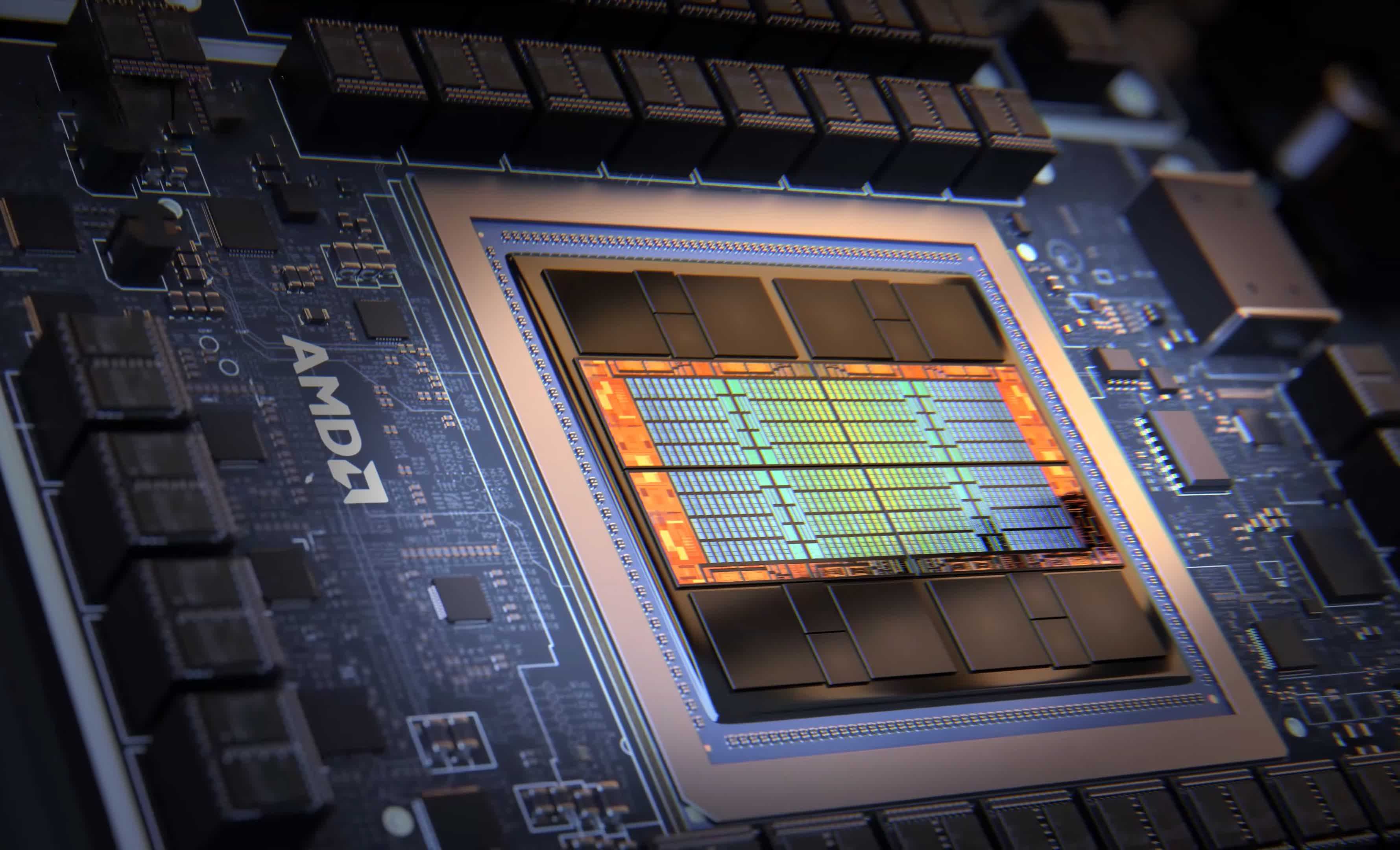

AMD held an analyst event last week, their second of the year. During their June event, they unveiled the impressive Instinct MI300, a GPU specifically designed for AI. The event featured numerous high-profile partners on stage and positive commentary on various initiatives.

And, of course, their stock fell the next day. Apparently investors were disappointed that AMD did not announce any paying customers for the product, nor did they release many performance metrics for the MI300. However, at last week's event, they revealed that the MI300 matches Nvidia's H100 in performance. Additionally, they announced two major paying customers: Meta and Microsoft.

Surprisingly, the stock declined following the news (it's since bounced back handsomely and then some).

Editor's Note:

Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries.

AMD's had a great run over the past several years. They have capitalized on Intel's stumbles and picked up a healthy amount of share in the profitable data center market. Moreover, CEO Lisa Su does not get enough credit for the turnaround she put in place, taking the company from a bumbler at execution, always missing deadlines, to a solid operations machine delivering compelling products at a regular clip.

Seen in that light, this latest news continues that trend. AMD announced a product, got it into customers' hands, and is now poised to generate real revenue from it. Textbook execution. The MI300 demonstrates that they have real capabilities in the AI market, and not just in the form of a product, but an entire ecosystem of hardware partners like Dell and Lenovo, and a steadily growing roster of software partners.

But there are still plenty of obstacles ahead. Performance is one thing; actual customer usage is another. For companies buying data center silicon, especially the hyperscalers, many factors beyond raw performance matter. Moreover, AMD's software still has a long way to go.

The dominant force in AI systems today is Nvidia's CUDA software, which has become the de facto platform for most AI applications. In response, AMD developed ROCm, now a complete product, but it hasn't yet emerged as a true contender to CUDA's dominance. ROCm's support is limited to a select few of AMD's products, and more crucially, it remains largely unfamiliar to the majority of AI developers. The issue isn't inherently with ROCm; rather, it's that CUDA has over a decade's head start, and Nvidia is not standing still.

Which brings us to the real challenge for AMD. Despite their consistent efforts over the years, the market has moved past them. Nvidia has overtaken everyone to become the leader in data center processors. In the new data center, AMD is in second place in CPUs and second place in GPUs. This has to be frustrating for a company that has worked so hard.

However, there's still potential for AMD to succeed. Even if Nvidia manages to dominate the data center market like Intel did for a decade with a 90% share (which seems unlikely), AMD represents an alternative GPU source that every customer says they want as they write ever bigger checks to Nvidia.

And if Nvidia's share eventually levels off somewhere below 70%, then that means a three-way contest for everything. In the past, hyperscalers saw the advantage in standardizing purchases with a few vendors. Now, in the era of heterogeneous computing, multiple vendors are necessary, and AMD should maintain its relevance.

As much as AMD seems destined to remain the perpetual second source in the data center, that may not be such a bad place to be. They don't need to have better CPUs than Intel or better GPUs than Nvidia. So long as their GPUs are better than Intel's and their CPUs are better than Nvidia's, AMD will have a healthy roster of paying customers. They do not need to outrun all the bears; they just have to outrun the other racers.

https://www.techspot.com/news/101166-long-amd-can-offer-better-gpus-than-intel.html