We've benchmarked the Radeon RX 7900 GRE and GeForce RTX 4070 Super across 58 game configurations, with an in-depth look at rasterization, ray tracing, and upscaling performance.

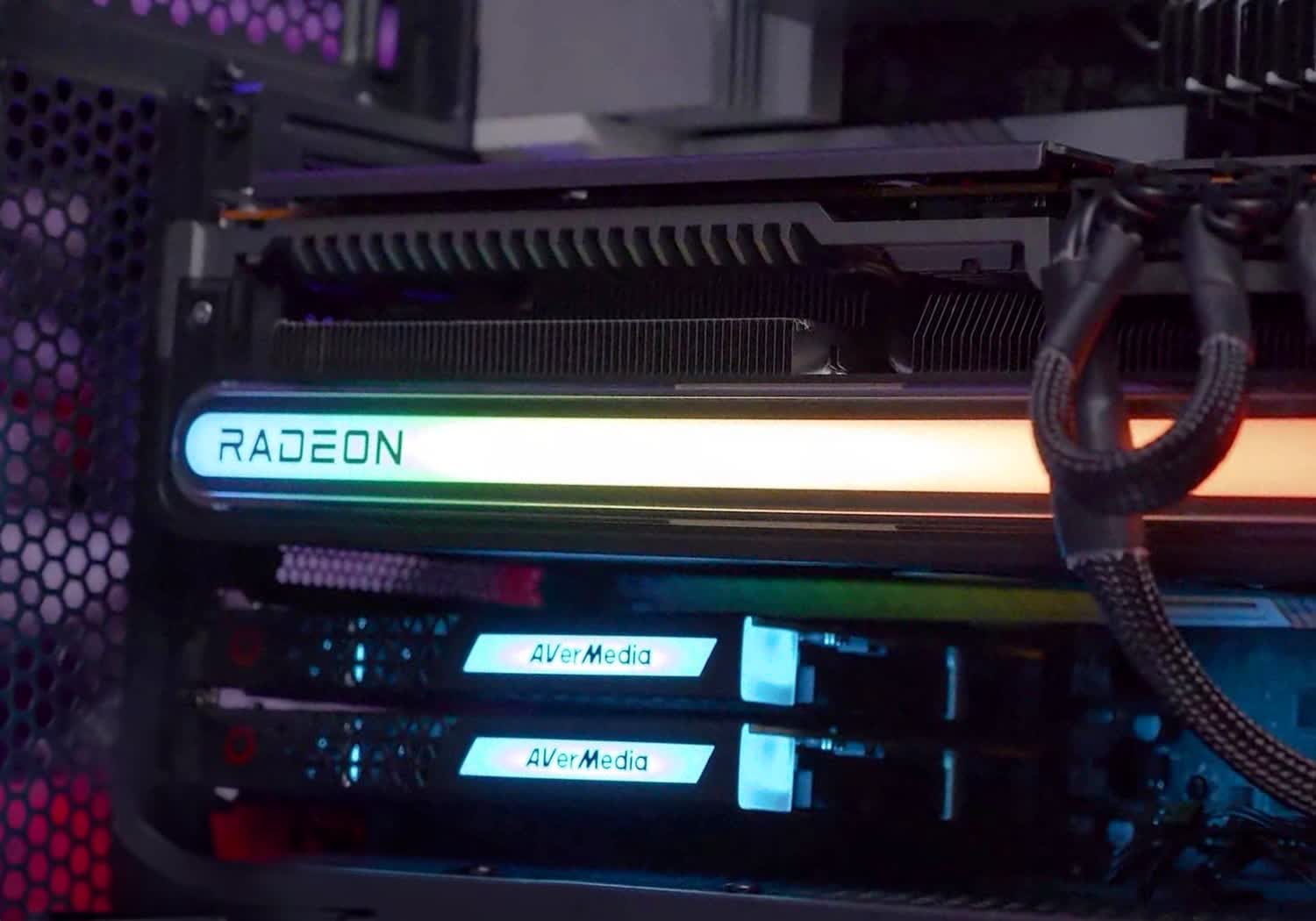

GeForce RTX 4070 Super vs. Radeon RX 7900 GRE: Rasterization, Ray Tracing, and Upscaling Performance