In context: Nvidia CEO Jensen Huang has repeatedly proclaimed the demise of Moore's Law in recent years. Although his counterparts at AMD and Intel disagree, a recent presentation from Google appears to align with Huang's perspective. It might also help explain TSMC's wafer pricing over the past several years.

The tech industry frequently discusses how much time, if any, Moore's Law has left. Google's head of IC packaging, Milind Shah, recently supported a prior assertion that the trend, which has served as a crucial guidepost for the tech industry, ended in 2014.

In 1965, the late Intel co-founder Gordon Moore predicted that the number of transistors on an integrated circuit would double about every year, a pace he revised in 1975 to roughly every two years. The observation, named after him, has mostly held fast in the nearly six decades since but has recently faced turbulence.
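To make the doubling cadence concrete, here is a minimal Python sketch of the projection it implies. The function name, the starting count, and the default two-year period are illustrative assumptions, not figures from Moore or from the presentation.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count forward under a fixed doubling cadence.

    All inputs are hypothetical; the two-year default mirrors the commonly
    cited pace rather than any measured data.
    """
    # Each elapsed doubling period multiplies the count by two.
    return initial_count * 2 ** (years / doubling_period_years)


# Hypothetical starting point: 1,000 transistors, projected 10 years out.
print(projected_transistors(1_000, 10))
```

Under these assumptions, a decade covers five doublings, so the count grows 32-fold.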

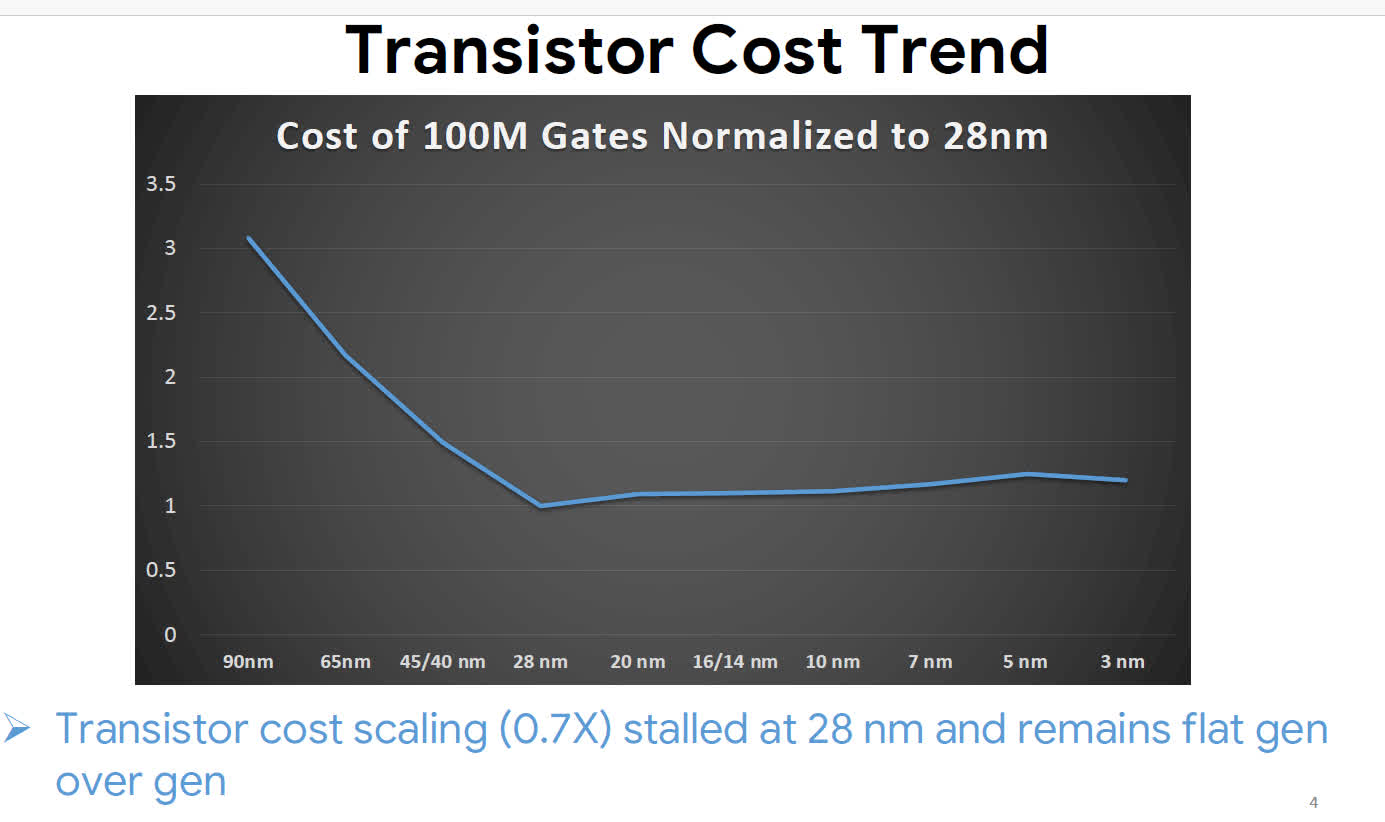

In 2014, MonolithIC 3D CEO Zvi Or-Bach noted that the cost per 100 million gates, which had previously been falling steadily, bottomed out at the then-recent 28nm node. Semiconductor Digest reports that Shah, speaking at the 2023 IEDM conference, supported Or-Bach's claim with a chart showing that the price per 100 million gates has remained flat ever since, indicating that transistors haven't gotten any cheaper in the last decade.

Although chipmakers continue to shrink transistors and pack more of them onto increasingly powerful chips, prices and power consumption have increased. Nvidia CEO Jensen Huang has tried to explain this trend by proclaiming the death of Moore's Law multiple times since 2017, stating that more powerful hardware will inevitably cost more and require more energy.

Some have recently accused the Nvidia CEO of making excuses for the rising prices of Nvidia graphics cards. Meanwhile, the heads of AMD and Intel admit that Moore's Law has at least slowed down but claim that they can still achieve meaningful performance and efficiency gains from innovative techniques like 3D packaging.

However, the analysis from Or-Bach and later Shah might align with TSMC's wafer price hikes, which sharply accelerated after 28nm in 2014. According to DigiTimes, the Taiwanese giant's cost-per-wafer doubled over the subsequent two years with the introduction of 10nm in 2016. The outlet estimated that the latest 3nm wafers could cost $20,000.
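For a sense of scale, a doubling over two years can be turned into an annualized growth rate. The sketch below is a rough illustration only: the helper name is hypothetical, and it uses just the reported ratio (2x over two years), not actual wafer prices.

```python
def annualized_growth(price_ratio: float, years: float) -> float:
    """Return the compound annual growth rate implied by a total price ratio."""
    # Solve (1 + r) ** years == price_ratio for r.
    return price_ratio ** (1 / years) - 1


# A 2x increase over two years, as described for the 28nm-to-10nm period.
rate = annualized_growth(2.0, 2.0)
print(f"{rate:.1%}")
```

A price that doubles in two years is compounding at a little over 41% per year.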

As TSMC and its rivals aim toward 2nm and 1nm in the coming years, further analysis indicates that most of the semiconductor industry's recent growth comes from rising wafer prices. Despite wafer sales falling in the last couple of years, the average price of TSMC's wafers kept increasing.

https://www.techspot.com/news/101747-google-manager-claims-moore-law-has-dead-10.html