Something to look forward to: This year Nvidia has continued to dominate the high-end GPU world, while AMD has claimed a few victories at mainstream price points with Navi. That healthy competition has also forced Nvidia to lower its prices. Next year, Intel will join the fray with at least four powerful discrete GPUs featuring hardware-accelerated ray tracing.

As happens every so often, Intel accidentally published a developer version of its 26.20.16.9999 graphics driver containing codenames for products in ten different categories. Intel has since taken it down, but AnandTech forum user ‘mikk’ republished them all.

The highlight, of course, is the five references to Intel’s new Xe discrete GPUs. Three of them are thick with detail: “iDG2HP512,” “iDG2HP256,” and “iDG2HP128.” Every codename in the listing starts with an “i,” “DG” is thought to mean discrete graphics, and the “2” is the variant. “HP” could mean high-power, implying these are fully fledged desktop GPUs, and the following three-digit number could be the number of execution units (EUs), Intel’s loose counterpart to Nvidia’s CUDA cores.
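
To make that reading concrete, here is a minimal Python sketch that splits the leaked names into the fields described above. The layout is only the speculative interpretation given here, not anything Intel has documented, and the `decode` helper and `DgName` type are hypothetical names made up for illustration.

```python
import re
from typing import NamedTuple, Optional

# Speculative layout, following the article's reading of the leaked names:
# "i" prefix, "DG" (discrete graphics), a variant digit, a two-letter power
# class such as "HP", then an optional number that may be the EU count.
DG_NAME = re.compile(
    r"^i(?P<family>DG)(?P<variant>\d)(?P<power>[A-Z]{2})(?P<eus>\d+)?$"
)


class DgName(NamedTuple):
    family: str
    variant: int
    power: str
    eus: Optional[int]  # None when no trailing number is present


def decode(name: str) -> DgName:
    """Split a leaked codename into its guessed fields (hypothetical helper)."""
    m = DG_NAME.match(name)
    if m is None:
        raise ValueError(f"unrecognized codename: {name!r}")
    eus = m.group("eus")
    return DgName(
        m.group("family"),
        int(m.group("variant")),
        m.group("power"),
        int(eus) if eus else None,
    )


for leaked in ("iDG2HP512", "iDG2HP256", "iDG2HP128"):
    print(leaked, decode(leaked))
```

Names that don't fit this pattern raise a `ValueError` rather than being forced into fields they may not have.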

While 512 execution units sounds low next to Nvidia’s core counts, Intel’s graphics architecture is quite different. Intel’s Gen11, for example, provides 16 FLOPs per execution unit per clock (each EU has eight FP32 lanes, and a fused multiply-add counts as two operations), so a 512 EU graphics core operating at 1800 MHz would reach 14.7 TFLOPS, a little more than an RTX 2080 Ti. A 256 EU GPU would have 7.4 TFLOPS, about the same as an RTX 2070, and a 128 EU GPU would have 3.7 TFLOPS. Of course, we have no idea what clock speeds the GPUs will actually run at.
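
The arithmetic above is simply EUs × FLOPs per EU per clock × clock speed. As a rough sketch in Python, assuming Gen11’s 16 FLOPs per EU per clock carries over to Xe and assuming the hypothetical 1800 MHz clock, the figures can be reproduced like this:

```python
# Theoretical peak FP32 throughput: EUs x FLOPs per EU per clock x clock (Hz).
FLOPS_PER_EU_PER_CLOCK = 16  # Gen11 figure: 8 FP32 lanes x 2 ops per fused multiply-add
ASSUMED_CLOCK_MHZ = 1800     # hypothetical clock; real Xe clock speeds are unknown


def peak_tflops(eus: int, clock_mhz: float = ASSUMED_CLOCK_MHZ,
                flops_per_eu: int = FLOPS_PER_EU_PER_CLOCK) -> float:
    """Theoretical peak in TFLOPS (10**12 floating-point operations per second)."""
    return eus * flops_per_eu * clock_mhz * 1e6 / 1e12


for eus in (512, 256, 128):
    print(f"{eus} EUs @ {ASSUMED_CLOCK_MHZ} MHz: {peak_tflops(eus):.1f} TFLOPS")
```

Swapping in a different `clock_mhz` shows how sensitive these numbers are: at a hypothetical 1500 MHz, the 512 EU part would land at about 12.3 TFLOPS. These are peak figures only; real-world performance also depends on drivers, memory bandwidth and the rest of the architecture.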

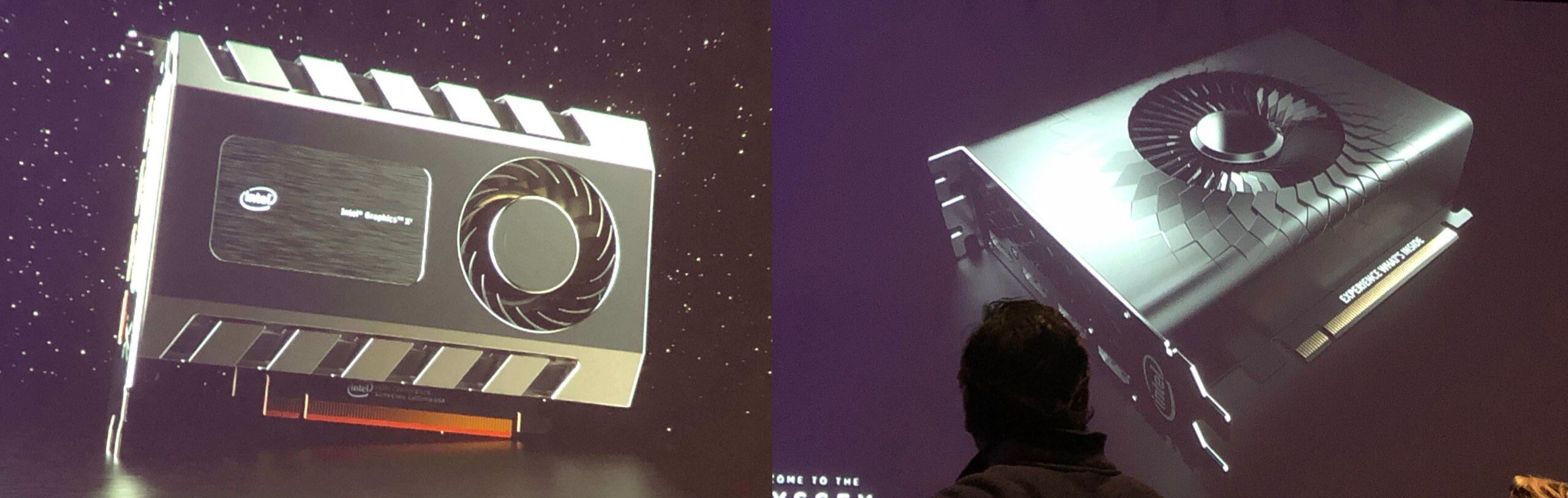

Intel's concept GPU shrouds.

The last two mentions of discrete GPUs are vaguer. “iDG1LPDEV” suggests that a low-power “1” variant is in the works; given its “dev” status, it might arrive later. It could be a separate GPU for laptops. “iATSHPDEV” references the Arctic Sound codename, which Intel is using for its first-generation discrete GPUs. Code relating to it describes it as using the Gen12 architecture in a high-power configuration. It might be initial testing of a second-generation component.

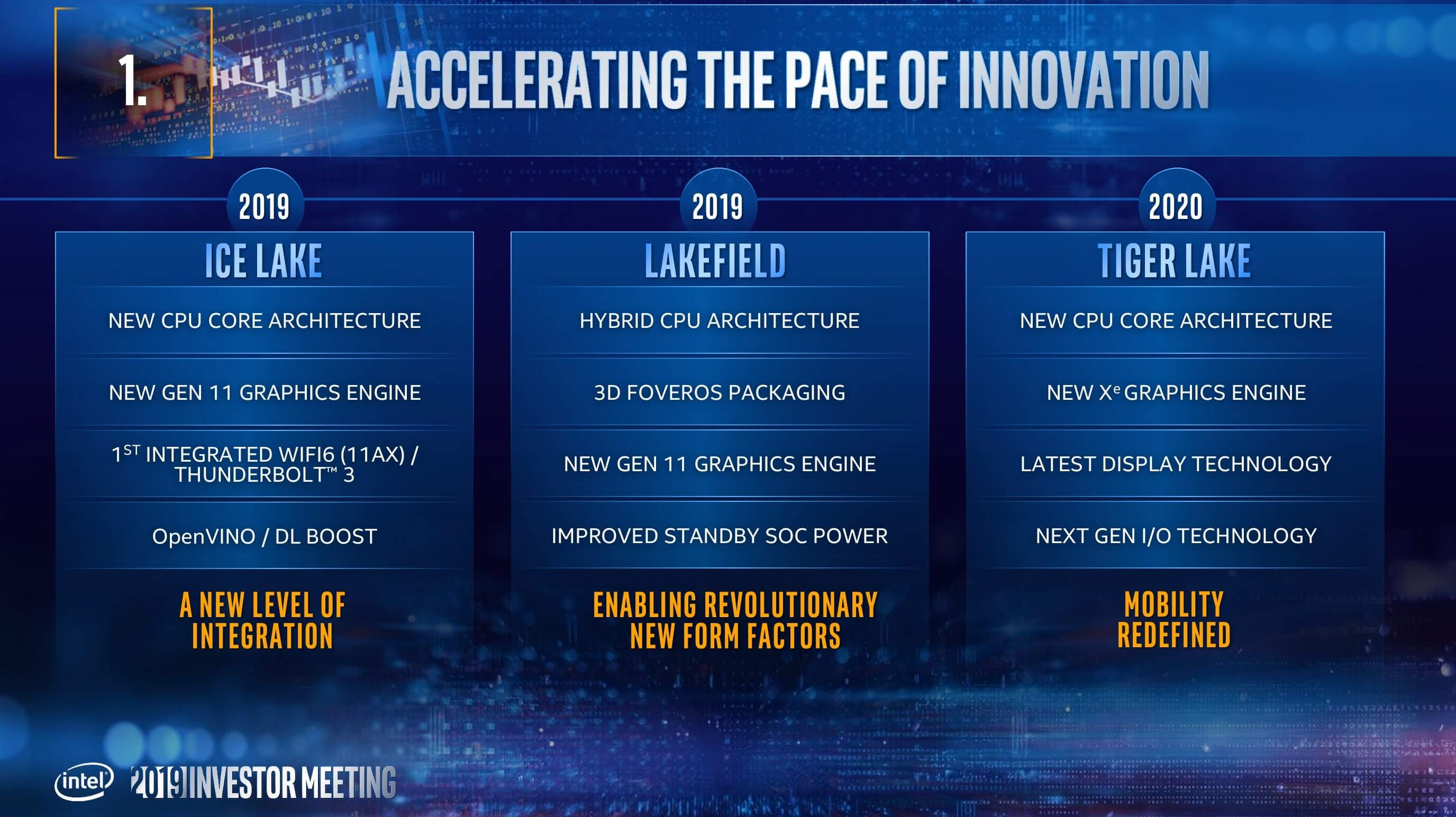

On the CPU side, the driver update lists five series. In order of expected release, “LKF-R” is Lakefield, an unusual low-power processor series with five cores (one high-performance Sunny Cove core and four low-power Tremont cores) on a 10nm compute die stacked on a 22nm base die. Four models appear in the listing. “EHL,” or Elkhart Lake, is a group of ultra-low-power SoCs built on 10nm and expected to be released next year. It also appears with four variants.

“TGL” is the juicy Tiger Lake. The successor to the imminent Ice Lake, Tiger Lake will be the mainstream 10nm architecture for late 2020. The driver lists eight models employing Gen12 Xe graphics, though in a low-power configuration.

“RKL” is Rocket Lake, the replacement for Tiger Lake, coming in 2021. Judging by the eight processors listed, it is still in the early stages of development. Breaking down one name, “iRKLLPGT1H32,” suggests that one Rocket Lake CPU will use the “GT1” graphics engine with 32 execution units, a solid showing. Others in the listing employ “GT0” and “GT0.5” GPUs, some of which appear to use 16 execution units.

Last but not least, we have “ADLS,” Alder Lake, which replaces Rocket Lake in 2021 but is still expected to rely on 10nm manufacturing. Only two “dev” models are listed, so we don’t really know anything at all, but it’s likely Intel doesn’t either: it’s still four generations away. There are also three listings yet to be deciphered, “iRYFGT2,” “iJSLSIM,” and “iGLVGT2,” each with only one model.

While interpreting the leaked information doubtless involves some error, the general gist for both GPUs and CPUs is promising. Future integrated GPUs look powerful, and Intel is well underway in developing several generations’ worth of processors. Its discrete GPUs, provided there are no ugly surprises on the clock speed or software front, appear capable of battling Nvidia across the entire lineup. With a little luck, Intel will provide the competition the market deserves.

https://www.techspot.com/news/81173-intel-accidentally-confirms-four-xe-discrete-gpus.html