In brief: Deepfakes are one of those technologies that, while impressive, are often used for nefarious purposes, and their popularity is growing. Companies have been working for years on ways to distinguish a real video from an altered one, but Intel's new solution looks to be one of the most effective and innovative yet.

Deepfakes, which usually involve superimposing someone's face and voice onto another person, started gaining attention a few years ago when adult websites began banning videos where the technique was used to add famous actresses' faces to porn stars' bodies.

Deepfake videos have become increasingly advanced since then. There are plenty of apps that let users insert friends' faces into movies, and we've seen the AI-powered process used to bring old photos back to life and to put young versions of actors on screen once again.

But there's also an unpleasant side to the technology. In addition to being used to create fake revenge porn, it has been used by scammers applying for remote jobs. There was also an app designed to digitally remove women's clothes. But the biggest concern is how deepfakes have helped spread misinformation: a fake video of Ukrainian President Volodymyr Zelensky surrendering circulated on social media earlier this year.

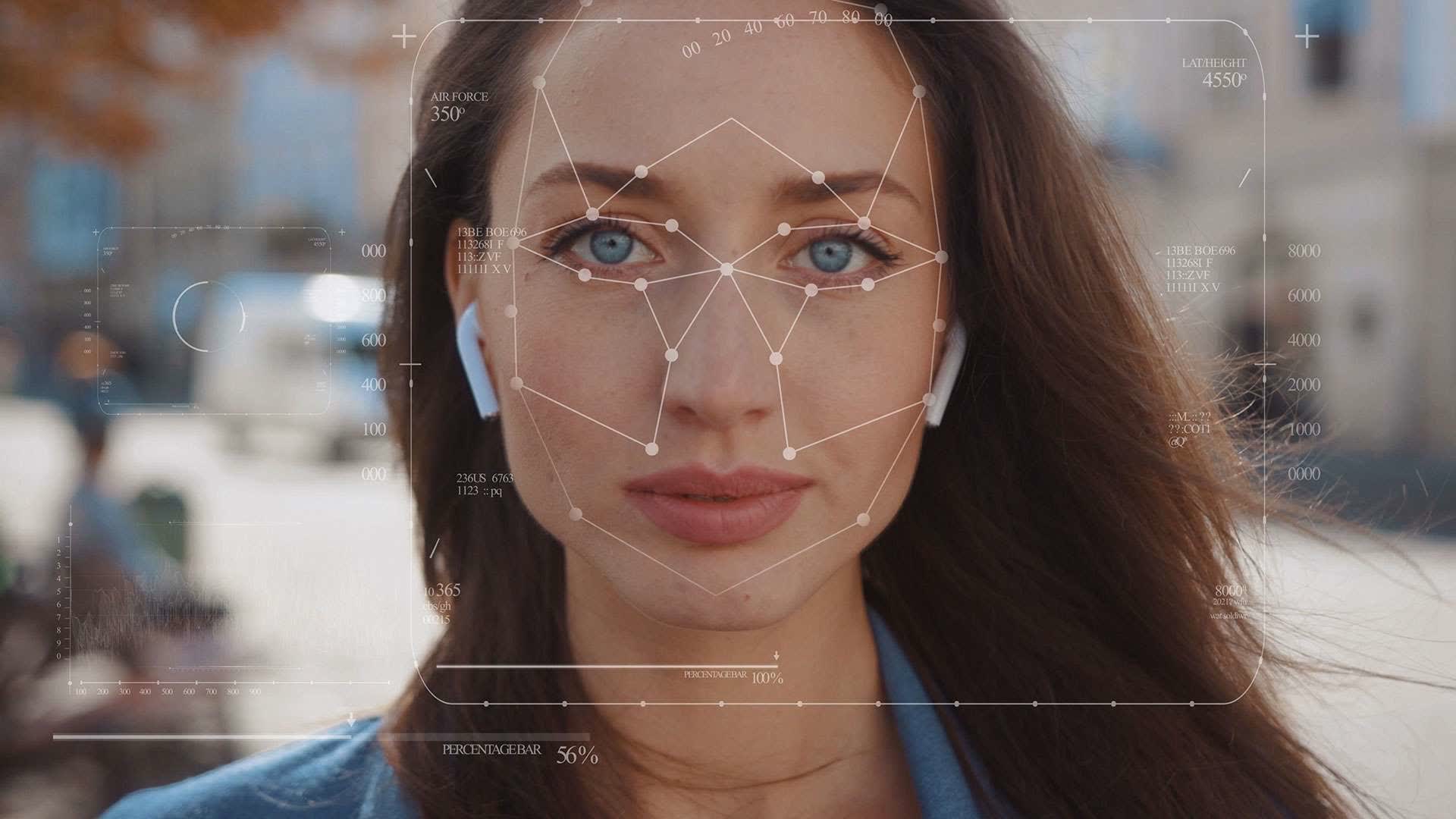

Organizations including Facebook, the Department of Defense, Adobe, and Google have created tools designed to identify deepfakes. The version from Intel and Intel Labs, aptly called FakeCatcher, takes a unique approach: analyzing blood flow.

Rather than examining a video file for telltale signs of manipulation, Intel's platform uses deep learning to analyze the subtle color changes in faces caused by blood flowing through the blood vessels, a technique called photoplethysmography, or PPG.

FakeCatcher looks at the blood flow in the pixels of an image, something deepfakes have yet to replicate convincingly, and examines these signals across multiple frames. It then runs the resulting signatures through a classifier, which determines whether the video in question is real or fake.
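Intel hasn't published FakeCatcher's code, so the following is only a minimal sketch of the general PPG idea, not Intel's implementation. It assumes the face has already been detected and cropped (each frame arrives as a list of RGB pixel tuples), uses the mean green-channel value per frame as the PPG trace, and measures how much of that trace's spectral power falls in a typical human heart-rate band (assumed here to be 0.7 to 4 Hz). The `classify` function is a hypothetical threshold rule standing in for the trained deep-learning classifier described above; all function names, the frequency band, and the 0.5 threshold are illustrative assumptions.

```python
import math


def green_channel_trace(frames):
    """Return the mean green value of each frame, with the overall mean removed.

    Assumption: each frame is a list of (r, g, b) tuples already cropped to
    the face region; face detection and tracking are out of scope here.
    """
    trace = [sum(px[1] for px in frame) / len(frame) for frame in frames]
    mean = sum(trace) / len(trace)
    # Subtracting the mean drops the constant skin tone, leaving the small
    # frame-to-frame variation that a pulse would cause.
    return [v - mean for v in trace]


def band_power_ratio(signal, fps, lo_hz=0.7, hi_hz=4.0):
    """Fraction of the signal's spectral power inside an assumed heart-rate band.

    0.7-4.0 Hz corresponds to roughly 42-240 beats per minute. A naive
    discrete Fourier transform keeps the sketch dependency-free; it is
    O(n^2), which is fine for a few seconds of video.
    """
    n = len(signal)
    total = in_band = 0.0
    for k in range(1, n // 2 + 1):  # skip k=0 (DC); stop at Nyquist
        freq = k * fps / n
        re = sum(s * math.cos(2 * math.pi * k * t / n) for t, s in enumerate(signal))
        im = sum(s * math.sin(2 * math.pi * k * t / n) for t, s in enumerate(signal))
        power = re * re + im * im
        total += power
        if lo_hz <= freq <= hi_hz:
            in_band += power
    return in_band / total if total > 0 else 0.0


def classify(frames, fps, threshold=0.5):
    """Hypothetical stand-in for a trained classifier: a single threshold."""
    ratio = band_power_ratio(green_channel_trace(frames), fps)
    return "real" if ratio >= threshold else "fake"


def synthetic_clip(freq_hz, fps=30, seconds=5, pixels=4):
    """Build a toy clip whose green channel oscillates at `freq_hz`."""
    frames = []
    for t in range(fps * seconds):
        g = 120 + 2 * math.sin(2 * math.pi * freq_hz * t / fps)
        frames.append([(150, g, 110)] * pixels)
    return frames


if __name__ == "__main__":
    print(classify(synthetic_clip(1.2), fps=30))   # 72 bpm pulse -> real
    print(classify(synthetic_clip(10.0), fps=30))  # 10 Hz flicker -> fake
```

The synthetic clips only show the shape of the pipeline: a face-like signal pulsing at 1.2 Hz (72 bpm) lands inside the heart-rate band, while a 10 Hz flicker does not. A real detector would also have to cope with head motion, lighting changes, and compression, and FakeCatcher reportedly combines its PPG signals with eye-gaze cues; none of that is modeled here.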

Intel says that, combined with eye-gaze-based detection, the technique can determine whether a video is real within milliseconds, with 96% accuracy. The company added that the platform runs on 3rd Gen Xeon Scalable processors, supports up to 72 concurrent detection streams, and operates through a web interface.

A real-time solution with such a high accuracy rate could make a massive difference in the online war against disinformation. On the flip side, it could also result in deepfakes becoming even more realistic as creators try to fool the system.

https://www.techspot.com/news/96655-intel-detection-tool-uses-blood-flow-identify-deepfakes.html