Big quote: "The first forensics tools for catching revenge porn and fake news created with AI have been developed through a program run by the US Defense Department."

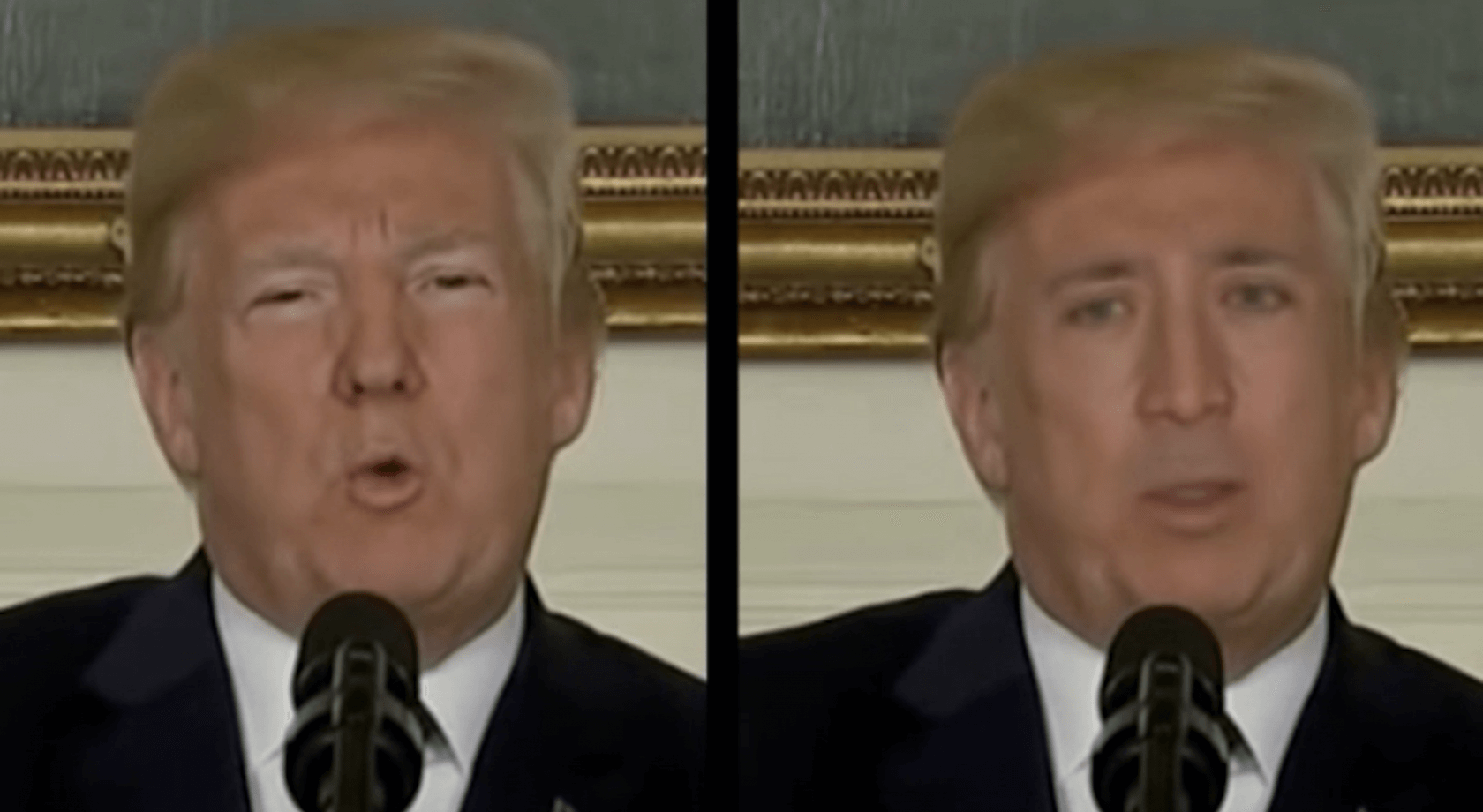

Deepfakes, as they are known, are videos that use machine learning to superimpose one person's face onto video of another person. The technique has been used to make fake pornographic videos of celebrities, and with the right editing, the results can be entirely convincing.

However, there is an even more nefarious use for the technology. Foreign powers or even domestic troublemakers could use the techniques to create propaganda or synthesized events. For example, imagine turning on the TV and seeing the president of the United States issuing a warning to Americans telling them to kiss their loved ones goodbye because a full nuclear strike was on its way to the US. Or how about Barack Obama using some colorful language (video below)?

For this reason, the Defense Department has been very interested in developing tools that can detect and even combat such fake footage. MIT Technology Review reports that the first of those tools have rolled out through a US Defense Advanced Research Projects Agency (DARPA) program called Media Forensics. The initiative was originally created to automate existing forensic tools. However, the program has since shifted its focus to developing ways to fight "AI-made forgeries."

The most convincing method of creating deepfakes is to pit two neural networks against each other. This setup is called a generative adversarial network, or GAN. For it to work, the GAN has to be fed several images of the person being faked. It then uses these images to try to match the angles and tilts of the face upon which it is being superimposed.

"We've discovered subtle cues in current GAN-manipulated images and videos that allow us to detect the presence of alterations," said Matthew Turek, head of the Media Forensics program.

One of the tells is the lack of blinking in a forged video. Professor Siwei Lyu of the State University of New York (SUNY) described how he and some students created a batch of fakes to see how well traditional forensic tools would perform.

"We generated about 50 fake videos and tried a bunch of traditional forensics methods," said Lyu. "They worked on and off, but not very well."

However, during their experiments, they noticed that the faces in the deepfakes rarely, if ever, blinked. Furthermore, on the rare occasions that they did, the movement looked "unnatural." The reason is simple: the GANs are fed still images, and in nearly all of them the subject's eyes are open.
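To make the blinking tell concrete, here is a minimal Python sketch of a blink-rate check. It is an illustration only and not the method Lyu's team built, which the article does not describe in detail. It assumes some upstream step (not shown) has already produced a per-frame "eye openness" score for the face in the video; the function names, the `closed_below` cutoff, and the `min_rate` threshold are all hypothetical placeholders chosen for this example.

```python
from typing import List


def count_blinks(openness: List[float], closed_below: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive 'closed' frames.

    `openness` is an assumed per-frame eye-openness score (higher = more open).
    `closed_below` and `min_frames` are illustrative values, not tuned ones.
    """
    blinks = 0
    run = 0  # length of the current streak of closed-eye frames
    for value in openness:
        if value < closed_below:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink that is still in progress at the end
        blinks += 1
    return blinks


def blinks_per_minute(openness: List[float], fps: float) -> float:
    """Convert the blink count into a rate, given the video frame rate."""
    if not openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(openness) / fps / 60.0
    return count_blinks(openness) / minutes


def looks_suspicious(openness: List[float], fps: float,
                     min_rate: float = 5.0) -> bool:
    """Flag a clip whose blink rate falls below an assumed minimum."""
    return blinks_per_minute(openness, fps) < min_rate


# Synthetic one-minute clips at 30 frames per second.
fps = 30
natural = [0.3] * (fps * 60)
for start in range(0, len(natural), fps * 4):  # a 3-frame blink every 4 seconds
    natural[start:start + 3] = [0.05] * 3
forged = [0.3] * (fps * 60)                    # eyes never close

print(blinks_per_minute(natural, fps))  # 15.0
print(looks_suspicious(natural, fps))   # False
print(looks_suspicious(forged, fps))    # True
```

A fixed threshold like this would be easy to fool and would misfire on short clips, which is consistent with the researchers' interest in a learned detector rather than a hand-written rule.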

So Lyu and his SUNY students are focusing on creating an AI that looks for this telltale sign. He says their current approach works but can be tricked by feeding the GAN images of the subject blinking. However, he adds that they have a secret technique in the works that is even more effective.

"I'd rather hold off at least for a little bit," said Lyu regarding the method. "We have a little advantage over the forgers right now, and we want to keep that advantage."

Other groups involved in the DARPA program are pursuing similar techniques. Hany Farid, a leading digital forensics expert at Dartmouth College, thinks other clues like odd head movements, strange eye colors, and other facial factors can help determine whether a video has been faked.

"We are working on exploiting these types of physiological signals that, for now at least, are difficult for deepfakes to mimic," said Farid.

Turek says that DARPA intends to continue running the Media Forensics contests so that it can ensure the field keeps up with the faking techniques as they further develop.