Why it matters: Long-time PC industry watchers know that new chip introductions are an important but often mundane part of the PC's evolution. After all, every year you can count on semiconductor giants Intel and AMD to bring their next-generation CPUs to market. This year, however, things are different. Very different.

First, Intel's newest chip, code-named Meteor Lake and officially branded Core Ultra, represents what the company calls its largest architectural change in 40 years.

Second, not only does the new chip bring important new design philosophies, new chip packaging, and support for advanced technologies, it's also the first Intel processor with an integrated AI accelerator, which Intel calls an NPU (neural processing unit).

Finally, we're about to embark on one of the most competitive and interesting PC processor battles we've ever seen. After years of Intel dominance, the shift in computing priorities toward AI is opening potential opportunities for AMD, which beat Intel to market with its AI-accelerated Ryzen 7040, as well as for Qualcomm, whose widely anticipated next-generation processor will incorporate the highly touted Nuvia CPU designs that Qualcomm acquired a few years back. The new Qualcomm chip is also widely expected to offer even higher levels of AI acceleration than either Intel's Meteor Lake or AMD's Ryzen 7040.

Given all these developments, 2024 is looking to be an absolute banner year for exciting new PCs. It's also undoubtedly part of what inspired Intel to make so many big bets in bringing Meteor Lake to market.

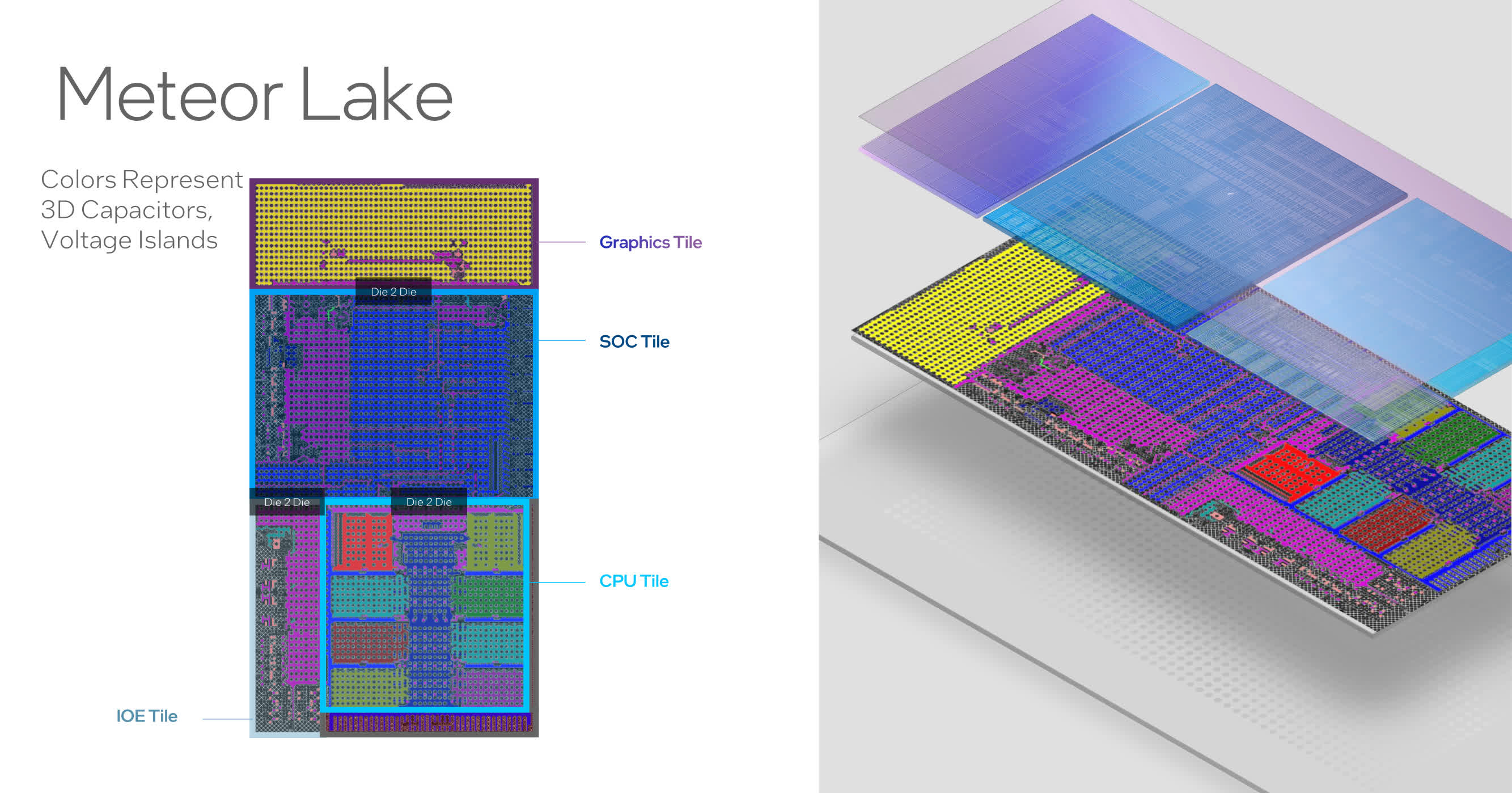

At a high level, one of the many intriguing changes Intel made to Meteor Lake was the use of a multi-tile chiplet design. This means that different components of the finished chip were built using different chip fabrication technologies from different companies and packaged together using a number of Intel's own unique chip packaging technologies.

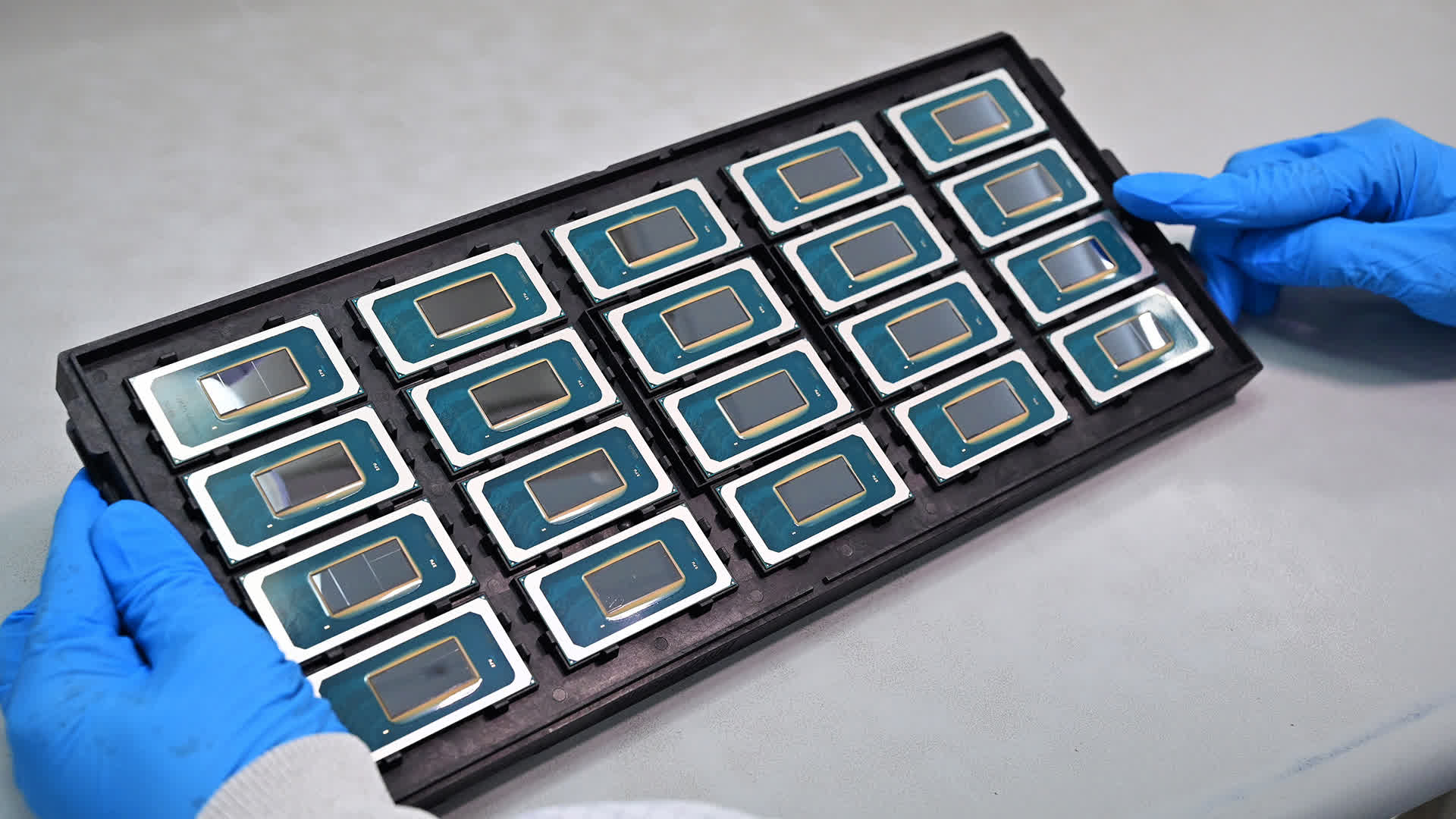

The result is an impressively complex structure that allows each element to be optimized for both cost and performance. To be clear, Intel has built chiplet designs for the server market before, but this is its first PC chip to integrate so many different elements into such a complex package.

In Meteor Lake, the company is also leveraging a chip packaging technology it calls Foveros, which allows multiple tiles to be stacked on top of one another in a 3D fashion. Architecturally, it's an impressive design.

Intel extended this hybrid, flexible design philosophy into several of the individual elements that make up Meteor Lake, including the core compute tile, which is the first to use Intel's own Intel 4 manufacturing process. Here, Intel chose to integrate a number of lower-power efficiency CPU cores alongside the main performance cores, in a design that's conceptually similar to the "big.LITTLE" concept first introduced by Arm and adopted by Intel itself starting with its 12th-generation Core (Alder Lake) processors.

The new Intel design offers 8 efficiency cores that sit alongside 6 performance cores, with the goal of handling both highly demanding single-threaded applications and a simultaneous mix of challenging multi-threaded apps and less demanding background tasks. In other words, Intel is trying to accommodate all the main types of work people do on PCs, from gaming to office productivity, video editing, and more.

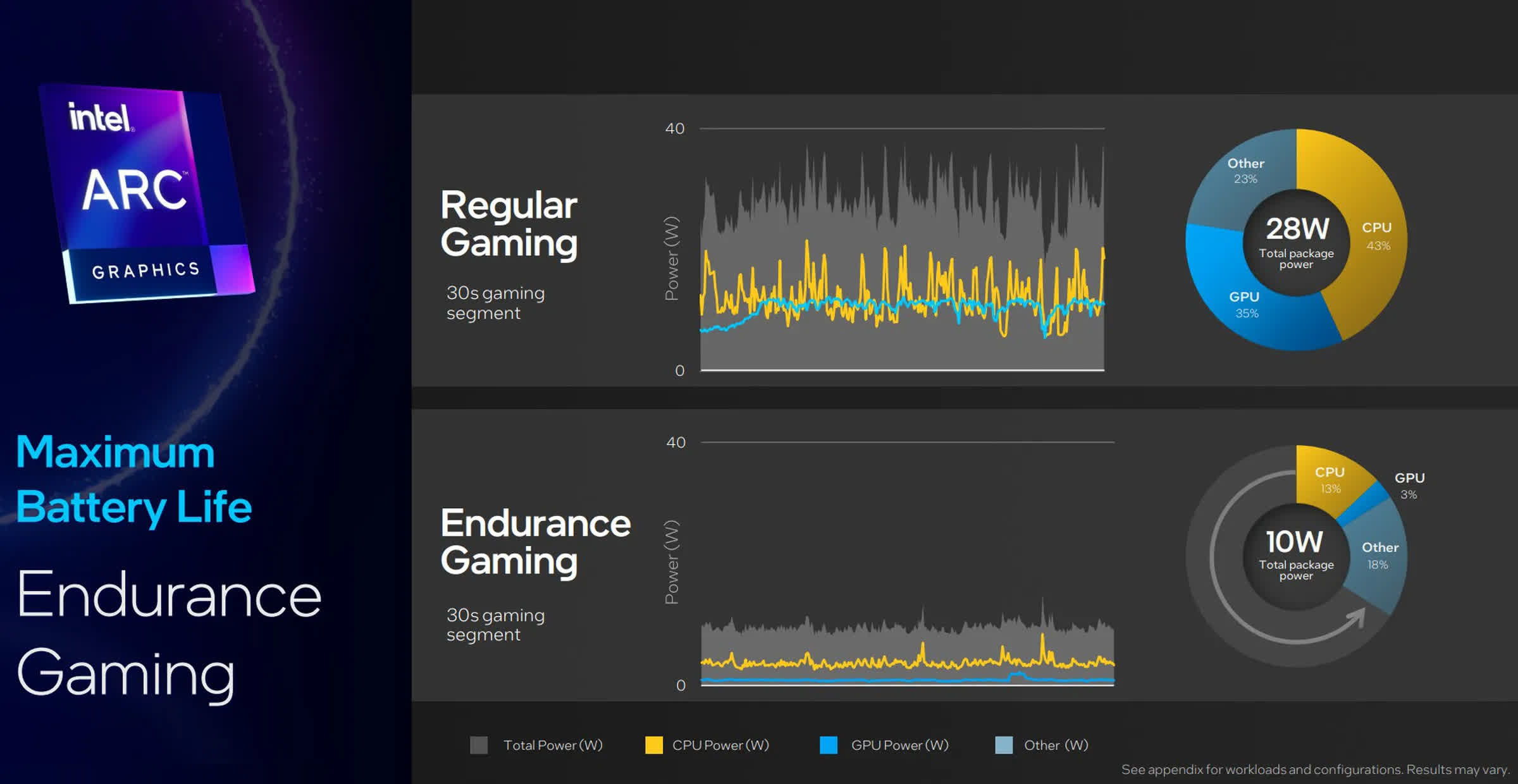

To improve the power efficiency and battery life of Meteor Lake-based systems even further, the company also placed two low-power efficiency CPU cores on a separate SoC tile. This allows the power-hungry compute tile to be switched off when it isn't needed, while the system continues to handle basic computing tasks.

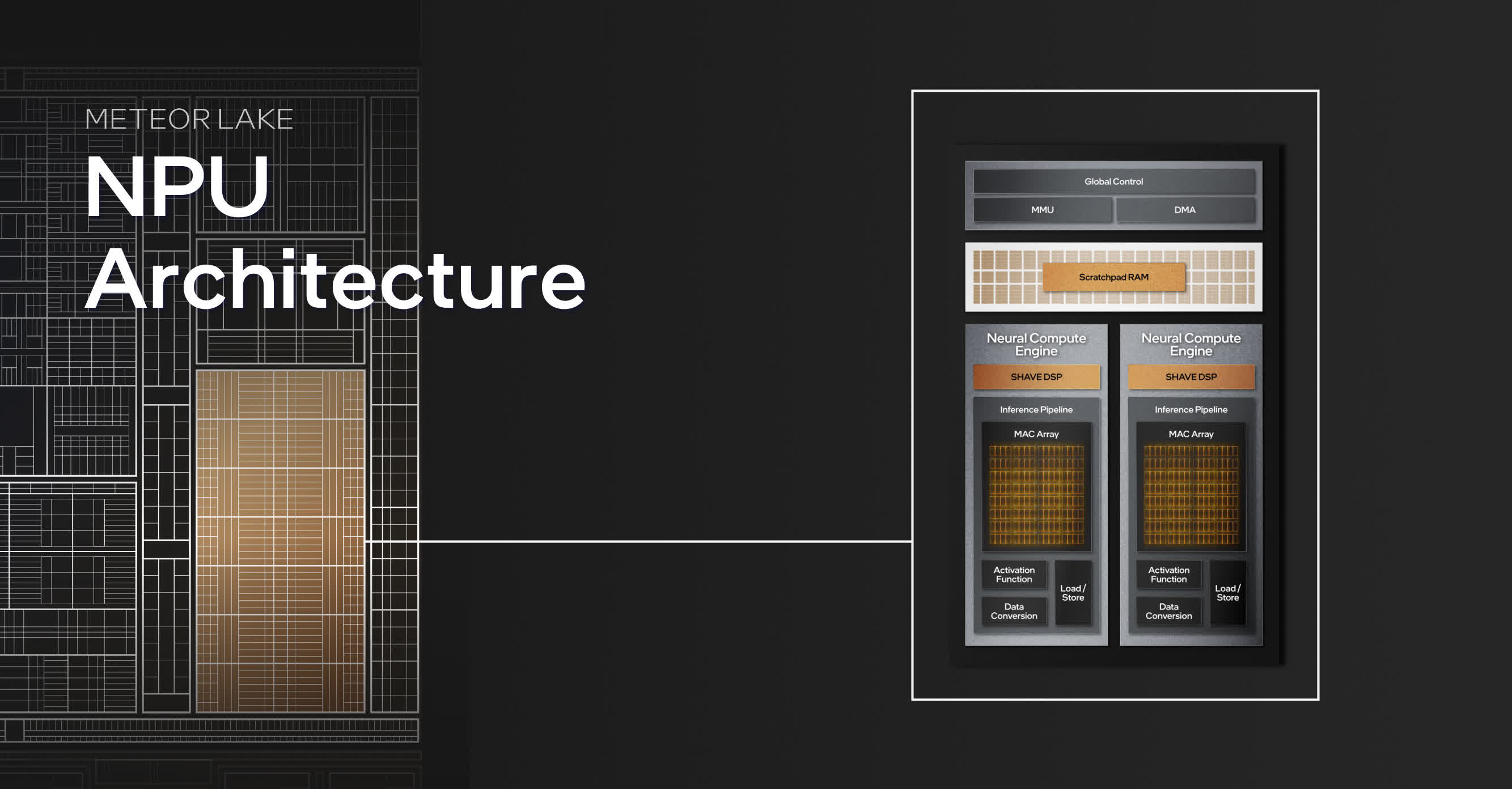

The NPU, which also sits on the SoC tile, builds on the Movidius VPU (vision processing unit) technology that Intel purchased back in 2016.

Intel's NPU is expected to offer raw processing performance of roughly 10 TOPS. More importantly, though, the number of software applications Intel expects to support it is significantly higher than for AMD's or even Qualcomm's accelerators. The net result is that the real-world value of what Intel is bringing to market with Meteor Lake is much higher than the simple TOPS figure suggests.

For example, Intel said that Adobe's full Creative Cloud suite and the open-source Blender application will be among the many applications supported by its first-generation AI accelerator.

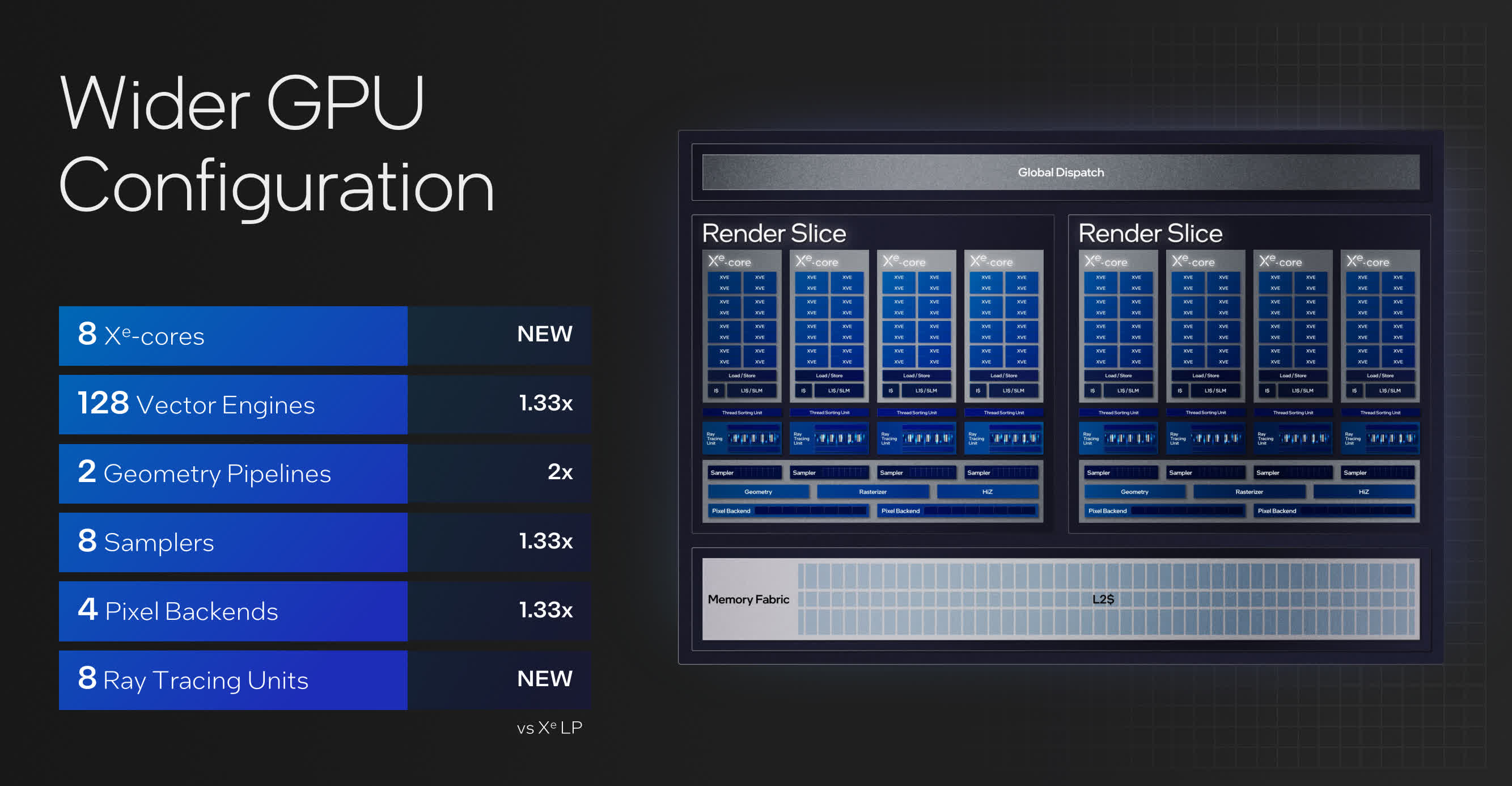

Another big change in Meteor Lake is a GPU tile based on the company's Arc discrete GPU architecture, rather than the integrated graphics designs Intel has offered in the past. The Xe-LPG GPU tile offers up to 2x the performance of Intel's previous integrated graphics solutions.

From an I/O perspective, Meteor Lake also supports Wi-Fi 6E and Wi-Fi 7 as well as Thunderbolt 4 and PCIe Gen5. In addition, its media engine supports 8K video.

Collectively, it's an impressive set of technologies that Intel hopes will catapult it back into a position of leadership in semiconductor innovation overall. The company would also like to once again lead the market in client device innovation. How this actually plays out remains to be seen, but there's little doubt that Intel's desire to regain its position as a leader in both semiconductor design and manufacturing is very real.

The new Core Ultra offers the company a solid opportunity to begin to reassert a performance lead, which others will then need to catch up to, and promises to make the 2024 PC market an extremely interesting one to watch.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech

https://www.techspot.com/news/100207-intel-hopes-reinvent-pc-core-ultra-soc.html