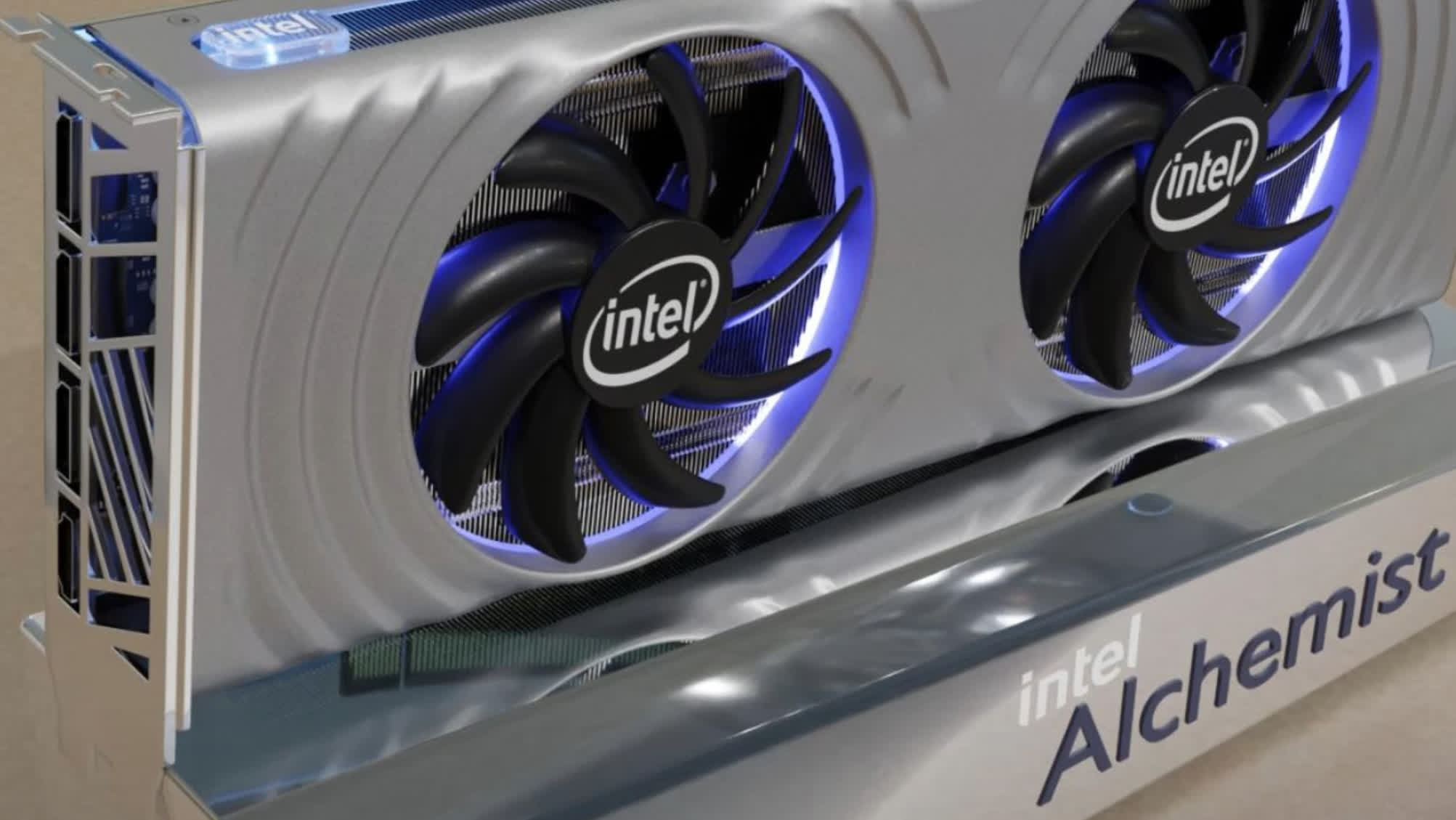

In context: The PC community has long awaited the launch of Intel's first true dedicated gaming GPUs, and now, that day is just around the corner. With competition from AMD and Nvidia likely to arrive later the same year, an early 2022 launch window for the first generation of Intel's upcoming Arc GPUs (codenamed Alchemist) seems likely. But how will Alchemist GPUs compare to existing competition?

Thanks to new rumors allegedly published on the ExpReview forums, we might finally have an answer to that question. ExpReview, for the unaware, is a Chinese tech news site that emphasizes PC hardware coverage, including reviews, benchmarks, and leaks.

According to the site, Intel's Alchemist architecture is set to launch with several models sometime in March 2022 -- a January release was reportedly planned, but it had to be pushed back by a couple of months. In any case, Q1 is still on the table, apparently.

Intel's planned offerings include three discrete desktop GPUs and five laptop GPUs (mostly variants of the desktop cards).

The desktop line-up will consist of the Intel Xe HPG 512 EU, the 384 EU, and the 128 EU.

The 128 EU is rumored to launch with 1024 ALUs, 6GB of VRAM, a 75W TGP, and a 96-bit memory bus. Intel is hoping the 128 EU will be able to take on Nvidia's GTX 1650, but with RT support -- something both the 1650 and 1650 Super lack, understandably so given the performance hit that comes with turning such features on. Base clock speeds will probably cap out at 2.5GHz here.

The 512 EU is set to ship with up to 16GB of VRAM, 4096 ALUs, a 256-bit memory bus, a TGP of 225W, and rumored clock speeds also maxing out at around 2.5GHz. The Blue Team is positioning this model as a competitor to Nvidia's RTX 3070 and 3070 Ti.

The 384 EU, on the other hand, will take on Nvidia's lower-end RTX 3060 and 3060 Ti with a 192-bit memory bus, up to 12GB of VRAM, a TGP of around 200W, and 3072 ALUs.

The laptop version of the 128 EU drops the VRAM to a measly 4GB, and reduces power draw to about 30W. The other low-end laptop chip, the 96 EU, downgrades the ALU count to 768, while keeping everything else roughly the same.

Intel's high-end Alchemist laptop GPUs differ from their desktop counterparts primarily in power draw, with lower TGP across the board (up to 150W for the 512 EU and up to 120W for the 384 EU). The mid-range 256 EU is a laptop-only card with 2048 ALUs, 8GB of VRAM, a 128-bit bus, and a TGP of up to 80W.
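For readers who want to sanity-check the leak, the rumored ALU counts all line up with a simple ratio of 8 ALUs per EU. The short Python sketch below only illustrates that arithmetic. The ratio is inferred from the rumored numbers above rather than confirmed by Intel, and the names `ALUS_PER_EU`, `alus_for`, and `RUMORED_ALUS` are made up for this example.

```python
# Assumption: 8 ALUs per EU, inferred from the rumored figures in this
# article (e.g. 128 EUs -> 1024 ALUs). Not an Intel-confirmed spec.
ALUS_PER_EU = 8


def alus_for(eu_count: int) -> int:
    """Return the ALU count implied by an EU count under the assumed ratio."""
    return eu_count * ALUS_PER_EU


# Rumored EU -> ALU pairs quoted in the article (desktop and laptop parts).
RUMORED_ALUS = {512: 4096, 384: 3072, 256: 2048, 128: 1024, 96: 768}

for eus, rumored in RUMORED_ALUS.items():
    implied = alus_for(eus)
    status = "matches" if implied == rumored else "differs from"
    print(f"{eus} EU: implied {implied} ALUs {status} rumored {rumored}")
```

If a future leak lists an EU count without an ALU figure, the same helper gives a rough estimate, provided the assumed ratio still holds.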

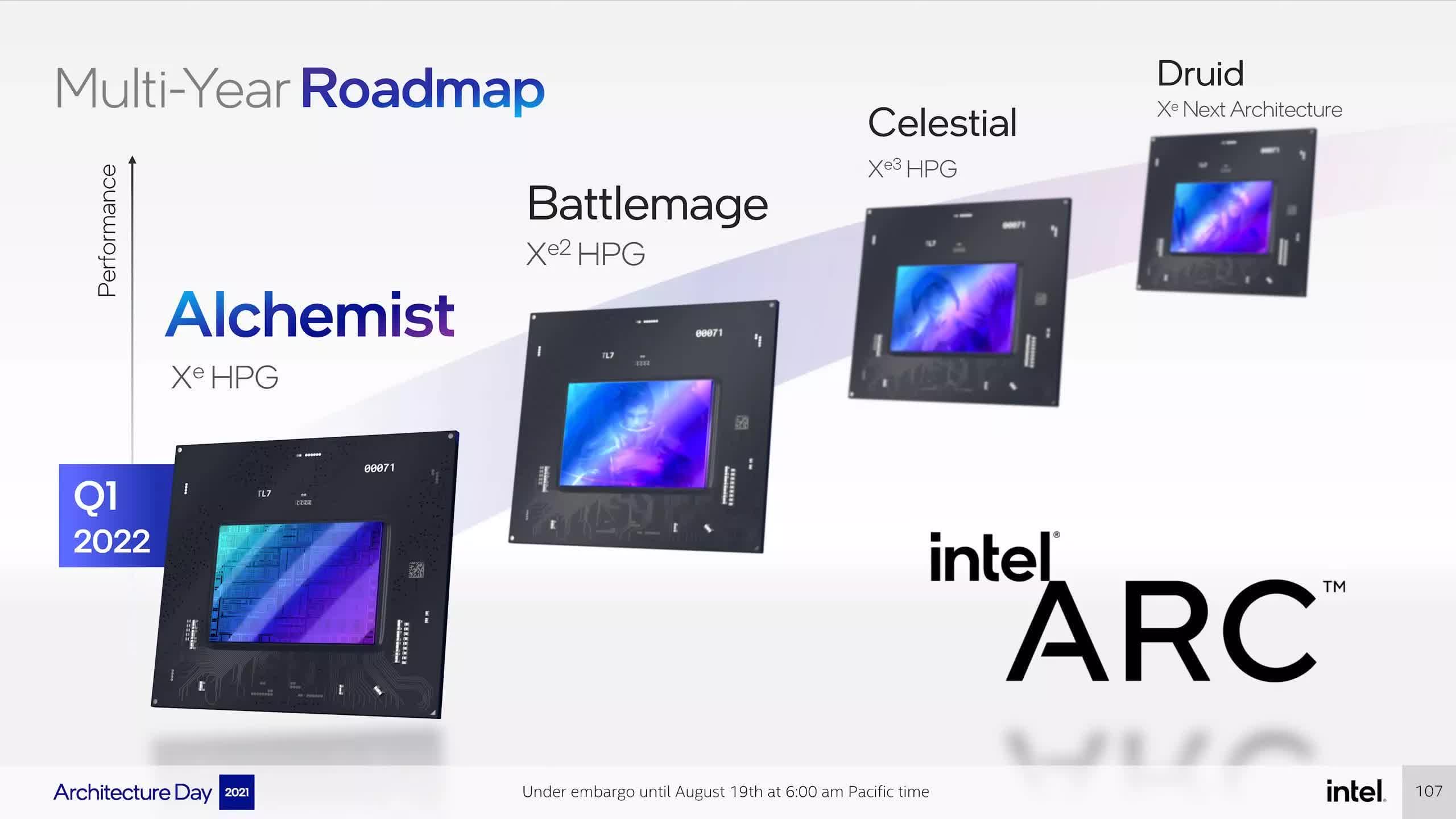

As you can see, Intel is primarily targeting AMD's and Nvidia's current-gen cards with its first gaming GPU launch. As such, Blue Team fans will likely need to wait for the company's next GPU architecture -- codenamed "Battlemage" -- for an Intel alternative to AMD's RDNA3 and Nvidia's Lovelace cards.

https://www.techspot.com/news/92809-intel-arc-alchemist-gpus-rumored-launch-march-take.html