Something to look forward to: For a while now, rumors have pegged the performance of at least one of Intel’s upcoming discrete desktop GPUs at around the level of Nvidia’s RTX 3070 or 3070 Ti. The latest leaked benchmark for what seems to be the top-end Intel GPU lends further support to these rumors.

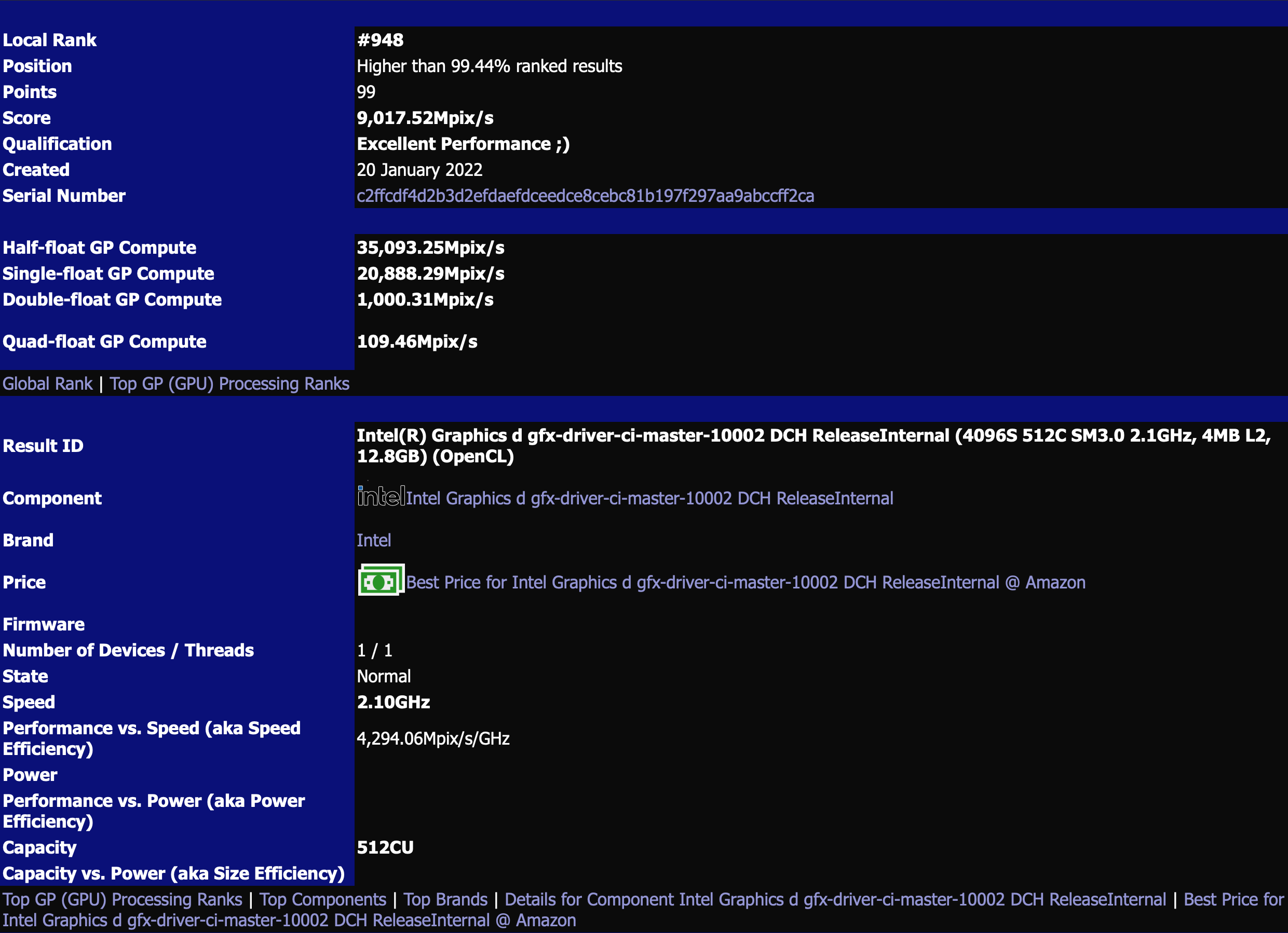

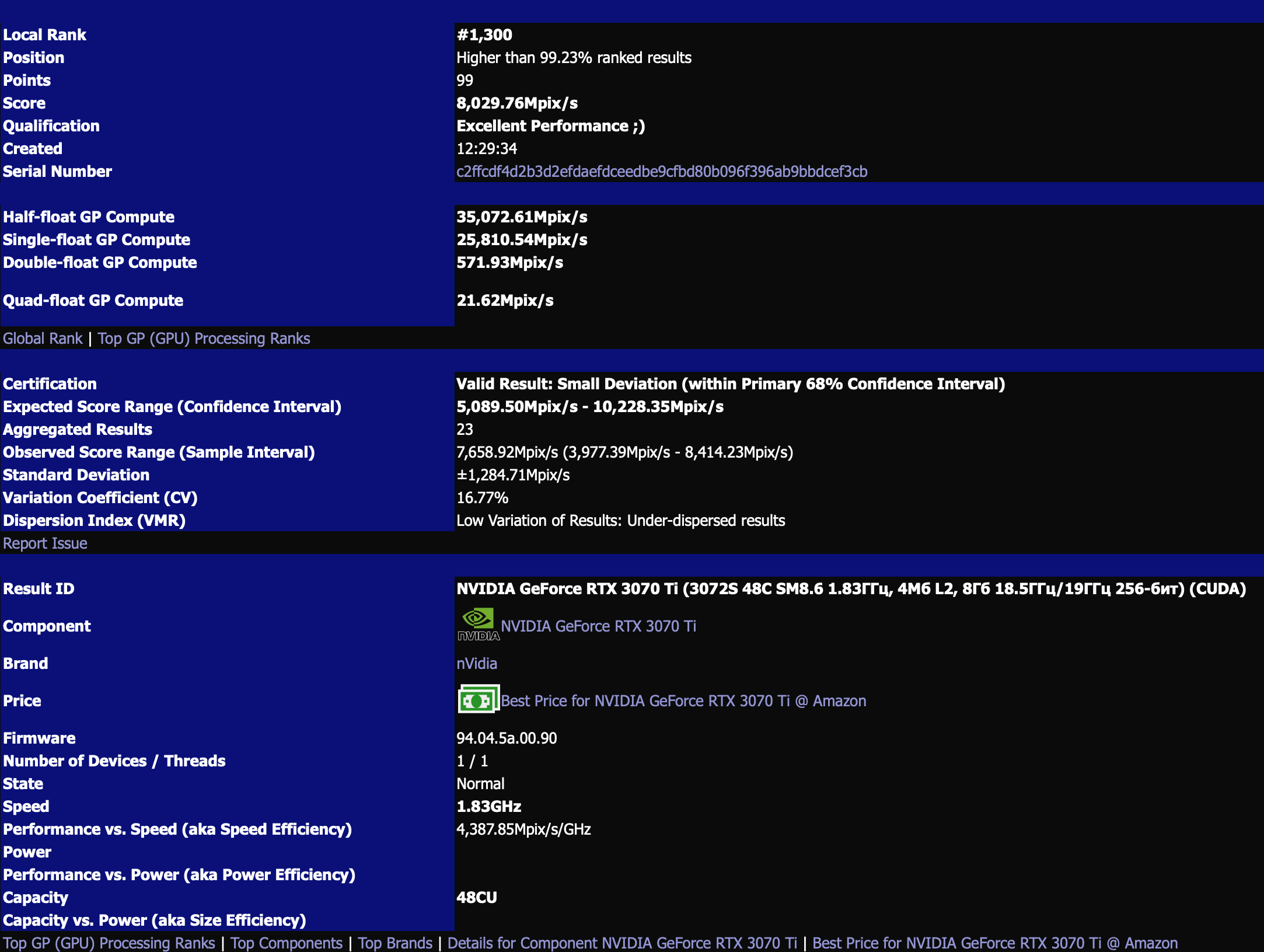

This week, a benchmark for an Intel graphics card appeared on SiSoftware, and the card compares favorably against Nvidia’s RTX 3070 Ti. The site scores the card on stats like clock speed, general-purpose (GP) compute at multiple levels of precision, and efficiency.

The benchmark doesn’t name the Intel card (it only lists the driver), but its stats strongly suggest it’s the rumored high-end Intel Xe HPG 512 EU. It’s shown to have 4096 ALUs, 512 EUs, and, oddly, 12.8GB of VRAM. Earlier rumors gave the Xe HPG 512 EU 16GB of VRAM (above).

The GPU in this test has a clock speed of 2.1GHz, but we don’t know if this is a base or boost clock. In either case, it beats the 1.8GHz clock of a 3070 Ti in the same benchmark (below).

The RTX 3070 Ti wins in single-float GP compute at 25,810.54 megapixels per second (Mpix/s) versus the Intel card's 20,888.29Mpix/s. However, the Intel card edges out the 3070 Ti in half-float at 35,093.25 versus 35,072.61Mpix/s (effectively a tie), and leads clearly in double-float at 1,000.31 versus 571.93Mpix/s and quad-float at 109.46 versus 21.62Mpix/s.
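To put those gaps in proportion, here is a rough sketch that turns the quoted scores into Intel-to-Nvidia ratios. The numbers are copied from the leaked listing as reported above; the `scores` table and the `intel_to_nvidia_ratio` helper are illustrative names, not anything SiSoftware provides.

```python
# Scores in Mpix/s as quoted in the article: (Intel card, RTX 3070 Ti).
# These are leaked, unverified figures, so treat the ratios as rough.
scores = {
    "half-float": (35093.25, 35072.61),
    "single-float": (20888.29, 25810.54),
    "double-float": (1000.31, 571.93),
    "quad-float": (109.46, 21.62),
}


def intel_to_nvidia_ratio(intel: float, nvidia: float) -> float:
    """Return how many times faster (or slower) the Intel score is."""
    return intel / nvidia


# A ratio above 1 means the Intel card leads at that precision.
ratios = {name: intel_to_nvidia_ratio(*pair) for name, pair in scores.items()}

for name, ratio in ratios.items():
    leader = "Intel" if ratio > 1 else "RTX 3070 Ti"
    print(f"{name:>12}: {ratio:.2f}x ({leader} ahead)")
```

By this measure the Intel card sits at roughly 0.81x the 3070 Ti in single-float, about level in half-float, around 1.75x in double-float, and about 5x in quad-float.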

The 3070 Ti wins slightly in SiSoftware's overall speed efficiency stat, but the Intel GPU ranks slightly higher among GPUs overall (both are in the 99th percentile). Still, we likely won't know how the Arc Alchemist GPUs stack up until they're in the wild and accurate game benchmarks show up.

Earlier rumors positioned a mid-range Intel model as a competitor to the RTX 3060 and a lower-end model against the GTX 1650, though with ray tracing and XeSS (Intel’s DLSS competitor), both of which the 1650 lacks. The Arc Alchemist GPUs are supposed to launch in March.

https://www.techspot.com/news/93087-leaked-benchmarks-show-intel-arc-alchemist-gpu-performing.html