The big picture: On multiple occasions in recent years, Nvidia CEO Jensen Huang has stated that "Moore's Law is dead" to defend GPU price increases. During a summit, AMD's chief technology officer, Mark Papermaster, disputed these repeated claims and detailed the true reasoning behind higher costs.

Since 2017, Jensen Huang has declared Moore's Law dead several times, typically in response to consumer questions about the steadily increasing prices of Nvidia's graphics cards.

While Nvidia graphics cards are usually good products -- the GTX 1630 being an exception -- buyers are right to question their ever-growing prices. For example, in 2013, Nvidia launched the GTX 780 at a retail price of $649. Meanwhile, last month, the RTX 4080 started at a staggering $1199.

That's a price increase of nearly 85%. While the performance gains have certainly been larger than that, the dollar has not lost value at anything like that rate over the last nine years. Following the reveal of the RTX 4090 and both RTX 4080 models, Huang once again claimed that "Moore's Law is dead" during a Q&A with media reporters.
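For readers who want to check the price comparison above, here is a minimal sketch of the arithmetic. The helper name `percent_increase` and the variable names are illustrative assumptions; the two prices are the launch figures cited in this article, and the result is a nominal change, not adjusted for inflation.

```python
def percent_increase(old_price: float, new_price: float) -> float:
    """Return the relative increase from old_price to new_price, in percent."""
    return (new_price - old_price) / old_price * 100


# Launch prices in USD as cited in the article (nominal, not inflation-adjusted).
gtx_780_launch = 649.0    # GTX 780, 2013
rtx_4080_launch = 1199.0  # RTX 4080 starting price

increase = percent_increase(gtx_780_launch, rtx_4080_launch)
print(f"{increase:.1f}%")  # prints "84.7%"
```

The result, roughly 84.7%, is where the "nearly 85%" figure comes from.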

As is tradition lately with the graphics card market, AMD has shown up in an attempt to one-up Nvidia. Team Red's chief technology officer, Mark Papermaster, spoke at a recent summit and disputed Huang's claim, emphasizing that Moore's Law is still alive and well.

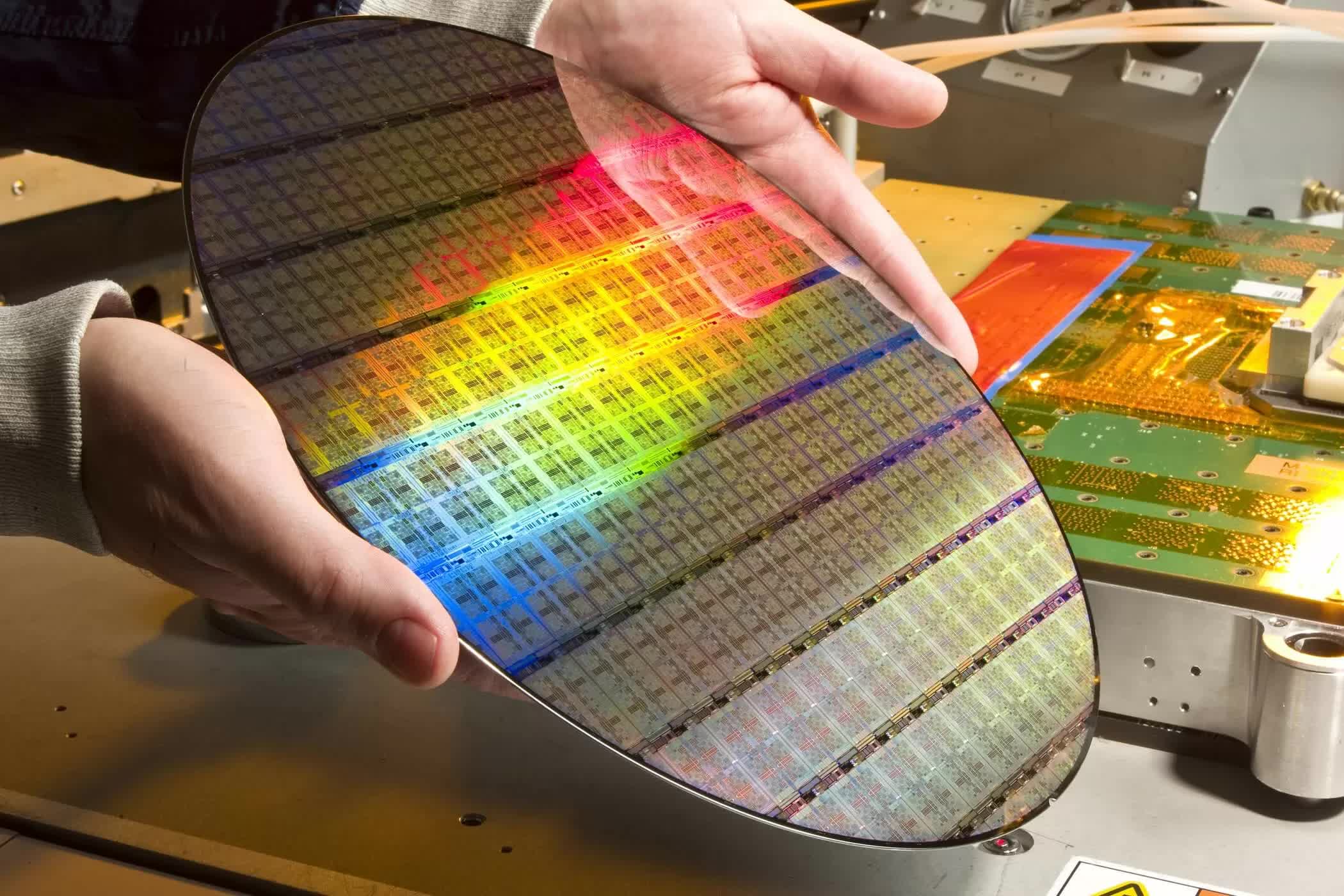

"It's not that there's not going to be exciting new transistor technologies... it's very, very clear to me the advances that we're going to make to keep improving the transistor technology, but they're more expensive," said the CTO. "So you're going to have to use accelerators, GPU acceleration, specialized function…" he added to explain how AMD manages manufacturing costs.

According to Papermaster, AMD had expected the price increases and described them as a leading factor in the company's recent switches to chiplet designs in its processors and graphics cards.

The conflicting positions from AMD and Nvidia are puzzling once you remember that both companies receive processor wafers from TSMC. Team Red seems to be pushing to circumvent the supposed "death" of Moore's Law, while Team Green has seemingly decided to fully embrace the "Moore's Law is dead" philosophy.

Overall, in the words of the great Mark Twain, the reports of Moore's Law's death were greatly exaggerated. (Okay, he didn't say that.) The law isn't dead, but keeping it alive is becoming more complex and expensive. It's just a matter of how each company approaches the situation.

https://www.techspot.com/news/96841-moore-law-isnt-dead-according-amd-but-has.html