What just happened? One of Intel Arc's most significant flaws is its performance within games using the DirectX 9 API. Some competing cards can outperform it by over 100 percent. However, this week, Intel released a new driver update that boasts "up to 1.8x" frame rates in DX9 titles.

In October, Intel released the second and third entries in the "A-series" of its Arc line of graphics cards, the A750 and the A770. Our review of both products showed they had promise, but they could only reach their potential if Intel put in the effort and care to make some much-needed improvements. The most pressing of those was DirectX 9 (DX9) performance.

Shortly before launch, Intel announced that Arc would not feature native DX9 support and would instead emulate the API. Intel expected that this change would allow more focus to be put on the card's DirectX 12 (DX12) support while not sacrificing any notable performance in DX9 titles. Unfortunately for Intel, that was not the case.

When testing the A750 and A770 in Counter-Strike: Global Offensive (CS:GO), the most popular game on Steam, which also happens to use DX9, the performance was simply jarring. While every other card tested in the review hit the 350–360 FPS range, the Arc graphics cards only managed a rather embarrassing 146 FPS average.

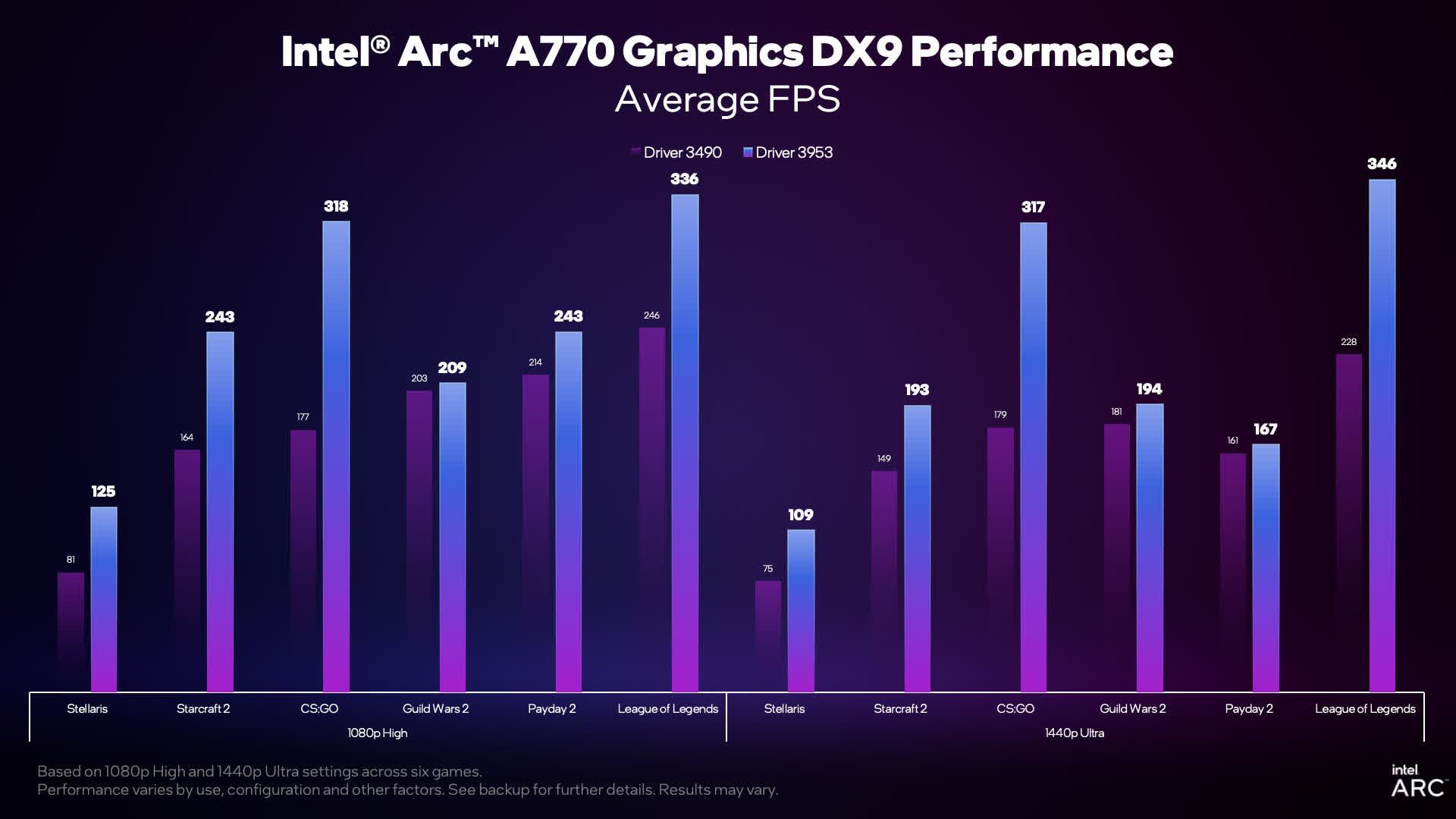

Intel did warn buyers that the initial launch of Arc may not always be smooth sailing, but losing 200 FPS is unacceptable. Since then, the company's developers have been working on new drivers to improve performance in all games, especially those using DX9. This week, Intel released Arc Driver 3953, which the company says can boost frame rates by "up to 1.8x."

Frame rates do not improve significantly in some games, such as Guild Wars 2 or Payday 2, but others either come close to or reach the advertised 1.8x increase. League of Legends, Stellaris, and StarCraft II all show good performance boosts, with League of Legends improving by nearly 40 percent, but the real winner in these results is CS:GO.

Intel's tests on CS:GO showed the game's average frame rate rising from 177 FPS to 318 FPS with the new drivers, which brings it closer to the 350–360 FPS range the other cards reached when we tested them in October. It is worth noting that Intel tested at 1080p with high settings, whereas we used medium settings. CS:GO's results were essentially unchanged at 1440p, a pattern we also saw in our review.
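
As a quick sanity check on the headline figure, the arithmetic can be sketched in a few lines of Python. The FPS values are the ones quoted in this article; the `speedup` helper is our own illustrative name, not part of any Intel tool. The comparison against our October numbers is only rough, since Intel tested at high settings and we tested at medium.

```python
def speedup(before_fps: float, after_fps: float) -> float:
    """Return the frame-rate multiplier (after / before)."""
    if before_fps <= 0:
        raise ValueError("before_fps must be positive")
    return after_fps / before_fps


# Intel's reported CS:GO averages at 1080p, high settings (from the article).
intel_gain = speedup(177, 318)

# Our October review: Arc averaged 146 FPS, while other cards hit 350-360 FPS.
# The lower bound of that range (350) is used here as a conservative reference.
launch_gap = speedup(146, 350)

# Rough remaining gap after the update; the settings differ, so treat as approximate.
remaining_gap = speedup(318, 350)

print(f"Driver gain in CS:GO:       {intel_gain:.2f}x")
print(f"Competitor lead at launch:  {launch_gap:.2f}x")
print(f"Approximate lead remaining: {remaining_gap:.2f}x")
```

The first figure rounds to 1.80x, which lines up with Intel's "up to 1.8x" claim for this title.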

Overall, these changes to the Arc drivers are a great sign. More competition in the graphics card market is a big deal for consumers, and it is nice to see Intel putting in the effort to keep improving Arc and make it a viable third option for buyers.

You can grab the new drivers from our downloads section.

https://www.techspot.com/news/96893-new-intel-arc-drivers-bring-massive-improvements-directx.html