A hot potato: Generative AI models have to be trained on an inordinate amount of source material before they are ready for prime time, and that can be a problem for creative professionals who don't want models learning from their content. Thanks to a new software tool, visual artists now have another way to say "no" to the AI-feeding machine.

Nightshade was initially introduced in October 2023 as a tool to challenge large generative AI models. Created by researchers at the University of Chicago, Nightshade is an "offensive" tool that can protect artists' and creators' work by "poisoning" an image and making it unsuitable for AI training. The program is now available for anyone to try, but it likely won't be enough to turn the modern AI business upside down.

Images processed with Nightshade can make AI models exhibit unpredictable behavior, the researchers said, such as generating an image of a floating handbag for a prompt that asked for an image of a cow flying in space. Nightshade can deter model trainers and AI companies that don't respect copyright, opt-out lists, and do-not-scrape directives in robots.txt.
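For readers unfamiliar with do-not-scrape directives, here is a minimal sketch of how a well-behaved crawler is expected to consult robots.txt before downloading an image. It uses Python's standard `urllib.robotparser` module. The crawler name `ExampleAIBot`, the rules, and the URL are hypothetical and chosen only for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one (made-up) AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def may_fetch(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical artwork URL used for both checks.
ARTWORK_URL = "https://example.com/gallery/cow.png"

ai_allowed = may_fetch(ROBOTS_TXT, "ExampleAIBot", ARTWORK_URL)
other_allowed = may_fetch(ROBOTS_TXT, "SomeBrowser", ARTWORK_URL)

print("ExampleAIBot allowed:", ai_allowed)
print("Other agents allowed:", other_allowed)
```

The check is purely voluntary: nothing in robots.txt stops a scraper that simply never runs it, which is the gap a tool like Nightshade is meant to address.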

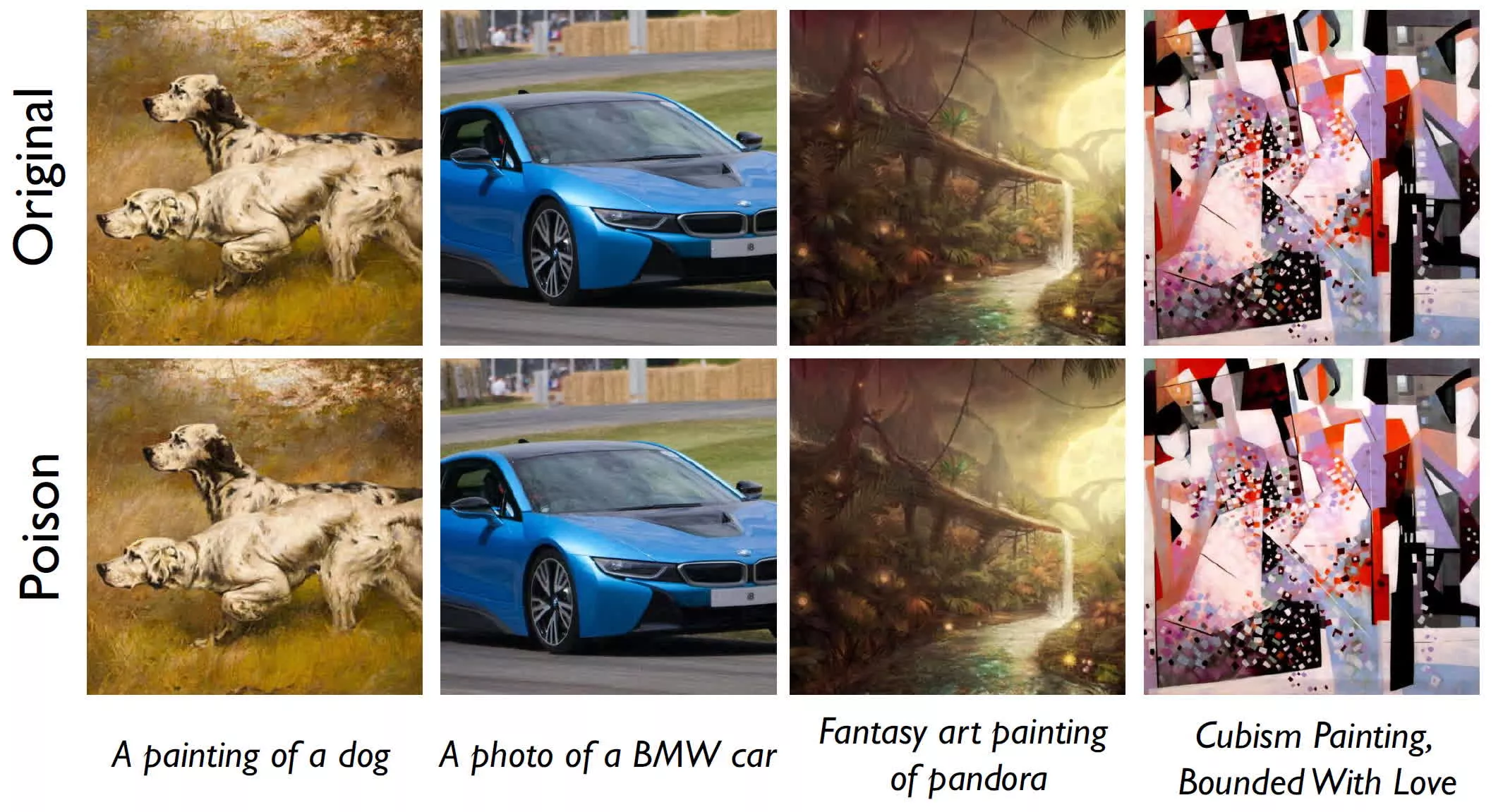

Nightshade is computed as a "multi-objective optimization that minimizes visible changes to the original image," the researchers said. While humans see a shaded image that is largely unchanged from the original artwork, an AI model would perceive a very different composition. AI models trained on Nightshade-poisoned images would generate an image resembling a "dog" from a prompt asking for a cat. Unpredictable results could make training on poisoned images less useful, and could prompt AI companies to train exclusively on free or licensed content.
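To give a rough feel for what a multi-objective optimization of this kind involves, here is a toy sketch. It is not Nightshade's actual algorithm, which the article does not detail. It assumes a made-up linear "feature extractor" `W` standing in for a model, four-value lists standing in for images, and a hypothetical weight `lam` that trades off the two goals: move the image's features toward a target concept, and keep the pixel change small.

```python
# Toy illustration only: every name and number here is an assumption.
# W is a hypothetical stand-in for how a model turns pixels into features.
W = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 1.0, 0.0, 1.0]]


def features(x):
    """Apply the stand-in linear feature extractor to an image vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def sq_norm(v):
    """Squared Euclidean length of a vector."""
    return sum(c * c for c in v)


def poison(x, target_feat, lam=0.1, lr=0.1, steps=200):
    """Gradient descent on ||W(x + delta) - target_feat||^2 + lam * ||delta||^2."""
    delta = [0.0] * len(x)
    for _ in range(steps):
        shifted = [a + d for a, d in zip(x, delta)]
        resid = [f - t for f, t in zip(features(shifted), target_feat)]
        # Gradient: 2 * W^T * resid (feature goal) + 2 * lam * delta (visibility goal).
        grad = [
            2 * sum(W[i][j] * resid[i] for i in range(len(W))) + 2 * lam * delta[j]
            for j in range(len(x))
        ]
        delta = [d - lr * g for d, g in zip(delta, grad)]
    return delta


original = [0.2, 0.4, 0.6, 0.8]  # stand-in for the "cow" artwork
target = [0.9, 0.1, 0.3, 0.7]    # stand-in for a "handbag" image

delta = poison(original, features(target))
shaded = [a + d for a, d in zip(original, delta)]

# How far the features are from the target concept, before and after shading.
gap_before = sq_norm([a - b for a, b in zip(features(original), features(target))])
gap_after = sq_norm([a - b for a, b in zip(features(shaded), features(target))])

# How much the pixels changed, versus simply swapping in the target image.
pixel_change = sq_norm(delta)
naive_change = sq_norm([b - a for a, b in zip(original, target)])

print("feature gap before/after:", gap_before, gap_after)
print("pixel change (shaded vs. naive swap):", pixel_change, naive_change)
```

In this toy setup the shaded vector ends up much closer to the target in feature space while changing the underlying values less than replacing the image outright would. That mirrors, in miniature, the trade-off the researchers describe.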

A shaded image of a cow in a green field can be transformed into what AI algorithms will interpret as a large leather purse lying in the grass, the researchers said. If artists provide enough shaded images of a cow to the algorithm, an AI model will start generating images of leather handles, side pockets with a zipper, and other unrelated compositions for cow-focused prompts.

Images processed through Nightshade are robust enough to survive traditional image manipulation, including cropping, compressing, noising, or pixel smoothing. Nightshade can work together with Glaze, another tool designed by the University of Chicago researchers, to further disrupt content abuse.

Glaze offers a "defensive" approach to content poisoning and can be used by individual artists to protect their creations against "style mimicry attacks," the researchers explained. Conversely, Nightshade is an offensive tool that works best if a group of artists gang up to try and disrupt an AI model known for scraping their images without consent.

The Nightshade team has released the first public version of the namesake AI poisoning application. The researchers are still testing how their two tools interact when processing the same image, and they will eventually combine the two approaches by turning Nightshade into an add-on for WebGlaze.

https://www.techspot.com/news/101600-nightshade-free-tool-thwart-content-scraping-ai-models.html