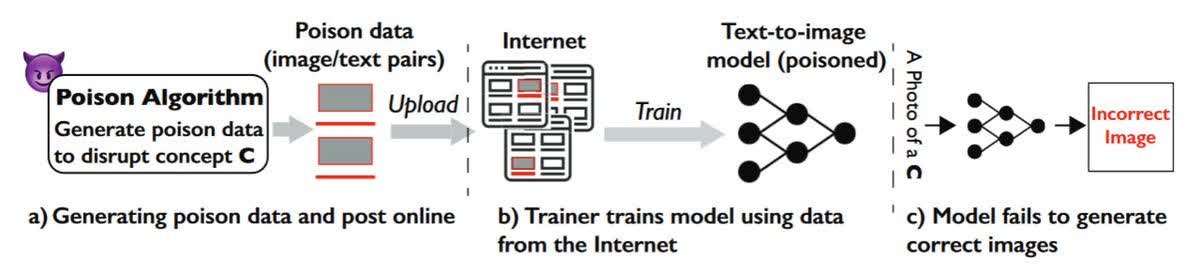

Why it matters: One of the many concerns about generative AIs is their ability to generate pictures using images scraped from across the internet without the original creators' permission. But a new tool could solve this problem by "poisoning" the data used to train the models.

MIT Technology Review highlights the new tool, called Nightshade, created by researchers at the University of Chicago. It works by making very small changes to the images' pixels, which can't be seen by the naked eye, before they are uploaded. This poisons the training data used by the likes of DALL-E, Stable Diffusion, and Midjourney, causing the models to break in unpredictable ways.
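To give a rough sense of how small such pixel changes can be, here is a minimal Python sketch that shifts each 8-bit channel value by at most a couple of levels out of 255. It is not Nightshade's method: the random noise is only a stand-in to illustrate the size of the change and would not poison anything, and the `perturb` function and its `epsilon` bound are hypothetical names chosen for this illustration.

```python
import random


def perturb(pixels, epsilon=2, seed=0):
    """Return a copy of `pixels` with each value shifted by at most `epsilon`.

    `pixels` is a flat list of 8-bit channel values (0-255). The noise is
    random and purely illustrative; it is an assumption of this sketch and
    not how Nightshade chooses its changes.
    """
    rng = random.Random(seed)
    out = []
    for value in pixels:
        delta = rng.randint(-epsilon, epsilon)
        # Clamp so the result is still a valid 8-bit value.
        out.append(min(255, max(0, value + delta)))
    return out


original = [0, 17, 128, 254, 255]
changed = perturb(original)

# The largest per-value difference never exceeds epsilon.
max_change = max(abs(a - b) for a, b in zip(original, changed))
print(max_change)
```

A shift of two levels out of 255 is well under one percent of the full range, which is why changes on this scale are hard to spot by eye.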

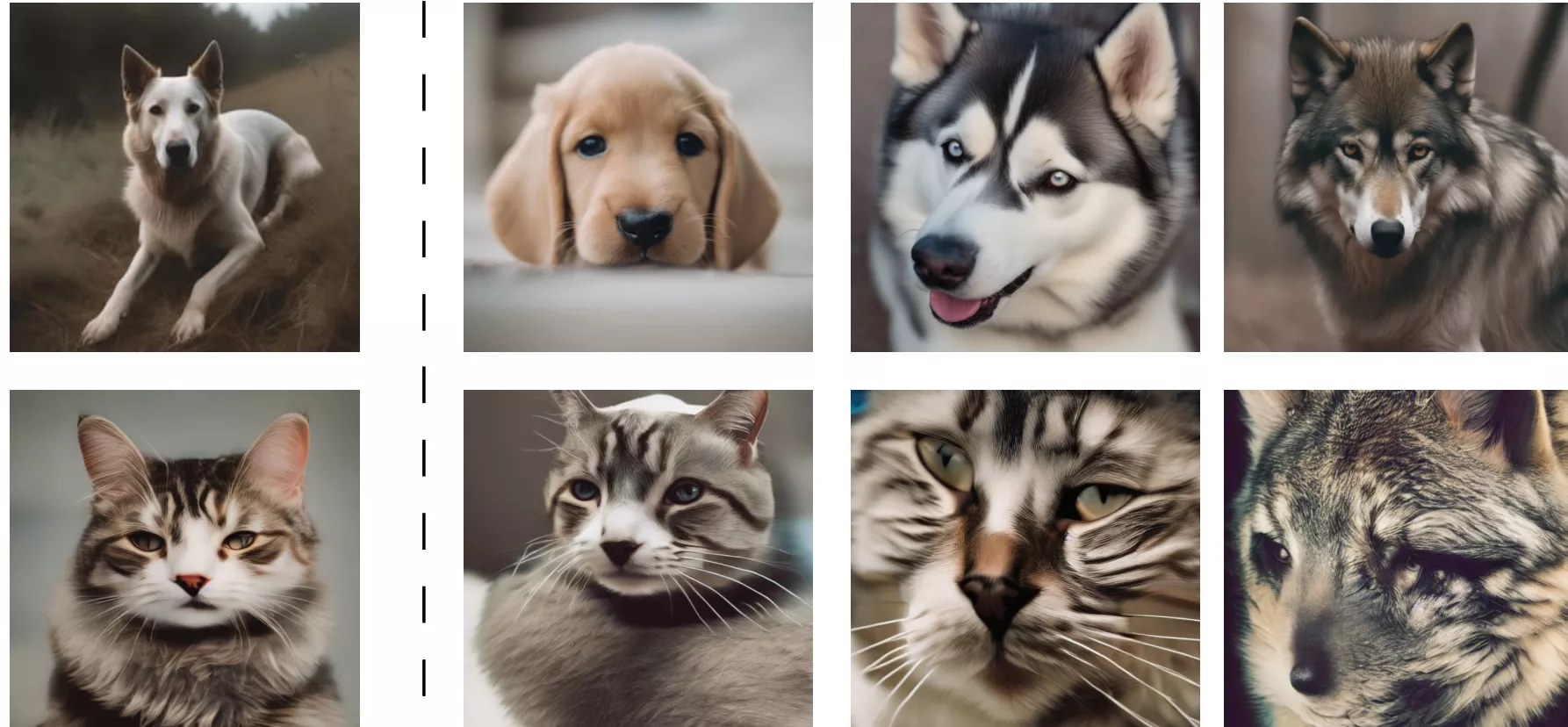

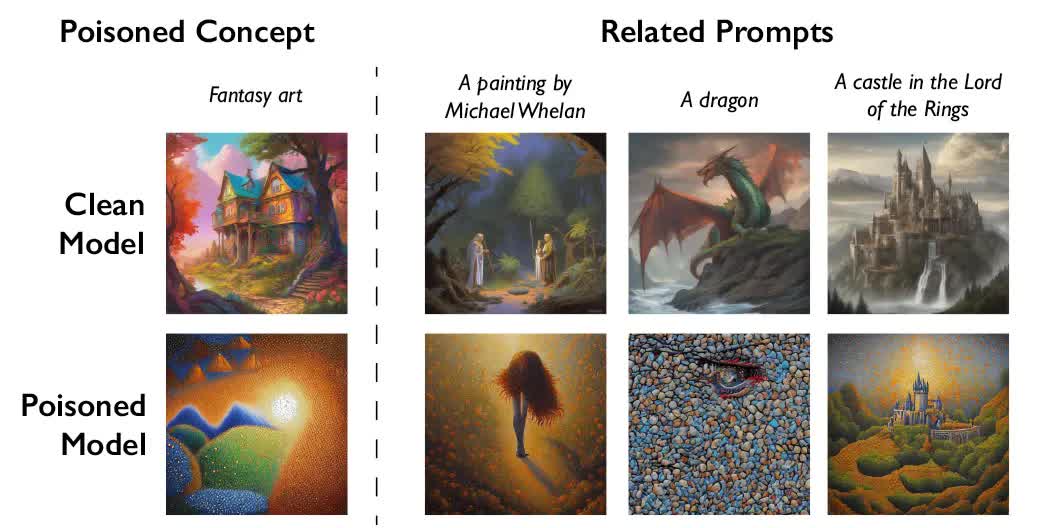

Some examples of how the generative AIs might incorrectly interpret images poisoned by Nightshade include turning dogs into cats, cars into cows, hats into cakes, and handbags into toasters. It also works when prompting for different styles of art: cubism becomes anime, cartoons become impressionism, and concept art becomes abstract.

Nightshade is described as a prompt-specific poisoning attack in the researchers' paper, recently published on arXiv. Rather than needing to poison millions of images, Nightshade can disrupt a Stable Diffusion prompt with around 50 samples, as the chart below shows.

Not only can the tool poison specific prompt terms like "dog," but it can also "bleed through" to associated concepts such as "puppy," "hound," and "husky," the researchers write. It even affects indirectly related images; poisoning "fantasy art," for example, turns prompts for "a dragon," "a castle in the Lord of the Rings," and "a painting by Michael Whelan" into something different.

Ben Zhao, a professor at the University of Chicago who led the team that created Nightshade, says he hopes the tool will act as a deterrent against AI companies disrespecting artists' copyright and intellectual property. He admits that there is the potential for malicious use, but says that inflicting real damage on larger, more powerful models would require attackers to poison thousands of images, as these systems are trained on billions of data samples.

There are also defenses against this practice that generative AI model trainers could use, such as filtering high-loss data, frequency analysis, and other detection and removal methods, but Zhao says they aren't very robust.
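As a rough illustration of the first of those defenses, filtering high-loss data, the Python sketch below drops the training samples with the highest loss. The idea is that samples a model fits poorly are more likely to be mislabeled or tampered with. The function name `filter_high_loss`, the `n_drop` parameter, and the sample names and loss values are all made up for this example; in practice the losses would come from the model being trained, and, as Zhao notes, this kind of filter is not very robust.

```python
def filter_high_loss(samples, losses, n_drop=1):
    """Drop the `n_drop` samples with the highest loss, keeping original order.

    `samples` and `losses` are parallel lists. Both the function and its
    parameters are hypothetical, chosen only to illustrate the idea.
    """
    if len(samples) != len(losses):
        raise ValueError("samples and losses must have the same length")
    if n_drop < 0 or n_drop > len(samples):
        raise ValueError("n_drop must be between 0 and len(samples)")

    # Rank indices from lowest to highest loss, then keep the lowest ones.
    ranked = sorted(range(len(samples)), key=lambda i: losses[i])
    kept = sorted(ranked[: len(samples) - n_drop])
    return [samples[i] for i in kept]


# Illustrative data: "img_c" has a much higher loss than the rest.
samples = ["img_a", "img_b", "img_c", "img_d", "img_e"]
losses = [0.21, 0.19, 3.70, 0.25, 0.22]

clean = filter_high_loss(samples, losses, n_drop=1)
print(clean)  # ['img_a', 'img_b', 'img_d', 'img_e']
```

A fixed cutoff like this only catches poisoned samples whose loss stands out. If they score about the same as clean images, they pass through.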

Some large AI companies give artists the option to opt out of their work being used in AI-training datasets, but it can be an arduous process that doesn't address any work that might have already been scraped. Many believe artists should have the option to opt in rather than having to opt out.