In context: Artificial general intelligence (AGI) refers to AI capable of human-like or even superhuman reasoning. Also known as "strong AI," AGI would sweep away the "weak" AI currently available on the market and usher in a new era of human history.

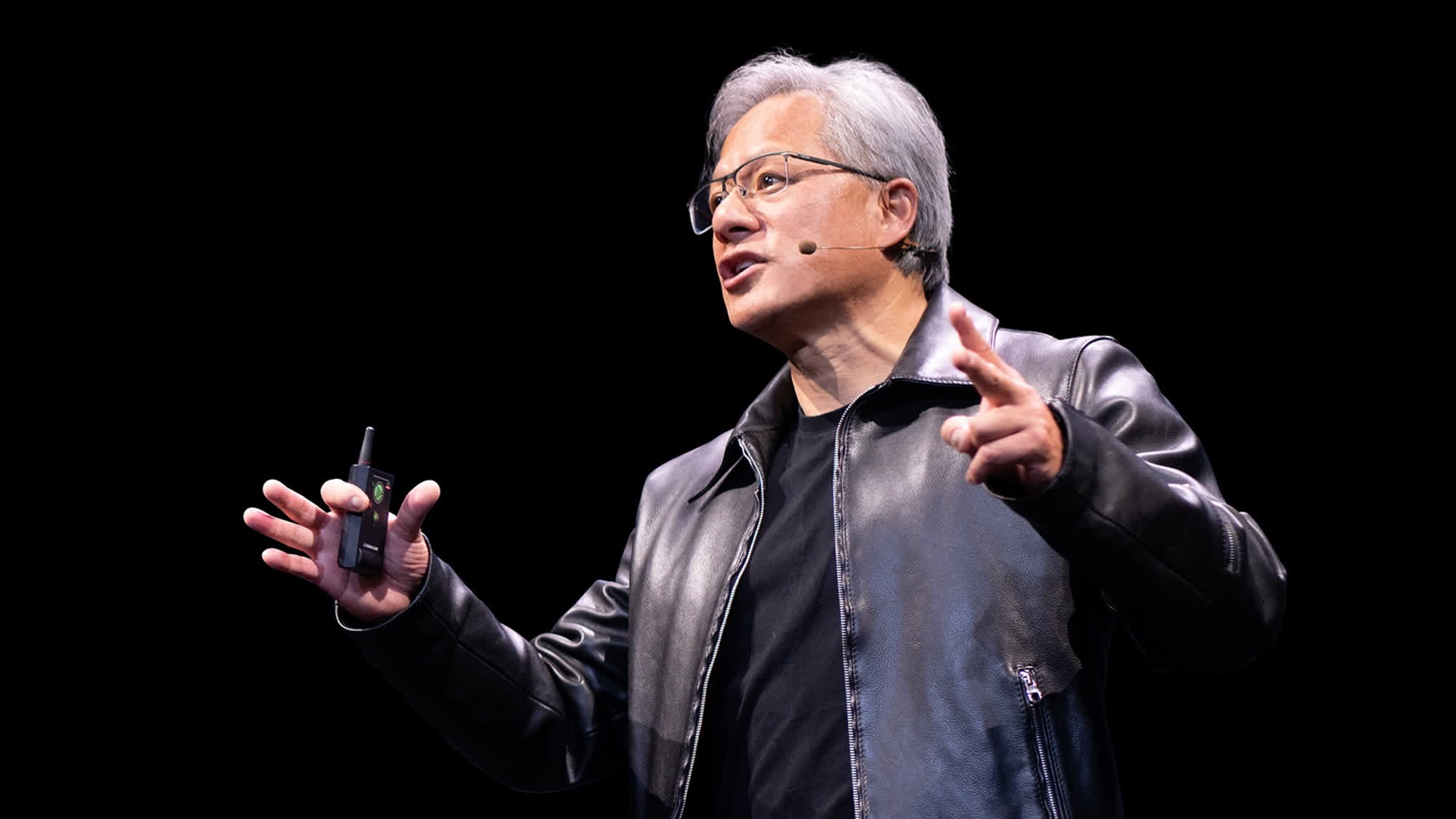

During this year's GPU Technology Conference (GTC), Nvidia CEO Jensen Huang talked about the future of artificial intelligence. Because Nvidia designs the overwhelming majority of the GPUs and AI accelerator chips in use today, Huang is frequently asked how AI will evolve and where it is headed.

Besides introducing the Blackwell GPU architecture and the new B200 and GB200 "superchips" for AI applications, Huang discussed AGI with the press. "True" artificial intelligence has been a staple of science fiction for decades, and many believe the singularity will come sooner rather than later now that lesser AI services are so cheap and accessible to the public.

Huang believes that some form of AGI will arrive within five years. However, science has yet to define artificial general intelligence precisely. Huang argued that we first need to agree on a specific definition of AGI, backed by standardized tests designed to demonstrate and quantify a software program's "intelligence."

If an AI algorithm can complete such tests "eight percent better than most people," it could be considered a credible AGI contender. Huang suggested that AGI tests could include legal bar exams, logic puzzles, economics tests, or even pre-med exams.

Beyond that test-based estimate, the Nvidia boss stopped short of predicting when, or if, a human-like reasoning algorithm could arrive, even though the press keeps asking him that very question. Huang also shared his thoughts on AI "hallucinations," a significant issue with modern ML models in which chatbots convincingly answer queries with baseless, hot (digital) air.

Huang believes that hallucinations are easily avoidable by forcing the AI to do its due diligence on every answer it provides. Developers should add new rules to their chatbots by implementing "retrieval-augmented generation" (RAG), in which the model looks up relevant sources before answering. In Huang's description, the AI then compares the facts it found in those sources with established truths. If an answer turns out to be misleading or unsupported, it should be discarded and replaced with the next one.
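Huang's description can be sketched as a retrieve-then-verify loop. The Python below is a minimal illustration under loud assumptions, not Nvidia's method or a production RAG pipeline: `CORPUS`, `retrieve`, `is_supported`, and `answer_with_verification` are hypothetical names, a naive word-overlap ranking stands in for a real retriever, and a word-subset check stands in for comparing facts against established truths. The candidate answers are assumed to arrive already ranked, as if sampled from a chatbot.

```python
from typing import List, Optional, Set

# Hypothetical toy document store standing in for a real retrieval source.
CORPUS = [
    "Blackwell is the name of Nvidia's GPU architecture announced at GTC.",
    "The B200 and GB200 are built on the Blackwell architecture.",
    "Jensen Huang is the CEO of Nvidia.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase, replace punctuation with spaces, and split into a set of words."""
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in text.lower())
    return set(cleaned.split())


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Return the k passages sharing the most words with the query (naive stand-in retriever)."""
    q = tokenize(query)
    ranked = sorted(corpus, key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def is_supported(answer: str, passages: List[str]) -> bool:
    """Assumed verification rule: every word of the answer must appear in a single retrieved passage."""
    a = tokenize(answer)
    return any(a <= tokenize(p) for p in passages)


def answer_with_verification(question: str, candidates: List[str]) -> Optional[str]:
    """Try candidates in ranked order, discard unsupported ones, return the first supported one."""
    passages = retrieve(question, CORPUS)
    for candidate in candidates:
        if is_supported(candidate, passages):
            return candidate
    # Nothing survived verification: decline rather than guess.
    return None
```

For example, `answer_with_verification("Who is the CEO of Nvidia?", ["Elon Musk", "Jensen Huang"])` discards the first candidate, which no retrieved passage supports, and returns `"Jensen Huang"`. Returning `None` when every candidate fails mirrors the "discard and move on" rule: the system declines instead of inventing an answer.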