Recap: It has been more than 26 years since the Internet Archive set about preserving all sorts of digital material, including software, games, movies, images and, of course, web pages. The Wayback Machine is the mechanism that handles the ever-increasing task of collecting and collating snapshots of the Internet, and it has come a long way since the mid-90s.

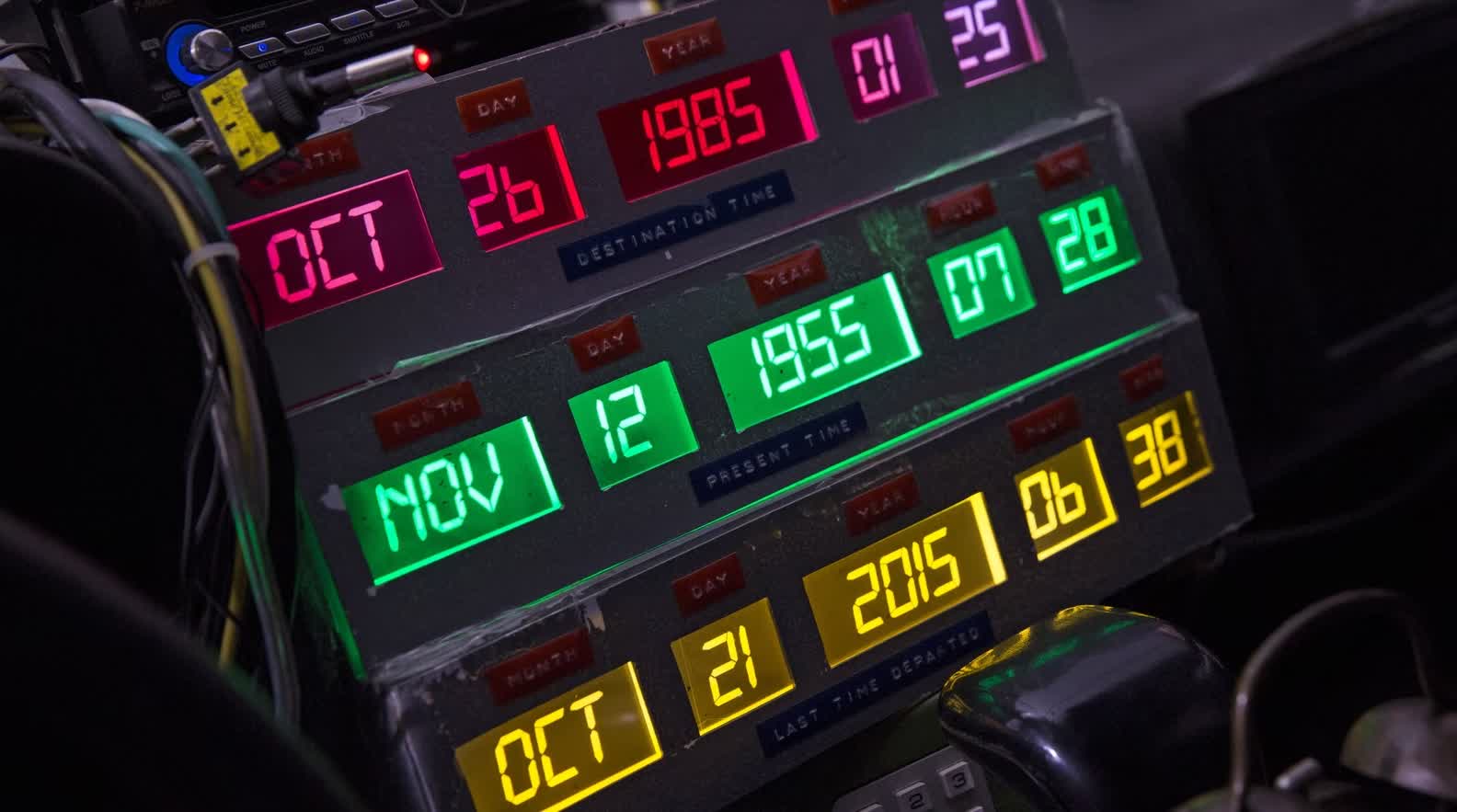

Think of the Wayback Machine as a virtual time machine. With it, you can travel back in time and view how websites looked at various points in the past. This can be immensely useful when performing research or fact-checking, and equally amusing when chronicling how web design has evolved over the years.
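For readers who want to try that kind of time travel programmatically, here is a minimal sketch of how an archived page is addressed. It relies on the Wayback Machine's public URL pattern (`https://web.archive.org/web/<timestamp>/<url>`) and its availability endpoint (`https://archive.org/wayback/available`). The helper names `snapshot_url` and `availability_query` are hypothetical, chosen for illustration, and the code only builds URLs; it makes no network requests.

```python
from datetime import datetime
from urllib.parse import urlencode

# Base addresses for the Wayback Machine replay pages and availability endpoint.
WAYBACK_REPLAY = "https://web.archive.org/web"
WAYBACK_AVAILABILITY = "https://archive.org/wayback/available"


def snapshot_url(page_url: str, when: datetime) -> str:
    """Build a replay URL for page_url near the given moment (hypothetical helper).

    The Wayback Machine resolves the 14-digit timestamp (YYYYMMDDhhmmss)
    to the closest capture it holds, so an exact match is not required.
    """
    timestamp = when.strftime("%Y%m%d%H%M%S")
    return f"{WAYBACK_REPLAY}/{timestamp}/{page_url}"


def availability_query(page_url: str, when: datetime) -> str:
    """Build a query URL asking for the capture closest to the given date (hypothetical helper)."""
    params = urlencode({"url": page_url, "timestamp": when.strftime("%Y%m%d")})
    return f"{WAYBACK_AVAILABILITY}?{params}"


if __name__ == "__main__":
    target_date = datetime(2006, 1, 1)
    print(snapshot_url("http://example.com/", target_date))
    print(availability_query("example.com", target_date))
```

Opening the first printed address in a browser should land on whichever capture is closest to the requested date, assuming one exists for that page.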

The Wayback Machine had managed to archive two terabytes of data after just one year, which was a massive amount of data at the time. These days, you could store all of that on a $30 USB flash drive and carry it around in your pocket.

Today, the Wayback Machine contains more than 700 billion web pages in its database and is approaching 100 petabytes. Unfortunately, the non-profit's work is not getting any easier, as paywalls and walled gardens like Facebook are making content increasingly difficult to capture. Will we have a neatly preserved record of today's social media activity 20 years from now?

Should the metaverse materialize as some predict, the Internet Archive will have to evolve its collection efforts accordingly or risk not cataloging what transpires in that digital medium.

Not everyone believes the organization has the right to do everything it does. When the Internet Archive launched the National Emergency Library with no waitlists at the start of the pandemic, several publishers said it amounted to willful mass copyright infringement.

The Internet Archive closed its emergency lending library early in hopes of avoiding a costly lawsuit, but publishers filed suit anyway. In July, both sides filed motions for summary judgment.