m3tavision


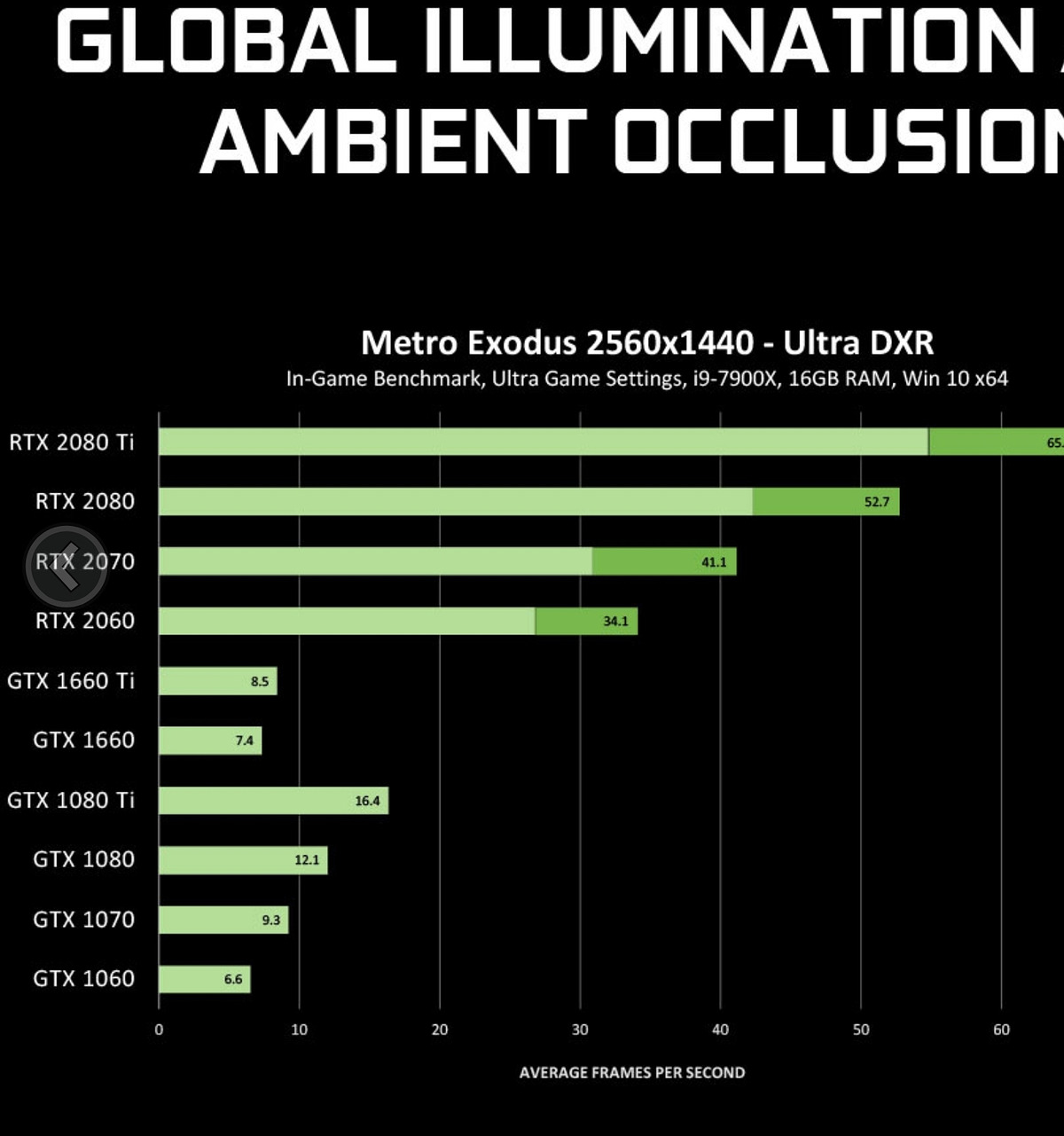

Funny that CoD: Modern Warfare supports DXR on Pascal on day one without any patch, and the performance on the 1080 Ti with DXR is horrible.

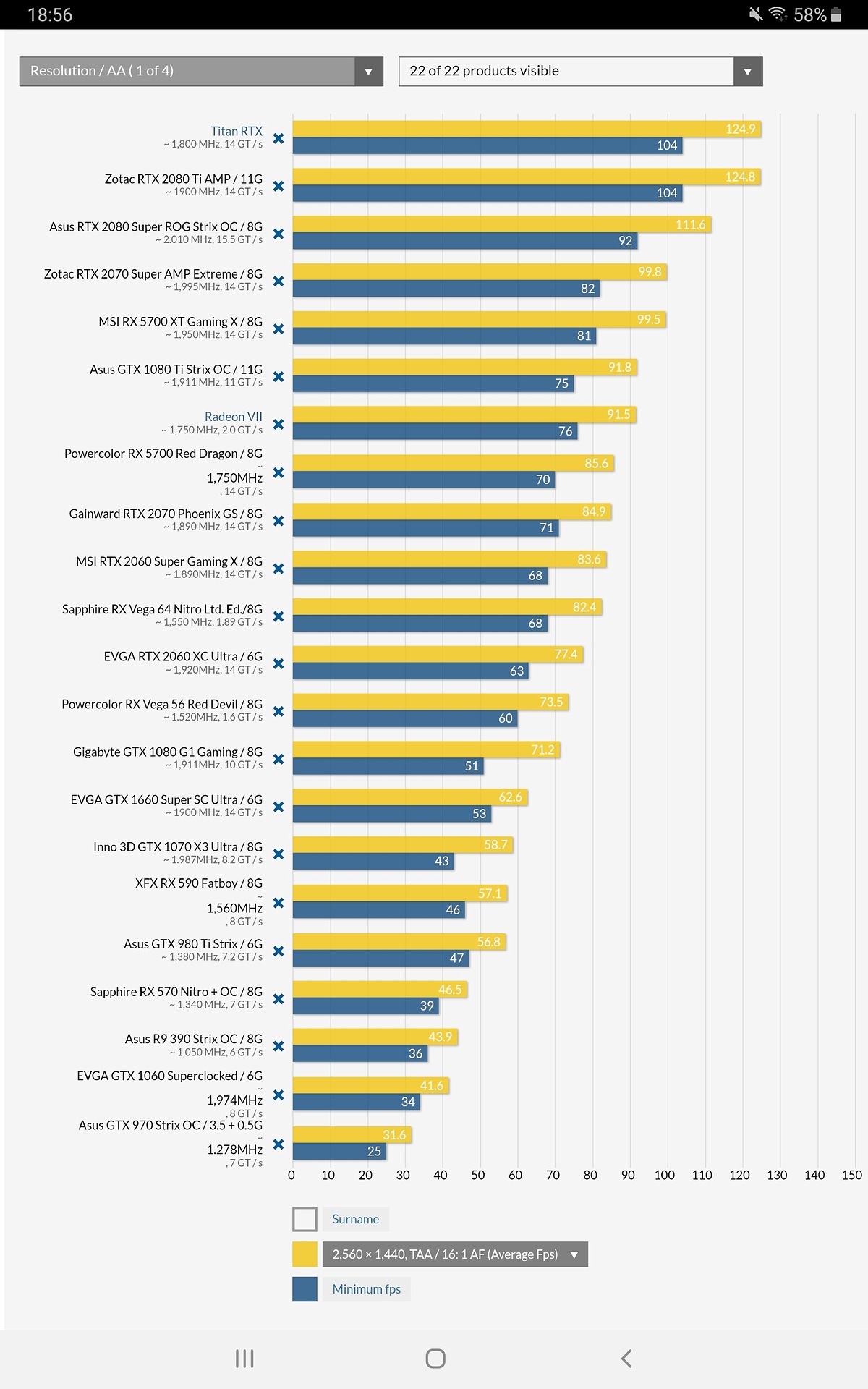

Without DXR

With DXR

Source https://www.pcgameshardware.de/Call...tracing-Test-Release-Anforderungen-1335580/2/

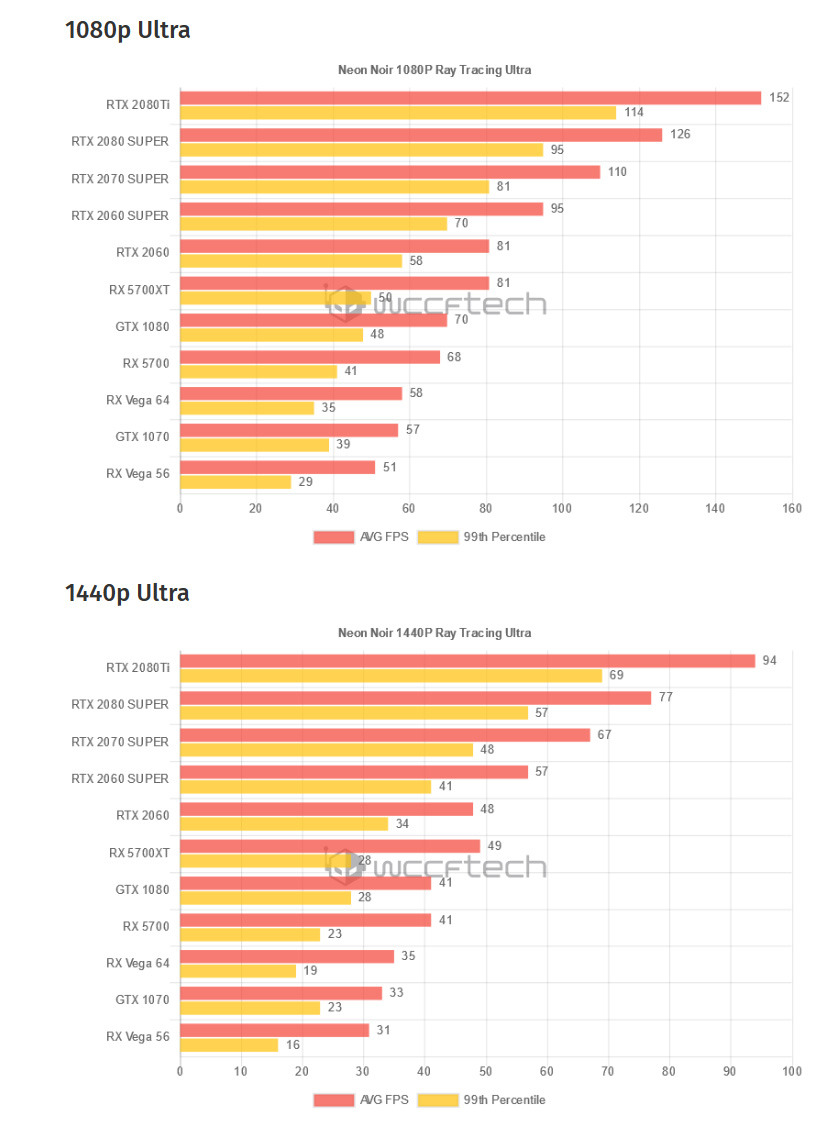

Yeah, the 1080 Ti is pretty much slower than or equal to the 2060 in any game with DXR on. Let's say AMD has the resources to support DXR; without the dedicated hardware there is just no hope for Navi to compete with RTX cards on DXR performance. That's why AMD is ignoring DXR for now and hoping that people prefer cheap rasterization performance. Good luck spending more money on a higher-end Navi that can't use DXR, though.

What does any of that have to do with what I said?

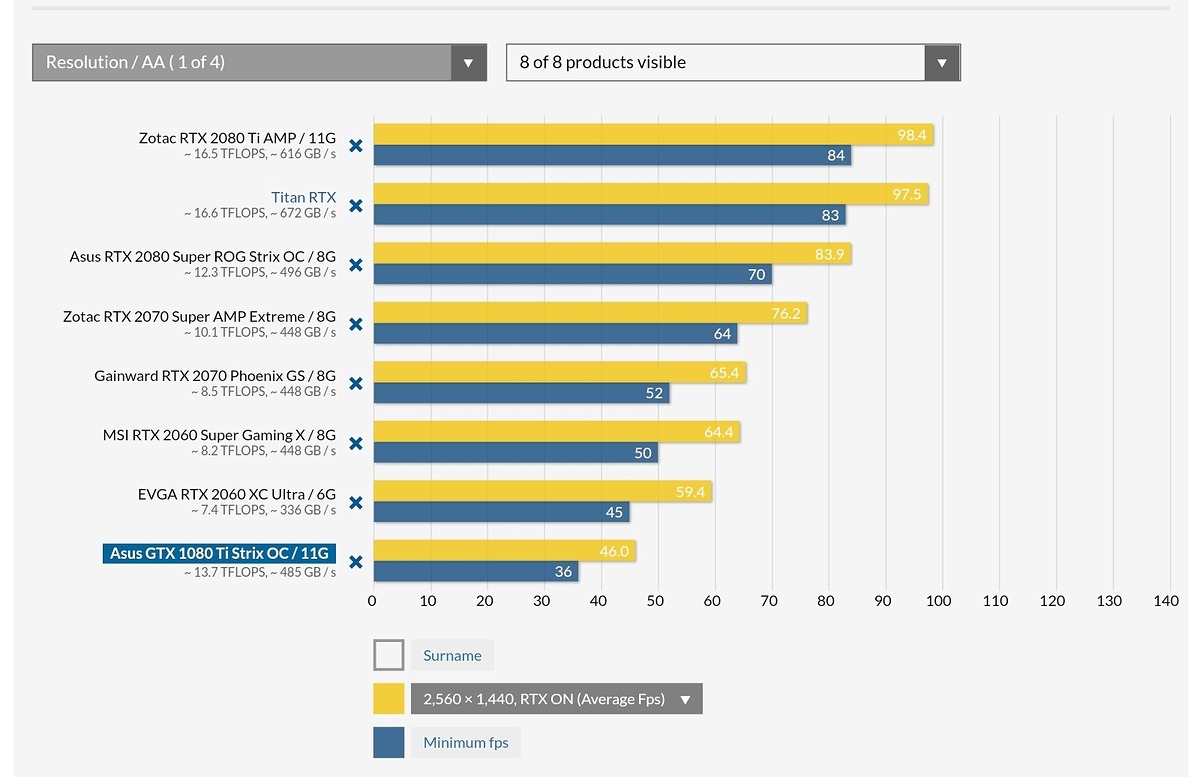

I am not refuting DXR in games! I am laughing at how few games (only a handful) make actual use of Turing's RT cores (i.e., "RTX On"), as most games are just straight DXR code and do not use the RT cores found in RTX cards. Developers are not going to write both RTX ray tracing and DXR ray tracing for each game. (Nvidia was paying engineers to work with these devs; many opted out, leaving egg on Jensen's face.)

Again, there is no such thing as "RTX On" when using DXR, because you are not turning on the RT cores found within RTX cards. Ironically, GTX cards can do ray tracing (DXR), but they don't have RT cores and therefore can't turn them on, so they don't have "RTX On".

Nvidia is using marketing to hide the fact that Turing isn't much more powerful than Pascal, and that the RT cores on these datacenter AI chips are a hoax, because they require special software to turn them on and make use of them. Developers are not going to spend their resources doing that when they have DXR, which works on all brands (Navi, Pascal and Turing).

Lastly, Navi can do DXR.

And better than Pascal, because Navi (and Turing) have async compute, while Pascal does not. There are benchmarks supporting everything I have said, and the mere fact that GTX cards are doing ray tracing proves my point about what a gimmick Nvidia's "RTX On" moniker really is, regarding Turing's RT cores.