With frantic combat and fluid mechanics, Titanfall is precisely the sort of game you'd want to play on max quality with no hiccups -- perhaps even across multiple graphics cards and monitors. That's easier said than done without support for SLI and CrossFire, one of several issues that have affected many players and prevented us from completing TechSpot's usual performance analysis.

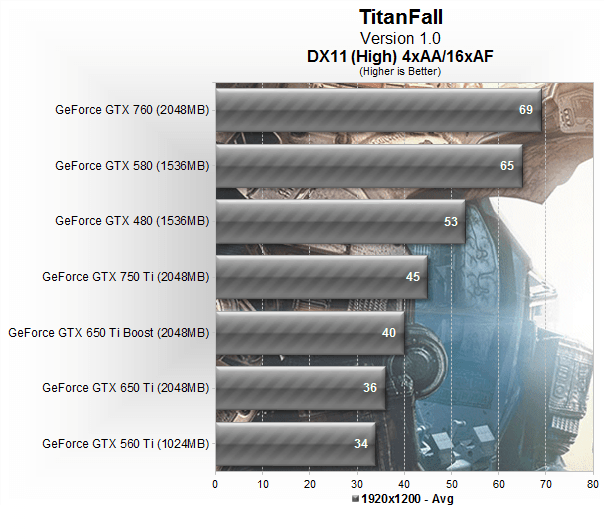

On the bright side, Respawn, Nvidia and AMD are working on updates and we're ready to test them as soon as they're available, though there doesn't seem to be a good estimate of when that will be. In the meantime, you should be able to gauge roughly where your rig stands from these results for GeForce cards running Titanfall at 1920x1200 on high settings with 4xAA and 16xAF.

At those settings, the GTX 580 and GTX 760 comfortably exceeded 60fps while the GTX 650 Ti Boost and GTX 750 Ti managed 40 and 45fps, respectively. Those numbers aren't too surprising considering the minimum specs call for an HD 4770 or 8800 GT, so most modern cards should be able to handle the game in some capacity, even if it means reducing certain settings. Stay tuned for our full breakdown.

https://www.techspot.com/news/56041-titanfalls-performance-iffy-but-benchmark-answer-questions.html