IGP, PCI, AGP, PCIe: future VR needs more bandwidth.

nGlide is a 3Dfx Voodoo Glide wrapper. It allows you to play games designed for 3Dfx Glide API without the need for having 3Dfx Voodoo graphics card. All three API versions are supported, Glide 2.11 (glide.dll), Glide 2.60 (glide2x.dll) and Glide 3.10 (glide3x.dll). nGlide emulates Glide...

www.zeus-software.com

If S3 cards had succeeded in the market, we would not have needed ATI, Nvidia and Matrox GPUs so much back then. Voodoo was great but too expensive.

3dfx cards like the Voodoo 1, 2, 3, 4 and 5 were great. The nGlide tool can now be used to emulate 3dfx Glide gaming.

Playing on that GPU was LIKE playing in D3D, and in some games even "melted stone" textures looked perfect. The PS1 GPU was low end.

But a 3dfx card in a PC ran games like Croc 1 and 2 better.

Visible pixels went away with better rendering. Some AGP 8x cards could only run low-res games, while PCIe could run double that. We missed out on AGP 16x, since it was never released.

If AGP 16x, 32x, 64x or 128x had come out and competed with PCIe, AGP might have taken a leading role.

When Nvidia bought 3dfx, they could use D3D, OpenGL and later PCIe to make gaming GPUs look better. A 4 MB S3 ViRGE 375/385 could run many 3dfx titles at low settings in OpenGL or D3D. By the time 3dfx was taken away, it had lost the competition to Nvidia and later ATI. Those cards were fast for their time. I was playing Carmageddon 1 and 2 on a Pentium 60 with 128 MB RAM and a Sound Blaster 128 sound card. Trying that on a 4 MB card now, it would simply crash. Even on a Pentium 233 the games ran well. In Shadow Man you could run in all modes. Resident Evil had many GPU selection modes too. RE 2 could switch between low and high settings and turn off some zombies in-game for less rendering time. RE 3 was more future-proofed.

Osaka-based M-Two is leading development on the latest series reimagining…

www.videogameschronicle.com

RE 4 was no longer like RE 1, 2 and 3. It changed many games to be picture perfect and no longer low res.

The fine thing is that even Doom E with its expansion pack can now run as low as 320x200 (PS1-like) and as high as 4K, or on dual 2K/4K screens to get 5K, 6K or 8K support.

If Windows 98 SE could be brought back, we could play many old games today. But most of those games are in FAT16 mode and can't even run. FAT32 was taking over, and games made for FAT16 were not brought along; they were left out in the cold. Try getting Doom 95 running.

PowerVR GPUs were also out, so talk about those too.

en.wikipedia.org

www.zeus-software.com

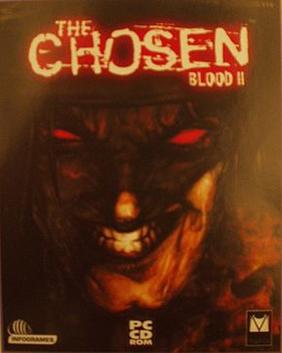

Blood and Shadow Warrior have gotten remakes,

en.wikipedia.org

while Blood 2 stands still.

en.wikipedia.org

https://www.gog.com/game/blood_2_the_chosen_expansion

I'm not promoting or selling these games, just showing what they are and where they stand going forward: better rendering, faster CPUs, GPUs and chipsets, and PCIe 1 through 7 released over the 20xx years.