Rumor mill: We're still several weeks away from the official reveal of the RX 7600. Gamers have been waiting for a mainstream graphics card to shake things up in the GPU market, and an early leak suggests the new AMD card has the potential to do just that. That is, if Team Red is willing to price it close to the $300 mark.

Earlier this week, we learned that AMD's traditional AIB partners like Sapphire and PowerColor are gearing up for the launch of the Radeon RX 7600 graphics card. Supply chain insiders believe the new Team Red offering will break cover at Computex, so it won't be too long before we see a more affordable RDNA 3 product.

In the meantime, the rumor mill is abuzz with hints about the capabilities of the RX 7600, which is likely to be positioned as a competitor for Nvidia's RTX 4060. The already-launched laptop version of the Navi 33 GPU suggests it will feature 32 compute units (2048 unified shaders) paired with eight gigabytes of GDDR6 over a 128-bit memory bus.

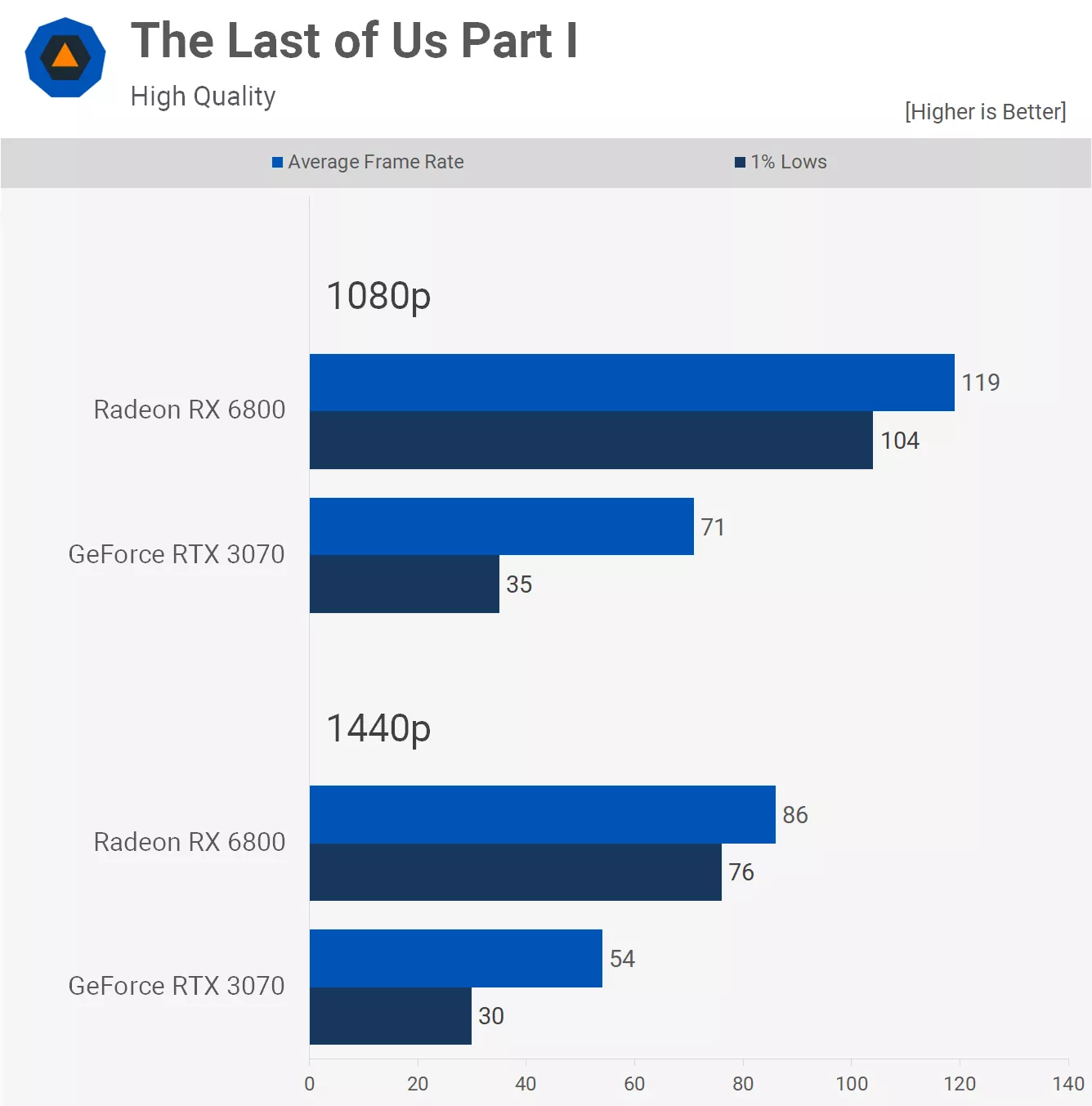

According to Moore's Law is Dead (MLID), an engineering sample of the new card performs at least 11 percent better than the RX 6650 XT using pre-release drivers. MLID claims to have spoken to a person with direct access to the new hardware but, like all rumors, this should be taken with a healthy dose of salt.

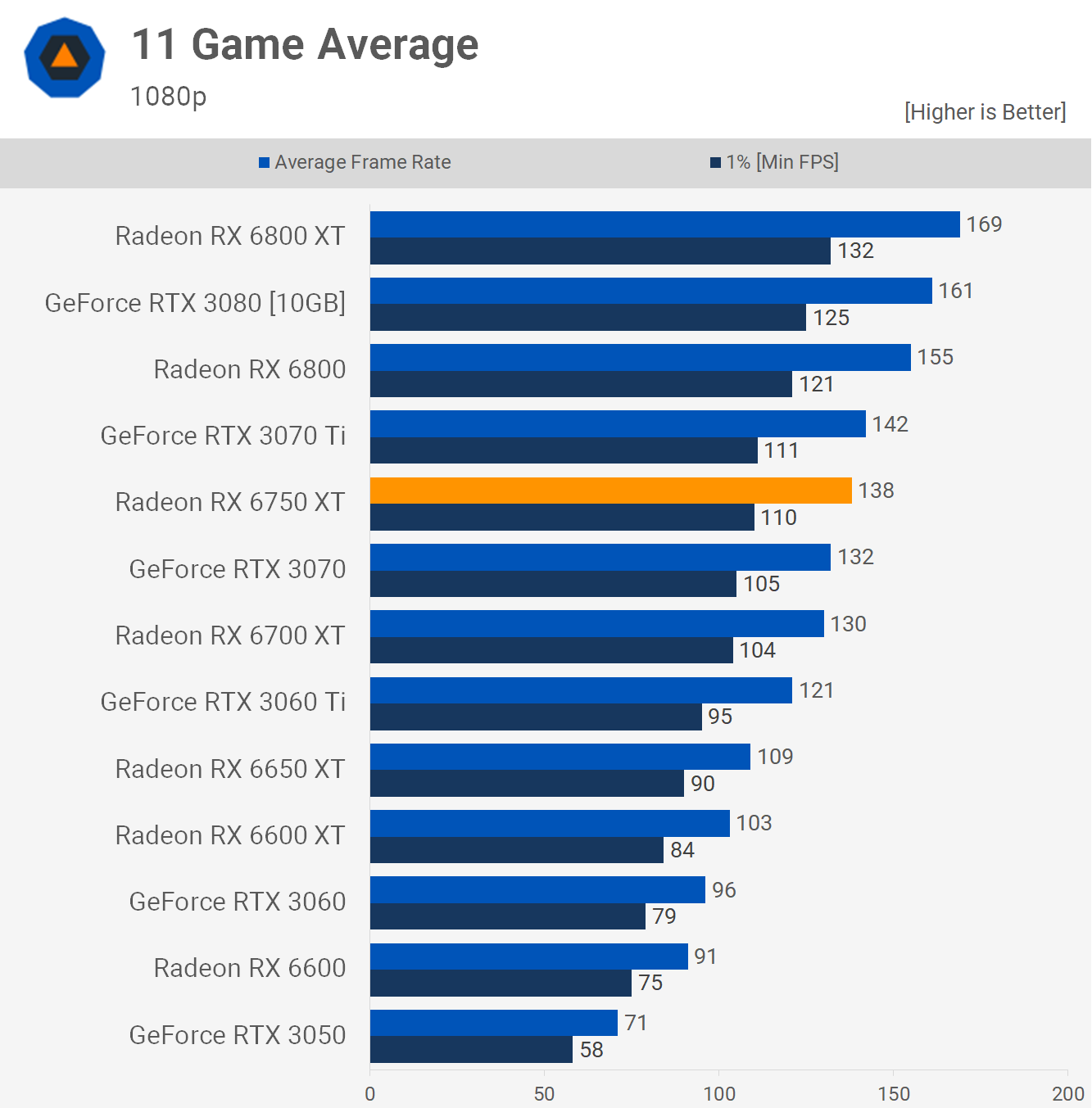

If true, however, it could mean the final product has a chance of slotting in between the RX 6700 and RX 6750 XT in terms of performance once drivers are optimized. MLID's source also claimed the RX 7600 sample achieved boost clocks above 2.6 GHz and drew around 175 watts during gaming benchmarks. For reference, the RX 6650 XT draws around 180 watts. Since a single 8-pin PCIe connector (150 watts) plus the PCIe slot (75 watts) can deliver up to 225 watts, the RX 7600 could also need just one 8-pin connector for external power.
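To put the leaked numbers in perspective, here is a rough back-of-the-envelope sketch of what they would imply for efficiency. It assumes the rumored figures hold (11 percent more performance at roughly 175 watts versus roughly 180 watts for the RX 6650 XT); the function and constant names are made up for illustration, and none of this is measured data.

```python
def perf_per_watt_gain(rel_perf, new_watts, old_watts):
    """Return the new card's performance per watt relative to the old card.

    rel_perf is the new card's performance with the old card normalized to 1.0.
    A result of 1.0 means both cards are equally efficient.
    """
    return (rel_perf / new_watts) / (1.0 / old_watts)


# Rumored figures from the leak (assumptions, not benchmark results).
RX7600_REL_PERF = 1.11    # "at least 11 percent" faster than the RX 6650 XT
RX7600_WATTS = 175.0      # approximate draw during gaming benchmarks
RX6650XT_WATTS = 180.0    # approximate draw quoted for reference

gain = perf_per_watt_gain(RX7600_REL_PERF, RX7600_WATTS, RX6650XT_WATTS)
print(f"Relative performance per watt: {gain:.2f}x")
```

Under those assumptions, the RX 7600 sample would land at about 14 percent better performance per watt, with most of that coming from the performance uplift and very little from the lower power draw.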

Still, the price is what will make or break this product as gamers are no longer rushing to buy GPUs at exorbitant prices. Given the smaller die size for the Navi 33 GPU (204 sq mm) when compared to Navi 23 (237 sq mm), AMD should be able to cram more units of the newer GPU onto a wafer, meaning it will at least be more cost-effective to manufacture.
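For a sense of scale, the die-size difference can be turned into a rough candidates-per-wafer estimate. The sketch below uses a common textbook approximation for gross dies per wafer and assumes a standard 300 mm wafer for both chips; it ignores yield, scribe lines, edge exclusion, and any difference in wafer cost between process nodes, so treat the output as illustrative only. The function name is hypothetical.

```python
import math


def gross_dies_per_wafer(die_area_mm2, wafer_diameter_mm=300.0):
    """Estimate gross dies per wafer with a common approximation.

    dies ~= (wafer area / die area) - (wafer circumference / sqrt(2 * die area))
    The second term roughly accounts for partial dies lost at the wafer edge.
    Yield and scribe-line losses are NOT modeled.
    """
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(wafer_area / die_area_mm2 - edge_loss)


# Die sizes as quoted in the article; the 300 mm wafer is an assumption.
navi33 = gross_dies_per_wafer(204.0)
navi23 = gross_dies_per_wafer(237.0)

print(f"Navi 33: ~{navi33} candidate dies per wafer")
print(f"Navi 23: ~{navi23} candidate dies per wafer")
print(f"Ratio:   {navi33 / navi23:.2f}x")
```

By this estimate, the smaller die yields noticeably more candidates per wafer, which supports the point that Navi 33 should be cheaper to produce per chip, all else being equal.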

We expect the MSRP to fall somewhere in the $300 to $350 range, especially since eight gigabytes of VRAM is now decidedly low-end. Ideally, we'd like to see that little memory only on sub-$200 products, but that's hardly possible when companies like Nvidia are doing everything in their power to prevent prices from dropping to more acceptable levels for most consumers.

https://www.techspot.com/news/98454-leak-suggests-radeon-rx-7600-could-reach-rx.html