Not long after sharing its 32nm processor plans with the press earlier this year, Intel revealed that it had begun shipping the first Westmere engineering samples to a select group of laptop and desktop PC manufacturers for testing. As is often the case, one tech site seems to have gotten hold of these parts a little early and run some quick benchmarks for us to look at.

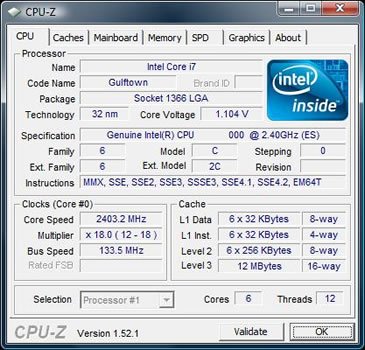

The part in question is a 32nm six-core chip, known as Gulftown on the roadmaps and scheduled to debut in Q2 2010. If you are having a hard time keeping up with Intel's codenames, Westmere is basically a die shrink of the current 45nm Nehalem family of processors (released as Core i7), and Gulftown will be the desktop variant aimed at the high-end enthusiast segment. Featuring 6 cores and 12 threads with Hyper-Threading enabled, it holds 12MB of L3 cache to support the additional data load over the QuickPath Interconnect.

It is designed exclusively for socket LGA 1366 and retains compatibility with the X58 chipset that drives all Core i7 motherboards today. Chinese site HKEPC tested a 2.4GHz part and came to some interesting, though not quite surprising, conclusions. First, the six-core beast runs cooler and draws less power than current Core i7 chips. Second, while there is definitely a step up in processing power, most software isn't ready to fully benefit from the additional cores.
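To put a rough number on why extra cores don't automatically help, here is a minimal sketch based on Amdahl's law, which estimates the ideal speedup when only part of a program can run in parallel. It is an illustration and not something taken from the HKEPC report: the function names and the parallel fractions are assumptions chosen for the example, and it ignores Hyper-Threading, clock speed, cache and memory effects.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over a single core under Amdahl's law.

    parallel_fraction is the share of the work that scales with core count
    (an assumed figure here, not a measured one).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def gain_from_extra_cores(parallel_fraction: float,
                          old_cores: int = 4,
                          new_cores: int = 6) -> float:
    """Relative gain from moving between core counts at the same clock speed."""
    return (amdahl_speedup(parallel_fraction, new_cores)
            / amdahl_speedup(parallel_fraction, old_cores))


if __name__ == "__main__":
    # Hypothetical workloads: half parallel, mostly parallel, fully parallel.
    for fraction in (0.5, 0.9, 1.0):
        gain = gain_from_extra_cores(fraction)
        print(f"{fraction:.0%} parallel: {gain:.2f}x going from 4 to 6 cores")
```

Under these assumptions, a program that is only half parallel gains about 7% from the two extra cores, one that is 90% parallel gains 30%, and only a perfectly parallel workload sees the full 50%.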

As a result, the chip will likely show its worth in specific tasks such as professional image and video editing, but with an expected price between $1,000 and $1,500, it is not necessarily the best investment as far as gaming is concerned. You can find a translated version of the complete report here.

It's also worth mentioning that unlike the upcoming mainstream desktop and mobile variants, known as Clarkdale and Arrandale respectively, Gulftown will not include an integrated graphics core alongside the 32nm CPU.