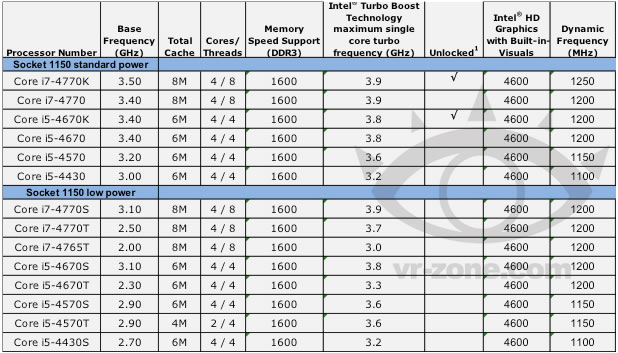

It has come to our attention that Intel will be releasing 14 different Haswell desktop processors spanning the Core i5 and Core i7 brands next year. The next-generation chips will use a new socket, so if you are looking to upgrade from your existing setup, you'll have to spring for a new motherboard with a Lynx Point chipset.

VR-Zone got its hands on the Intel lineup, which includes six standard processors and eight low-power CPUs. The top-of-the-line chip will be the Intel Core i7-4770K, with a base clock speed of 3.5GHz across all four cores. The chip also supports Hyper-Threading, so expect eight processing threads in total. It can ramp up to 3.9GHz using Turbo Boost and carries 8MB of cache.

Interestingly enough, the i7-4770K has a higher TDP than current-generation Ivy Bridge chips (84 watts versus 77 watts), even though Haswell was designed to take full advantage of the power-saving benefits of the tri-gate transistor technology that Intel first used in Ivy Bridge.

Furthermore, since this is a "K" series SKU with an unlocked multiplier, users should be able to overclock the chip without much effort. The only other K series chip in the lineup is the i5-4670K, which is clocked at 3.4GHz and has 6MB of total cache on board. Only the Core i7 chips offer eight threads; the Core i5 chips are configured as either 4/4 or 2/4 (cores/threads).

Haswell is expected to debut sometime in the first half of 2013. It uses the same 22nm process that Intel introduced with Ivy Bridge, but it is built on a new microarchitecture that is expected to improve performance.