Preparing for the upcoming launch of AMD's Radeon HD 8000 series, which will presumably kick off with a single-GPU flagship leading the charge, Nvidia is reportedly hoping to steal some of its rival's thunder by releasing a new card that will exist between today's GeForce GTX 680 and the dual-GPU GTX 690.

According to several sources speaking with SweClockers, Nvidia's newcomer will appear late next month for $899 as the GeForce Titan, a neat reference to the Titan supercomputer built by Cray at the Oak Ridge National Laboratory, which comprises 18,688 nodes equipped with Nvidia's Tesla K20X GPU.

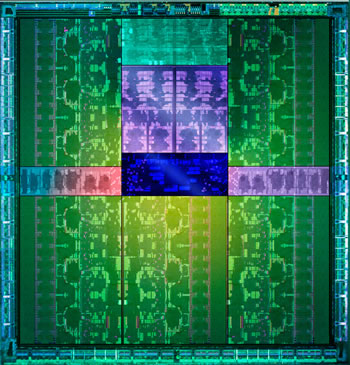

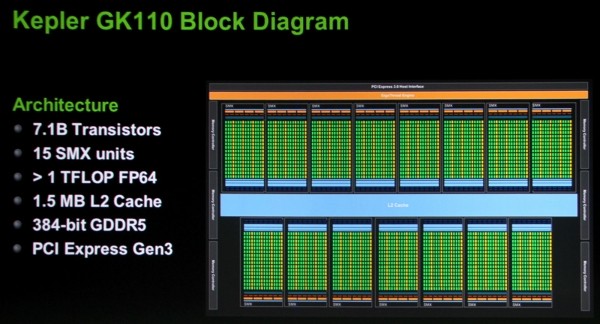

Instead of using the GTX 600 series' GK104 GPU, the Titan will be armed with the GK110, which powers Nvidia's enterprise-class Tesla range, though the Titan's chip will have at least one SMX unit disabled from the top configuration, leaving it with 2688 CUDA cores (still over a thousand more than the GTX 680 has).

It's also said that the Titan will have a clock rate of 732MHz (200 to 300MHz lower than the GTX 680 and 690), while its 6GB of GDDR5 VRAM will run at 5.2GHz and have a 384-bit bus (50% wider than the GK104 offers). All told, the card will supposedly be 15% slower and at least 10% cheaper than the GTX 690.
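For a rough sense of what those rumored numbers imply, here is a minimal Python sketch. It assumes the quoted 5.2GHz is the effective GDDR5 data rate, infers a 256-bit GK104 bus from the "50% wider" claim, and treats "15% slower" and "10% cheaper" as exact ratios. The function names are purely illustrative.

```python
def memory_bandwidth_gbs(bus_width_bits, effective_rate_ghz):
    """Peak memory bandwidth in GB/s: bytes per transfer times transfers per ns."""
    return bus_width_bits / 8 * effective_rate_ghz


def relative_value(relative_performance, relative_price):
    """Performance per dollar relative to a baseline card (baseline = 1.0)."""
    return relative_performance / relative_price


# Rumored figures from the article (assumption: 5.2GHz is the effective rate).
rumored_bandwidth = memory_bandwidth_gbs(384, 5.2)

# A 384-bit bus being "50% wider" implies a 256-bit bus on the GK104.
gk104_bus_bits = 384 / 1.5

# 15% slower and 10% cheaper than the GTX 690 (assumption: exact ratios).
value_vs_gtx690 = relative_value(0.85, 0.90)

print(f"Peak bandwidth: {rumored_bandwidth:.1f} GB/s")
print(f"Implied GK104 bus width: {gk104_bus_bits:.0f}-bit")
print(f"Performance per dollar vs. GTX 690: {value_vs_gtx690:.2f}x")
```

On those assumptions, the rumored memory setup works out to roughly 250GB/s of peak bandwidth, and the card would deliver about 94% of the GTX 690's performance per dollar, or more if it ends up more than 10% cheaper.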

Assuming those figures are accurate, such a value discrepancy would probably be justifiable considering that the GTX 690 has a 300W TDP, while the Titan should consume less given that the Tesla K20X is rated at 235W, not to mention the reduced hassle of not dealing with an SLI-based card.

Update: As noted by dividebyzero in the comments, the specs struck through above are for the Tesla K20X; unfortunately, the GeForce Titan's configuration hasn't been revealed yet. For whatever it's worth, the estimate that the new card will be roughly 15% slower than the GTX 690 still seems to be relevant.