With 2012 delivering the industry's first 28nm GPUs from both AMD and Nvidia, we enjoyed watching the bitter ritual of one-upmanship as the titans scrambled to earn your cash. After a year's worth of staggered releases, price cuts, exclusive deals and driver updates, the dust finally settled enough last November for us to run a generational comparison between the Radeon HD 7000 and GeForce GTX 600 series.

Based on pricing and performance at the time, AMD's HD 7850 was the best $150 to $200 option, while Nvidia's GTX 660 Ti was the best solution when spending $250 to $300. Those parting with $400 and up were best off with the HD 7970, as it matched the GTX 680's performance but was almost $100 cheaper – a stark contrast to our original findings, if you care to revisit the GTX 680's review.

Considering next-gen cards are still months away, we didn't expect to bring you any more GPU reviews until the second quarter of 2013. However, we realized there was a gap in our current-gen coverage: triple-monitor gaming. In fact, it's been almost two years since we pitted the HD 6990 and GTX 590 against each other to see how they could cope with the stress of running games at resolutions of up to 7680x1600.

Battlefield: Bad Company 2, Crysis, Civilization V, Dragon Age 2, and Mafia 2...

We're going to mix things up a little this time. Instead of using each camp's ultra-pricey dual-GPU card (or the new $999 Titan), we're going to see how more affordable Crossfire and SLI setups handle triple-monitor gaming compared to today's single-GPU flagships.

On AMD's side, we'll test a pair of HD 7850s (~$360) and an HD 7970 (~$430), while Nvidia's corner will feature two GTX 660 Tis (~$580) and the venerable GTX 680 (~$470).

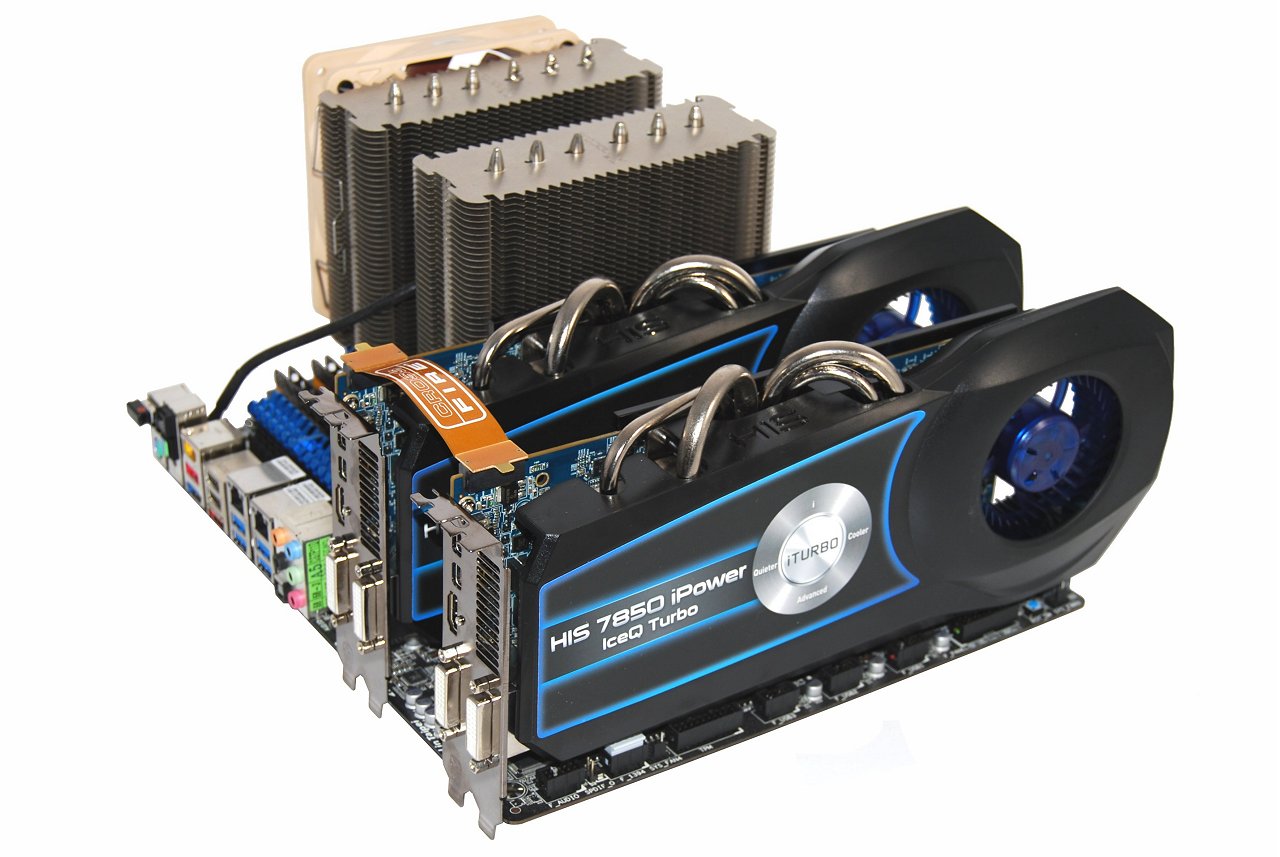

We also have a pair of the new HIS Radeon HD 7850 iPower IceQ Turbo graphics cards in house, which are the first 7850s with 4GB of memory. The extra memory buffer is designed to help at high resolutions and when using high levels of anti-aliasing, so it'll also be interesting to see whether the 4GB cards can justify their ~50% price premium over the standard 2GB models when playing at resolutions such as 5760x1080.

Test Setup and System Specs

We tested with three Dell 3008WFP 30-inch LCD monitors that support a native resolution of 2560x1600. Once the monitors were connected to the graphics cards, creating a group configuration was easy. Both AMD and Nvidia drivers automatically added extra resolutions such as 7680x1600, 5760x1200 and 5040x1050 (our tests were only performed at 5760x1200 and 5040x1050).
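To put those grouped resolutions in perspective, here is a minimal Python sketch of the arithmetic behind them. It assumes three identical panels placed side by side in landscape with no bezel compensation; the per-panel resolutions are inferred by dividing each grouped width by three, and the `Resolution` class and `spanned` helper are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resolution:
    width: int
    height: int

    @property
    def pixels(self) -> int:
        # Total pixels the GPU has to render per frame.
        return self.width * self.height

    def __str__(self) -> str:
        return f"{self.width}x{self.height}"


def spanned(panel: Resolution, count: int = 3) -> Resolution:
    """Combine `count` identical panels side by side (landscape, no bezel correction)."""
    return Resolution(panel.width * count, panel.height)


# Per-panel resolutions implied by the grouped modes (assumption: width / 3).
PANELS = [Resolution(2560, 1600), Resolution(1920, 1200), Resolution(1680, 1050)]

for panel in PANELS:
    group = spanned(panel)
    print(f"{group}: {group.pixels / 1e6:.2f} megapixels")
```

Under these assumptions, 5760x1200 works out to roughly 6.9 million pixels per frame and 5040x1050 to about 5.3 million, while the native 7680x1600 group is close to 12.3 million.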

- Intel Core i7-3960X Extreme Edition (3.30GHz)

- 4x 2GB G.Skill DDR3-1600 (CAS 8-8-8-20)

- Asrock X79 Extreme11 (Intel X79)

- OCZ ZX Series (1250W)

- Crucial m4 512GB (SATA 6Gb/s)

- Gainward GeForce GTX 680 (2GB)

- Gigabyte Radeon HD 7970 (3GB)

- HIS Radeon HD 7850 iPower IceQ Turbo (4GB) Crossfire

- AMD Radeon HD 7850 (2GB) Crossfire

- Gainward GeForce GTX 660 Ti Phantom (2GB) SLI

- Nvidia GeForce GTX 660 Ti (2GB) SLI

On the software side of things, we used Windows 7 64-bit with the Nvidia Forceware 313.96 and AMD Catalyst 13.2 graphics drivers.