Did you know it's been two years since Nvidia introduced its GeForce RTX 20 series GPUs? Incredibly, it has been that long, and in case you somehow haven't heard, next month Nvidia will start rolling out its next generation of GPUs, a.k.a. Ampere, which is rumored to be much more powerful.

That's certainly exciting, and it will keep us busy in the weeks and months to come. Except that we've got a tough choice to make. You see, these new graphics cards will support PCI Express 4.0, but our GPU test system, which we only recently upgraded to a Core i9-10900K, doesn't; it's limited to PCIe 3.0.

So we can either stay the course and do what we've always done, testing with the fastest gaming CPU available at the time and investigating PCIe 4.0 performance on the side. In that case we'd be hoping PCIe 4.0 makes no difference, or else we'd eventually have to redo all our GPU data on a PCIe 4.0 compliant platform.

Or, for the first time ever, we could switch the GPU test system over to AMD, knowing that in some instances we might sacrifice a little performance, but in return we'd have apples-to-apples data comparing older PCIe 3.0 graphics cards against the new PCIe 4.0 models in a wide range of games at multiple resolutions.

Another advantage of going with the Ryzen 9 3950X is that we'd be using the same processor architecture as many of you on the desktop. That one is debatable, but overall, if we look at what you buy after reading our reviews, sales of Intel's 8th, 9th and in particular 10th-gen Core desktop CPUs haven't been good. That's another reason for us to start testing GPUs with AMD; it's not the primary reason, but it is a reason.

PCI Express 4.0 support is not a gimmick, and we suspect that in the not-too-distant future it will hand AMD a performance advantage. Of course, Intel plans to support PCIe 4.0 with its next generation, but in the meantime the technology is exclusive to AMD.

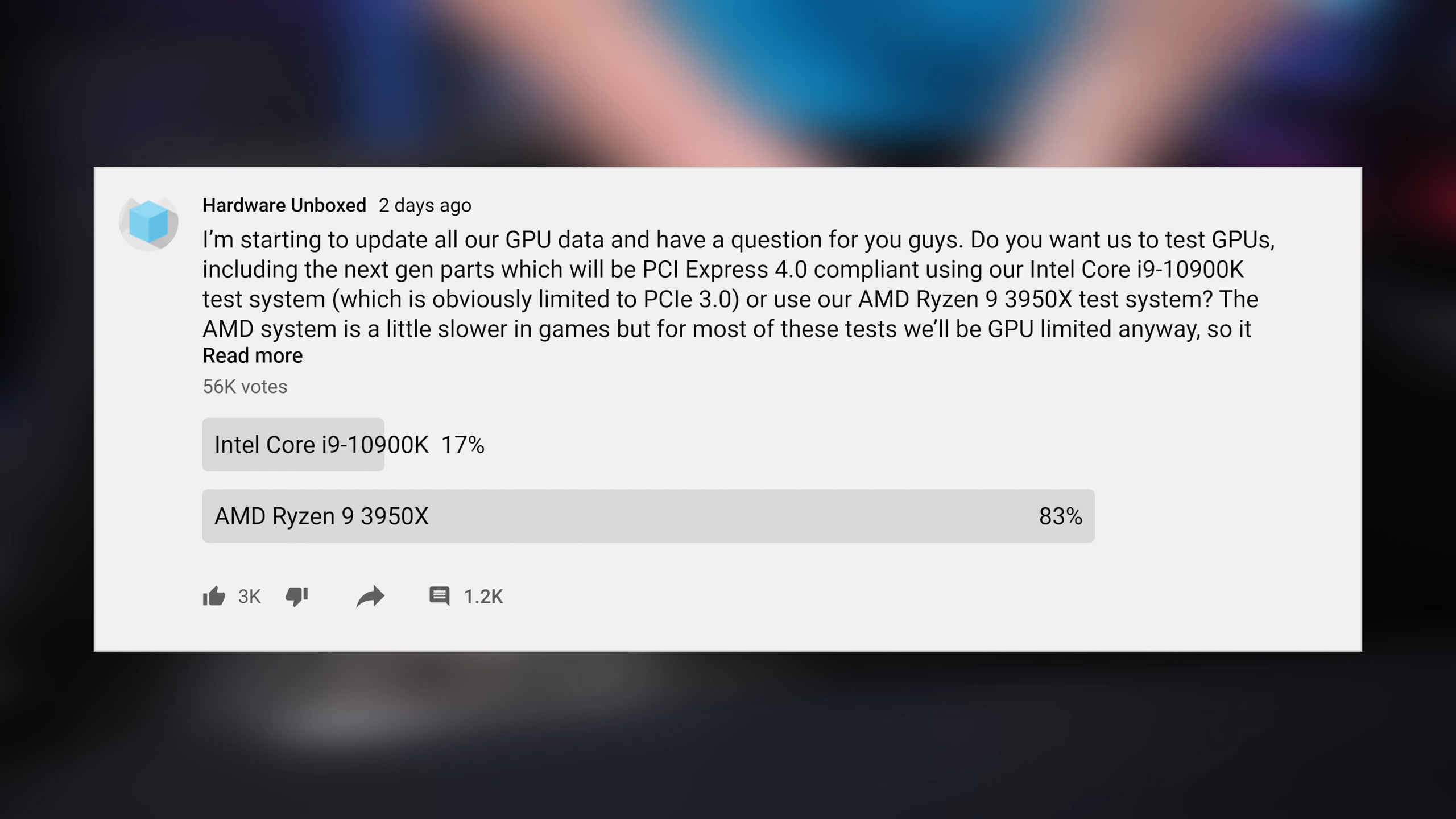

Still unsure which way to go on this one, we ran a poll on the Hardware Unboxed channel, and the response was overwhelming: 83% of the 52K people who voted asked us to use the Ryzen 9. It's pretty clear what the majority wants, and that's a compelling enough reason to go with AMD. But before making any final decision, we thought it would be wise to dig into the data and see what we'd be giving up and what we potentially stand to gain.

If you recall, midway through last year we compared the Ryzen 9 3900X and Core i9-9900K in 36 games using a GeForce RTX 2080 Ti. All testing took place at 1080p, and we found that the 3900X was on average ~6% slower, though in a few instances it was a little faster. For the most part we were looking at single-digit margins, with the biggest loss seen in StarCraft II, where the Ryzen processor was 16% slower. Back then, although the gap was not huge, it made sense to test GPUs with the 9900K, given PCIe support wasn't a factor to consider.

Today we want to benchmark a few of the games that will be included in our upcoming next-gen GPU reviews, and we're looking at two things. First, how much slower is the 3950X compared to the 10900K? We're not focusing exclusively on 1080p this time, as that won't be a resolution we include for GPUs priced over $500. So if there's an RTX 3080 Ti, for example, the focus of that review will be 4K performance.

Second, we want to see how the Radeon RX 5700 XT performs with these two processors, as it's currently the fastest GPU to support PCIe 4.0. Are we already starting to see some benefit from the increased PCIe bandwidth, or will we have to wait for much faster graphics cards before the newer standard makes a difference?
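For a sense of scale, the nominal link bandwidth can be worked out from the per-lane transfer rates: 8 GT/s for PCIe 3.0 and 16 GT/s for PCIe 4.0, both using 128b/130b line encoding. This is a minimal sketch that assumes an x16 slot and ignores packet and protocol overhead, so real-world throughput will be somewhat lower. It only shows that PCIe 4.0 doubles the available headroom, not how much of it any given game actually uses.

```python
# Per-lane signalling rate in gigatransfers per second (GT/s).
RATES_GT_S = {"PCIe 3.0": 8.0, "PCIe 4.0": 16.0}

# Both generations use 128b/130b encoding: 128 payload bits per 130 bits sent.
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_s(rate_gt_s: float, lanes: int = 16) -> float:
    """One-direction bandwidth in GB/s after line encoding.

    Assumption: packet/protocol overhead is ignored, so this is an upper bound.
    """
    bits_per_second = rate_gt_s * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_second / 8 / 1e9  # bits -> bytes -> gigabytes


for gen, rate in RATES_GT_S.items():
    print(f"{gen} x16: {link_bandwidth_gb_s(rate):.2f} GB/s per direction")
```

This works out to roughly 15.75 GB/s per direction for a PCIe 3.0 x16 link and roughly 31.5 GB/s for PCIe 4.0 x16.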

For testing, we configured both test beds with 32GB of DDR4-3200 CL14 memory and a Corsair Hydro H150i Pro RGB AIO liquid cooler. In total we've tested 9 games at 1080p, 1440p and 4K...

Benchmarks

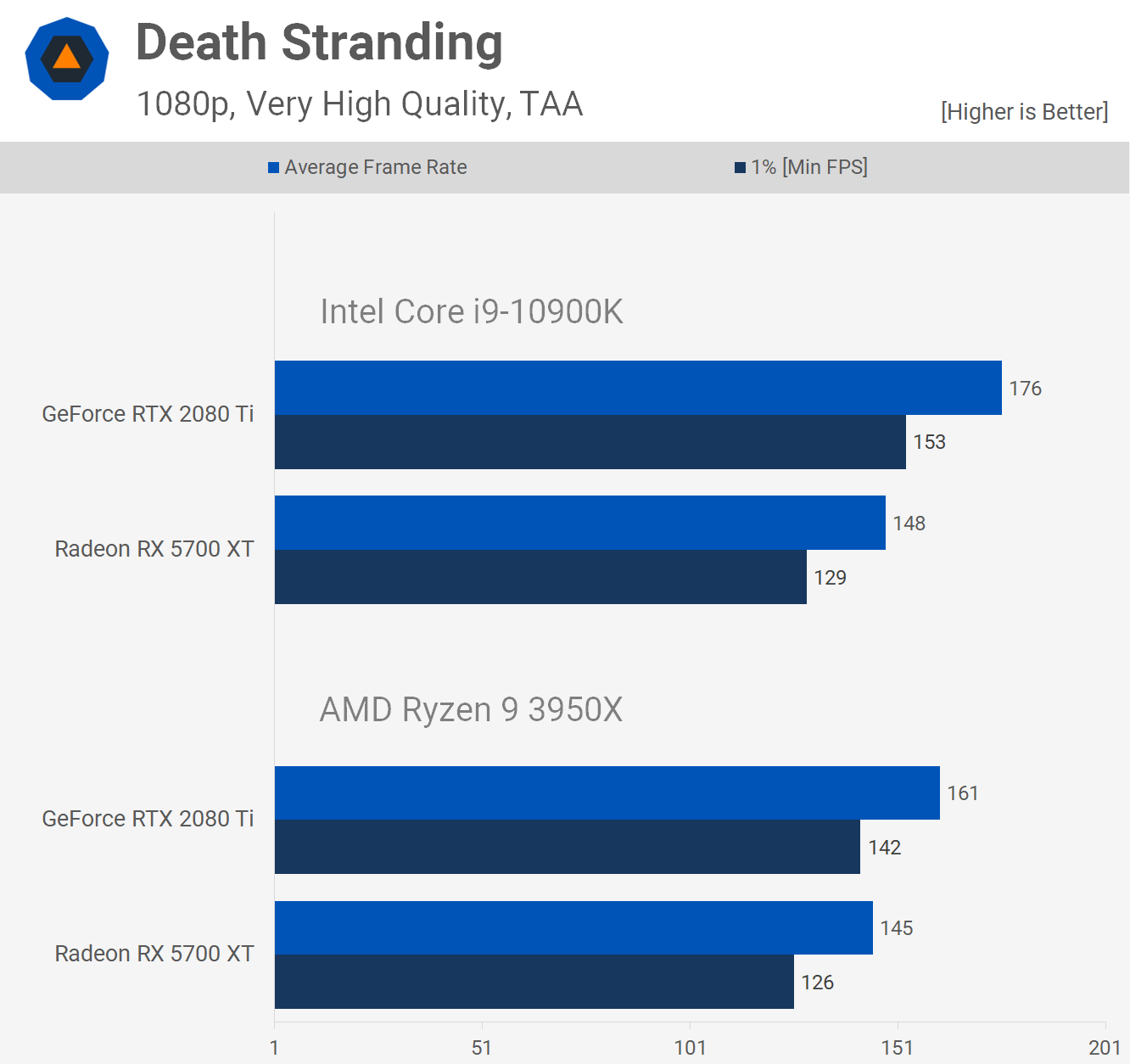

Starting with Death Stranding at 1080p, we see that with the RTX 2080 Ti the 10900K is 9% faster than the 3950X, which is a decent margin. With the slower 5700 XT, that margin shrinks to just 2%.

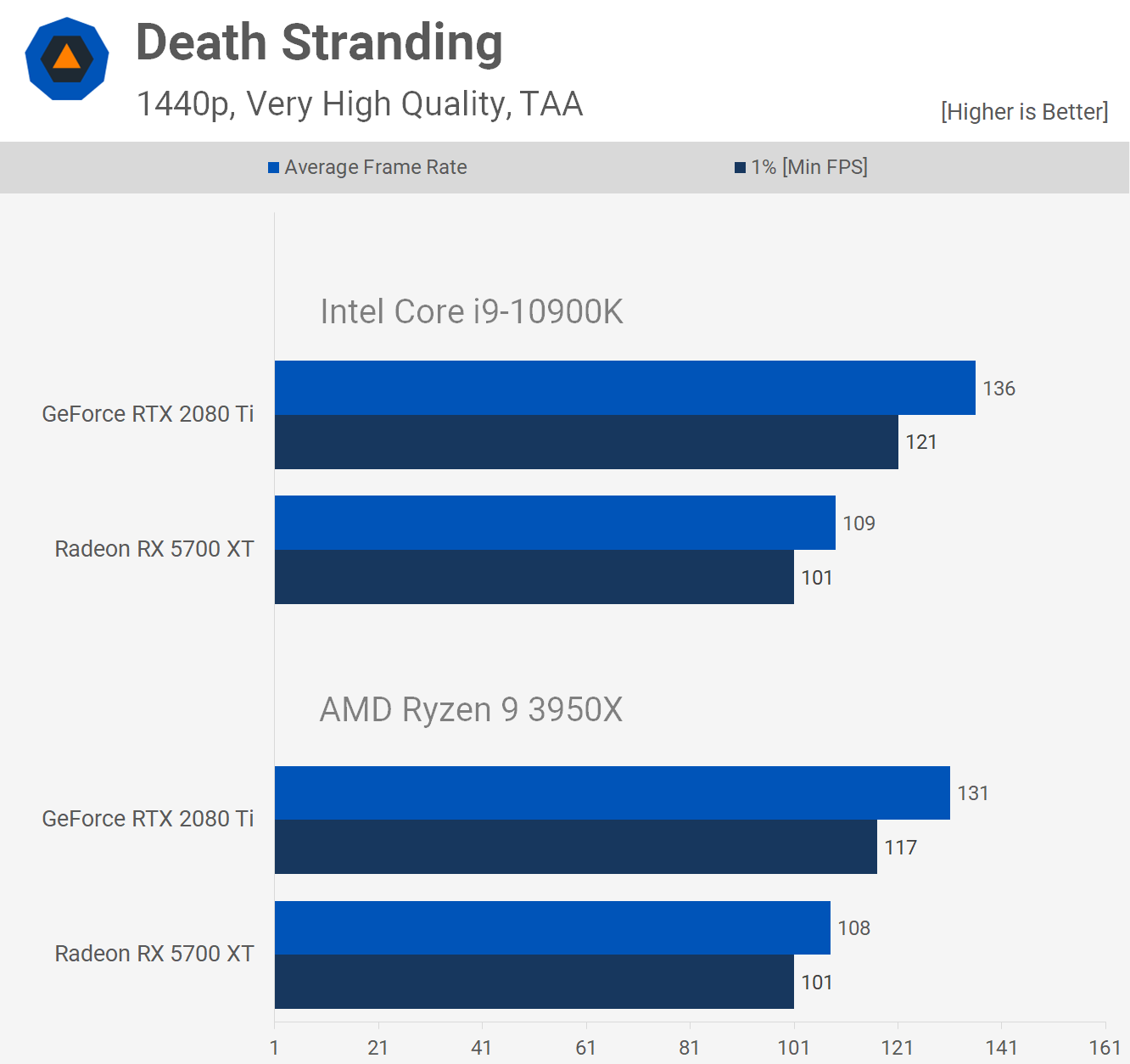

Increasing the resolution to 1440p reduces the margin to just 4% in favor of the 10900K, which is fairly insignificant. We also find virtually no difference between these two CPUs when using the 5700 XT, so this is very much a GPU-limited test.

Finally, at 4K both systems are entirely GPU-bound, as the 2080 Ti delivered the same level of performance with both CPUs. Interestingly, the 5700 XT was consistently a few frames faster with the 3950X, and our 3-run average landed it 3% ahead: an insignificant margin, but interesting given what we saw at 1080p and 1440p.
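As a minimal sketch of how a figure like "3% ahead" can be derived, assuming it's simply the ratio of the two 3-run averages, here is the arithmetic in code. The run values and the `ryzen_runs`/`intel_runs` names are hypothetical, chosen only to illustrate the calculation; they are not our measured results.

```python
from statistics import mean


def average_fps(runs: list[float]) -> float:
    """Arithmetic mean frame rate across repeated benchmark runs."""
    return mean(runs)


def margin_pct(a_fps: float, b_fps: float) -> float:
    """How much faster (positive) or slower (negative) A is than B, in percent."""
    return (a_fps / b_fps - 1) * 100


# Hypothetical 3-run results for illustration only (not measured data).
ryzen_runs = [62.0, 63.0, 61.0]
intel_runs = [60.0, 59.0, 61.0]

ryzen_avg = average_fps(ryzen_runs)
intel_avg = average_fps(intel_runs)
print(f"Ryzen avg {ryzen_avg:.1f} fps, Intel avg {intel_avg:.1f} fps")
print(f"Ryzen is {margin_pct(ryzen_avg, intel_avg):.1f}% faster")
```

Averaging the runs first smooths out run-to-run variance, which is why a consistent lead of a few frames can still show up as a stable percentage.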

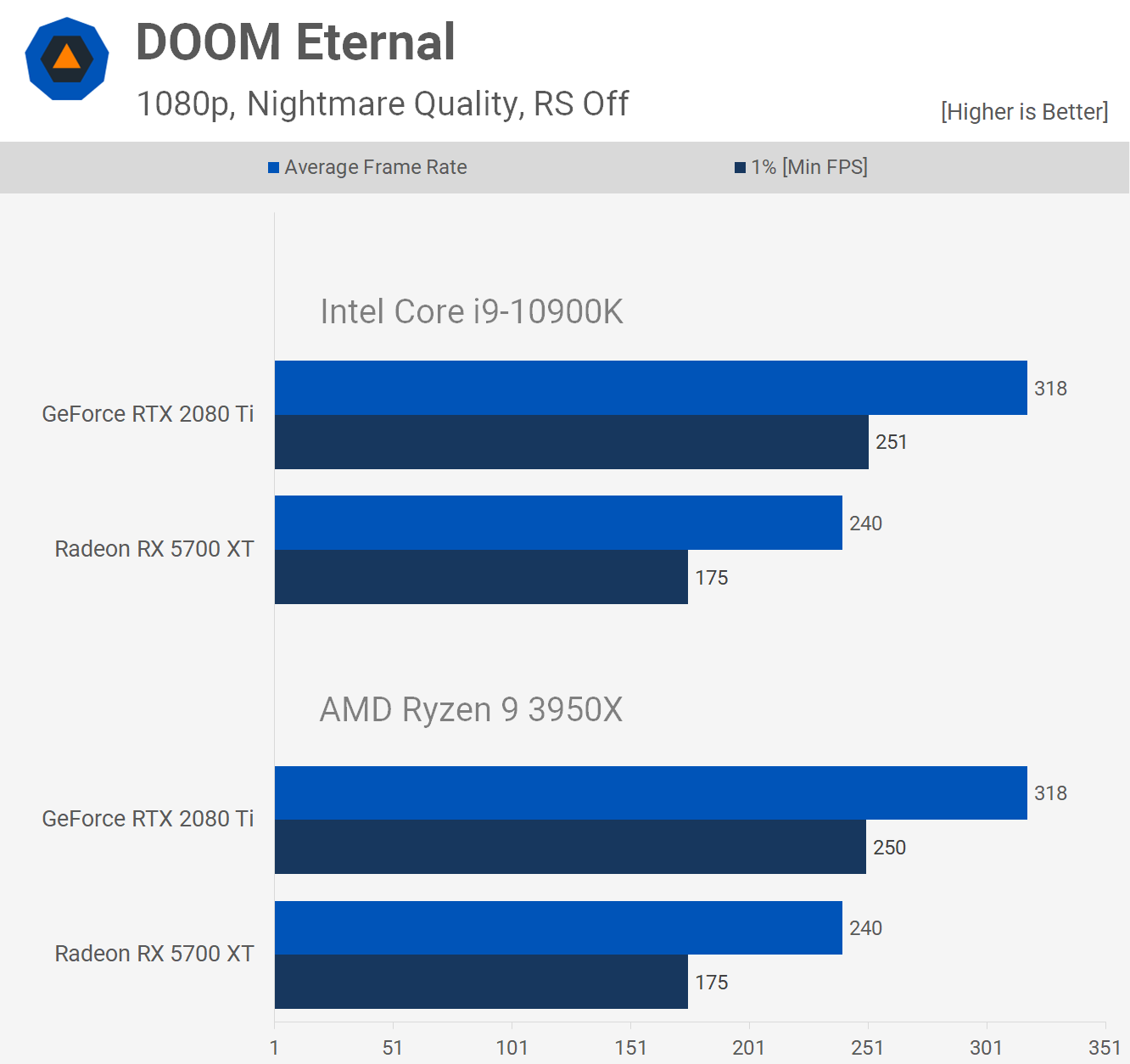

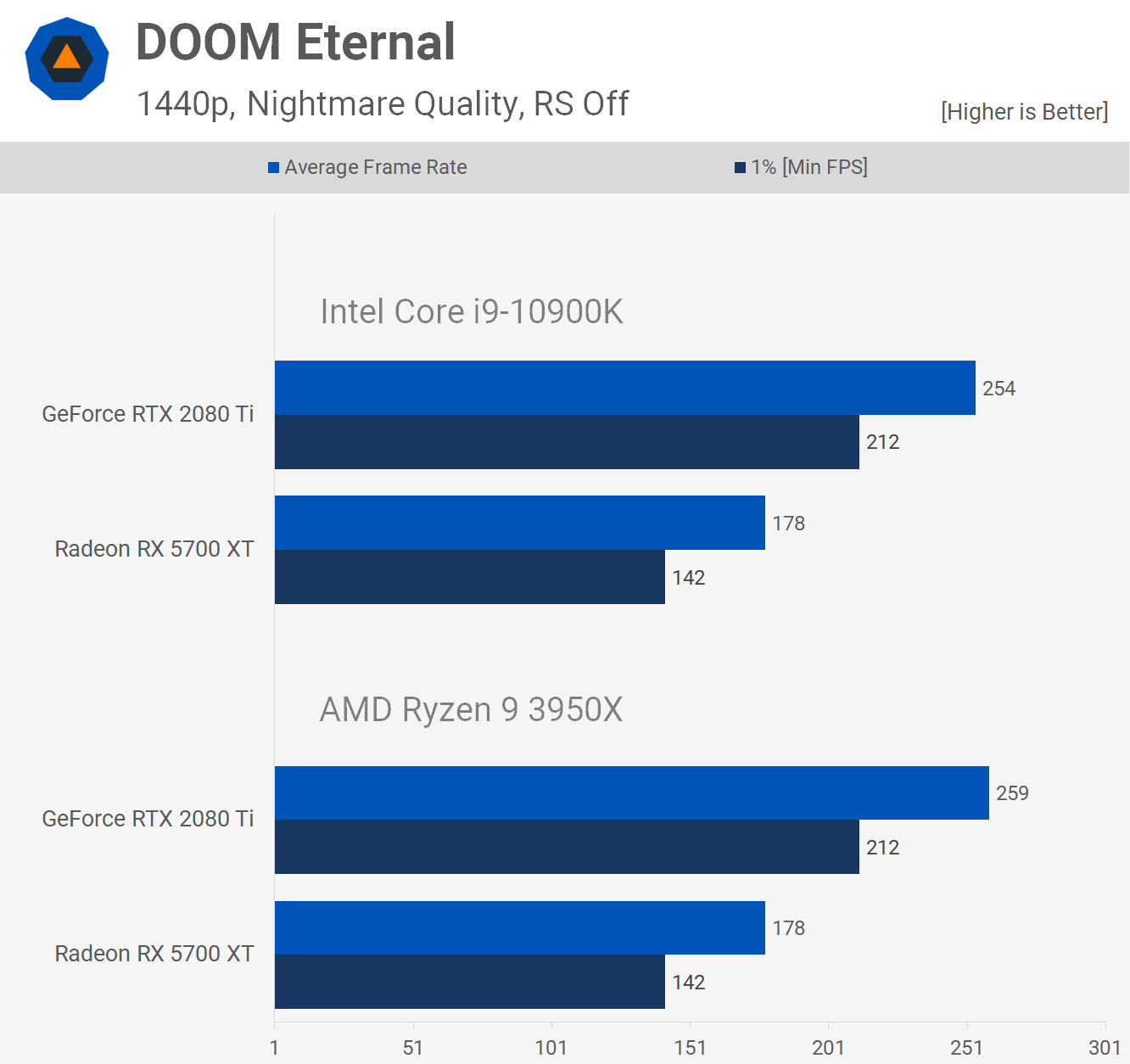

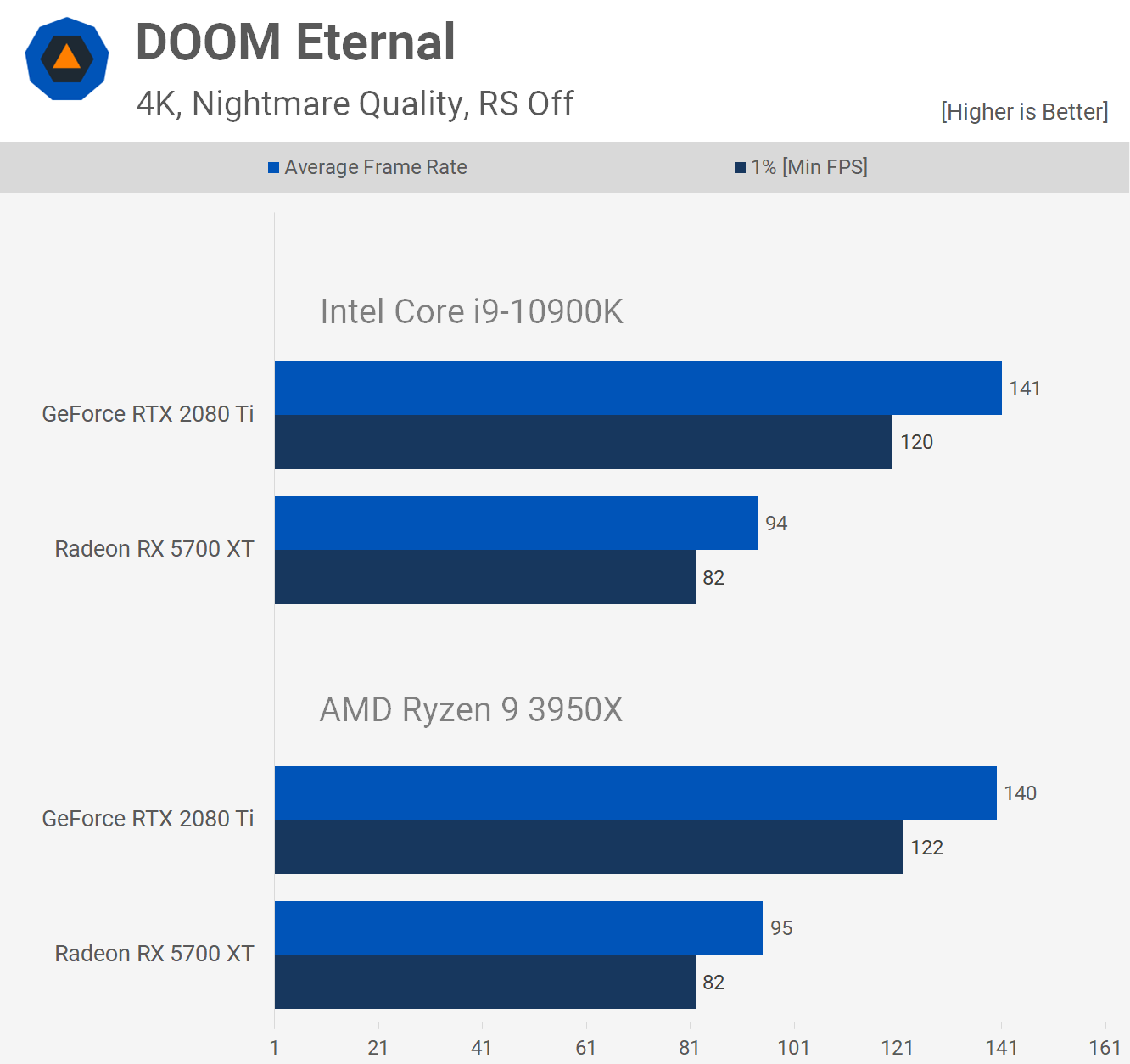

Like a lot of games, especially those using low-level APIs, DOOM Eternal isn't very CPU demanding. As a result, even at 1080p with the RTX 2080 Ti we see the same level of performance on both platforms, and the same was true with the 5700 XT.

Naturally, increasing the resolution does nothing to change that, as the GPU bottleneck only grows and this game doesn't appear to require much PCIe bandwidth. At 4K we find more of the same: the 3950X has no trouble keeping pace with the 10900K in this title.

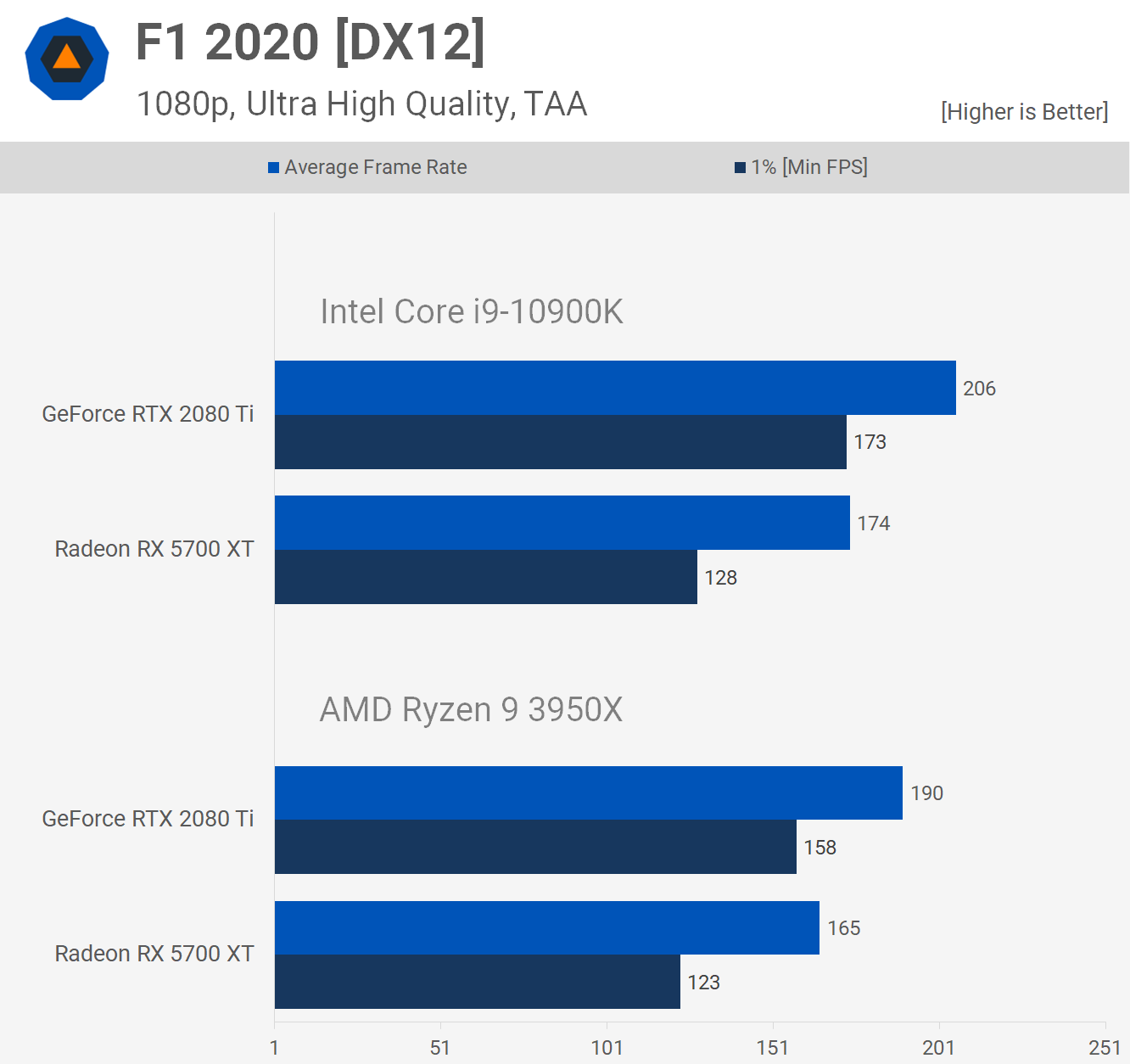

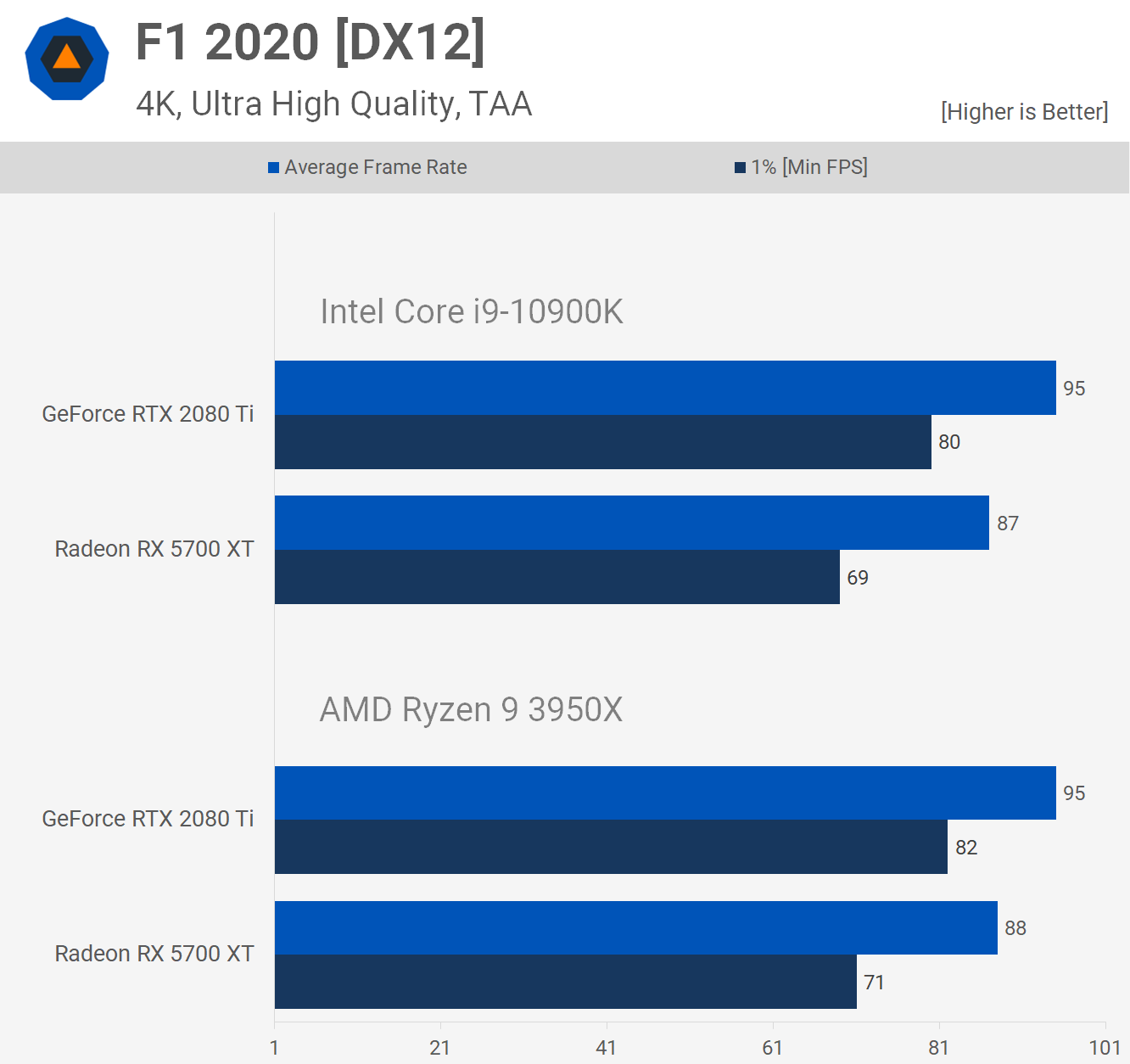

Moving on to F1 2020, the 1080p results make it clear that the 10900K enjoys a performance advantage in this title, boosting frame rates with the 2080 Ti by 8% over the 3950X. It was also 5% faster with the 5700 XT, so the margins aren't massive, but the Intel CPU is clearly faster under these conditions.

Again, increasing the resolution starts to reduce those margins: at 1440p the 10900K is just 5% faster with the 2080 Ti and 3% faster with the 5700 XT, both differences we'd call insignificant.

Finally, at 4K there's no distinguishing between the 10900K and 3950X; even with the 2080 Ti we're looking at the same 95 fps on average.

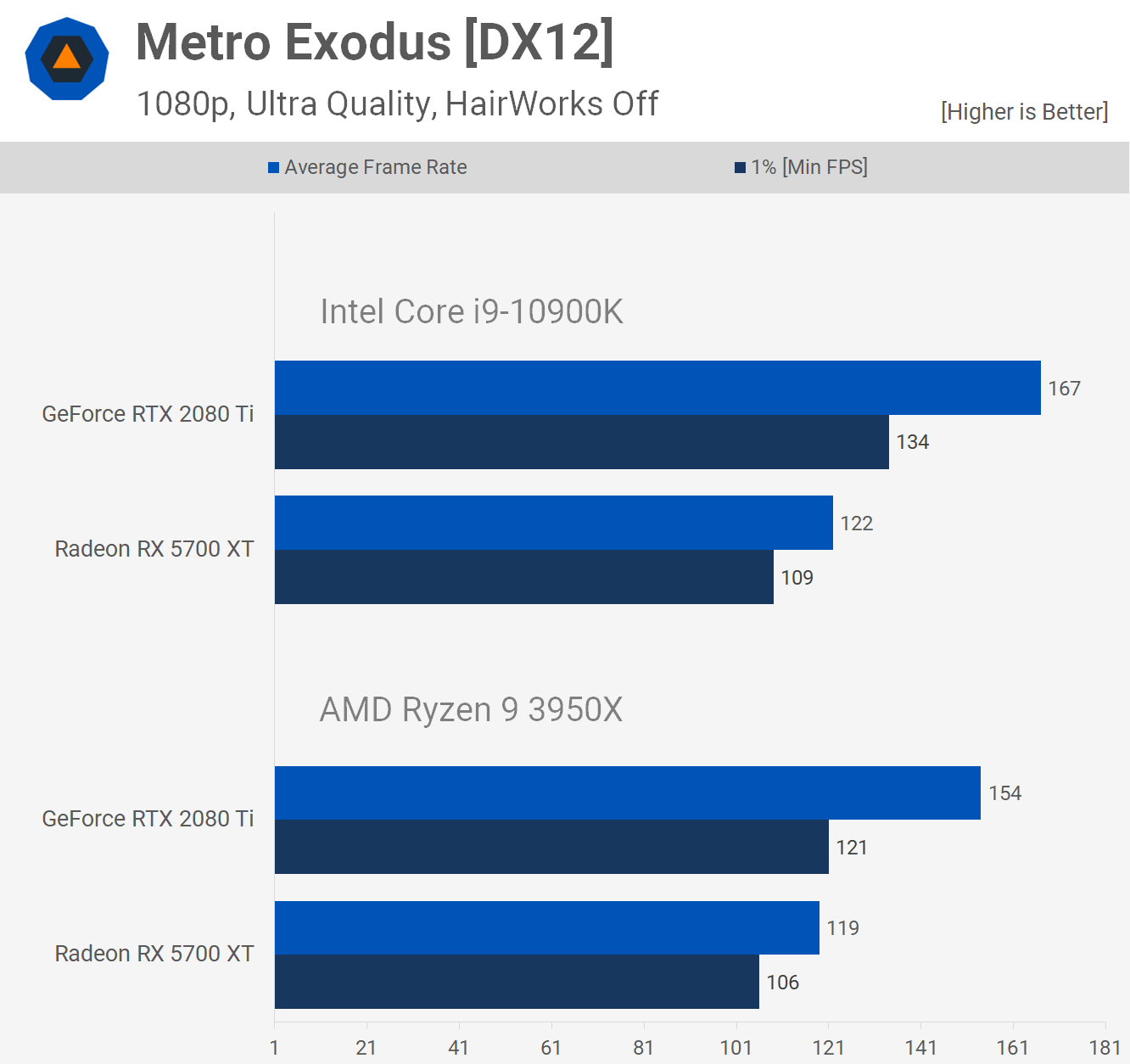

Next up is Metro Exodus, where we again find the 10900K to be up to 8% faster at 1080p with the 2080 Ti when comparing average frame rates, though it was just 3% faster with the 5700 XT.

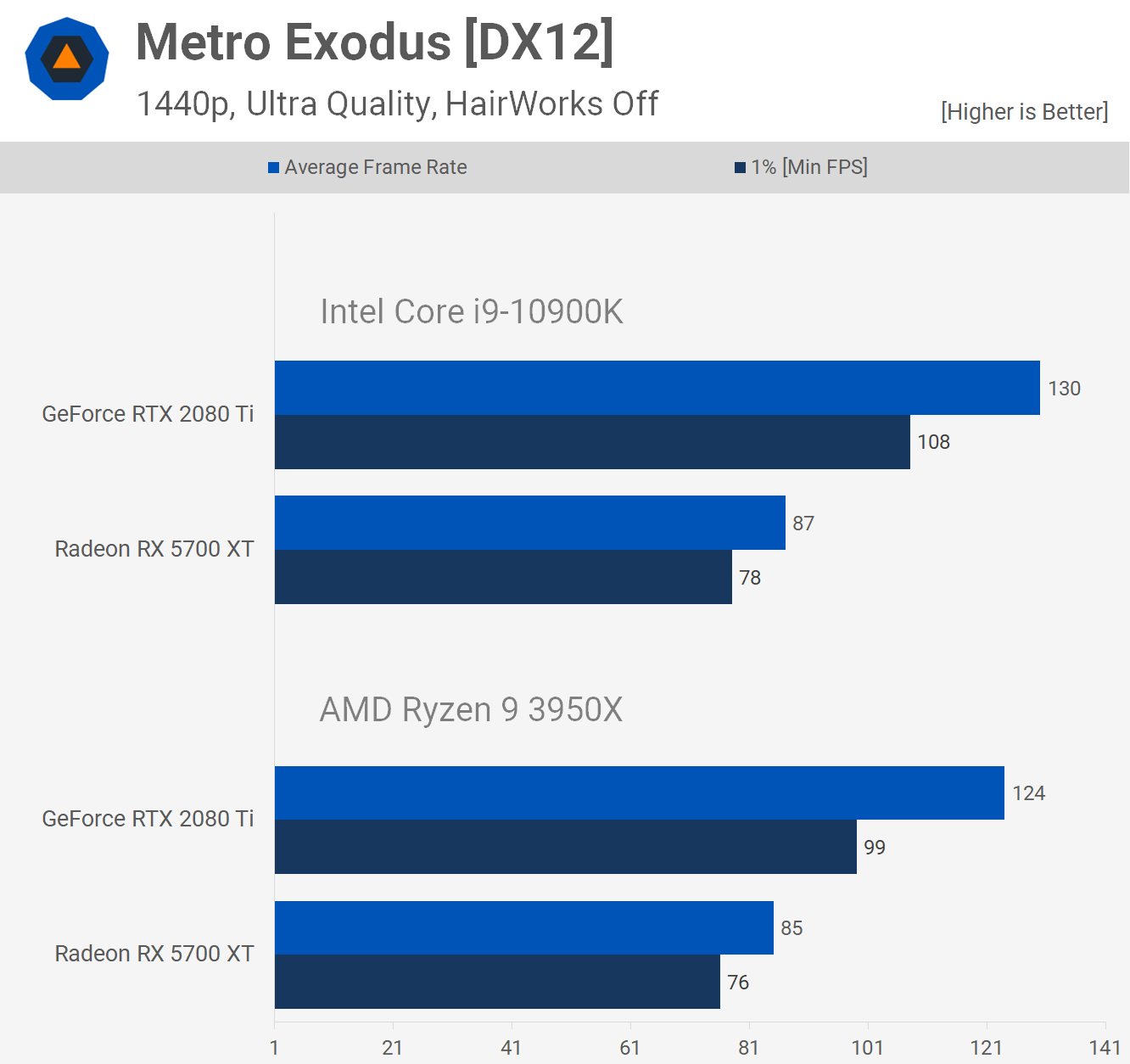

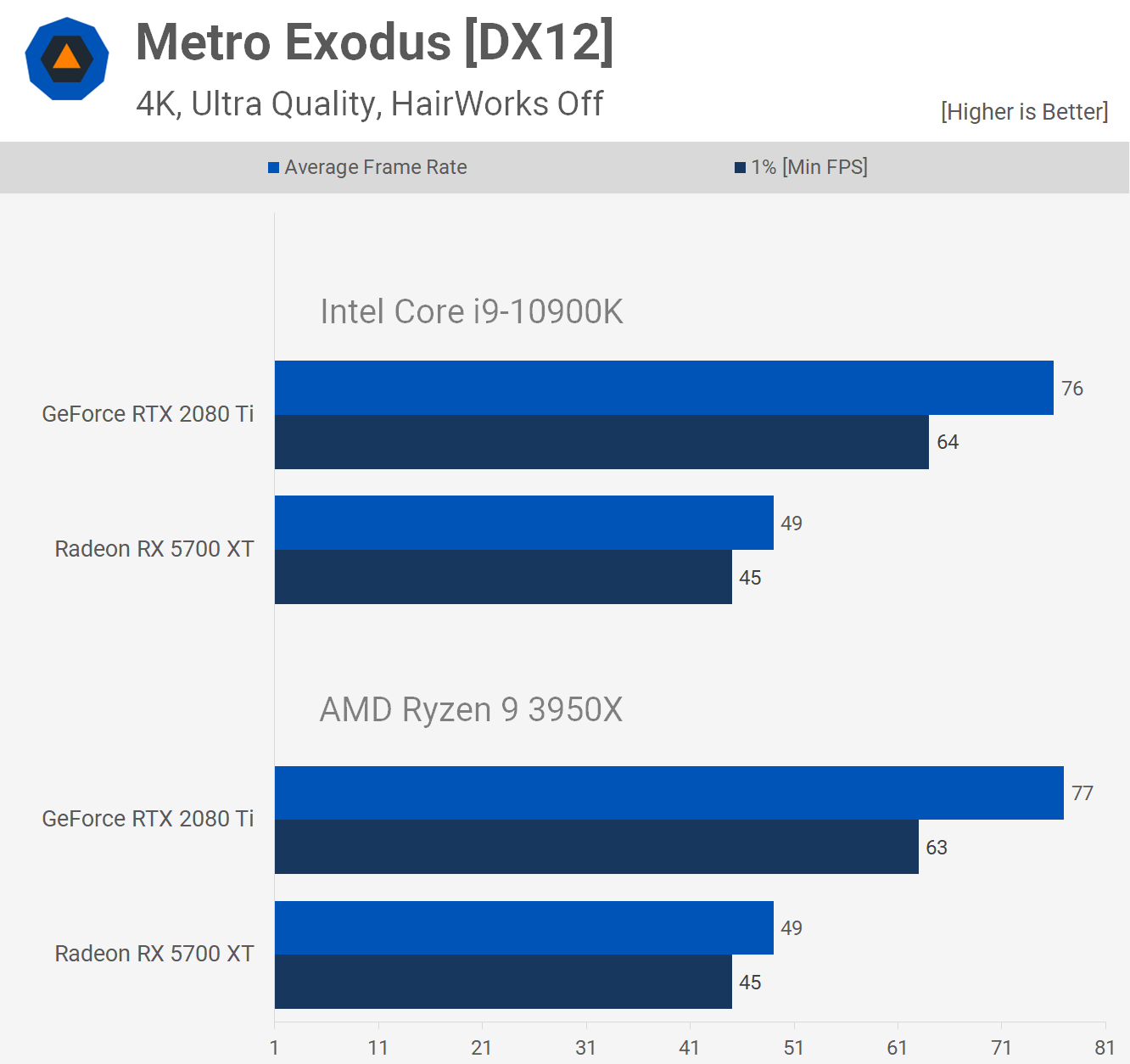

Again, the margin shrinks at 1440p, where the Intel CPU is just 5% faster with the 2080 Ti and 2% faster with the 5700 XT. Once again we see identical performance at 4K, where we're entirely GPU limited and the CPU choice makes no difference when testing with a high-end PCIe 3.0 graphics card.

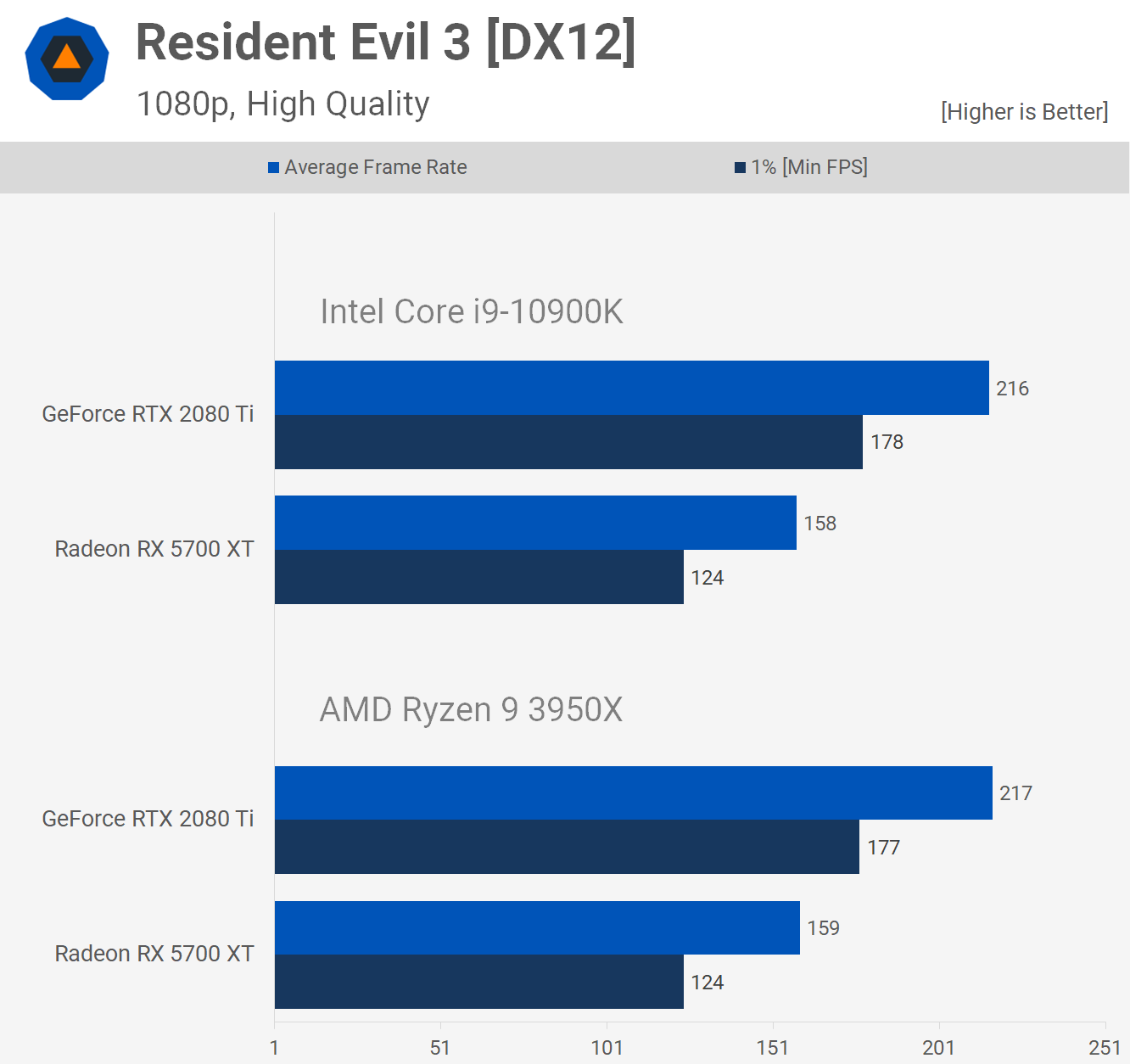

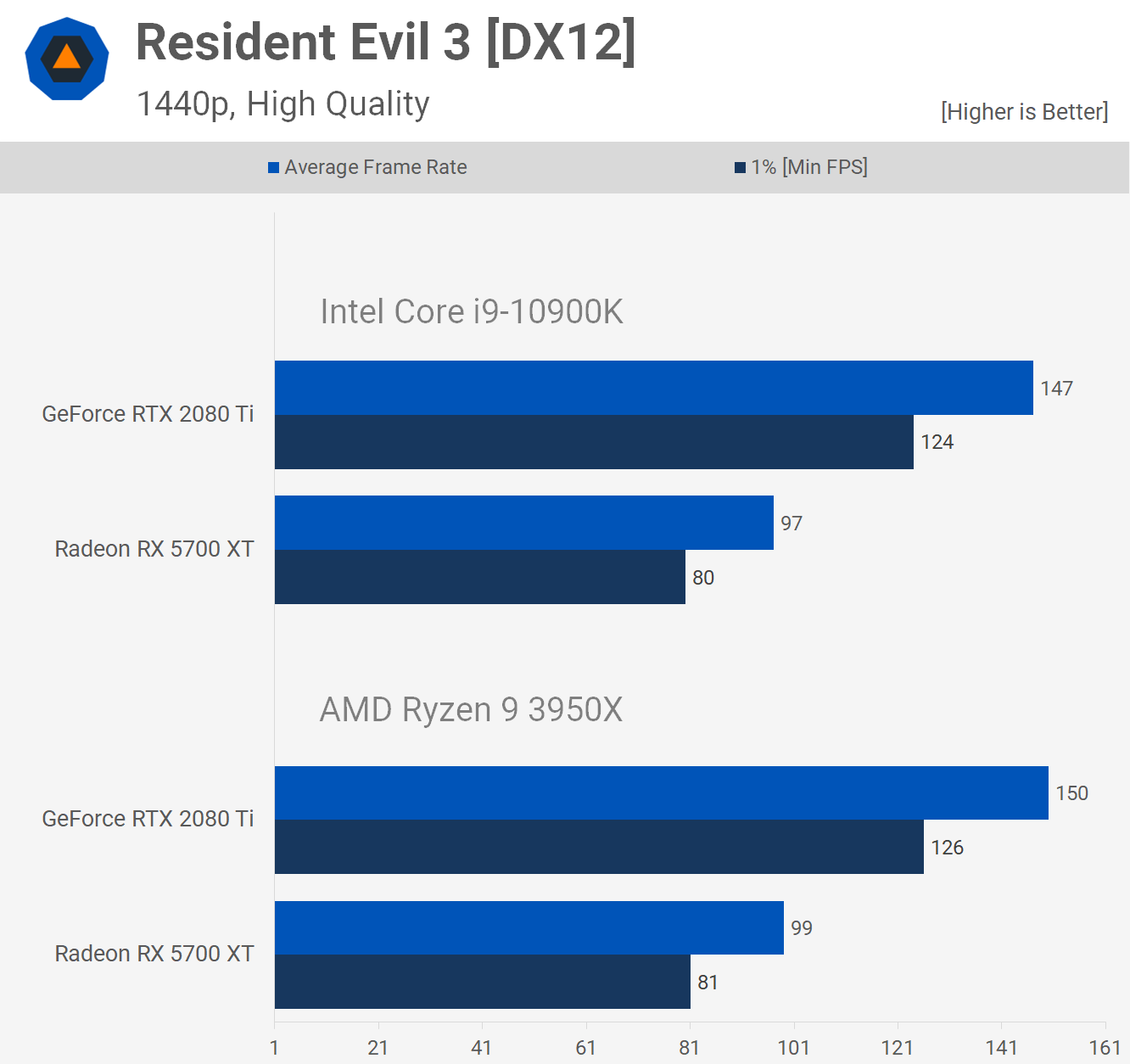

Like DOOM Eternal, and many other games for that matter, Resident Evil 3 isn't very CPU sensitive, and as a result, even at 1080p there's no difference in performance between these two CPUs with either GPU. The same is true at 1440p, though here the 3950X was actually slightly faster than the 10900K. We're talking just a few frames, but it was consistently faster, which is interesting.

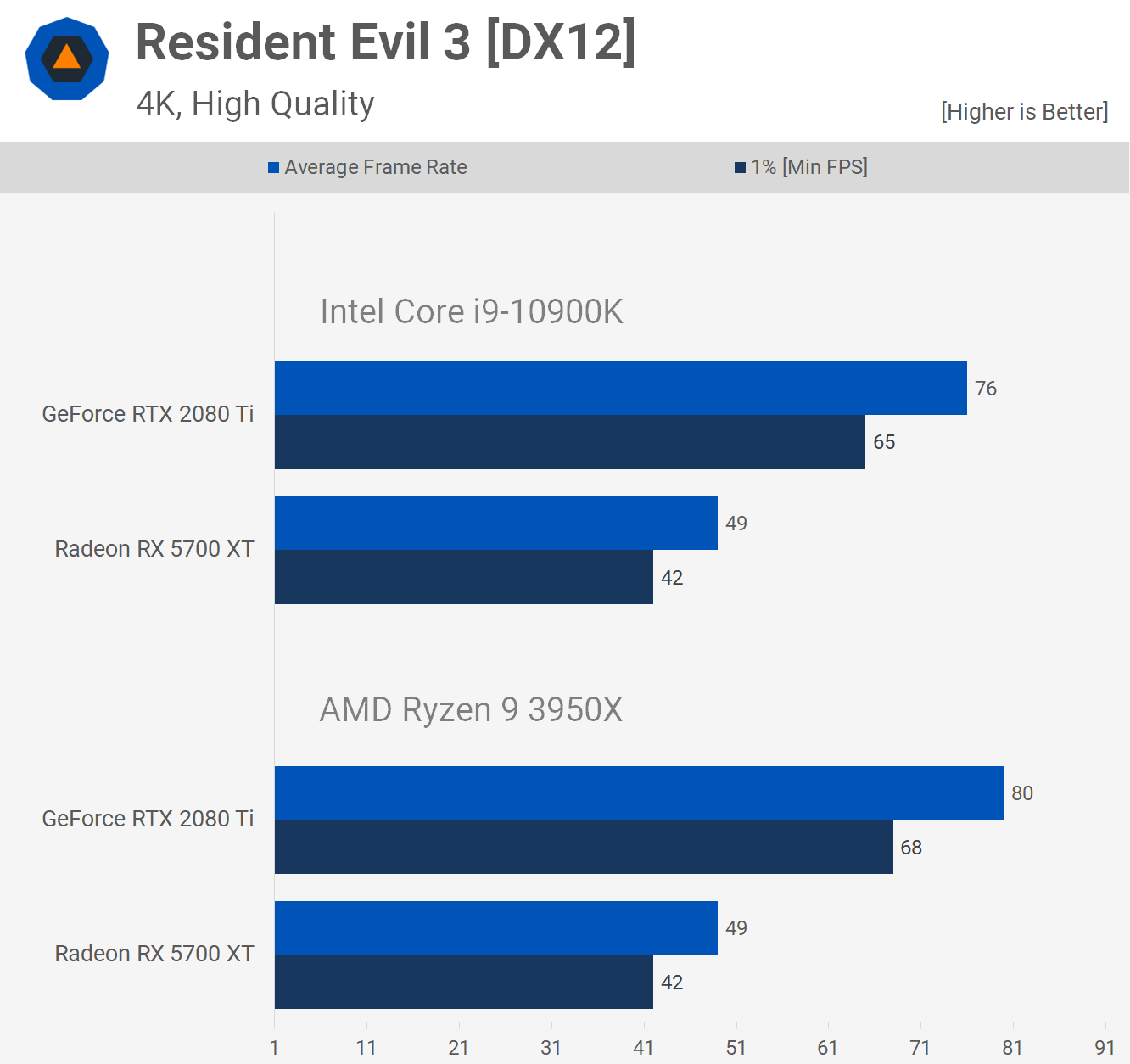

Bumping the resolution up to 4K, the 3950X pulled further ahead with the 2080 Ti and is now up to 5% faster, a somewhat unexpected result. It's not a huge margin, but it is a small win for the red team.

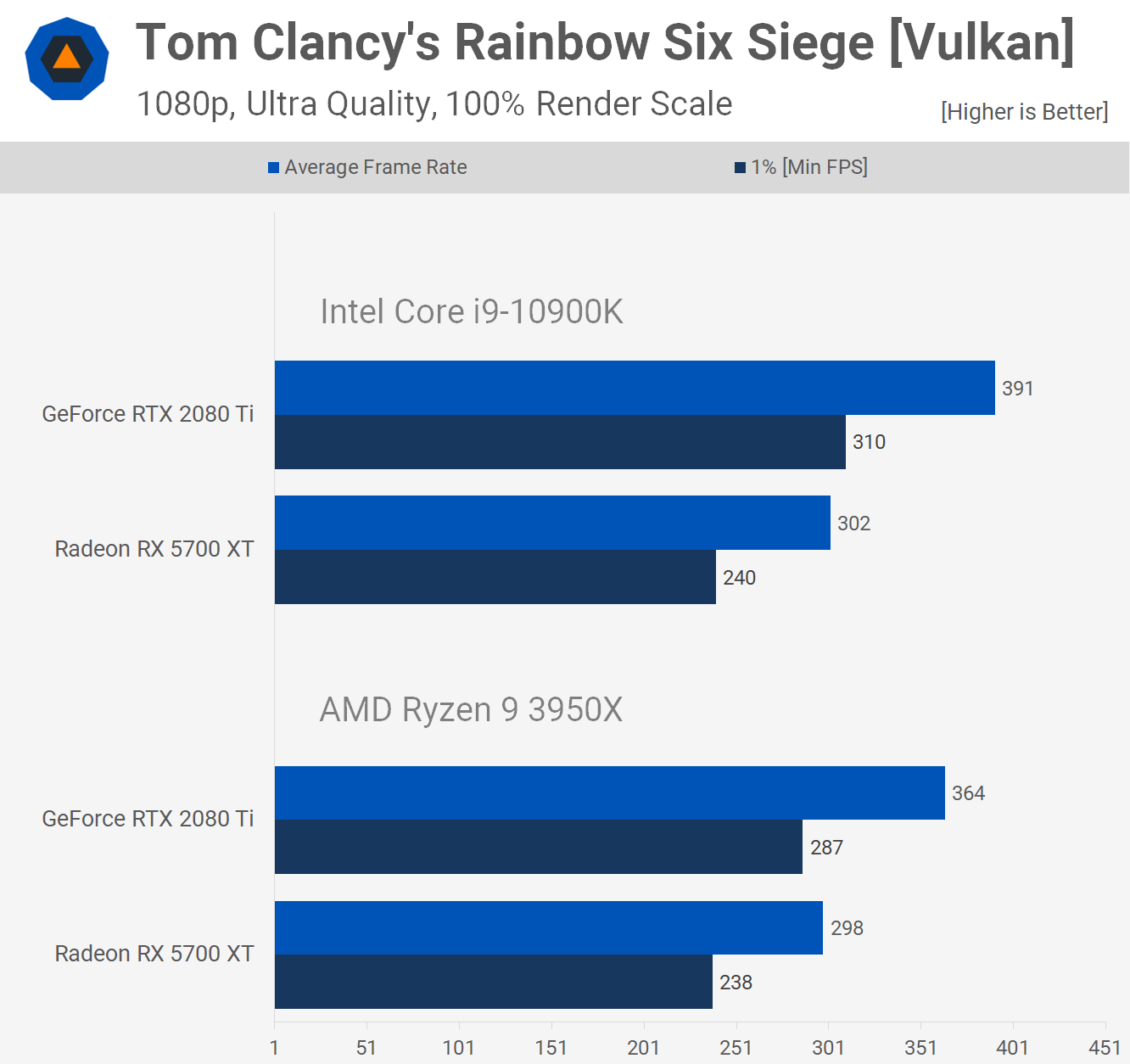

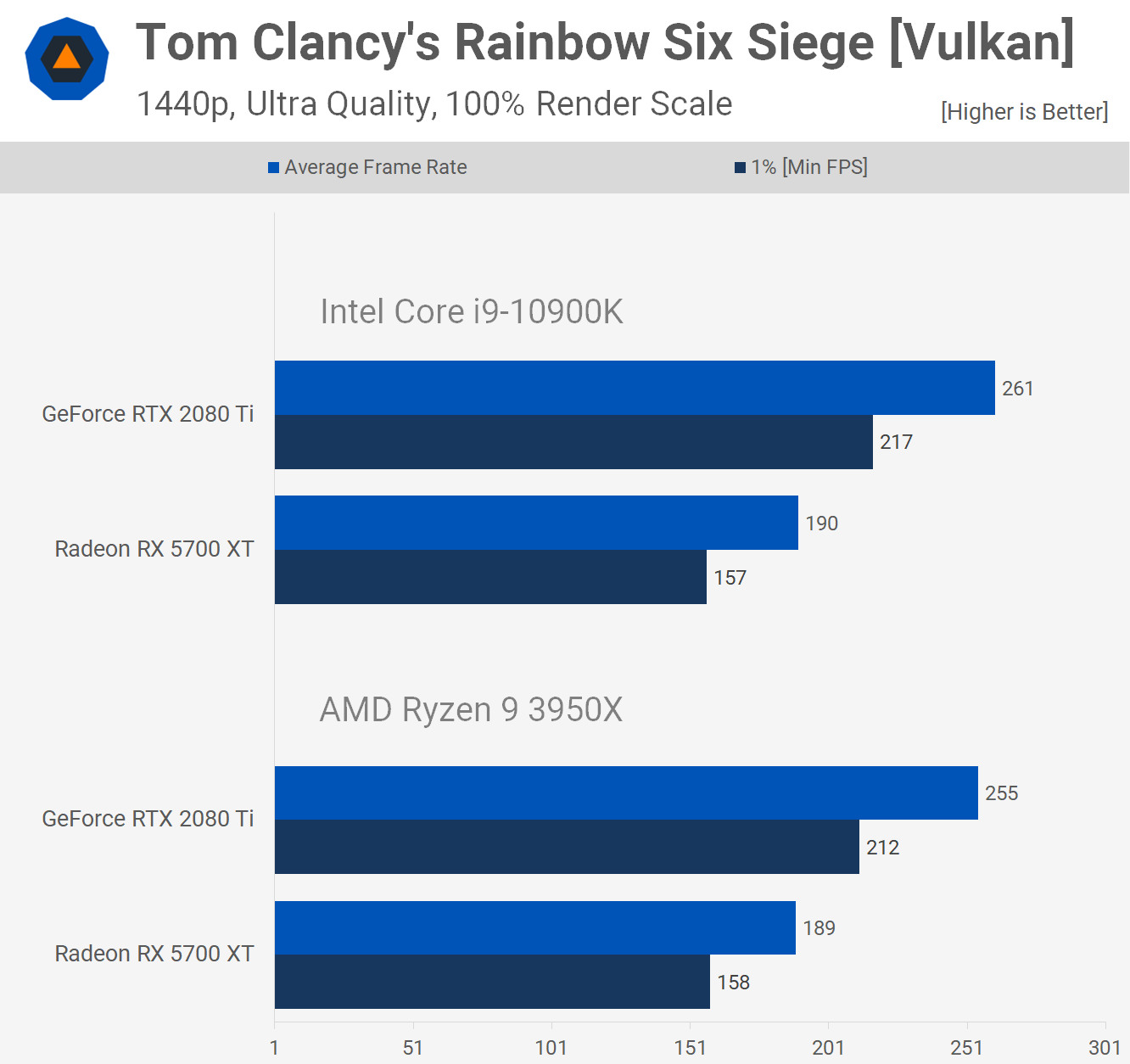

In Rainbow Six Siege, the 10900K was 7% faster with the 2080 Ti at 1080p. That's a reasonable gain in absolute terms, given it's a 7% increase over 364 fps, but we're also only testing at 1080p.
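To put that 7% in perspective, it helps to convert frame rates into frame times. This sketch takes the 364 fps figure quoted above and assumes it's the 3950X's result, with the 10900K's 7% lead applied on top of it; the `frame_time_ms` helper is just an illustrative name.

```python
def frame_time_ms(fps: float) -> float:
    """Convert frames per second into the average time per frame in milliseconds."""
    return 1000.0 / fps


# Figures quoted in the text; treating 364 fps as the 3950X baseline is an assumption.
base_fps = 364.0
lead = 0.07  # the 10900K's quoted 7% advantage

fast_fps = base_fps * (1 + lead)
saved_ms = frame_time_ms(base_fps) - frame_time_ms(fast_fps)

print(f"{fast_fps:.0f} fps (+{fast_fps - base_fps:.0f} fps)")
print(f"frame time {frame_time_ms(base_fps):.2f} ms -> {frame_time_ms(fast_fps):.2f} ms, "
      f"{saved_ms:.2f} ms saved per frame")
```

Under that assumption the 10900K lands at roughly 389 fps, about 25 fps more, yet each frame arrives only around 0.18 ms sooner, because at such high frame rates every frame already takes under 3 ms.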

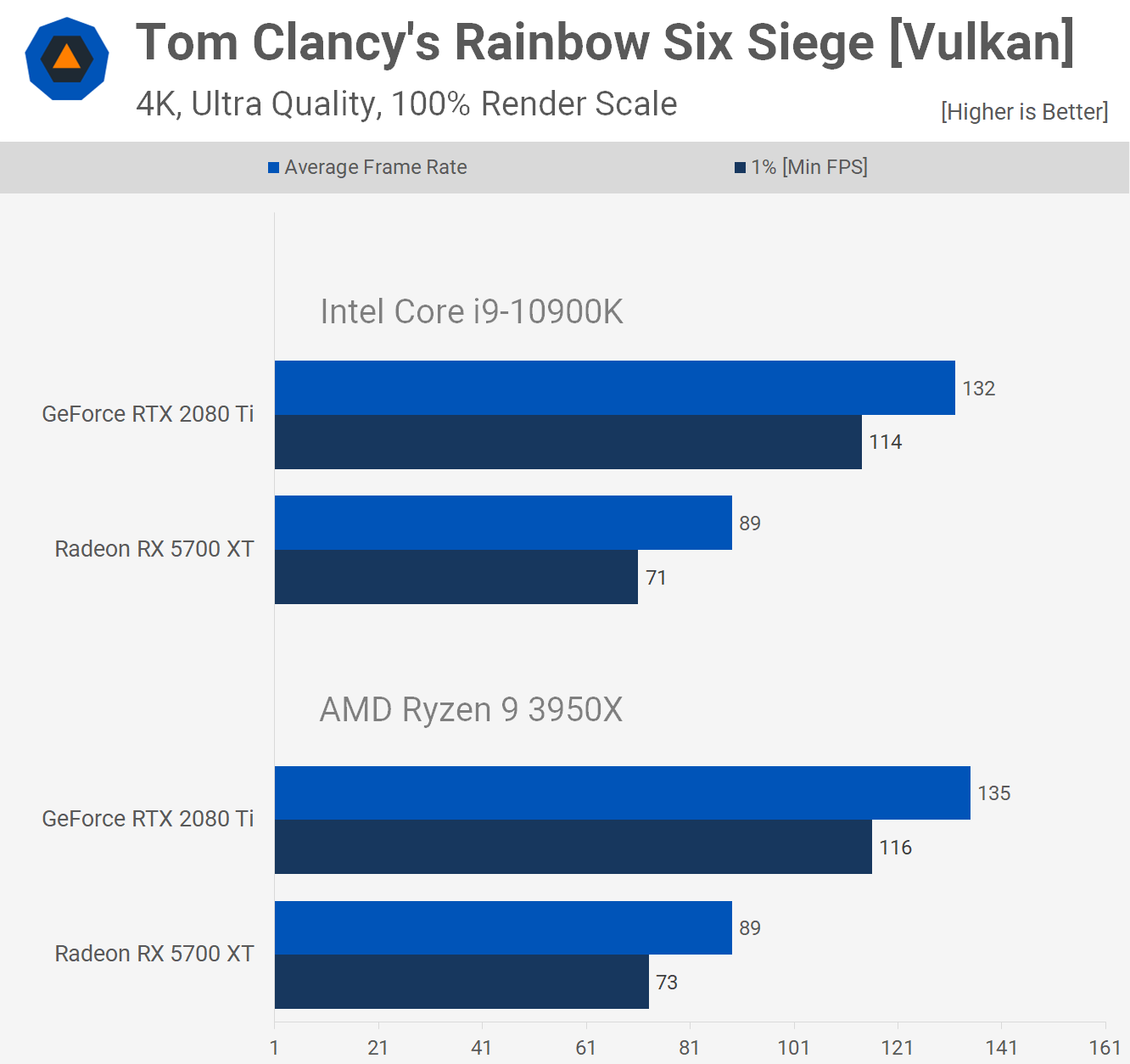

With the 5700 XT the margin is virtually non-existent; the Intel CPU was just 1% faster. At 1440p the margin with the 2080 Ti drops to just 2%, a pretty meaningless difference. Then at 4K we see something quite interesting: the 3950X was slightly faster.

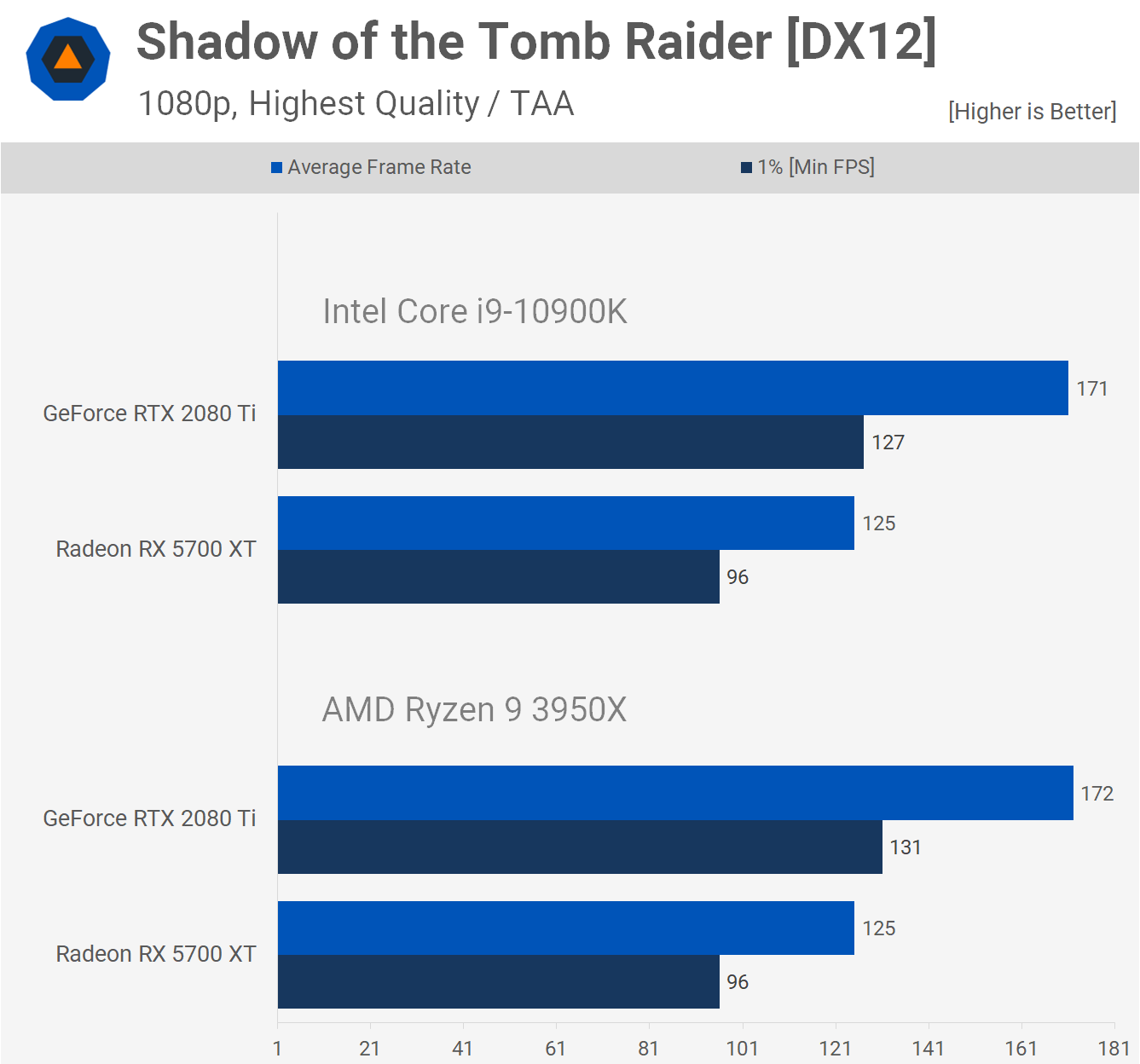

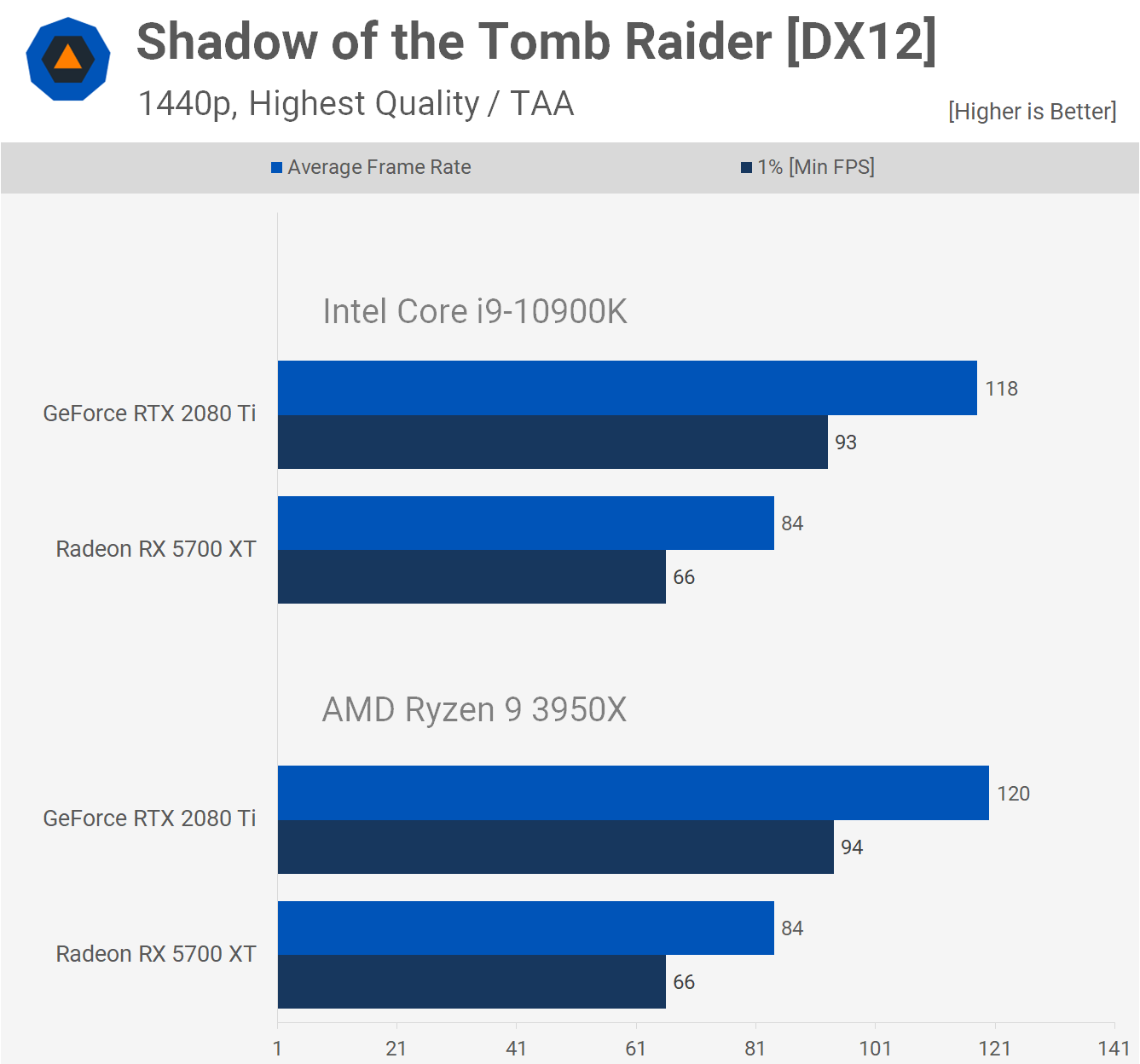

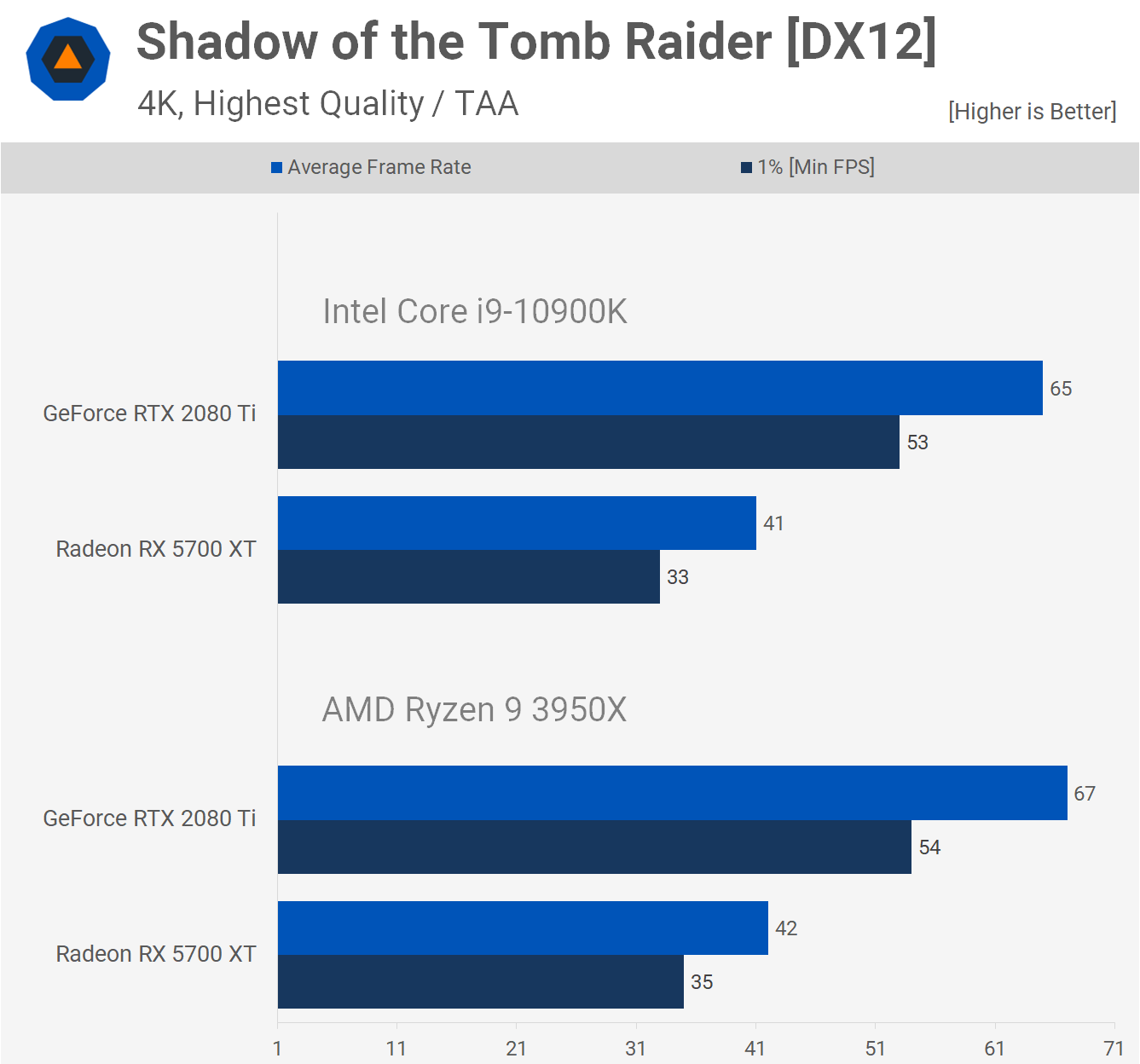

Shadow of the Tomb Raider is a very CPU-demanding game, though please note that for this test we're using the built-in benchmark, as we often do for GPU testing. At 1080p the 3950X and 10900K delivered virtually identical performance with both the 2080 Ti and the 5700 XT.

Unsurprisingly, it's the same story at 1440p, with identical performance on both GPUs. At 4K the results are much the same, though the 3950X was consistently 1-2 fps faster, which is curious.

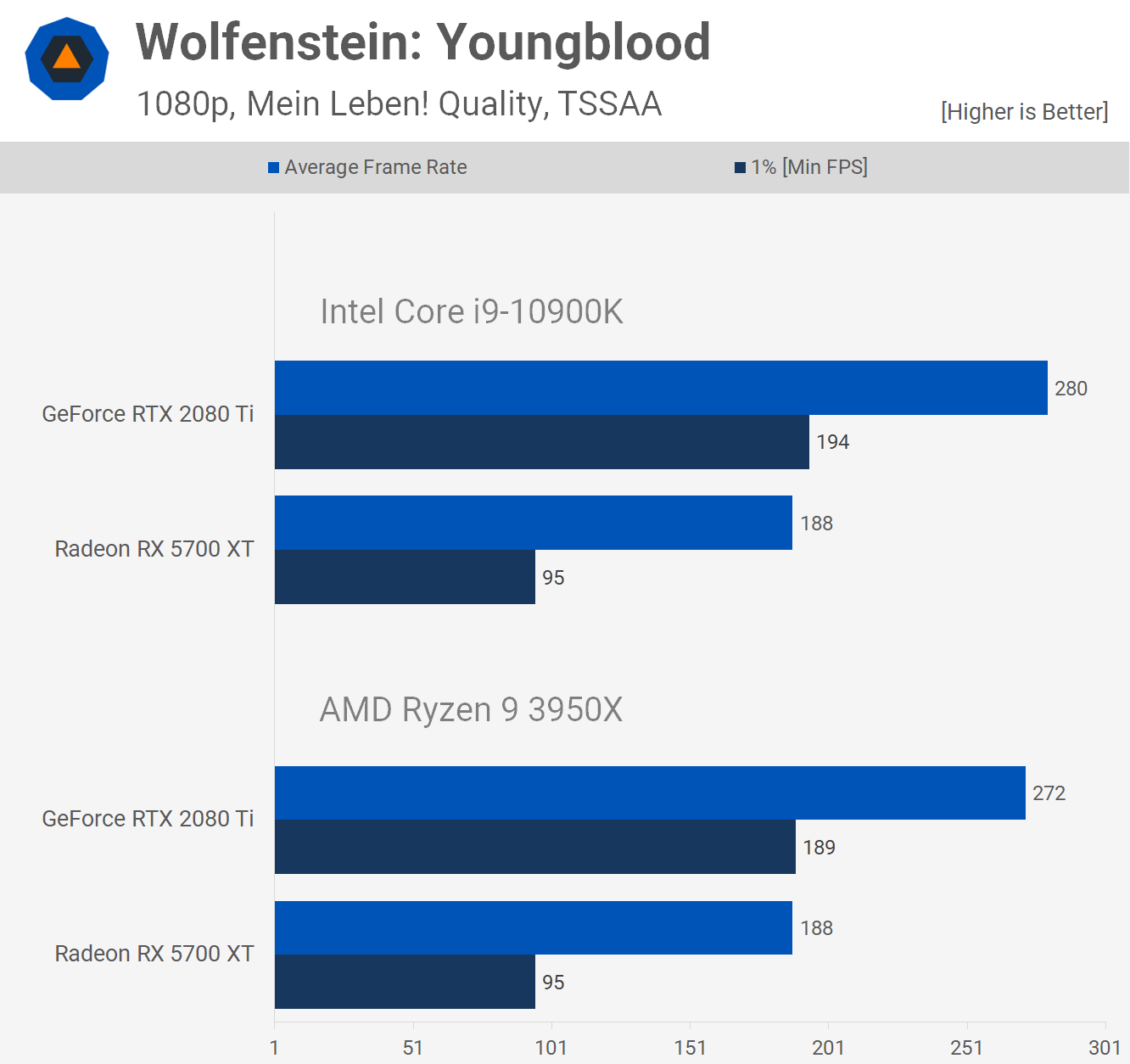

Wolfenstein: Youngblood, like DOOM Eternal, is not a CPU-sensitive game, so at 1080p we're seeing virtually identical performance with either CPU, with a small 3% advantage in Intel's favor.

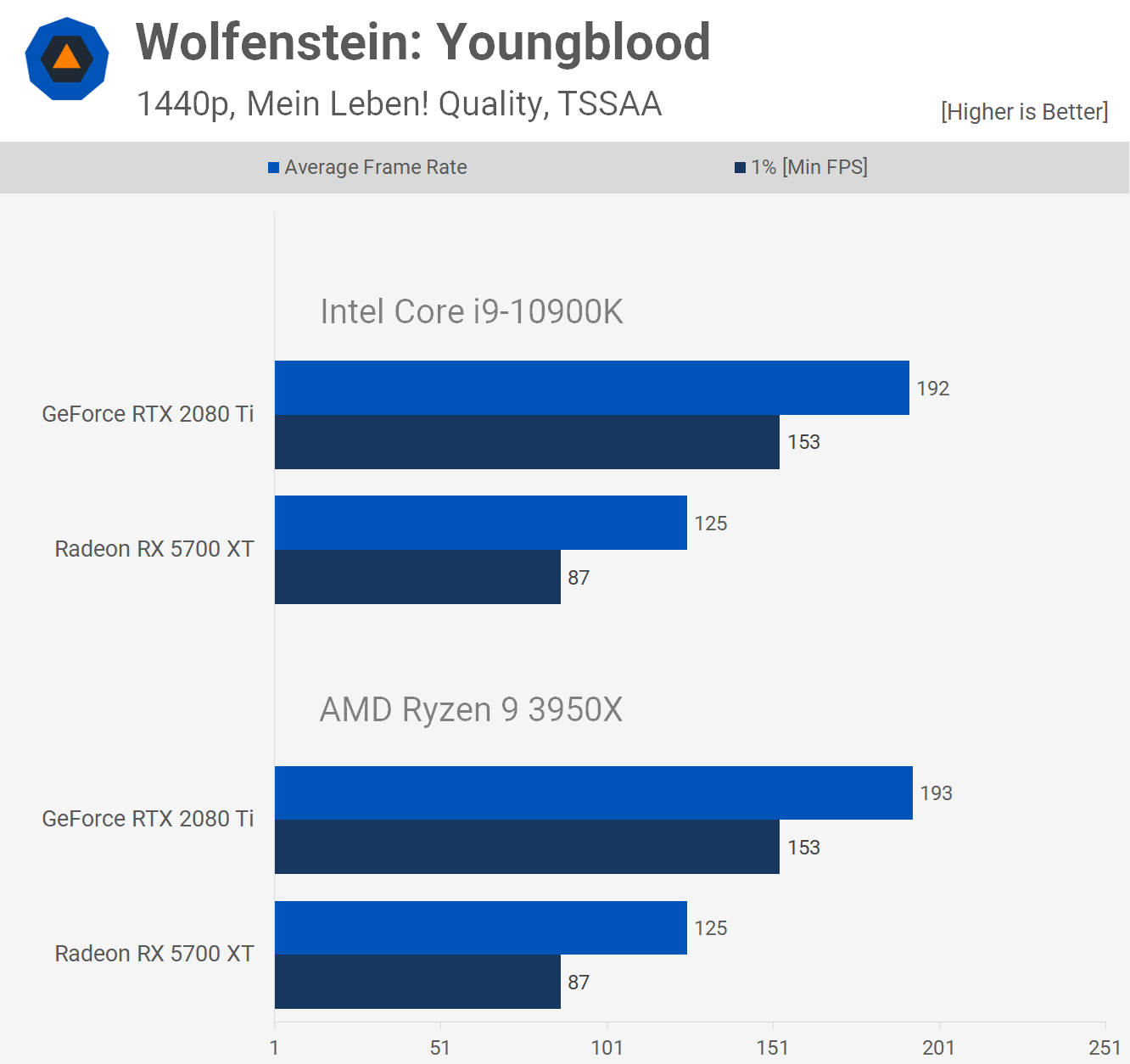

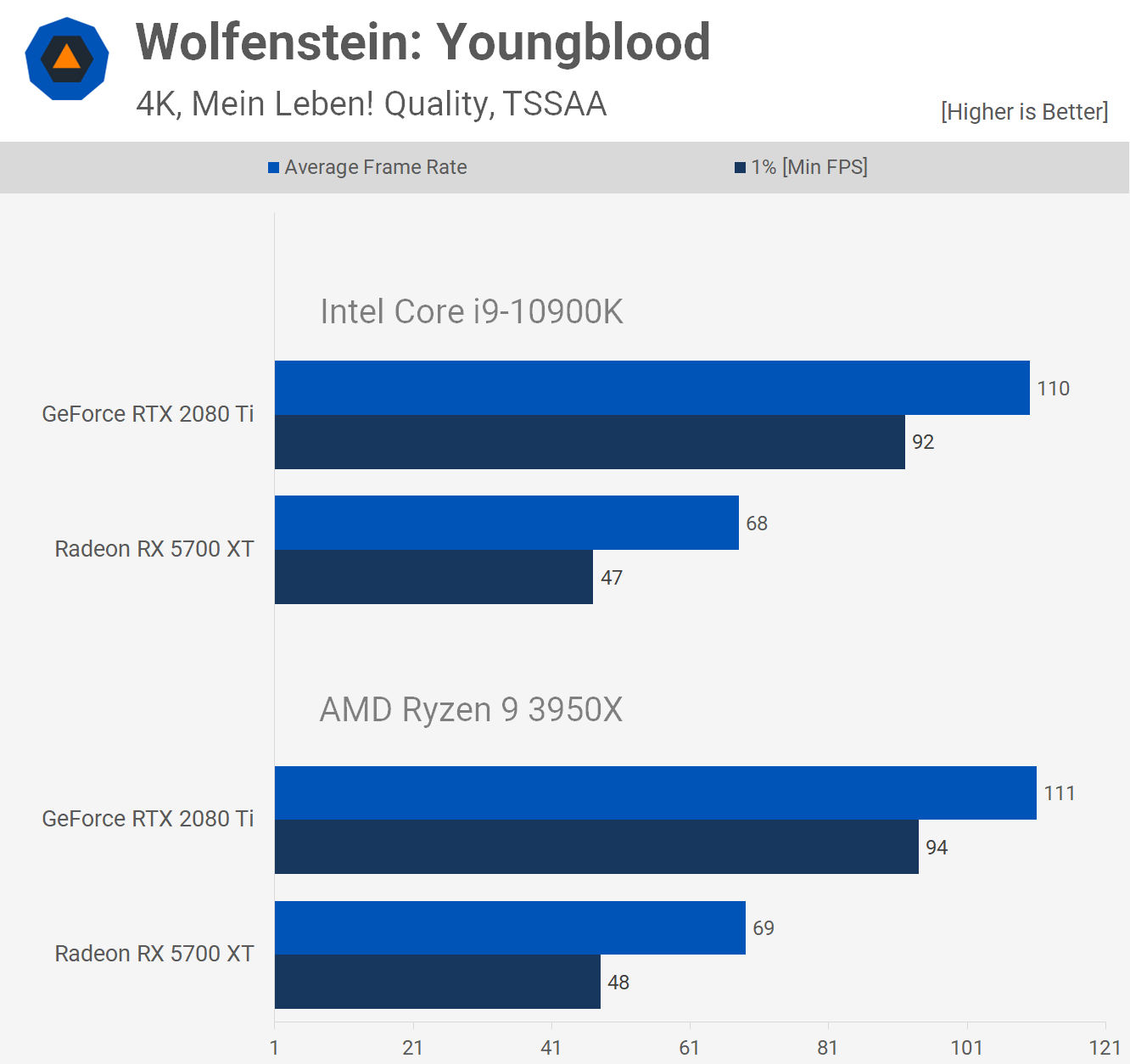

There's nothing in it at 1440p, so either CPU is able to maximize performance with the 2080 Ti. And of course, the same is true at 4K, where the CPU doesn't matter, assuming it's either a 10900K or a 3950X.

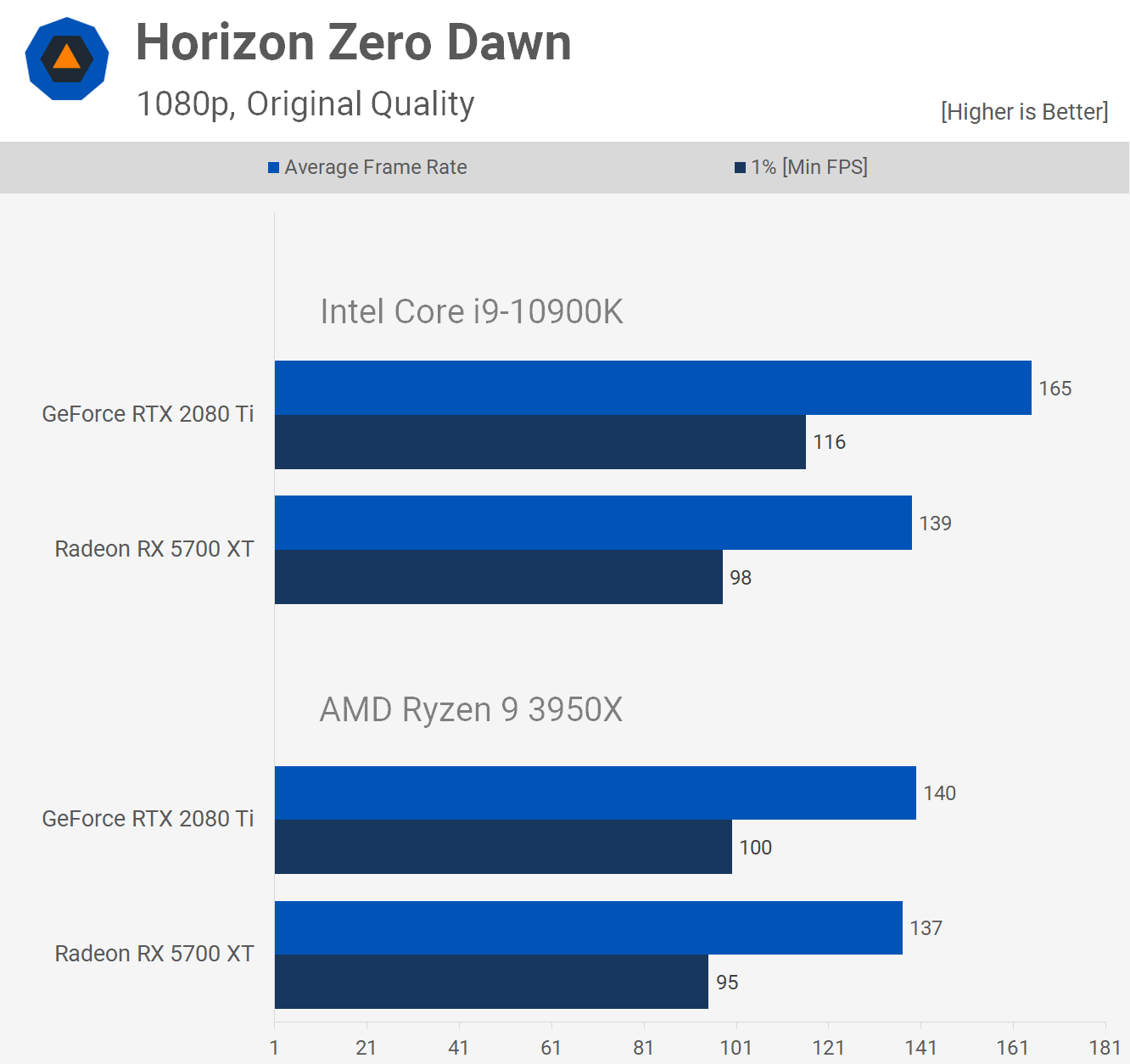

Last up we have Horizon Zero Dawn, and this game is a bit of a rollercoaster. At 1080p we find some devastating results for AMD, as the 10900K is 18% faster with the 2080 Ti, a significant margin. We see virtually no difference when using the 5700 XT; it's the 2080 Ti results that are the issue.

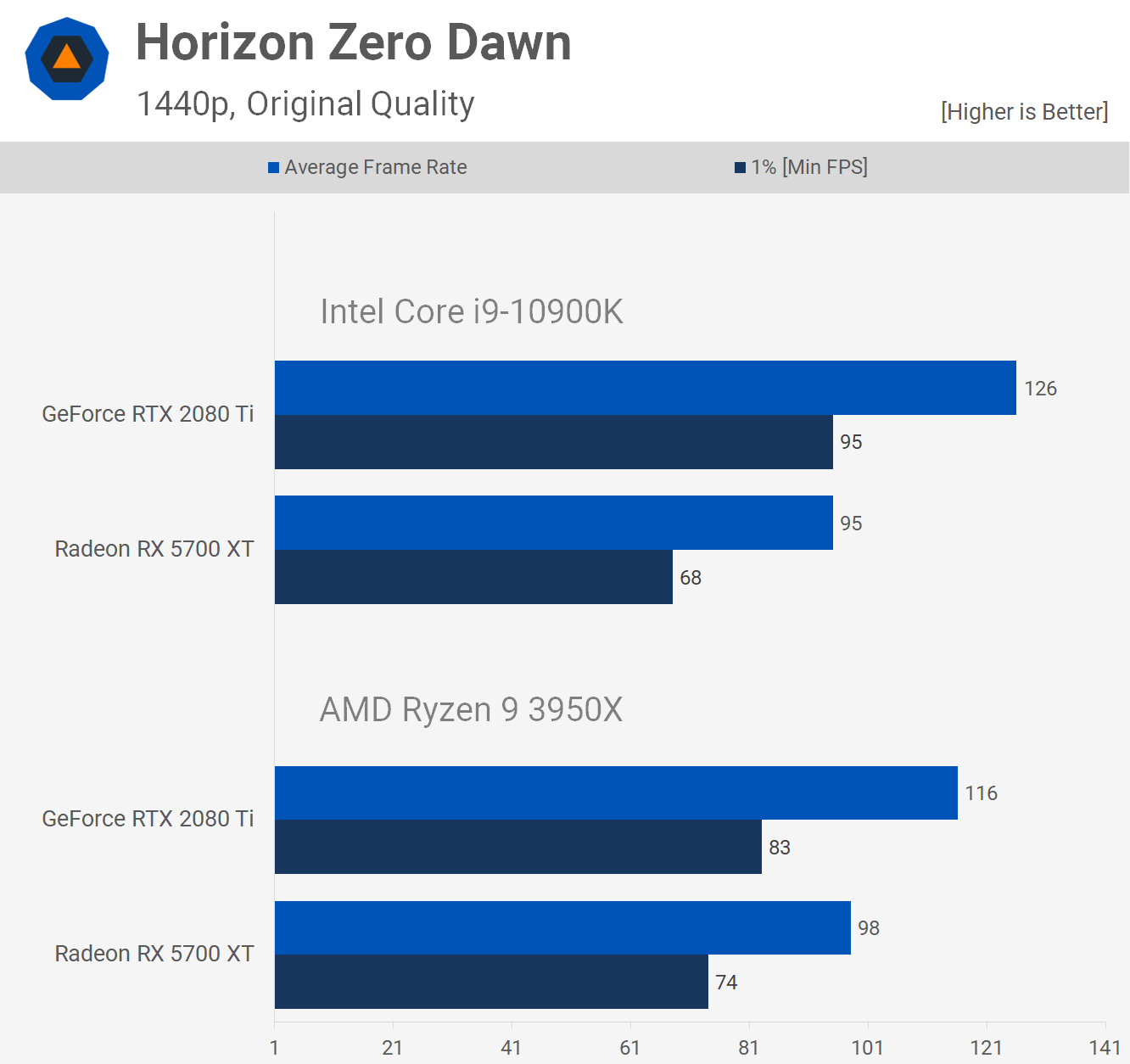

Even at 1440p the 2080 Ti is still 9% faster with the 10900K, or 14% faster if we look at 1% low performance. However, we also see something else here that's very interesting: the 5700 XT is up to 9% faster with the 3950X, though just 3% faster on average. These aren't huge margins, but the Radeon GPU was consistently faster with the 3950X, which strongly suggests the extra PCIe bandwidth is being put to use.

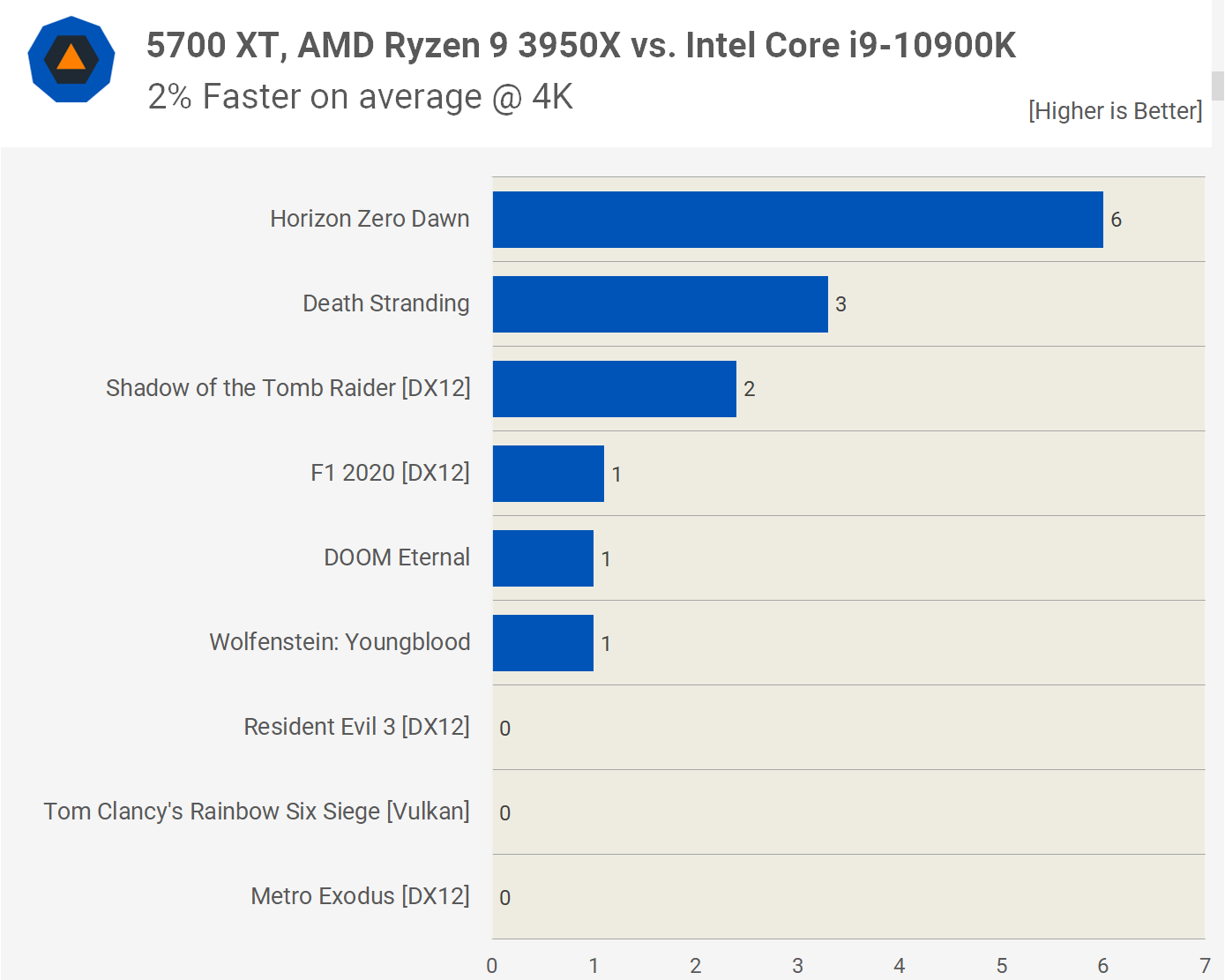

At 4K, the 10900K is just 3% faster with the 2080 Ti when comparing average frame rates, while the 3950X was 6% faster with the 5700 XT. Based on that, if the 2080 Ti supported PCIe 4.0, there's a good chance 4K performance would be slightly better with the 3950X.

Performance Summary

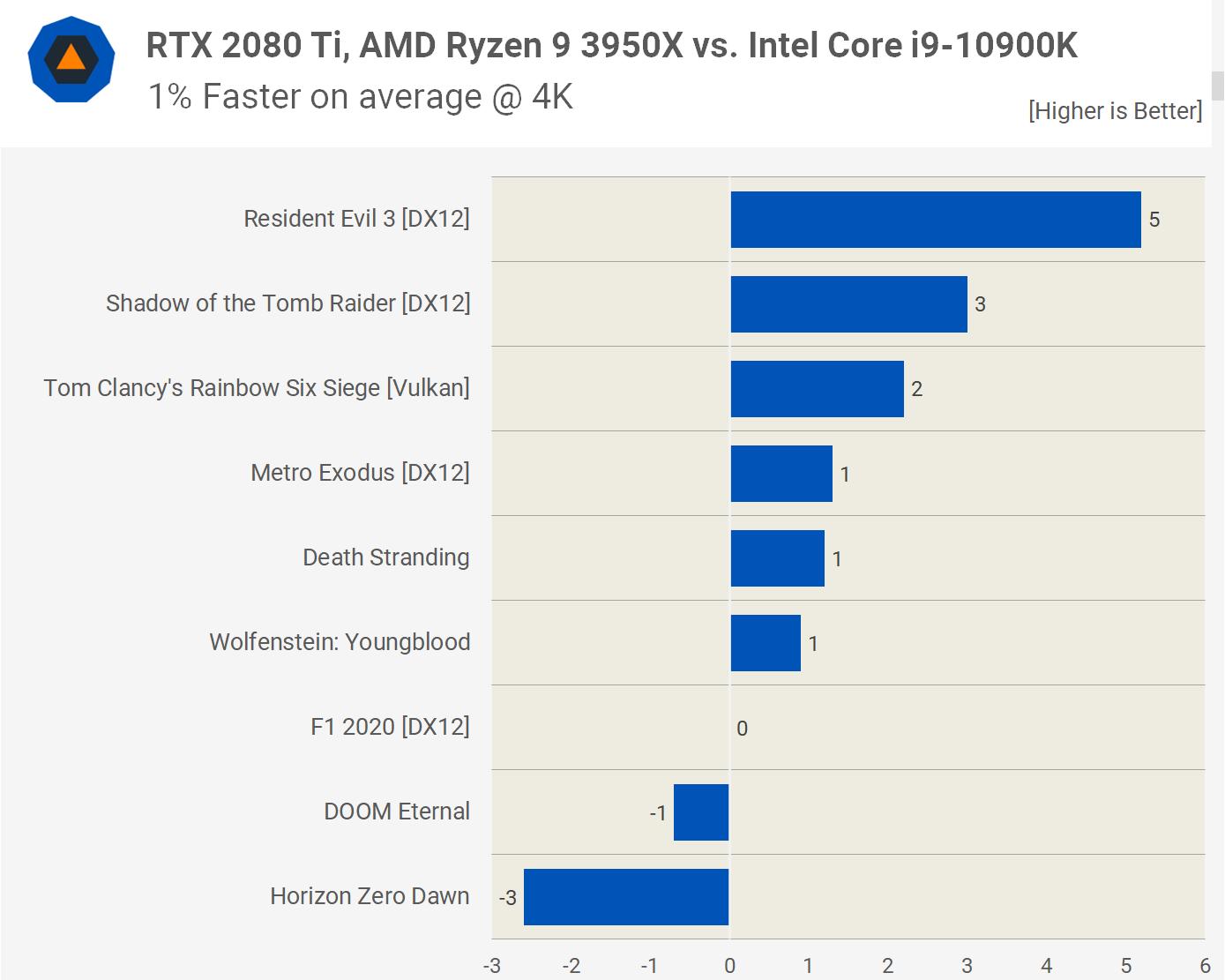

Those results weren't terribly surprising. We've benchmarked the crap out of Intel's Core series and AMD's Ryzen processors at this point, so we have a very good idea of how they compare. That said, it was interesting to see the 3950X often offering a little extra at 4K, and in a few instances we saw better performance with the 5700 XT, presumably thanks to PCIe 4.0 support. But before we get into that, let's quickly go over the data.

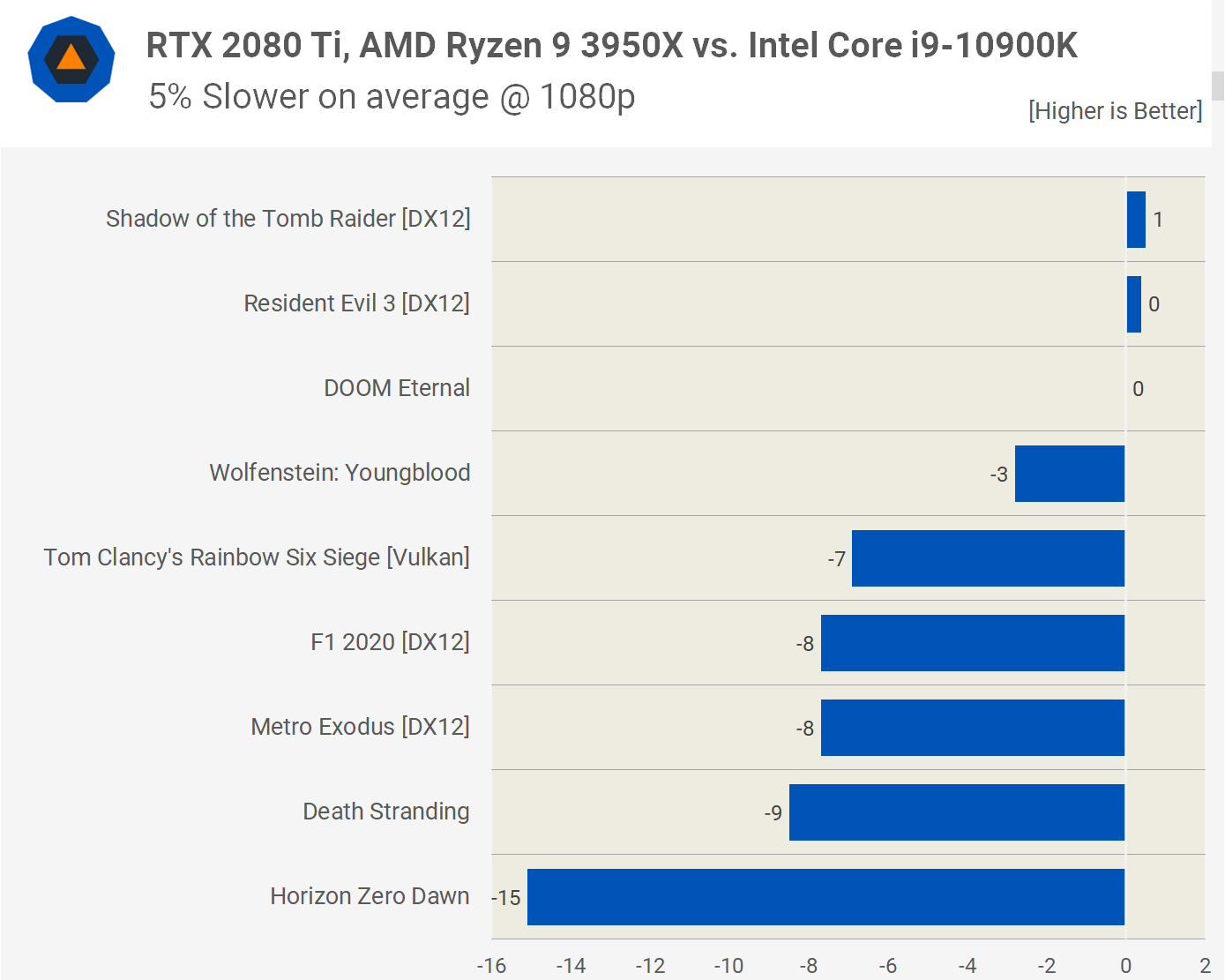

At 1080p with the 2080 Ti, the 3950X was on average 5% slower, with the vast majority of results showing single-digit margins. The biggest loss was seen in Horizon Zero Dawn, where the 3950X was 15% slower. That's an outlier, but as we saw in last year's 9900K vs 3900X comparison, there are games where this can happen at 1080p.
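Note that "X% faster" and "Y% slower" aren't interchangeable, because each is measured against a different baseline. Assuming both figures describe the same Horizon Zero Dawn result, the 10900K's 18% lead from the benchmark section works out to the 3950X being roughly 15% slower, the figure quoted here. A small helper (the name is illustrative) makes the conversion explicit:

```python
def slower_pct_from_faster(faster_pct: float) -> float:
    """If A is `faster_pct` percent faster than B, return how much slower B is than A."""
    return (1 - 1 / (1 + faster_pct / 100)) * 100


# Horizon Zero Dawn, 1080p, RTX 2080 Ti: the 10900K is quoted as 18% faster.
print(f"3950X is {slower_pct_from_faster(18):.1f}% slower")
```

This prints about 15.3%, so the two figures agree once the baseline is accounted for. The same asymmetry means a small "faster" margin and its "slower" counterpart are nearly identical, while large margins diverge noticeably.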

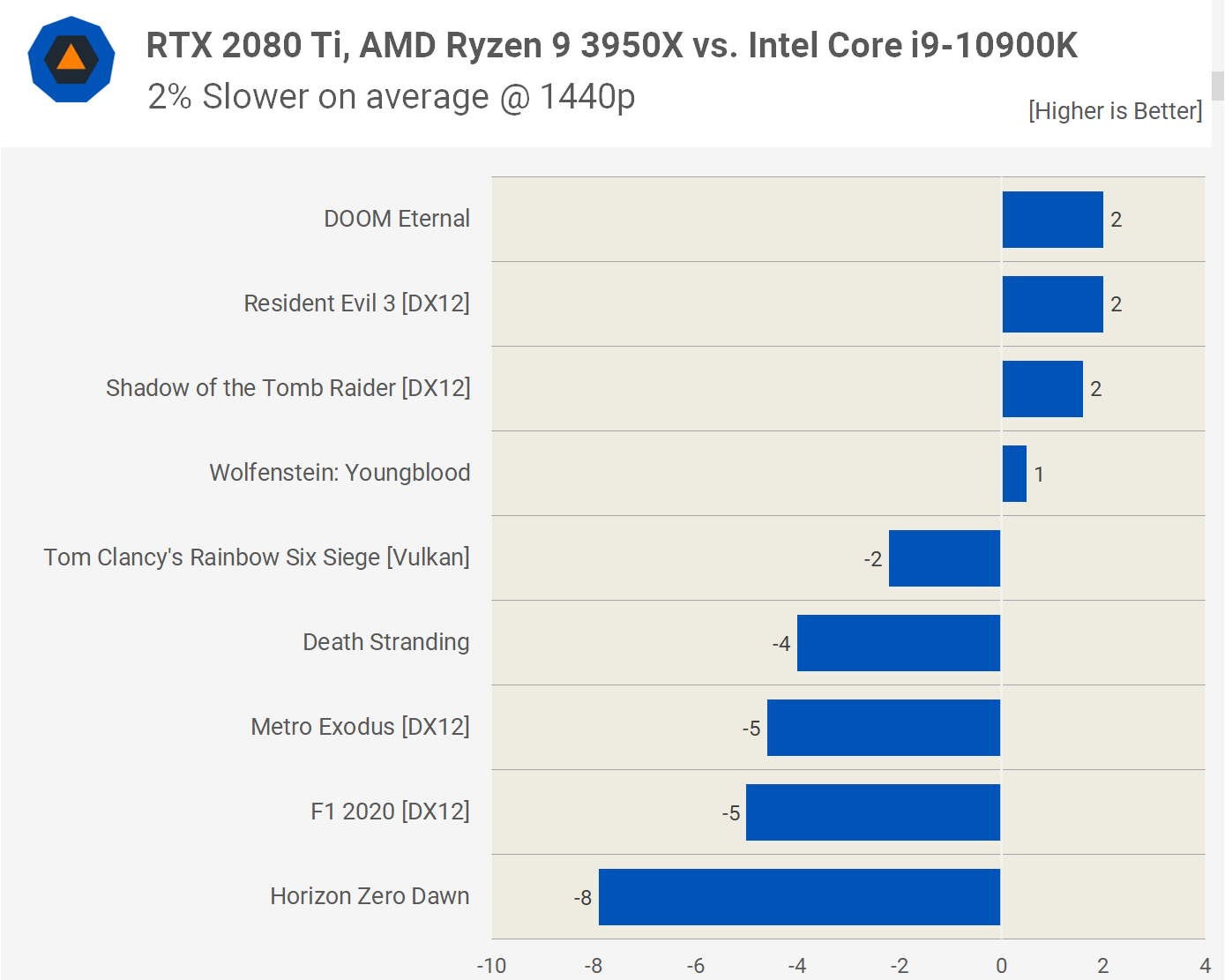

Jumping up to 1440p reduces the average margin to just 2%, and the biggest outlier is now just 8%, with the rest at 5% or less. For the most part, the difference is negligible with the current GeForce king.

Then at 4K the 3950X takes the lead, albeit by a single percent. The biggest win was seen in Resident Evil 3, where the Ryzen CPU offered a small 5% boost. That's a meaningless difference in the grand scheme, but it does mean that when testing at 4K it doesn't matter which of these two CPUs is used. Even if next-gen GPUs are 50% faster, that won't really change much.
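The reasoning here can be illustrated with a deliberately simplified bottleneck model, in which the frame rate is capped by whichever of the CPU or GPU is slower. The caps and the `observed_fps` helper below are hypothetical. Real games don't behave as a strict minimum, since the load shifts from frame to frame, which is one reason small CPU-dependent margins can still appear. But the model shows why a 50% faster GPU leaves the CPU choice irrelevant, as long as both CPUs can push more frames than the faster GPU.

```python
def observed_fps(cpu_limit_fps: float, gpu_limit_fps: float) -> float:
    """Simplified bottleneck model: the slower component caps the frame rate."""
    return min(cpu_limit_fps, gpu_limit_fps)


# Hypothetical caps for illustration only; not measured data.
cpu_caps = {"CPU A": 150.0, "CPU B": 140.0}
gpu_cap_4k = 60.0

for scale in (1.0, 1.5):  # today's GPU vs a hypothetical GPU 50% faster
    gpu = gpu_cap_4k * scale
    results = {name: observed_fps(cap, gpu) for name, cap in cpu_caps.items()}
    print(f"GPU x{scale}: {results}")
```

Both CPUs report the same frame rate in each case (60 fps, then 90 fps). Only once the GPU cap rises above 140 fps would CPU B become the limit and the two start to separate, which is closer to the 1080p situation in several of the games above.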

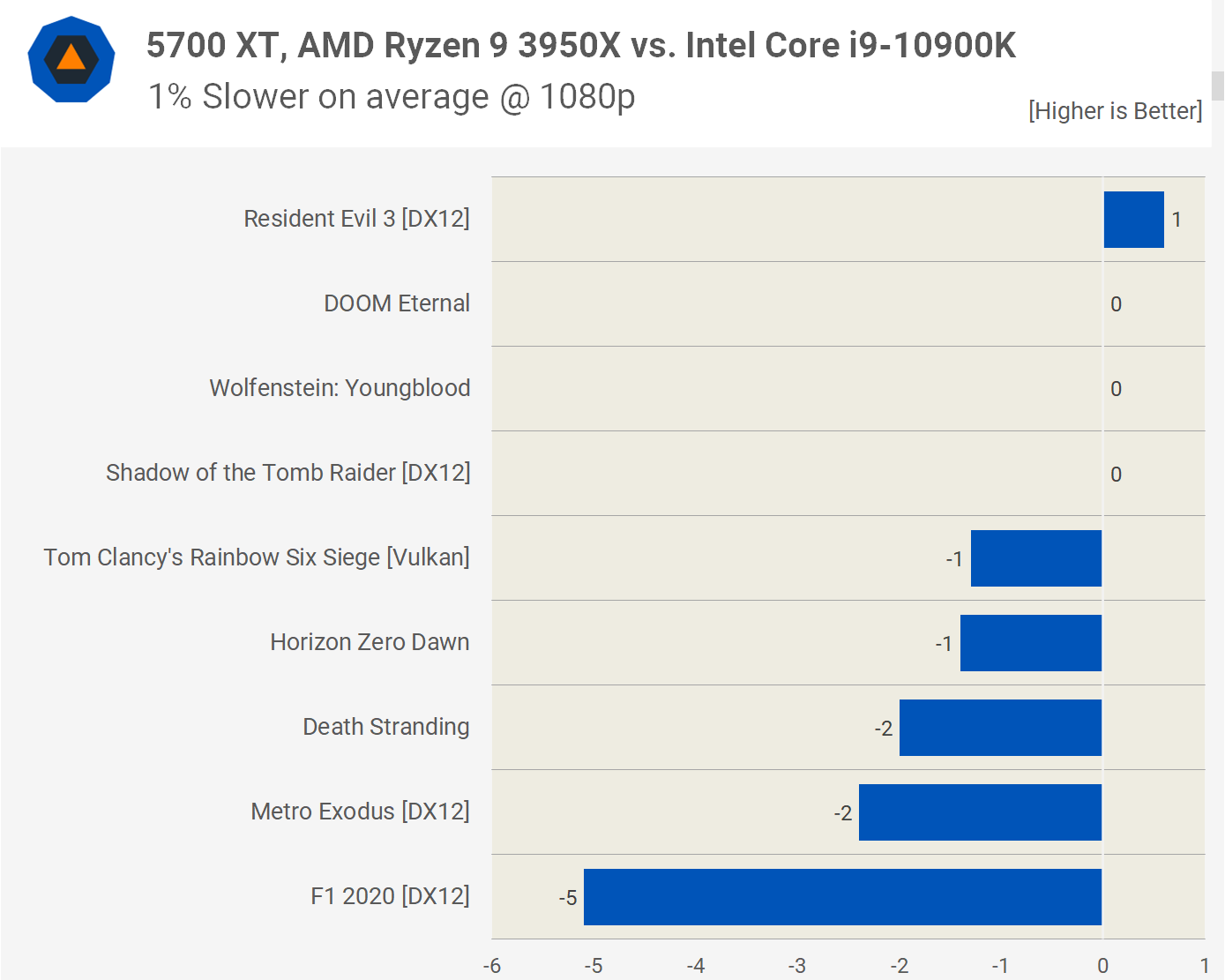

With a mid-range GPU like the 5700 XT, we see little to no difference between these two CPUs, even at 1080p. The biggest margin was seen in F1 2020, where the 3950X was 5% slower; outside of that we're talking 1-2% at most.

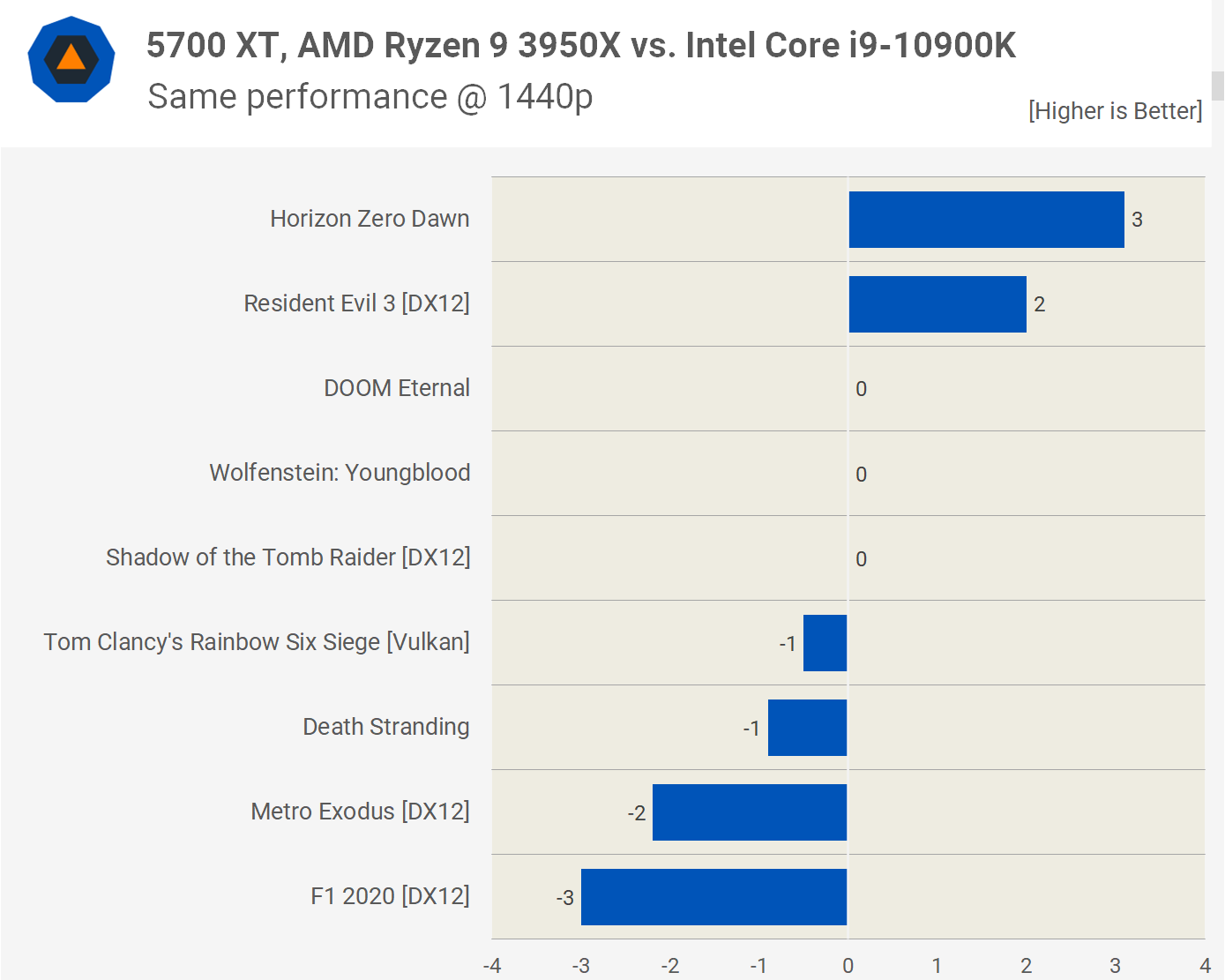

At 1440p we see identical performance on average: the 3950X was up to 3% faster in Horizon Zero Dawn and up to 3% slower in F1 2020.

Once again, it's the 4K results that are the most interesting: there wasn't a single instance where the 3950X was slower than the 10900K. For the most part we saw a minimal 1-2% difference, which is within the margin of error. Horizon Zero Dawn goes beyond that, though, and it looks like the extra bandwidth PCIe 4.0 offers is helping boost the performance of a mid-range GPU, which is surprising to see.

What We Learned

When you consider that the Ryzen 9 3950X was just 2% slower on average at 1440p, it's not a stretch to say the margin could shrink to nothing, or even swing a few percent in AMD's favor, with a more powerful GPU supporting PCIe 4.0, especially when testing the latest and greatest games.

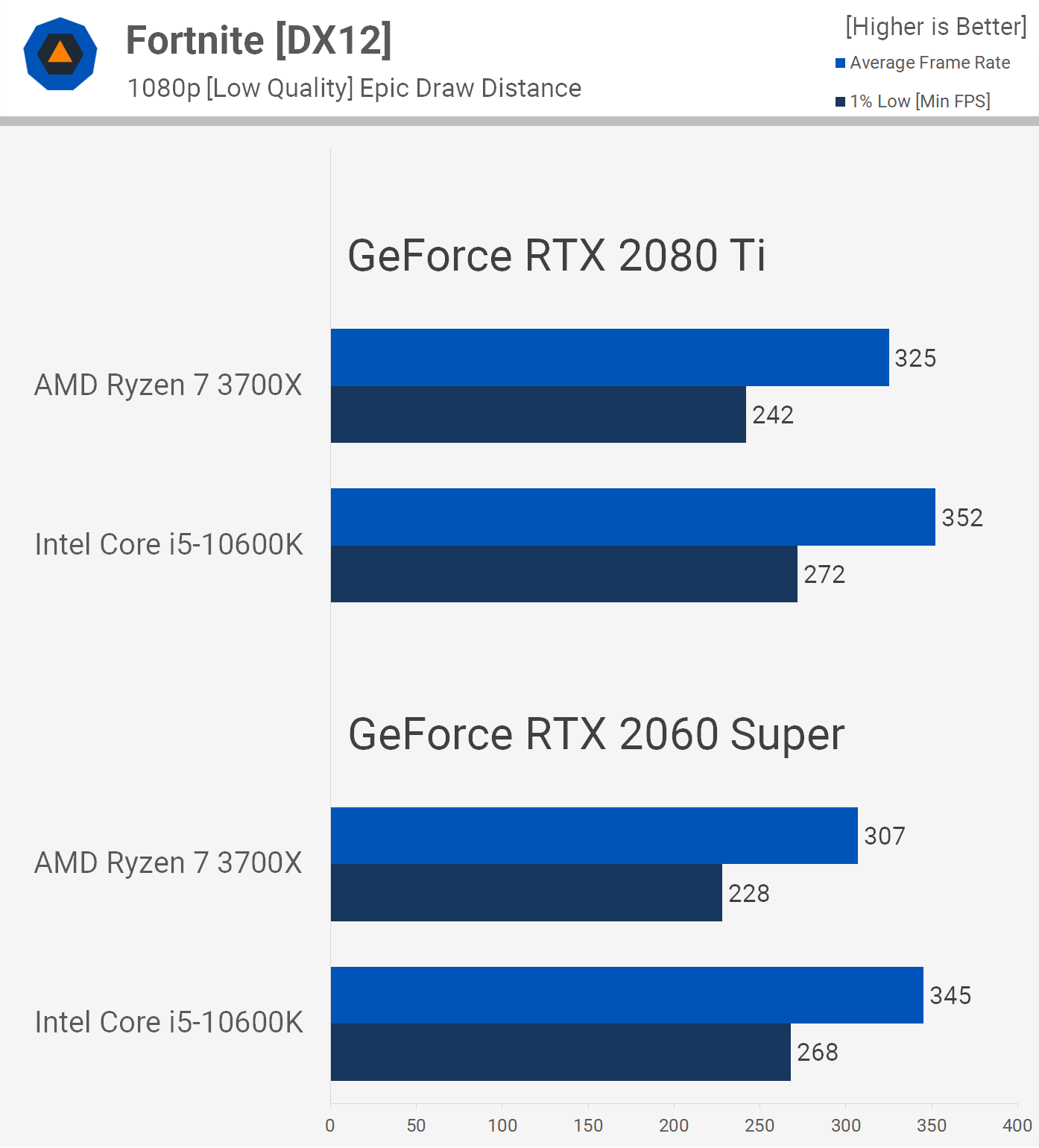

For new GPU releases we're not interested in testing games such as StarCraft II, World of Tanks, Rocket League, Fortnite, CSGO and so on. They're not exactly cutting-edge titles, so they won't push next-gen hardware to its limits; get an RTX 2060 if that's all you want to play. Games we'd like to add to the list of titles tested here include Microsoft's Flight Simulator 2020 and Cyberpunk 2077, for example.

As a side note, if you do care about performance in esports titles and want to know which CPU to get, we have an entire series dedicated to that testing, and it's true: Intel does have an advantage there for those seeking maximum performance.

But it's unlikely upcoming AAA titles will be heavily CPU-bottlenecked with either the i9-10900K or the 3950X at 1440p and beyond, and as we said, there's a chance PCIe 4.0 could come into play. We're not banking on it, though.

On that note, what further lures us towards the 3950X is that few, or maybe even no, other reviewers will use it for testing next-gen GPUs. Breaking away from the norm can be risky, but the 83% of you who voted for it give us the confidence to give it a shot. Whichever way we go, there's a good chance that before long we'll throw all that data away and start over once Zen 3 arrives (if Zen 3 is as good as we think it's going to be), which would make this decision much, much easier.

Do let me know what you think about this data. Was any of it surprising, and ultimately what do you think we should do? Ditch Intel in favor of AMD, or stick with our current Core i9-10900K test system?