Adaptive sync display technologies from Nvidia and AMD have been on the market for a few years now, but only recently have they gone mainstream, with more gamers taking the plunge thanks to a wide selection of monitors across a range of budgets. Initially, Nvidia's G-Sync and AMD's FreeSync differed significantly in implementation and user experience, but now that both technologies and their ecosystems have matured, it's a good opportunity to revisit them and see where the differences lie in mid-2017.

Technology

For those who haven't been keeping up with adaptive sync, here's a quick refresher on what it brings to the table. Traditional monitors (without adaptive sync) have a fixed refresh rate, which means the display updates its image at the same interval regardless of what your PC is doing. For 60 Hz monitors, the image is always updated every 1/60th of a second.

The issue with a fixed refresh rate is that when you're playing games, your graphics card isn't always outputting frames at the same interval as your monitor's refresh rate. Occasionally you may hit a locked 60 FPS, which produces a frame every 1/60th of a second to match a 60 Hz monitor's refresh, but frame rate fluctuations are far more common. For example, if you're playing at 45 FPS, your graphics card produces a frame every 22.2 ms while your 60 Hz display updates every 16.7 ms.

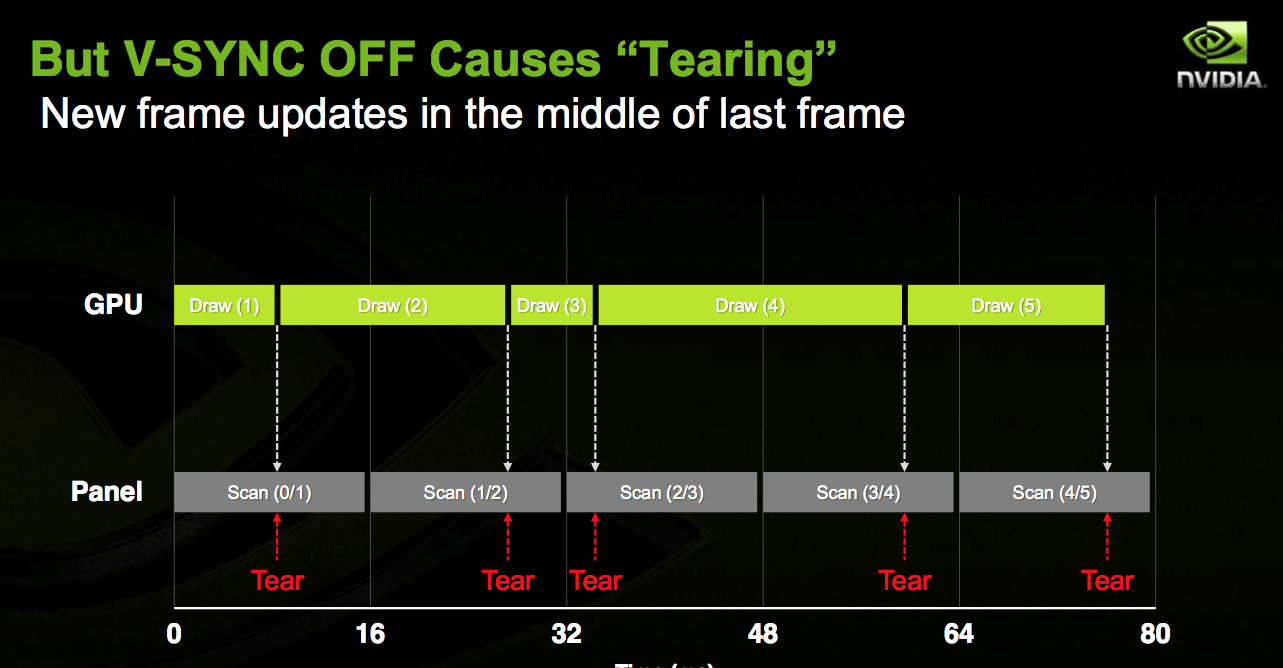
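The 22.2 ms and 16.7 ms figures come from simple arithmetic: the interval between frames (or refreshes) is 1,000 ms divided by the rate. The short Python sketch below just makes that conversion explicit; `frame_interval_ms` is an illustrative helper name, not part of any driver or library.

```python
def frame_interval_ms(rate_hz: float) -> float:
    """Time between consecutive frames (or refreshes) in milliseconds."""
    return 1000.0 / rate_hz

gpu_ms = frame_interval_ms(45)      # graphics card rendering at 45 FPS
display_ms = frame_interval_ms(60)  # fixed 60 Hz monitor

print(f"GPU delivers a frame every {gpu_ms:.1f} ms")   # 22.2 ms
print(f"Display refreshes every {display_ms:.1f} ms")  # 16.7 ms
```

Because 22.2 ms is not a whole multiple of 16.7 ms, new frames keep arriving at different points within the display's refresh cycle.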

This sort of mismatch results in one of two things. With v-sync off, you'll get screen tearing: a new frame may become ready halfway through the display's refresh, so parts of two different frames are shown at once.

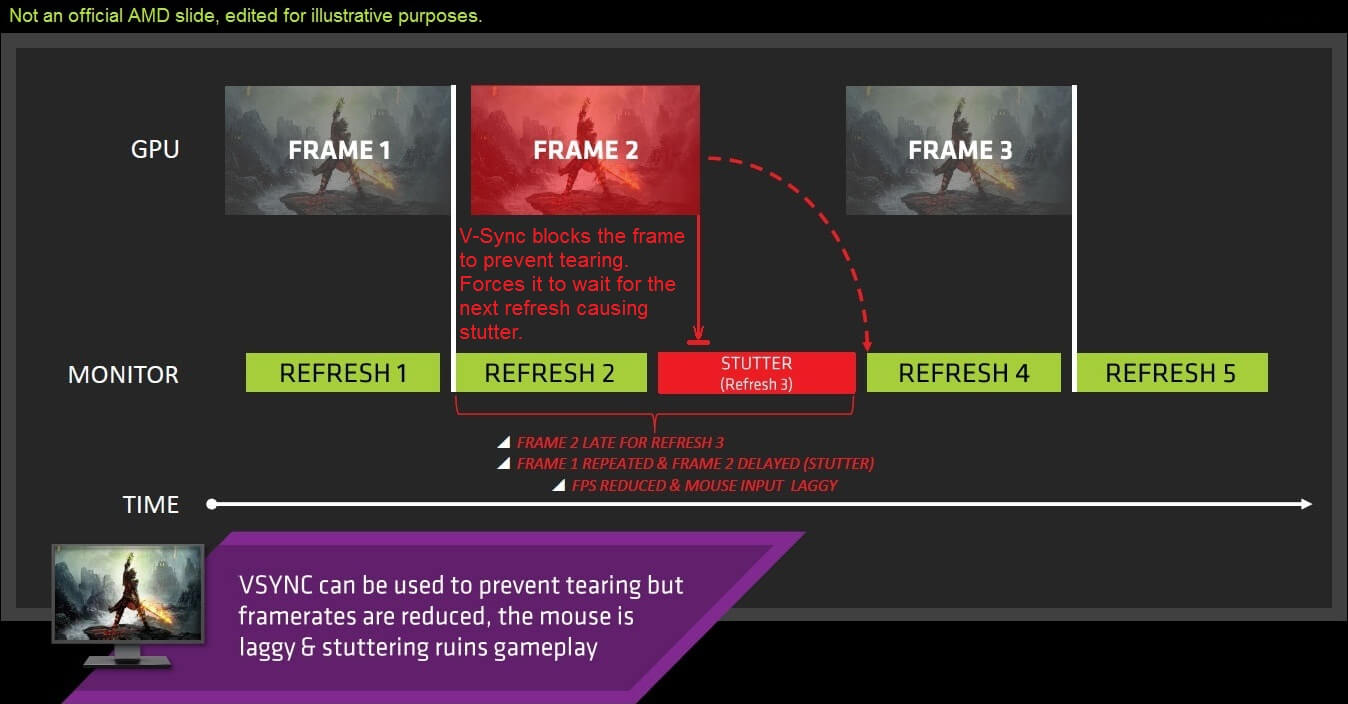
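To see why the tear line looks so erratic, here is a minimal Python sketch of v-sync-off presentation under idealized assumptions: the panel scans out its 1,080 rows top to bottom over the full refresh interval (the blanking interval is ignored), the GPU delivers frames at a perfectly steady rate, and each finished frame is swapped in immediately. The function name `tear_rows` and its parameters are hypothetical, chosen for this illustration rather than taken from any driver API.

```python
from fractions import Fraction

def tear_rows(fps: int, hz: int, height: int = 1080, frames: int = 6):
    """Row where each new frame tears into the scanout, or None if it lands exactly on a refresh boundary."""
    frame_ms = Fraction(1000, fps)   # exact arithmetic avoids float rounding at boundaries
    refresh_ms = Fraction(1000, hz)
    rows = []
    for k in range(1, frames + 1):
        ready = k * frame_ms                        # when the k-th new frame is swapped in
        phase = (ready % refresh_ms) / refresh_ms   # fraction of the current scanout already drawn
        rows.append(None if phase == 0 else round(phase * height))
    return rows

print(tear_rows(45, 60))  # [360, 720, None, 360, 720, None]
```

At a steady 45 FPS on a 60 Hz panel, the tear appears a third of the way down the screen, then two thirds, then lines up with a refresh boundary, and the cycle repeats. With real, fluctuating frame times the tear position wanders unpredictably.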

Screen tearing is ugly, jarring and annoying during gameplay. Switching v-sync on solves screen tearing, as it forces each frame to wait until the display is ready to refresh, but it often leads to noticeable stuttering if your frame rate is fluctuating below the display's refresh rate.

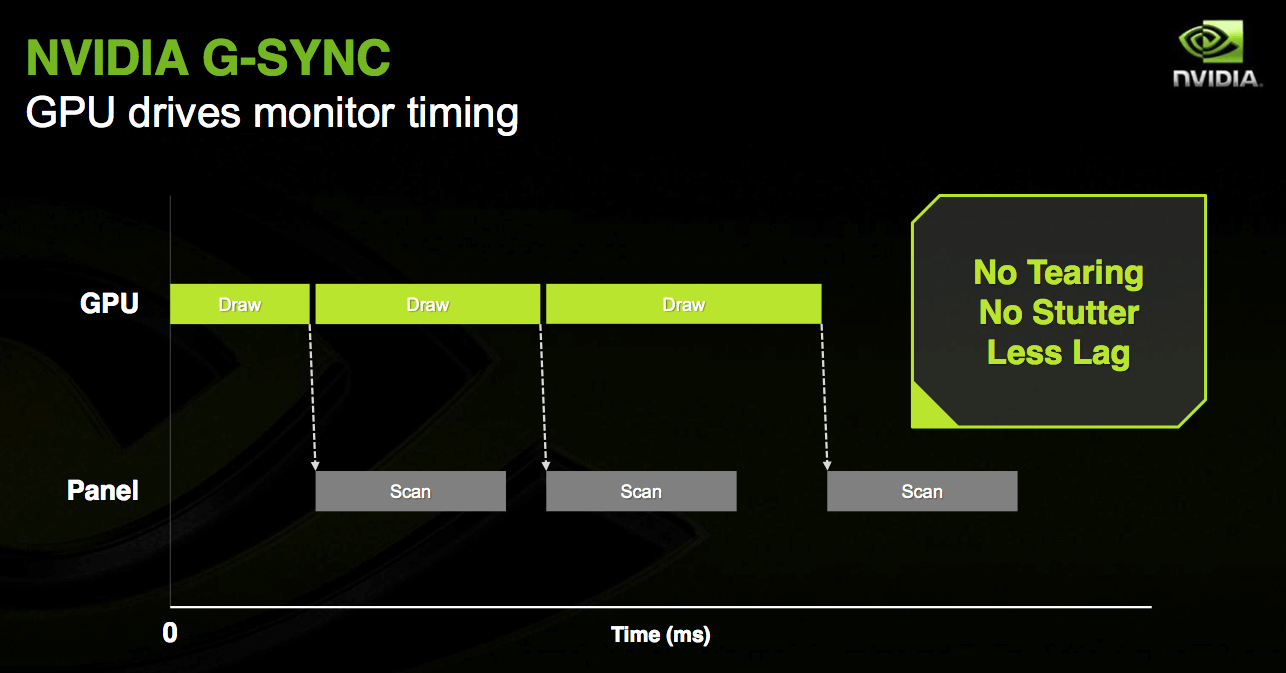
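The stutter can be reproduced with a similarly simplified model. This sketch assumes the GPU keeps rendering at a steady 45 FPS regardless of when frames are displayed (as it would with a spare back buffer) and that v-sync shows each finished frame at the next refresh boundary. With strict double buffering the GPU would stall instead, which changes the numbers. `vsync_hold_times_ms` is a hypothetical helper written only for this illustration.

```python
import math
from fractions import Fraction

def vsync_hold_times_ms(fps: int, hz: int, frames: int = 7):
    """How long each frame stays on screen when every frame must wait for the next refresh."""
    frame_ms = Fraction(1000, fps)
    refresh_ms = Fraction(1000, hz)
    shown_at = []
    for k in range(frames):
        ready = k * frame_ms
        # v-sync: the frame is displayed at the first refresh boundary at or after it is ready
        shown_at.append(math.ceil(ready / refresh_ms) * refresh_ms)
    # each frame is held on screen until the next one replaces it
    return [float(nxt - cur) for cur, nxt in zip(shown_at, shown_at[1:])]

print([round(t, 1) for t in vsync_hold_times_ms(45, 60)])
# [33.3, 16.7, 16.7, 33.3, 16.7, 16.7]
```

The average hold time still works out to 22.2 ms, i.e. 45 FPS, but individual frames alternate between one and two refreshes on screen. That uneven cadence is what registers as stutter.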

The solution to these issues is adaptive sync, which informs the display when to refresh based on the frame rate produced by the GPU. If your game is running at 45 FPS, adaptive sync tells the monitor to refresh at 45 Hz. If the game jumps up to 57 FPS, adaptive sync makes the monitor refresh at 57 Hz. This creates a dynamic monitor refresh rate that's synced to the GPU output rate, eliminating screen tearing and stuttering, leading to a smoother and more enjoyable gaming experience.

The improvement is especially noticeable in the 40 to 60 FPS range, where adaptive sync often makes lower frame rates feel nearly as smooth as 60 FPS on a 60 Hz monitor without adaptive sync. At higher refresh rates (above 60 Hz), the benefit of adaptive sync is smaller, though the technology still helps remove the screen tearing and stutter caused by frame rate fluctuations.

The implementation of adaptive sync differs between FreeSync and G-Sync. FreeSync uses the VESA Adaptive-Sync standard, an optional component of DisplayPort 1.2a, along with a variety of off-the-shelf display scalers that support it.

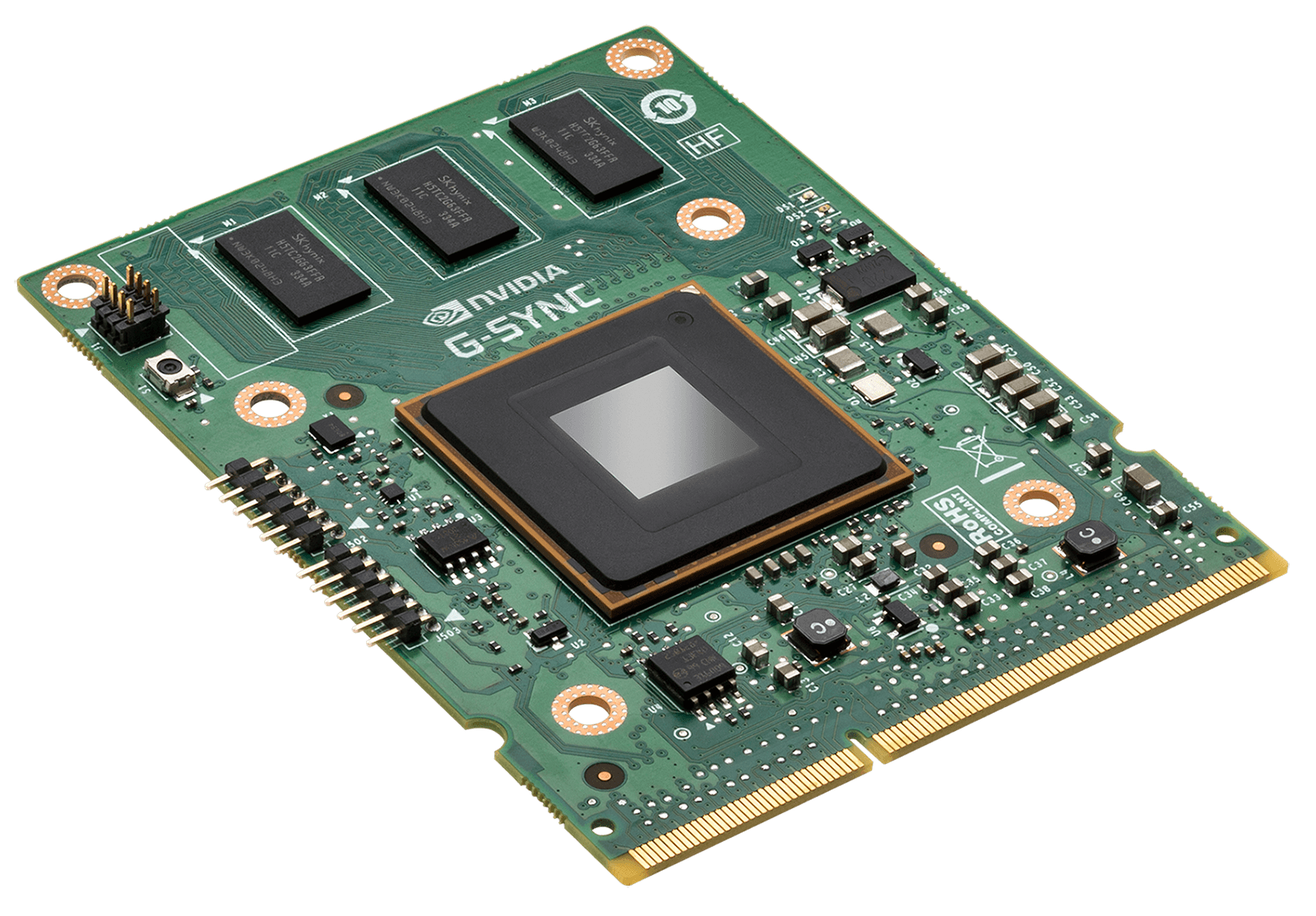

G-Sync uses a proprietary module from Nvidia in place of the usual display scaler, though it also communicates over DisplayPort. The proprietary module along with the closed nature of the G-Sync platform makes it more expensive to implement than FreeSync, which I'll explore in more detail later.

Feature Differences

Both G-Sync and FreeSync provide the key features of adaptive sync, but due to differences in implementation, there are some feature differences as well.

As G-Sync monitors use a proprietary scaler module, most displays are limited to just DisplayPort and HDMI for connectivity, with only DisplayPort supporting adaptive sync. FreeSync uses standard display scalers, so FreeSync monitors often have many more connectivity options than their G-Sync counterparts, including multiple HDMI ports and legacy connectors such as DVI and even VGA.

FreeSync has another connectivity advantage through a feature called FreeSync over HDMI. As the name suggests, AMD has managed to get adaptive sync working over standard HDMI connectors and cables, provided both the GPU and monitor support the feature.

There are a few benefits to running adaptive sync over HDMI rather than DisplayPort. HDMI cables are cheaper than DisplayPort cables, and devices with limited room for ports (such as laptops) can use the more widely adopted HDMI standard for compatibility with other displays without losing support for adaptive sync.

G-Sync's proprietary module has its advantages, too. It adjusts monitor overdrive on the fly to minimize ghosting wherever possible, which has previously been shown to reduce ghosting compared to FreeSync displays. Driver and monitor tweaks over the past few years have improved FreeSync displays in this regard, though.

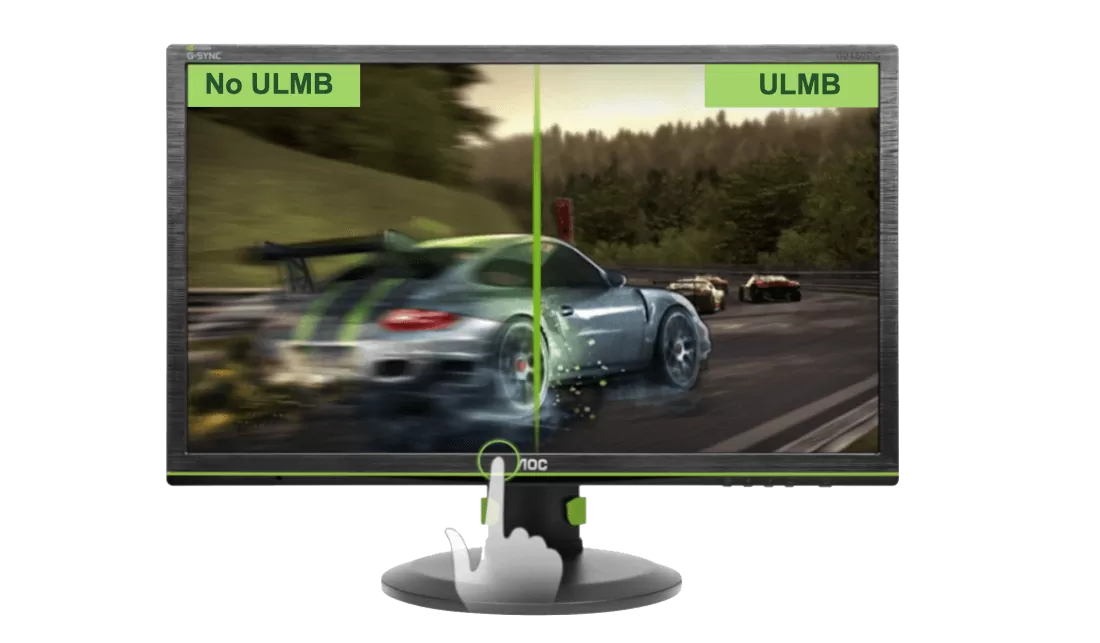

Nvidia has integrated a feature called Ultra Low Motion Blur (ULMB) into every G-Sync monitor, which strobes the backlight in sync with the display's refresh rate to reduce motion blur and improve clarity in high-motion situations. The feature works at high fixed refresh rates, typically at or above 85 Hz, though it does come with a small brightness reduction.

The main downside to ULMB is that it can't be used in conjunction with G-Sync. In other words, you need to choose between variable refresh rates without stuttering and tearing, or high clarity and low motion blur. Most people will prefer to use G-Sync for the smoothness it provides, while esports enthusiasts will love ULMB for its responsiveness and clarity at the expense of tearing.

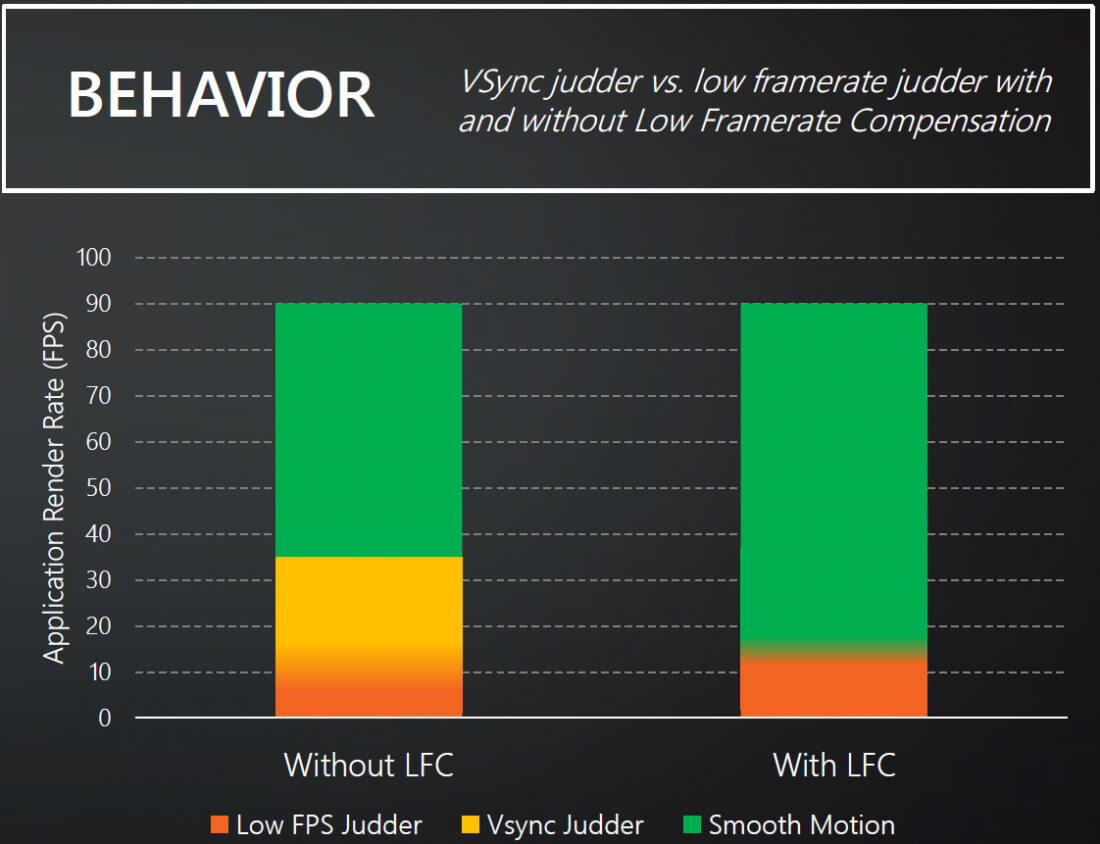

Low framerate compensation (LFC) is another point of difference between G-Sync and FreeSync. Every adaptive sync monitor has a refresh rate window, for example 30 to 144 Hz, within which the refresh rate can dynamically adjust to the GPU's render rate. What happens between 0 Hz and the display's minimum refresh rate (30 Hz in my example) depends on whether the monitor supports LFC.

Monitors that support LFC show each frame multiple times when the frame rate drops below the display's minimum, multiplying the refresh rate back into the window so variable refresh keeps working. For example, when a game runs at 20 FPS on a 30 to 144 Hz adaptive sync monitor with LFC, every frame is shown twice and the monitor operates at 40 Hz, within its refresh window. Monitors without LFC would run at 30 Hz with either tearing or stuttering, depending on the v-sync setting.
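The frame-repetition idea can be sketched in a few lines of Python. This is an illustration under a stated assumption, not AMD's or Nvidia's actual driver algorithm: it simply picks the smallest whole number of repeats that lifts the refresh rate back into the window. The 30 to 144 Hz window matches the example in the text; the 48 to 60 Hz window is hypothetical, chosen to show how a narrow window leaves no room for a doubled refresh rate. `lfc_refresh` is an illustrative name.

```python
import math
from typing import Optional, Tuple

def lfc_refresh(fps: int, min_hz: int, max_hz: int) -> Optional[Tuple[int, int]]:
    """Return (times each frame is shown, resulting refresh rate in Hz), or None if
    variable refresh cannot cover this frame rate."""
    if fps > max_hz:
        return None                     # above the window: the v-sync setting decides
    if fps >= min_hz:
        return 1, fps                   # inside the window: refresh simply tracks the frame rate
    repeats = math.ceil(min_hz / fps)   # smallest multiple that reaches the window's minimum
    if fps * repeats > max_hz:
        return None                     # window too narrow for any multiple to fit
    return repeats, fps * repeats

print(lfc_refresh(20, 30, 144))  # (2, 40)
print(lfc_refresh(35, 48, 60))   # None
```

The second call shows why a narrow refresh window rules out LFC: doubling 35 FPS gives 70 Hz, which overshoots a 60 Hz maximum.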

LFC is extremely important on monitors with high minimum refresh rates, such as 48 Hz. LFC on these monitors allows the variable refresh window to extend into the crucial 30 to 48 Hz zone and function as if the monitor has no minimum refresh rate. Without LFC on these monitors, there is a jarring effect when frame rates fluctuate in the 40 to 55 FPS zone, as variable refresh is continually activating and deactivating at the 48 FPS boundary. LFC is crucial for the best adaptive sync experience.
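The on/off effect around a 48 Hz minimum can be illustrated by counting how often variable refresh engages and disengages as the frame rate wobbles. The frame rate trace and the 48 to 144 Hz window below are hypothetical, and the LFC rule assumed here simply repeats each frame the smallest whole number of times that lifts the refresh rate back into the window. The function names are illustrative only.

```python
import math

def vrr_engaged(fps: int, min_hz: int, max_hz: int, lfc: bool) -> bool:
    """Whether variable refresh can stay active at this frame rate."""
    if fps > max_hz:
        return False
    if fps >= min_hz:
        return True
    # below the window, only LFC can keep variable refresh running, by repeating frames
    return lfc and fps * math.ceil(min_hz / fps) <= max_hz

def count_toggles(fps_trace, min_hz, max_hz, lfc):
    """Number of times variable refresh switches on or off across the trace."""
    states = [vrr_engaged(f, min_hz, max_hz, lfc) for f in fps_trace]
    return sum(1 for prev, cur in zip(states, states[1:]) if prev != cur)

trace = [52, 47, 50, 45, 49, 44, 53]  # hypothetical frame rates hovering around 48 FPS
print(count_toggles(trace, 48, 144, lfc=False))  # 6
print(count_toggles(trace, 48, 144, lfc=True))   # 0
```

Without LFC, every dip below 48 FPS drops out of variable refresh and every recovery re-enters it; with LFC the display never leaves variable refresh for this trace.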

Every G-Sync monitor supports LFC, so when buying a G-Sync display it's not something you have to worry about. FreeSync is a different story, as only some FreeSync monitors, mostly high-end ones, support LFC. You'll need to consult AMD's display list to check whether a FreeSync monitor on your radar supports LFC, whereas it's a known quantity with every G-Sync display.

Some of the initial teething issues with both adaptive sync technologies have been resolved now. V-sync works the same in both FreeSync and G-Sync, with v-sync controls only affecting how frames are displayed outside the variable refresh window. Borderless window gaming with adaptive sync is also supported now by both FreeSync and G-Sync, although AMD's implementation appears to be a bit dodgy in some situations.

As for graphics card support, FreeSync requires a 'Sea Islands' Radeon Rx 200 series card from 2013 or newer, while G-Sync requires a 'Kepler' GeForce 600 series card from 2012 or newer. G-Sync doesn't work on AMD graphics cards, and FreeSync doesn't work on Nvidia graphics cards, as has always been the case.

The main takeaway from looking at a range of G-Sync and FreeSync displays is that G-Sync is a known quantity, whereas FreeSync monitors vary significantly in quality. Essentially every G-Sync monitor is a high-end unit with gaming-oriented features, a wide refresh window, and support for LFC and ULMB. In other words, when purchasing a G-Sync monitor you can be sure you're getting the best variable refresh experience and a great monitor in general.

With FreeSync, some monitors are gaming-focused with high-end features and support for LFC, but many aren't and are geared more towards everyday office use than gaming. Potential buyers will need to research FreeSync monitors more carefully than G-Sync equivalents to ensure they're getting a good monitor with all the features necessary for the best variable refresh experience.

Pricing

Pricing is one of the most contentious issues with FreeSync versus G-Sync, as Nvidia charges a hefty premium for the use of its proprietary module. I've researched a number of near-identical FreeSync and G-Sync monitors to examine the price differences, and here are the results.

| Monitor type | FreeSync model | FreeSync price | G-Sync model | G-Sync price |
| --- | --- | --- | --- | --- |
| 24" 1080p 144 Hz | AOC G2460PF | $249 | AOC G2460PG | $449 |
| 24" 1080p 240 Hz TN | ViewSonic XG2530 | $449 | Acer Predator XB252Q | $549 |
| 27" 1440p 144 Hz IPS | Acer XF270HU | $599 | Acer Predator XB271HU (OC to 165 Hz) | $799 |
| 27" 4K 60 Hz IPS | Acer H277HK | $649 | Acer Predator XB271HK | $879 |
| 34" 1440p 21:9 100 Hz VA | Philips 349X7 | $899 | AOC AG352UCG | $1099 |
| 35" 1080p 21:9 144 Hz VA | Acer XZ350CU | $599 | Acer Predator Z301C (OC to 200 Hz) | $799 |

Looking at near-identical monitors from the same manufacturer, G-Sync adds $200 to the MSRP over the FreeSync model in most cases. When looking across brands, the margin can be as low as $100, but it often hovers near the $200 mark. For the six monitor types I researched, the average price difference between the most similar models was $188.
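The $188 average is easy to verify from the six price pairs in the table above. This Python snippet only recomputes each G-Sync premium and their mean from those listed MSRPs; it adds no new data.

```python
# (FreeSync MSRP, G-Sync MSRP) in USD for each monitor type, taken from the table above
prices = {
    '24" 1080p 144 Hz': (249, 449),
    '24" 1080p 240 Hz TN': (449, 549),
    '27" 1440p 144 Hz IPS': (599, 799),
    '27" 4K 60 Hz IPS': (649, 879),
    '34" 1440p 21:9 100 Hz VA': (899, 1099),
    '35" 1080p 21:9 144 Hz VA': (599, 799),
}

premiums = [gsync - freesync for freesync, gsync in prices.values()]
average = sum(premiums) / len(premiums)

print(premiums)        # [200, 100, 200, 230, 200, 200]
print(round(average))  # 188
```

The premiums range from $100 (the cross-brand 240 Hz pair) to $230 (the 4K pair), with four of the six at exactly $200.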

Two of the G-Sync models could be overclocked using the on-screen display beyond what the equivalent FreeSync model was capable of, which adds a bit of value to the premium price you're paying. For the most part, though, you're only getting the aforementioned benefits of G-Sync like ULMB, LFC and, of course, adaptive sync compatibility with Nvidia graphics cards.

FreeSync monitors are universally cheaper, though one of the six FreeSync monitors I examined (the Acer H277HK) does not support LFC due to its limited refresh rate window.

Future: FreeSync 2 and G-Sync HDR

New adaptive sync monitors are set to hit the market in the coming months, which harness some new additions to the FreeSync and G-Sync ecosystems.

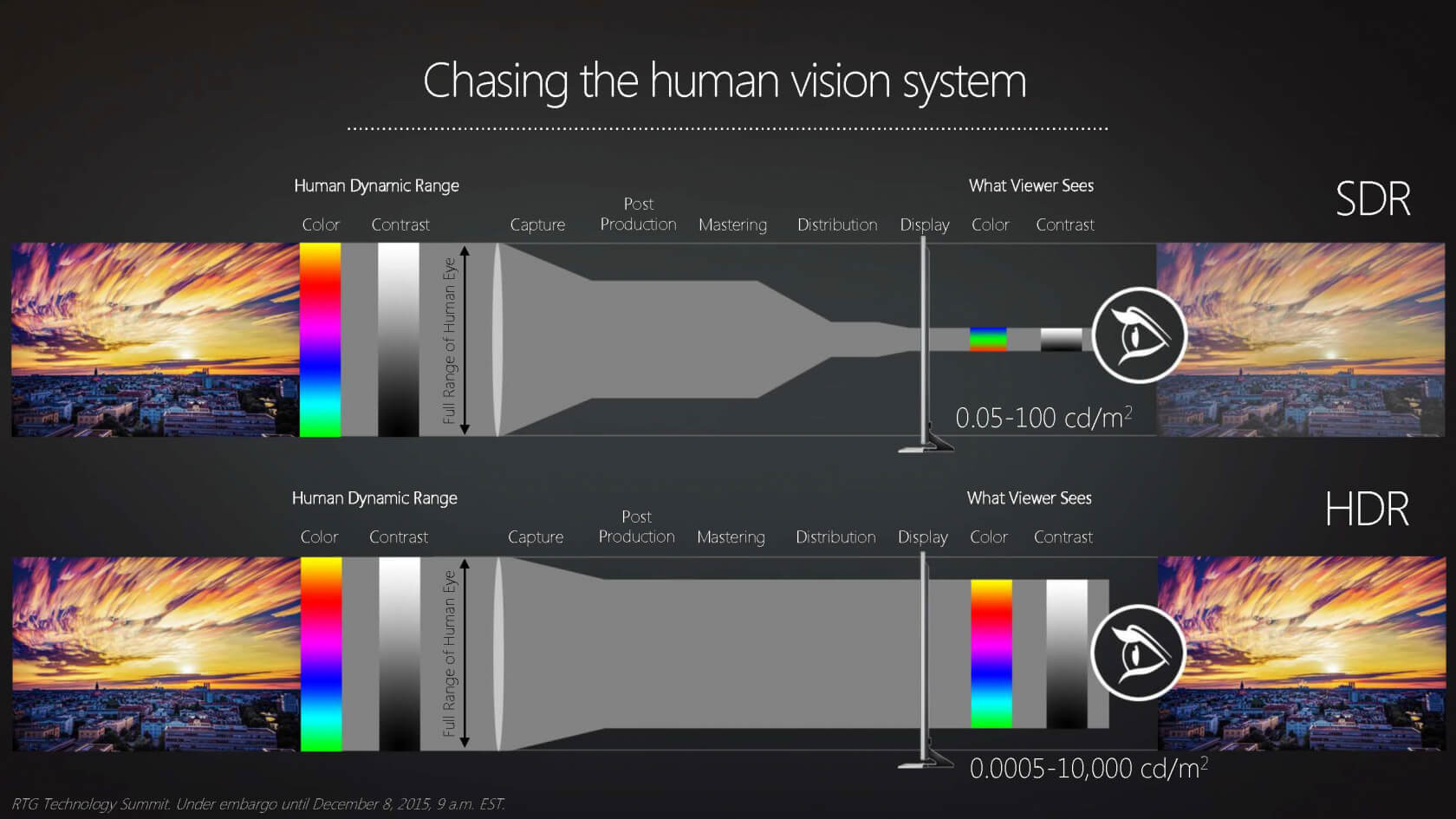

G-Sync is expanding its feature set to include support for HDR monitors and wide color gamuts. HDR monitors with G-Sync will support features like ULMB and LFC, and will also offer far wider color gamuts and higher brightness for HDR. Drivers will seamlessly switch between SDR for desktop work and HDR in supported applications where appropriate.

FreeSync 2 is a much larger update that not only includes support for HDR monitors, but also introduces a monitor validation program under which only the best monitors receive a FreeSync 2 badge. FreeSync 2 monitors will have at least twice the maximum brightness and color volume of standard sRGB displays, and monitors will be validated to meet input lag standards (in the "few milliseconds" range). All FreeSync 2 monitors will support LFC.

FreeSync 2 will also include features similar to G-Sync HDR's, like support for wider gamuts, higher brightness and automatic switching between SDR and HDR modes. There's still no word on whether AMD will charge a premium for FreeSync 2 validation and branding, though the updated technology will bring FreeSync closer to what G-Sync provides in every monitor.