We recently took our first look at AMD's Raven Ridge desktop APUs after a grueling four-day grind of benchmarking the Ryzen 5 2400G and Ryzen 3 2200G. Although both chips were impressive on that initial run, there's still loads of information that we'd like to cover, including deeper overclocking tests.

However, since posting the review, a few questions have popped up, and the one I've probably seen most often revolves around the Vega GPU's memory allocation.

Unlike a typical discrete graphics card, most integrated solutions don't have their own dedicated memory. For example, a Vega 64 has 8GB of dedicated memory where it can store data and then access it quickly when it needs to. Because this is a high-end graphics card, not only does it have a large 8GB buffer, but the bus it uses to access this memory is also very fast. HBM2 provides a 2048-bit wide bus that, with the memory clocked at 945MHz (1.89Gbps effective), allows for a bandwidth of 483GB/s.

Further down the food chain, at the very bottom in fact, you'll find graphics cards such as the RX 550. Because this card's compute performance is around nine times lower, it needs neither such massive bandwidth nor an 8GB memory buffer. In fact, the RX 550 could take advantage of half that capacity at most, and in today's games the card works just as well with a 2GB buffer. Regardless of capacity, though, it uses GDDR5 memory clocked at 1.75GHz (7Gbps effective) on a 128-bit wide bus, which can feed it data at 112GB/s.
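Both peak figures follow from one rule: bytes per transfer (bus width in bits divided by eight) multiplied by the effective data rate per pin. The sketch below is illustrative only; the function name is our own, and it assumes the commonly quoted effective rates of 1.89GT/s for Vega 64's HBM2 (945MHz, double data rate) and 7GT/s for the RX 550's GDDR5 (1.75GHz, four transfers per clock).

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gtps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory interface in bits.
    data_rate_gtps: effective transfers per second per pin, in GT/s.
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_gtps


# Vega 64: 2048-bit HBM2 at 945MHz, double data rate -> 1.89 GT/s effective
vega64 = peak_bandwidth_gbs(2048, 1.89)
# RX 550: 128-bit GDDR5 at 1.75GHz, four transfers per clock -> 7 GT/s effective
rx550 = peak_bandwidth_gbs(128, 7.0)

print(f"Vega 64: {vega64:.1f} GB/s")  # 483.8
print(f"RX 550:  {rx550:.1f} GB/s")   # 112.0
```

These are ceilings; real transfers land somewhat below them, as the memory copy results later in this article show.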


So a typical RX 550 has a 2GB memory buffer that can shift data at a peak rate of 112GB/s. Now if a game requires 3GB of VRAM but you only have 2GB, some game assets spill over into system memory (RAM). I covered this in a bit more detail in a recent article investigating how much RAM gamers need.

Shifting data in and out of system memory is significantly slower than using VRAM. The Raven Ridge APUs, for example, are limited to a memory bandwidth of around 35GB/s for system memory when using DDR4-3200. So while the RX 550 has a bandwidth of 112GB/s when accessing data locally in its VRAM, when accessing data from system memory it's limited to 16GB/s (the PCIe 3.0 x16 limit), which is to say it takes at least seven times longer to move the same data.

If your computer then runs out of system memory, game assets are moved to the local storage device, meaning your hard drive or, hopefully, an SSD. Depending on how fast that device is and how heavily it's hit with data, you are very likely to see a noticeable dip in frame rate at this point, as bandwidth drops to around 500MB/s with a SATA SSD.
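To put these tiers side by side, here is a rough sketch that converts each bandwidth figure quoted above into the time needed to move a fixed amount of data. It assumes ideal, sustained peak throughput, which real workloads never hit, so treat the outputs as ratios rather than real-world timings. The 1GB payload and the helper name are arbitrary choices for illustration.

```python
# Bandwidth figures (GB/s) as quoted in the text above.
TIERS = {
    "RX 550 GDDR5 (local VRAM)": 112.0,
    "DDR4-3200 system memory (Raven Ridge)": 35.0,
    "PCIe 3.0 x16 link to system memory": 16.0,
    "SATA SSD": 0.5,
}


def transfer_time_ms(size_gb: float, bandwidth_gbs: float) -> float:
    """Idealized time in milliseconds to move size_gb at a sustained bandwidth_gbs."""
    return size_gb / bandwidth_gbs * 1000.0


payload_gb = 1.0  # arbitrary payload size for comparison
vram_bw = TIERS["RX 550 GDDR5 (local VRAM)"]
for name, bw in TIERS.items():
    t = transfer_time_ms(payload_gb, bw)
    # Ratio shows how many times longer each tier takes than local VRAM.
    print(f"{name:40s} {t:8.1f} ms  ({vram_bw / bw:.0f}x the VRAM time)")
```

The PCIe row works out to exactly seven times the VRAM time, and the SATA SSD row to a couple of hundred times, which is why spilling to storage is so much more noticeable than spilling to RAM.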

Keeping all that information in mind, note again that the integrated graphics chip inside the Raven Ridge APU has no local memory. We're stressing this because some integrated GPUs, like the Vega M graphics in Intel's upcoming Kaby Lake-G processors, do have their own dedicated memory; this greatly enhances performance, but it's also much more costly.

Since AMD's Raven Ridge APUs are budget solutions, including HBM2 memory wasn't practical. So with no dedicated VRAM, the GPU relies exclusively on system memory, which is restricted to a bandwidth of around 35GB/s when using DDR4-3200.

Of course, bandwidth is just part of the issue here; memory capacity also plays a key role. A system with a base model Radeon RX 550 and 8GB of DDR4 memory effectively has 10GB of total memory. But when using the integrated Vega 8 or 11 graphics inside the Raven Ridge APUs, you have 8GB of memory that needs to be shared between the CPU and GPU.

Generally, Windows does a good job of managing memory and prioritizing applications for best results, but at least some portion of your system memory will be allocated to the integrated graphics. Raven Ridge APUs use a method called Unified Memory Architecture, or UMA for short.

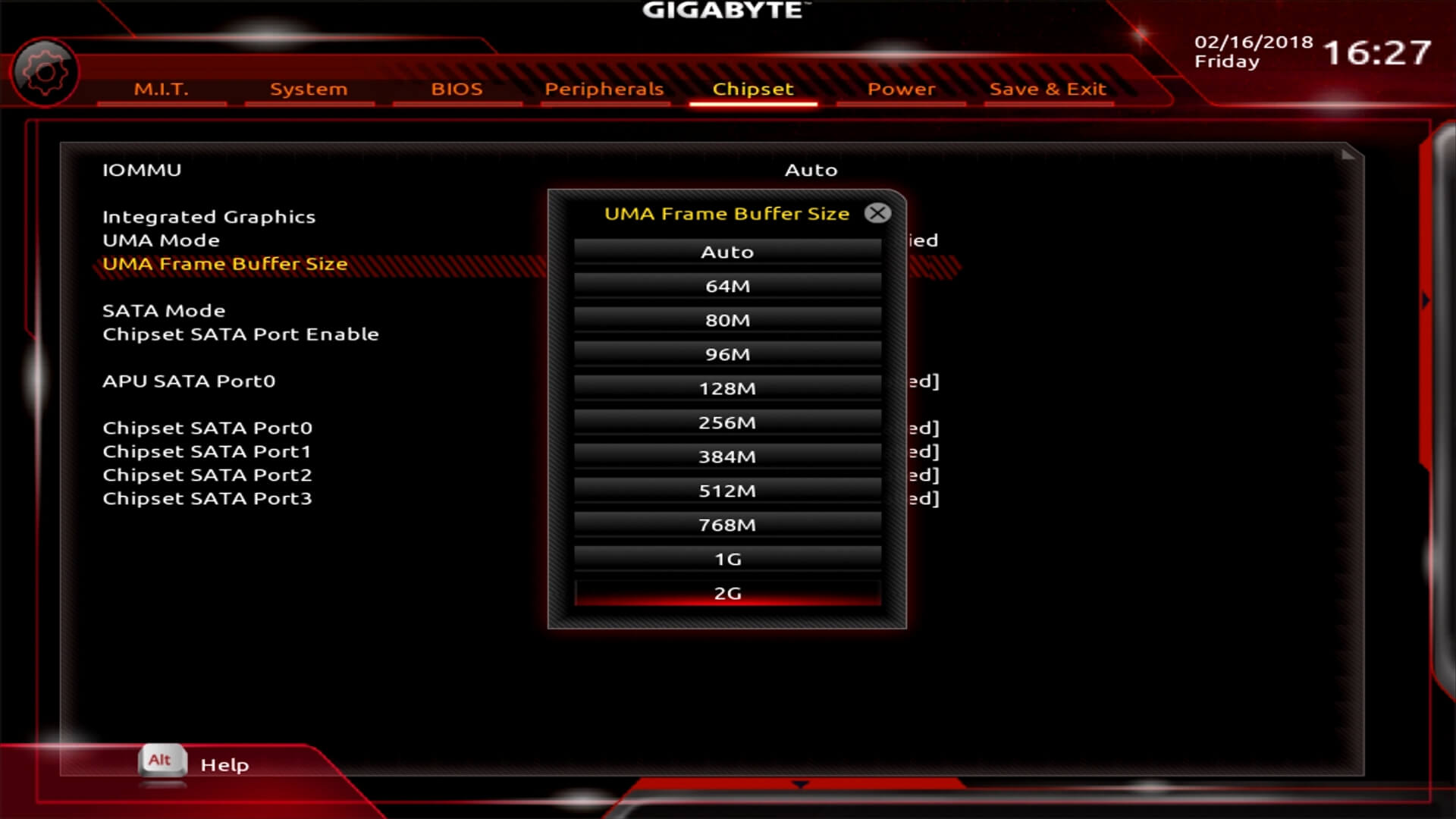

Most current AM4 motherboards let you set the memory size somewhere between 64MB and 2GB. The size you select determines how much system memory is reserved exclusively for the Vega graphics. Once allocated, it can only be used as graphics memory and is no longer accessible to the operating system or applications.

Now this is where I'm seeing a bit of confusion and misinformation. Some people are claiming that, for best results, reviewers should test with the frame buffer set to the maximum size, which right now is 2GB. However, this isn't necessarily true, and as we're about to find out, most of you who have built or are planning to build a Raven Ridge system will want to do the complete opposite.

You are far better off selecting the absolute minimum amount of system memory that you can, because as I said, once you allocate a portion of your system memory to the graphics processor, that's all it can be used for.

If you go overboard here, then whenever you're running Windows tasks that don't need much video memory, a significant chunk of memory will sit idle and can't be used. If you were to select a 2GB buffer on a system with 8GB of DDR4 memory, you'd only have 6GB of system memory available.
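The capacity math is simple subtraction, but laying out the common buffer options makes the trade-off easier to see. This is a simplified sketch with a hypothetical helper: it ignores memory that firmware and other hardware also reserve, so Windows will report slightly less than these figures. The 64MB to 2GB range comes from the text; 512MB and 1GB are in-between options.

```python
MB_PER_GB = 1024


def os_visible_mb(installed_gb: int, uma_buffer_mb: int) -> int:
    """Memory (MB) left for Windows and applications after the UMA carve-out.

    The carve-out is reserved at boot and cannot be reclaimed by the OS.
    """
    installed_mb = installed_gb * MB_PER_GB
    if not 0 < uma_buffer_mb < installed_mb:
        raise ValueError("buffer must be positive and smaller than installed RAM")
    return installed_mb - uma_buffer_mb


for installed in (8, 16):
    for buffer_mb in (64, 512, 1024, 2048):
        left = os_visible_mb(installed, buffer_mb)
        share = buffer_mb / (installed * MB_PER_GB)
        print(f"{installed}GB installed, {buffer_mb:>4}MB buffer -> "
              f"{left / MB_PER_GB:.2f}GB for the OS ({share:.1%} reserved)")
```

On an 8GB system, a 2GB buffer locks away a quarter of all installed memory, while 64MB costs under one percent.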

I wouldn't suggest choosing a 2GB buffer even if you wanted maximum performance in games. As I discussed earlier, once the graphics memory (VRAM) fills up during gaming, game assets are loaded into system memory anyway, and since the Raven Ridge APUs use system memory exclusively, it doesn't really matter whether you allocate 64MB or 2GB.

If a game calls for 2GB of video memory but you've only allocated 64MB, usage simply spills over into shared RAM, and because it's all the same memory, the bandwidth remains the same and so does the performance. Windows manages this very well, so by allocating 2GB you're just restricting the operating system's ability to manage system memory optimally.
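A toy model makes the argument concrete. It ignores caching, latency and compression, and simply charges each gigabyte at the bandwidth of wherever it lives: the first part of the working set comes from the dedicated buffer, the rest spills over. The function and the 2GB and 3GB working sets are hypothetical; the bandwidths are the figures quoted earlier.

```python
def stream_time_s(working_set_gb: float, local_gb: float,
                  local_bw_gbs: float, spill_bw_gbs: float) -> float:
    """Idealized time (s) to read a working set once.

    The first local_gb is served at local_bw_gbs; anything beyond it spills
    over and is served at spill_bw_gbs.
    """
    in_local = min(working_set_gb, local_gb)
    spilled = max(0.0, working_set_gb - local_gb)
    return in_local / local_bw_gbs + spilled / spill_bw_gbs


GAME_GB = 2.0  # hypothetical game asking for ~2GB of video memory

# Raven Ridge: the carve-out and shared RAM are the same DDR4,
# so both "tiers" run at ~35GB/s and the carve-out size drops out.
for carve_out_gb in (0.0625, 0.5, 2.0):  # 64MB, 512MB, 2GB
    t = stream_time_s(GAME_GB, carve_out_gb, 35.0, 35.0)
    print(f"APU, {carve_out_gb * 1024:.0f}MB carve-out: {t * 1000:.1f} ms")

# RX 550 with 2GB of VRAM and a 3GB working set:
# 1GB spills across PCIe at ~16GB/s, so here the split does matter.
t_dgpu = stream_time_s(3.0, 2.0, 112.0, 16.0)
print(f"RX 550, 3GB working set: {t_dgpu * 1000:.1f} ms")
```

For the APU all three carve-out sizes produce the same time, while the discrete card is penalized as soon as data leaves its VRAM.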

When trying to make sense of why AMD was offering 1GB and 2GB frame buffer options, I initially thought that reserving a certain amount of memory, say 2GB, might ensure maximum gaming performance because the operating system wouldn't have to shuffle things around, especially when using 8GB of RAM versus 16GB or more.

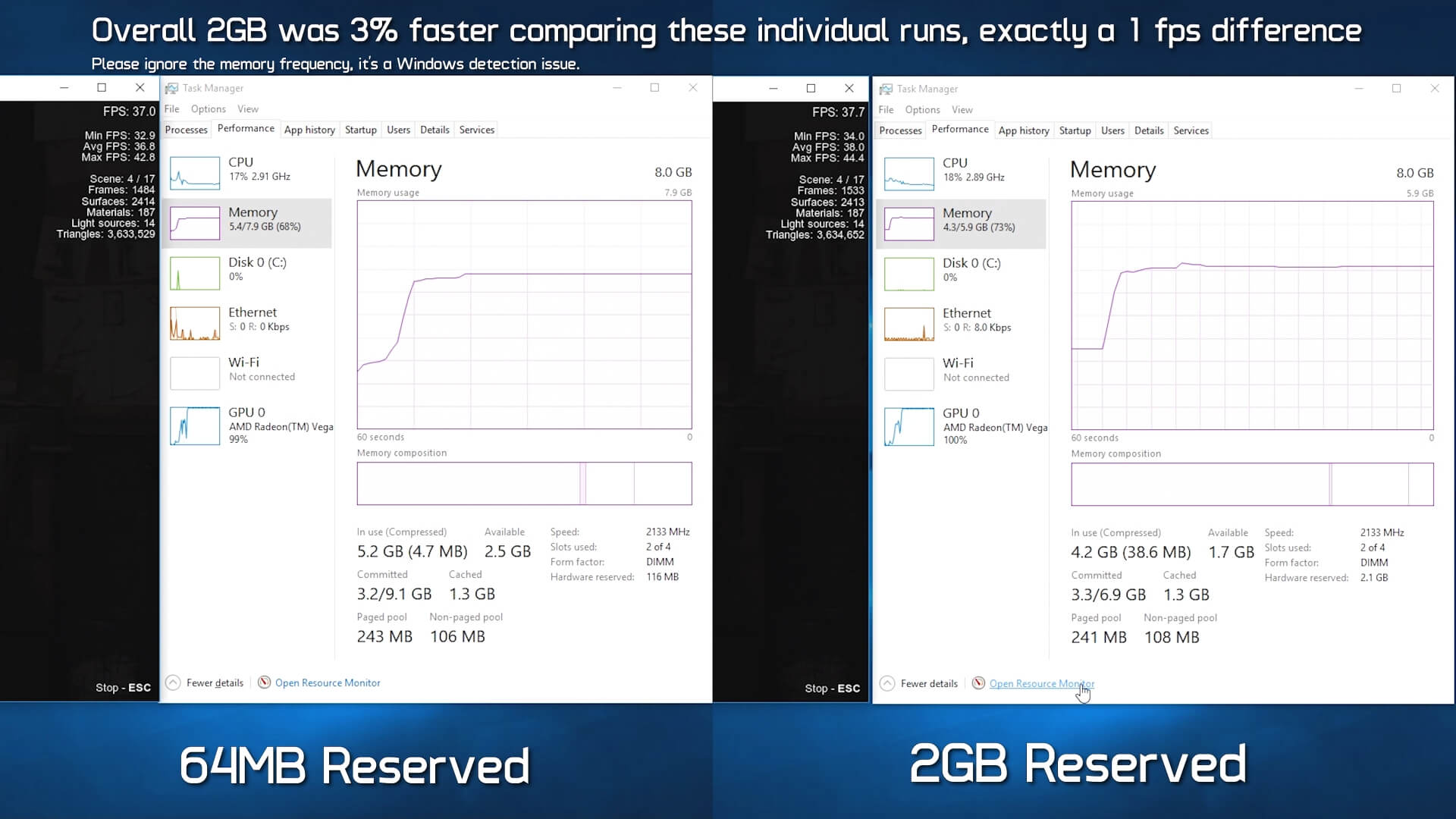

However, after testing various configurations, I found this had little to no impact on gaming performance, certainly nothing you'd notice while playing. Using both 8GB and 16GB of dual-channel DDR4-3200 memory with the exact same timings, I found no real performance difference between reserving 64MB and 2GB of system memory, for example. I tested half a dozen modern titles that all call for around 2-3GB of VRAM at 1080p using low to medium quality settings.

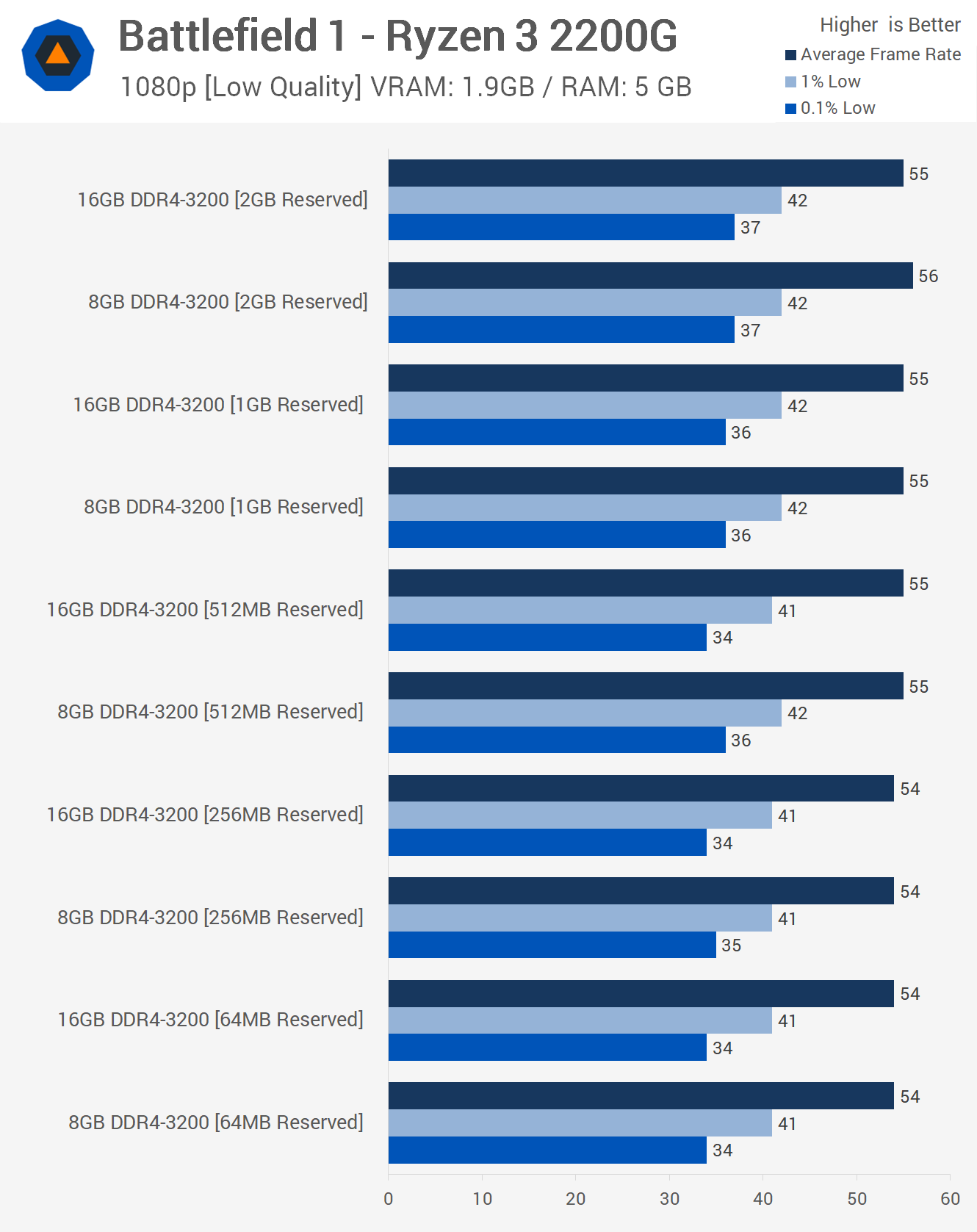

Rather than go through all half a dozen titles I've tested, all of which show the exact same thing, I'm just going to put up the Battlefield 1 results at 720p and 1080p, along with some additional testing in Metro: Last Light. As you can see with BF1, all the results are within the margin of error for a three-run average, and that applies not just to the average frame rate but to frame time performance as well.

The 2GB configuration was up to 9% faster when comparing the 1% low results, but if we accept that there's a +/- 1fps margin of error here, the difference could be as small as 3%. In any case, it wasn't possible to spot this difference when actually playing the game, and Battlefield 1 was one of the few games to show any measurable difference at all.
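Here's how a +/- 1fps margin shrinks a 9% gap to roughly 3%. The raw 1% low figures aren't listed in the text, so the 33fps and 36fps values below are hypothetical, chosen only so the arithmetic lines up with the percentages quoted; the helper names are our own.

```python
def pct_gain(base_fps: float, new_fps: float) -> float:
    """Percentage by which new_fps exceeds base_fps."""
    return (new_fps / base_fps - 1.0) * 100.0


def gain_range(base_fps: float, new_fps: float, margin_fps: float = 1.0):
    """Smallest and largest plausible gain if each result may be off by +/- margin_fps."""
    # Smallest gain: baseline was really higher, new result really lower.
    smallest = pct_gain(base_fps + margin_fps, new_fps - margin_fps)
    # Largest gain: the opposite case.
    largest = pct_gain(base_fps - margin_fps, new_fps + margin_fps)
    return smallest, largest


# HYPOTHETICAL 1% low figures, for illustration only.
low_64mb, low_2gb = 33.0, 36.0
print(f"measured gain: {pct_gain(low_64mb, low_2gb):.1f}%")
smallest, largest = gain_range(low_64mb, low_2gb)
print(f"with +/-1fps error: {smallest:.1f}% to {largest:.1f}%")
```

At frame rates this low, a single frame per second swings the percentage by several points, which is why small 1% low gaps need to be read with care.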

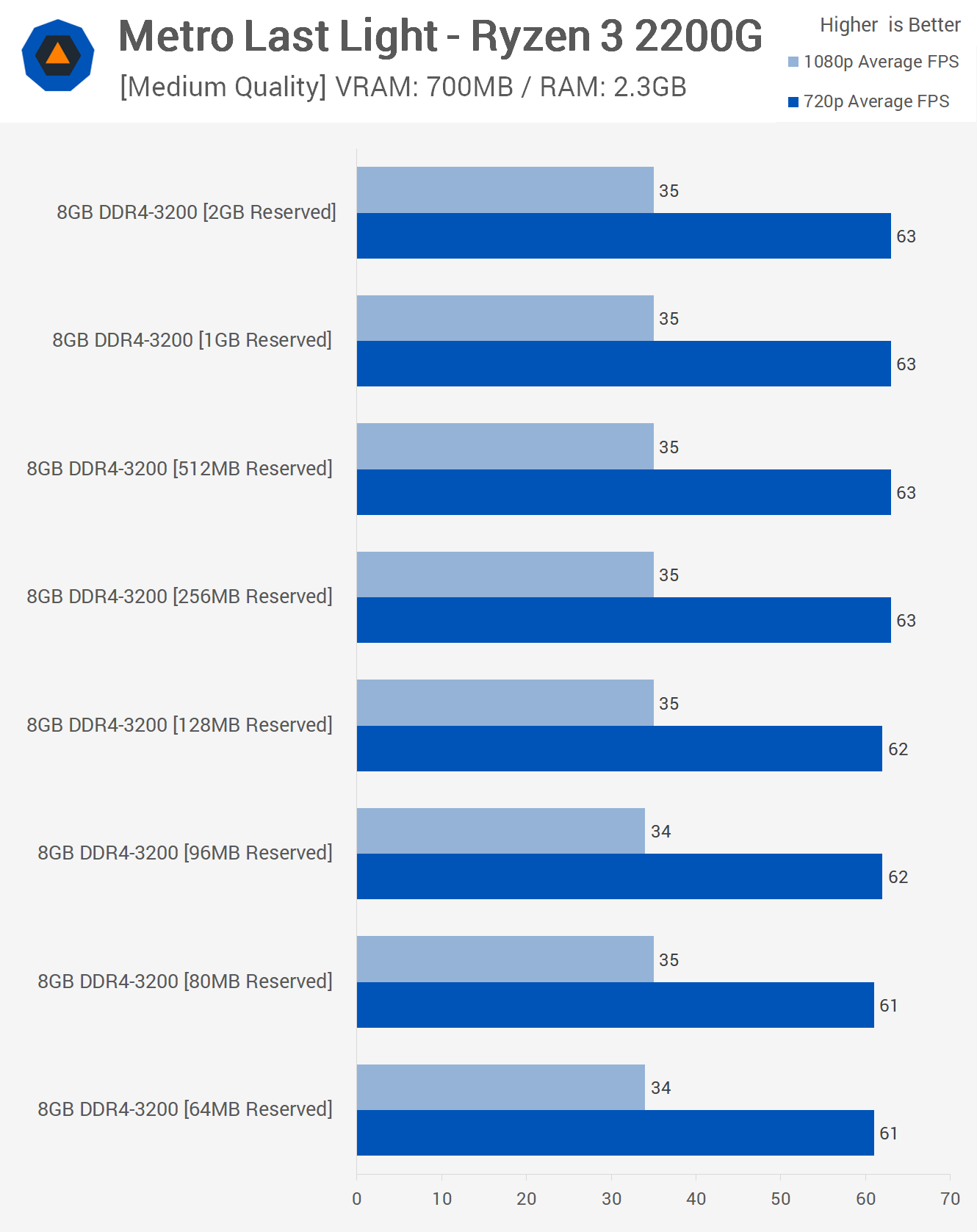

I received a few reports that Metro: Last Light saw a massive performance uplift when going from 512MB to 2GB. Although I stopped testing with this title about three years ago, I thought that would be odd if true, so I decided to check it out. This game uses very little memory, and we saw no difference in average frame rate at either 720p or 1080p; the same was true for the frame time results.

Given what we discussed before getting into the results, this really shouldn't surprise anyone. Whether or not the Vega GPU is accessing data in the allocated buffer, it's still using system memory and is limited to the same bandwidth, around 35GB/s in this case.

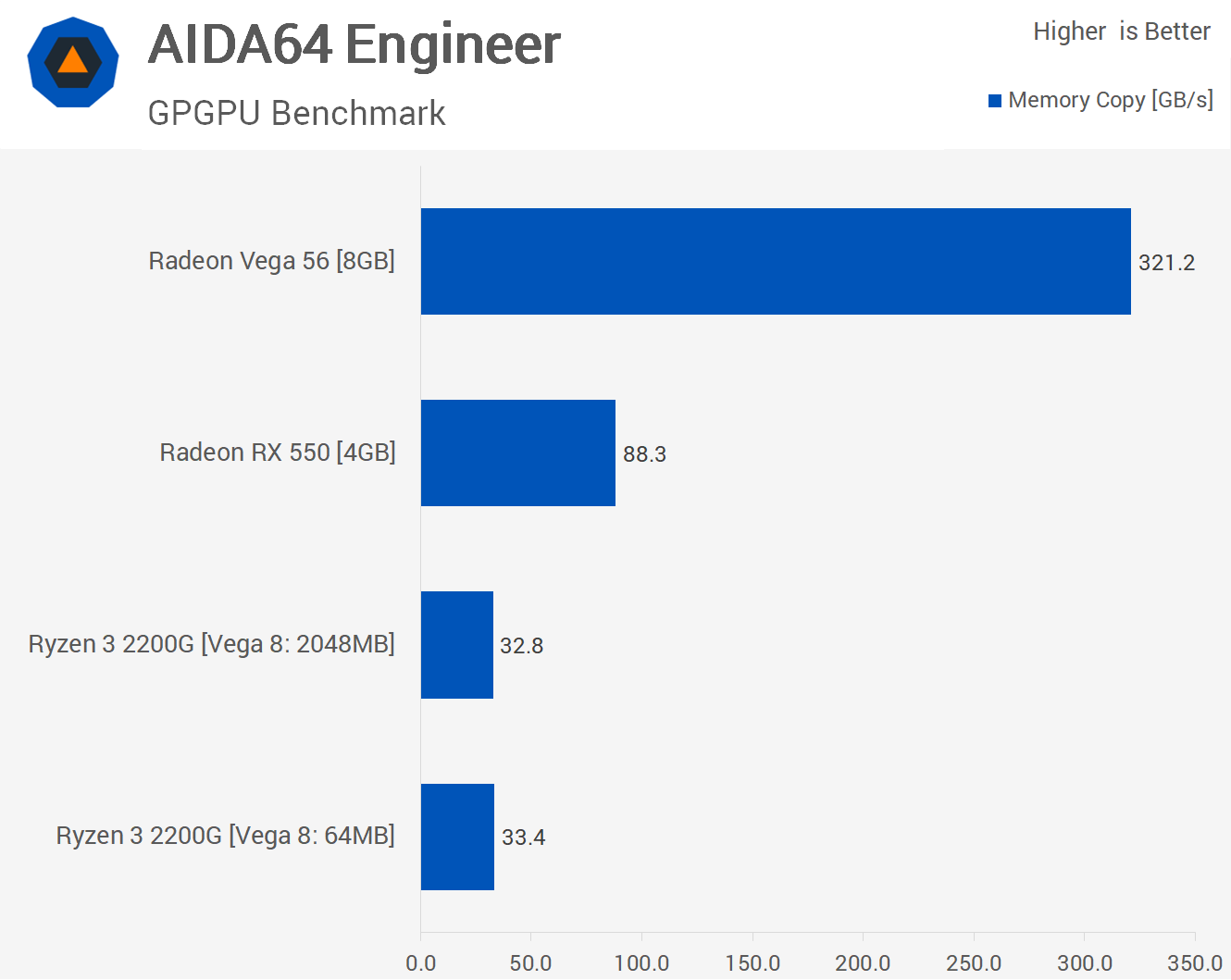

In fact, we can look at this a little more closely. Using the AIDA64 GPGPU benchmark tool, we can measure read and write performance between the CPU and GPU, that is, how quickly the GPU can move data from its own device memory into system memory (device-to-host bandwidth) and from system memory back into its device memory (host-to-device bandwidth).

More importantly for what I want to show, we can also look at memory copy performance. This test measures the performance of the GPU's memory by copying data from one place in its own device memory to another place within that same device memory. For the RX 550 that would be the onboard GDDR5 memory, but for the Raven Ridge APUs, it's the system memory.

Here we can see that with 64MB of RAM allocated, the Vega 8 GPU in the 2200G has a throughput of 33.4GB/s when copying data from within the system memory, and that's pretty much in line with the 35GB/s the CPU cores have when accessing the DDR4-3200 memory.

Increasing the allocation to 2GB has no impact on bandwidth; averaged over three runs we actually saw a slight decrease, but that is within the margin of error. Given how long this test takes, it's safe to assume we are transferring well over 2GB of data, so it's not just benchmarking within the allocated buffer. By comparison, the RX 550, which has a theoretical peak bandwidth of 112GB/s, managed 88GB/s in this test. I also benched a Vega 56, which hit 321GB/s against a theoretical peak bandwidth of 410GB/s.
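One way to compare these results is to express each as a fraction of its theoretical peak. The measured numbers below are the ones quoted above. The 51.2GB/s peak for the 2200G is our own calculation for dual-channel DDR4-3200 (two 64-bit channels at 3.2GT/s), not a figure from these tests, and the comparison assumes AIDA64 reports copy throughput the same way on every device.

```python
def efficiency(measured_gbs: float, peak_gbs: float) -> float:
    """Fraction of theoretical peak bandwidth actually achieved."""
    return measured_gbs / peak_gbs


# Assumption: dual-channel DDR4-3200 = 2 channels x 64 bits / 8 x 3.2 GT/s
DDR4_3200_DUAL_PEAK = 2 * 64 / 8 * 3.2  # 51.2 GB/s

# (measured memory copy result, theoretical peak) in GB/s
RESULTS = {
    "Ryzen 3 2200G (Vega 8, DDR4-3200)": (33.4, DDR4_3200_DUAL_PEAK),
    "Radeon RX 550 (GDDR5)": (88.0, 112.0),
    "Radeon RX Vega 56 (HBM2)": (321.0, 410.0),
}

for gpu, (measured, peak) in RESULTS.items():
    print(f"{gpu:36s} {measured:6.1f} / {peak:6.1f} GB/s "
          f"= {efficiency(measured, peak):.0%} of peak")

# Against the ~35GB/s the CPU cores achieve, the iGPU is close to the ceiling.
print(f"2200G vs CPU-measured 35GB/s: {efficiency(33.4, 35.0):.0%}")
```

Both discrete cards land near 80% of peak, while the iGPU gets almost everything the shared DDR4 can deliver in practice, so a larger carve-out has nothing left to unlock.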

So all the evidence suggests that setting the iGPU's allocated memory buffer beyond 64MB is pointless and, on systems with limited RAM, even a bit foolish. That said, while I've tested quite a few games and applications now, I haven't tested them all, and of course I won't be able to, as there simply aren't enough hours in the day.

The only reason I can see for increasing the reserved memory buffer is games that need to detect a certain amount of VRAM before they will load. We've seen games with built-in safeguards that won't let you load them without meeting a minimum hardware spec. It's annoying because the game developer isn't protecting anyone from any real harm; they're just inconveniencing gamers who probably have acceptable hardware but are waiting on a driver update to improve detection.

I expect AMD will deliver drivers that solve these kinds of issues for new games if and when they arise, but in the meantime there may be situations where gamers can increase the allocation to meet the VRAM requirement and load into the game. So, short of potential compatibility issues, I can't think of any reason why you'd want to sacrifice more than 64MB of memory to the GPU, but maybe you guys have some ideas.

Please also note that 64MB might be an extreme example; maybe err on the safe side and set it to 512MB. Or you could just go with 64MB and wait until you run into an issue, and if you do, please let us know about it. At that point, you would only have to reboot the system, increase the allocation in the BIOS and then load back into Windows.

Since most APU users will be running two 4GB memory modules for 8GB of capacity, especially those buying the incredibly good value Ryzen 3 2200G for $100, those users will want to save as much memory as possible, and telling them to lop off 1-2GB for the Vega GPU seems like really bad advice based on my findings.

Circling back to why AMD offers a 2GB frame buffer in the first place, there's even been talk that AMD is pushing board partners to offer a 4GB option. But why, AMD? Why give your customers the ability to degrade their experience on your impressive Raven Ridge APUs?

Well, the reason is simple: marketing. AMD has to play the numbers game. Saying 2GB, or up to 2GB, of graphics memory sounds better than 64MB. That said, AMD doesn't appear at this stage to be advertising the memory spec for Vega 8 and Vega 11. Maybe this will start to pop up as the 4GB allocation option nears launch.

As we were putting the final touches on this feature and our testing, we got in touch with AMD, and after seeing our results, they have their engineers looking into it. AMD tells us they are testing a wide range of games, and we have also tested beyond what you see here.

For the most part we saw very little difference. At most, averages varied by up to 5% in some instances, but mostly by 3% or less. You may gain an extra 2-3 fps using 2GB as opposed to 64MB in the best-case scenario, but Raven Ridge is far more appealing at the low end if I can have almost all 8GB of my system memory available to Windows applications rather than only 6GB.

So if you have 8GB of RAM or less, you're better off with the 64-512MB options. Just apply a small overclock to gain a few extra frames when you need them.