No, you really weren't. I thought that I was imagining things too but then I went back and looked at the review of the RTX 4080. They initially gave that card a score of NINETY only to lower it to 80 because they got a tonne of pushback on it. TechSpot isn't reviewing fairly when the card that costs more but performs worse gets a positive review over the card that costs less but performs better.

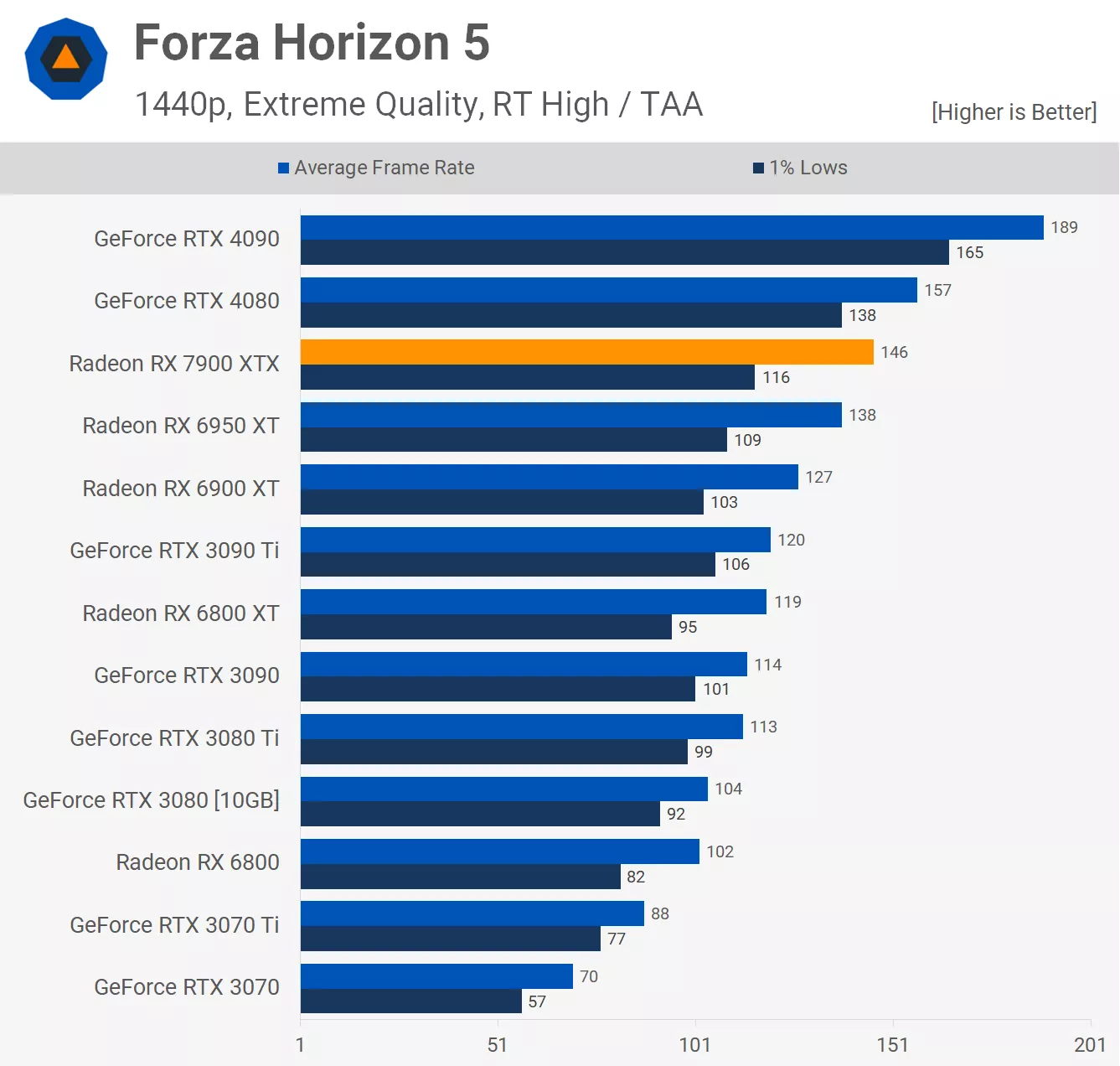

Then there's that "driver issue" with Forza Horizon... Steve conveniently neglected to mention that he had RT turned on and claimed a "driver issue". Note the "RT High" in the graph:

NOTE: These were stuck in with the rasterisation benchmarks, not the RT benchmarks. That's pretty sneaky because I didn't see it right away and I bet nobody else did either.

Interestingly, TechPowerUp didn't experience anything like that. In their testing, the RX 7900 XTX was about 1 fps behind the RTX 4080, which is effectively a tie:

There's no way that this was an accident. This was nothing short of an attempt by Steve Walton to mislead his audience and it pisses me off. The fact that he's so good at what he does means that he doesn't get to plead "it was an honest mistake" because we know damn well that he's not that stupid. This is as bad as when he "failed" to mention that nVidia was involved in the development of Spider-Man while he was showing us just "how much better nVidia is". After all those years of great reviews, I honestly can't believe that Steve would destroy the trust that he'd earnt over the last 15 years, but he did.

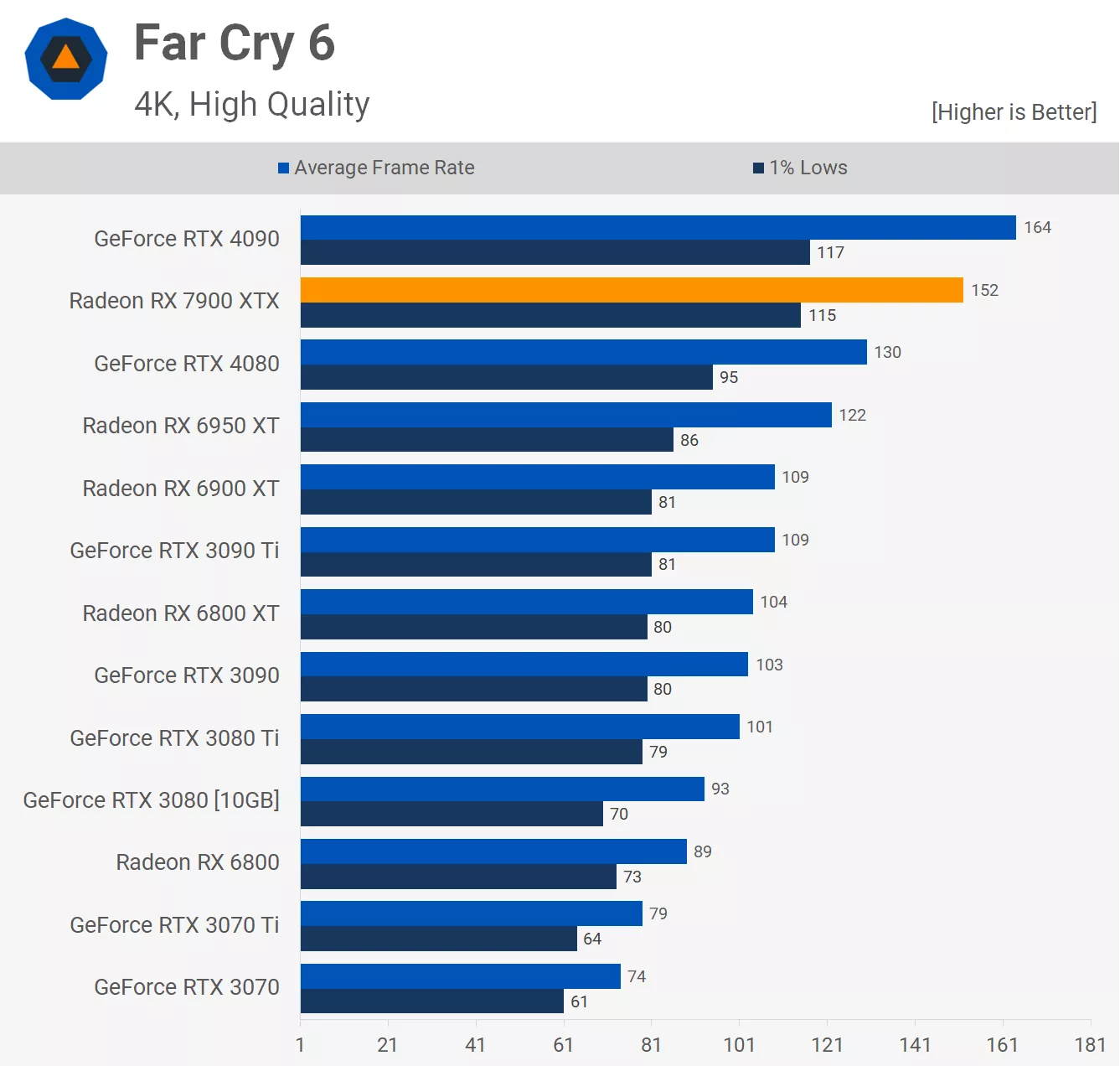

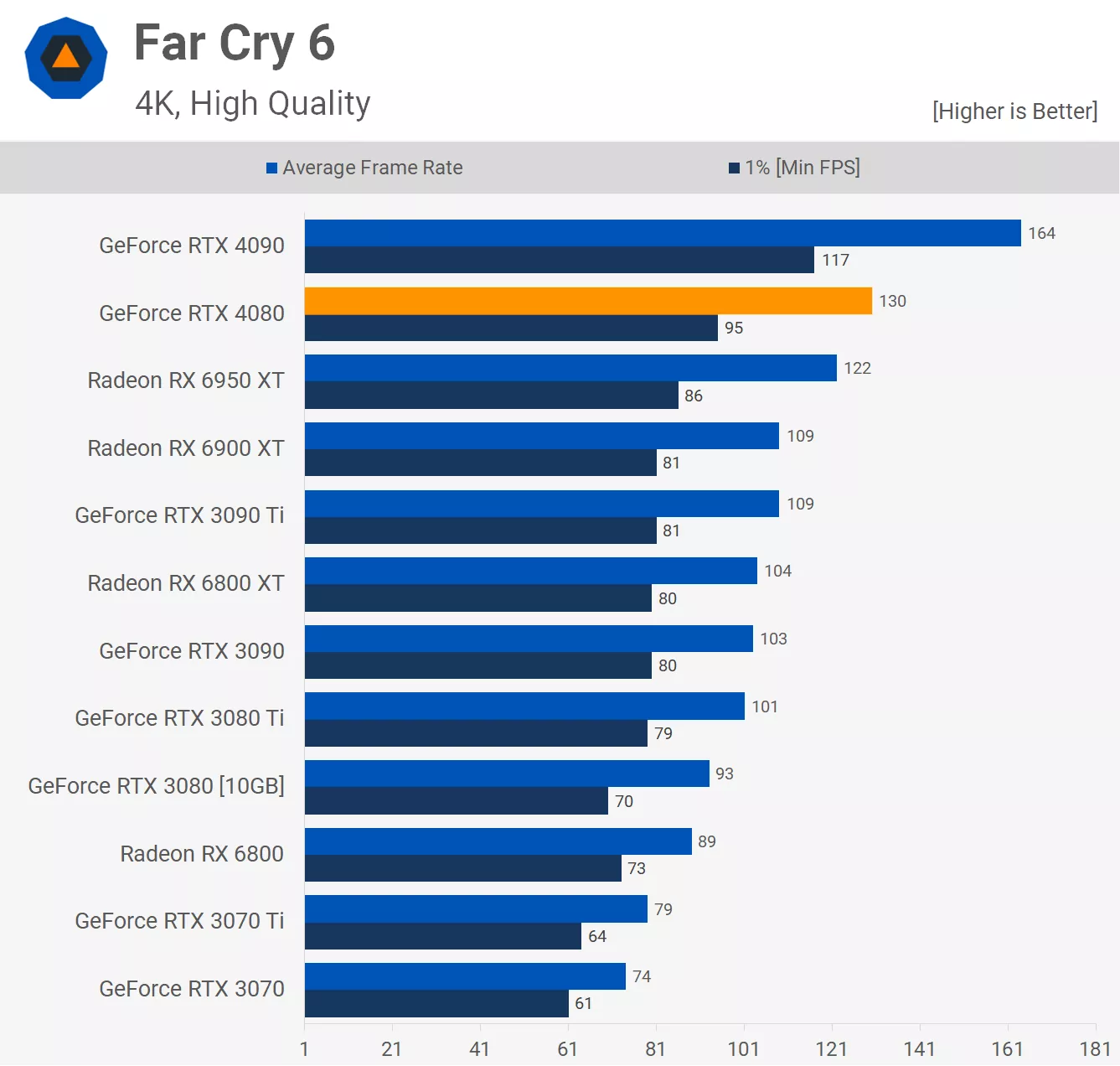

The waters get even muddier because "according to Steve", the fastest card in Far Cry 6 was the RTX 4090:

Yet somehow, TechPowerUp found something else entirely with several versions of the Radeon RX 7900 XTX, those being the OG, XFX MERC and ASUS TUF:

Now, I had no idea what settings TechPowerUp was using, but Guru3D has almost exactly the same numbers and specified them as Ultra High Quality:

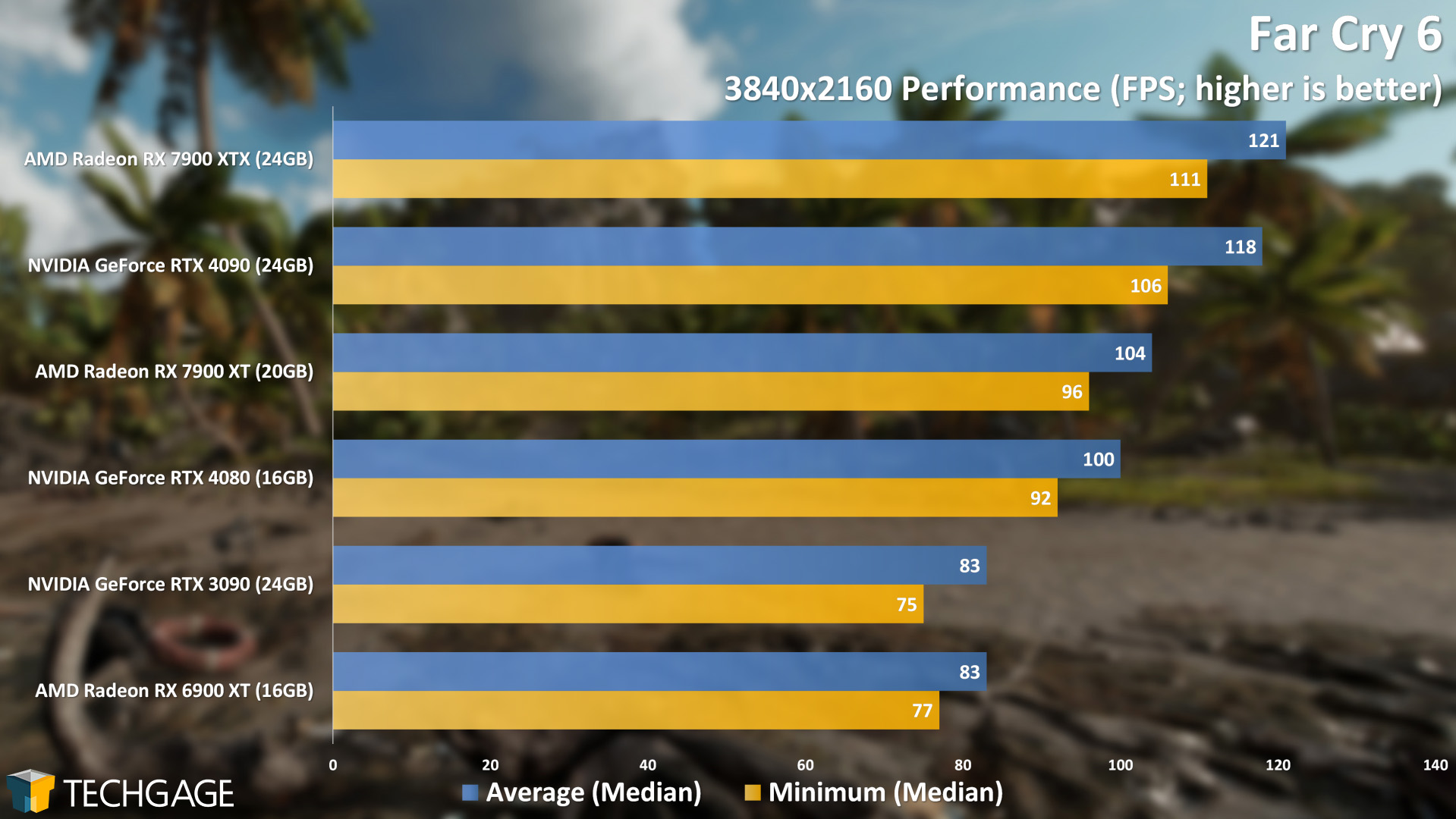

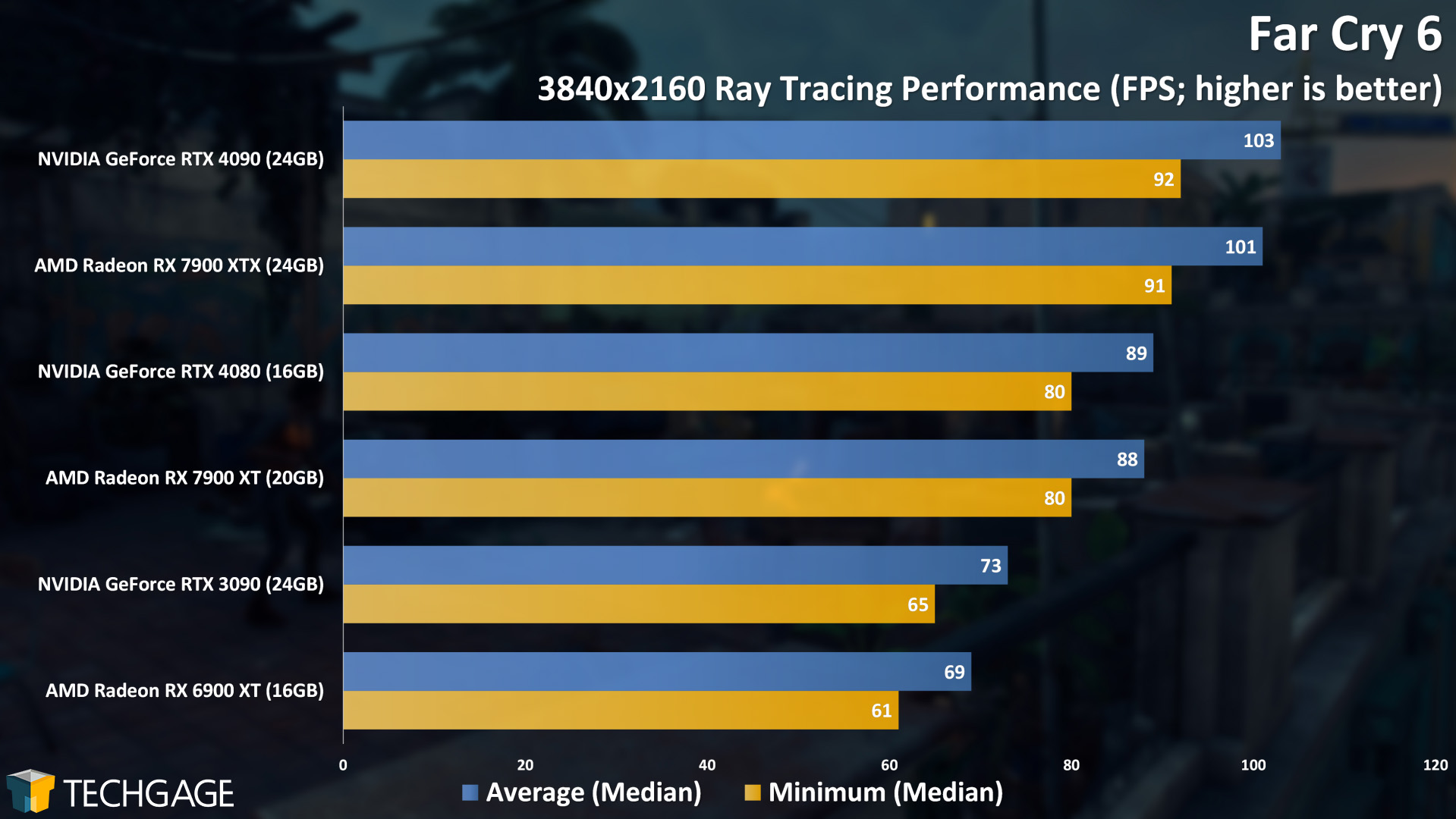

Here's Techgage agreeing:

So, Steve didn't use ultra settings to test 2160p. That makes no sense to me because why on Earth would you test halo-level cards with anything below Ultra quality? The fact that Steve used High settings instead of Ultra is the reason that the RTX 4090 got a higher score than the RX 7900 XTX.

However, it's clearly a flaw in the testing methodology because the only people who will really be gaming at 4K will be doing it with halo-level cards, so ultra settings would be the most appropriate. Hell, I have an RX 6800 XT and I choose to game at 1440p because I can run any game maxed out and I can't really tell the difference between 1440p and 4K from a graphical fidelity standpoint, even on a 55" panel. This means that, to me, 1440p ultra looks better than 4K high.

Yes they are, but you'll notice that the RTX 4080 is even worse and the article he wrote about it was positively glowing when compared to this. He's holding the RX 7900 XTX to a higher standard than the RTX 4080 and that's wrong, especially considering the outrageous MSRP of the RTX 4080. Considering how nVidia treated Steve and Tim when they tried blacklisting Hardware Unboxed, Steve has no reason to be so kind to nVidia. I honestly think that nVidia got their message across that HU had better fall in line, or else.

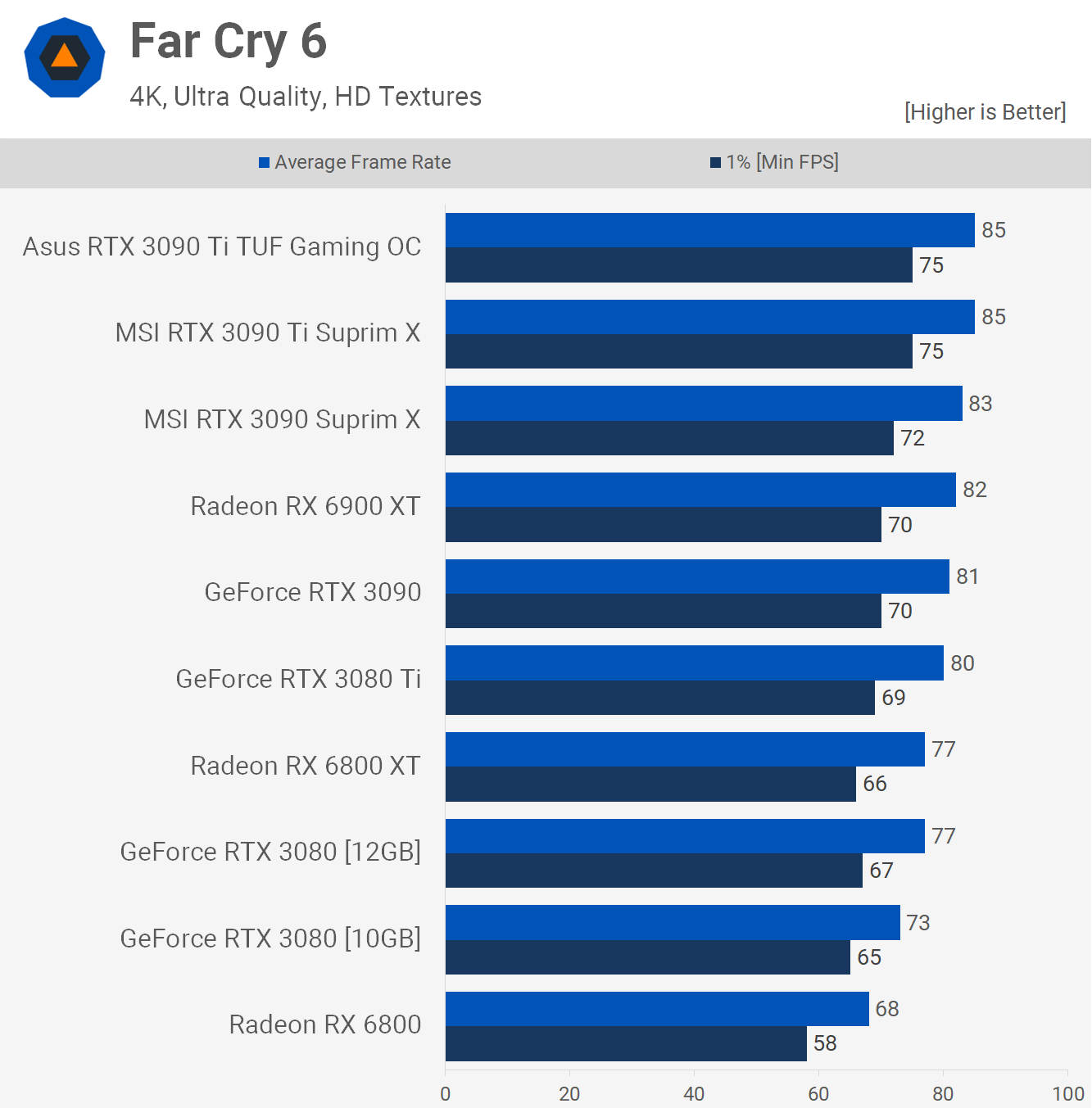

One of those demands may have been that Far Cry 6 was only to be shown with high settings at 4K because nVidia clearly has a weakness there. Note that for the RTX 3090 Ti, Steve used 4K ULTRA settings:

However, he then inexplicably switched from ultra with HD textures to simply "high" for the review of the RTX 4080:

This change in testing methodology was never explained and it doesn't make any sense either. When everything down to the RX 6800 can get >60fps at ultra, there is no reason to change from that. If you want to know a GPU's performance, you use 4K ultra. It's just like if you want to know a CPU's performance, you use 720p low or 1080p low depending on a game's options and the level of GPU you're testing with.

This is bad because it's not consistent. If the standard testing methodology changes, you need to explain to your audience that it has and why. Sure, one chart says ultra and the other says high but who the hell can remember what the settings were on the last card?

In this case, the change was critical because it directly affected the outcome and it's dishonest. If the RX 7900 XTX is faster in Far Cry 6 than the RTX 4090 at 4K ultra settings, then it IS the faster card. The fact that the RTX 4090 is faster at 4K high is irrelevant. In the same way, the results at 1440p, while important, do not say which card is ultimately the fastest because 1440p is not as strenuous a test as 4K ultra.

It gets even worse than that because there's no Far Cry 6 RT chart. Techgage showed that there's at least one game in which the RX 7900 XTX is every bit nVidia's equal:

Sure, it's just ONE game but this is seriously important because it completely defies expectations.

It actually gets better for the RX 7900 XTX because, as it turns out, the RTX 4080 is only 15% faster in RT performance than the RX 7900 XTX according to TechPowerUp:

This actually surprised the hell out of me because the impression I got from Steve was that the RT performance of the RX 7900 XTX was somewhere around that of the RTX 3080. Clearly, this is NOT the case.

Sure, the RTX 4090 destroys the RX 7900 XTX, but it also destroys the RTX 4080. A difference of 15% is like comparing an RX 6800 XT with an RX 6950 XT. Sure, it's there but it's not worth paying extra for, something that Steve himself said when reviewing the RX 6950 XT. Now suddenly he changes his tune when it comes to RT? Remember that nVidia tried banning Hardware Unboxed for not paying enough attention to RT and DLSS so I don't buy it for a second that he really believes that.
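For anyone who wants to check percentage gaps like that 15% for themselves, here's a minimal Python sketch. The function name `percent_faster` and the frame rates in it are made up purely for illustration; they are NOT figures from TechPowerUp or any other review.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """Return how much faster card A is than card B, in percent."""
    if fps_b <= 0:
        raise ValueError("fps_b must be positive")
    return (fps_a / fps_b - 1.0) * 100.0


# Hypothetical average frame rates, chosen only to illustrate a 15% gap.
fps_card_a = 46.0
fps_card_b = 40.0

gap = percent_faster(fps_card_a, fps_card_b)
print(f"Card A is {gap:.1f}% faster than card B")
```

Plug in the average fps from whichever chart you're looking at and you get the relative gap, which makes it easier to compare results between review sites that use different test scenes.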

I don't know what it is, but there's something rotten in the state of Denmark and nVidia's involved. My faith in Steve Walton has been completely shattered by this hit piece that he dared to call "a review". I used to believe that he was beyond things like this but the more I look at this "review", the more I find wrong with it.

Steve turned the settings down in Far Cry 6 from the RTX 3000-series to the RTX 4000-series. That makes ZERO sense unless nVidia wanted him to hide a weakness that exists in Ada Lovelace. There can be no other reason that I can think of based on the facts available.

Then he even had the nerve to stick a RAY-TRACING test in with the rasterisation tests and blame some mythical "Driver Issue" for its seemingly weak performance and then go on to bash it further. What could be the reason for that if not to re-stoke the fears of the uninitiated that Radeon drivers are bad? This is LITERALLY the most dishonest hardware review that I've ever seen. This is Jayz2Cents-level bad made even worse by the fact that we know what to expect from Jay. From Steve Walton... I never expected this.

Feel free to fact check everything that I said.