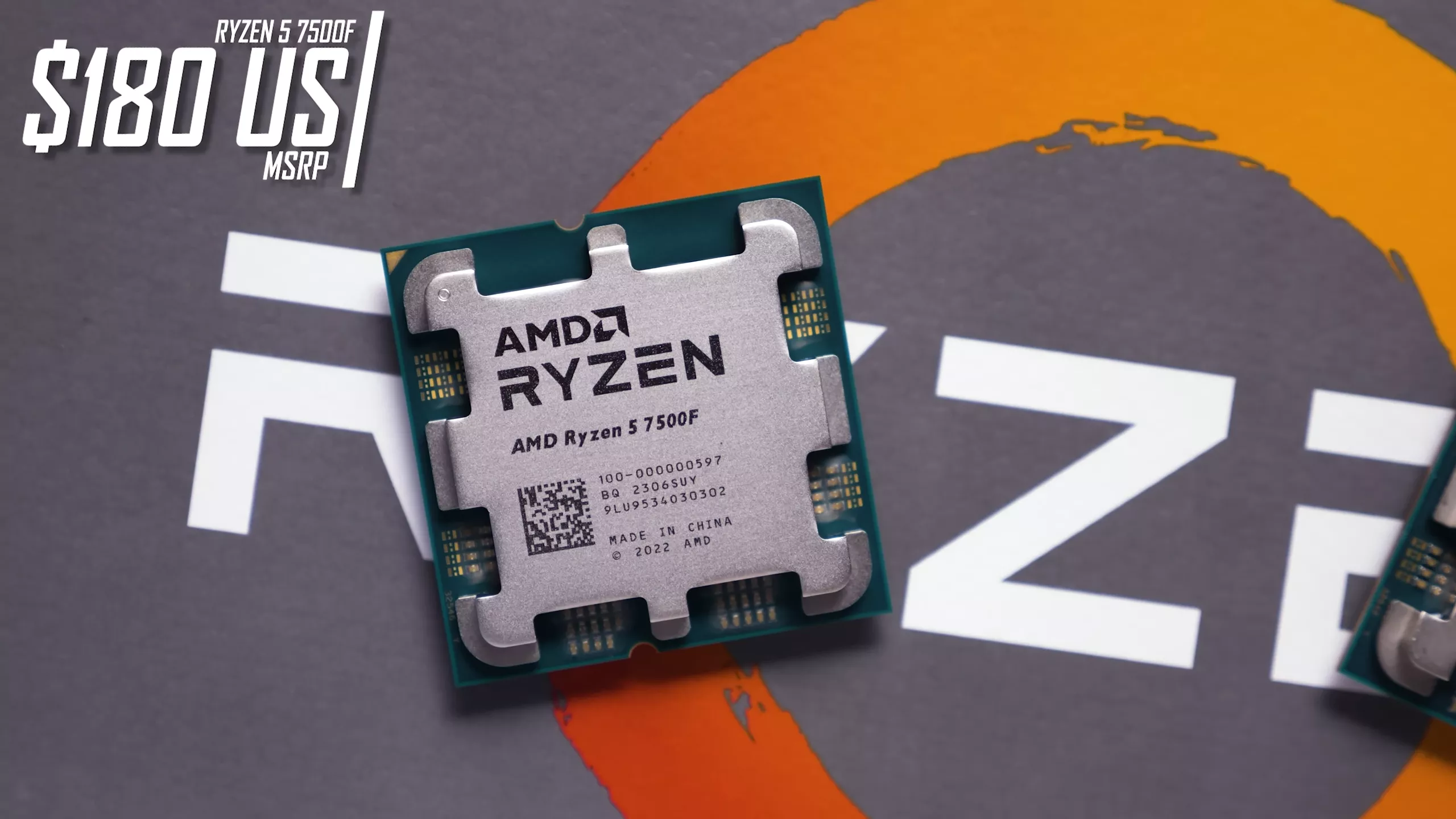

The Ryzen 5 7500F is AMD's most affordable Zen 4-based CPU, though it's still something of an elusive offering. In this review, we'll be comparing it against its chief rival, the Intel Core i5-13400F.

https://www.techspot.com/review/2728-ryzen-7500f-vs-core-13400f/