Does higher FPS translate into lower latency? Isn't that the reason why people are clamoring for more FPS? It's also looking like faster CPUs are becoming less of a factor than faster GPUs. I suppose it depends on the game. If I understand it correctly, faster GPUs give better framerates while faster CPUs can process more characters on the screen. I can definitely say that faster hard drives have helped out tremendously in open-world games when moving into new sections. In the early days, games gained a definite uplift from faster CPUs, but today that doesn't necessarily seem to be the case, which changes how I think about upgrading. As someone who has to be budget conscious, I'm just looking for good gameplay at 720p. Can anyone explain this better than I can?

Those of us who've been into PC gaming for as long as I have scratch our heads at how little understanding there is of what a CPU does and does not contribute to gaming.

The GPU is the bottleneck in 99.99% of real-life gaming scenarios. When the GPU is the bottleneck, the CPU is largely removed from the equation, and it doesn't really matter what CPU you have as long as it's not a Pentium 4 or Bulldozer: any modern mid- to high-end CPU from either AMD or Intel will make almost no difference.

When do you not have a CPU bottleneck? If you have a modern CPU (Ryzen / 6th-gen Core or newer) with at least 6 threads, and your GPU is slower than an RTX 2080-series card, you will never have a CPU bottleneck.

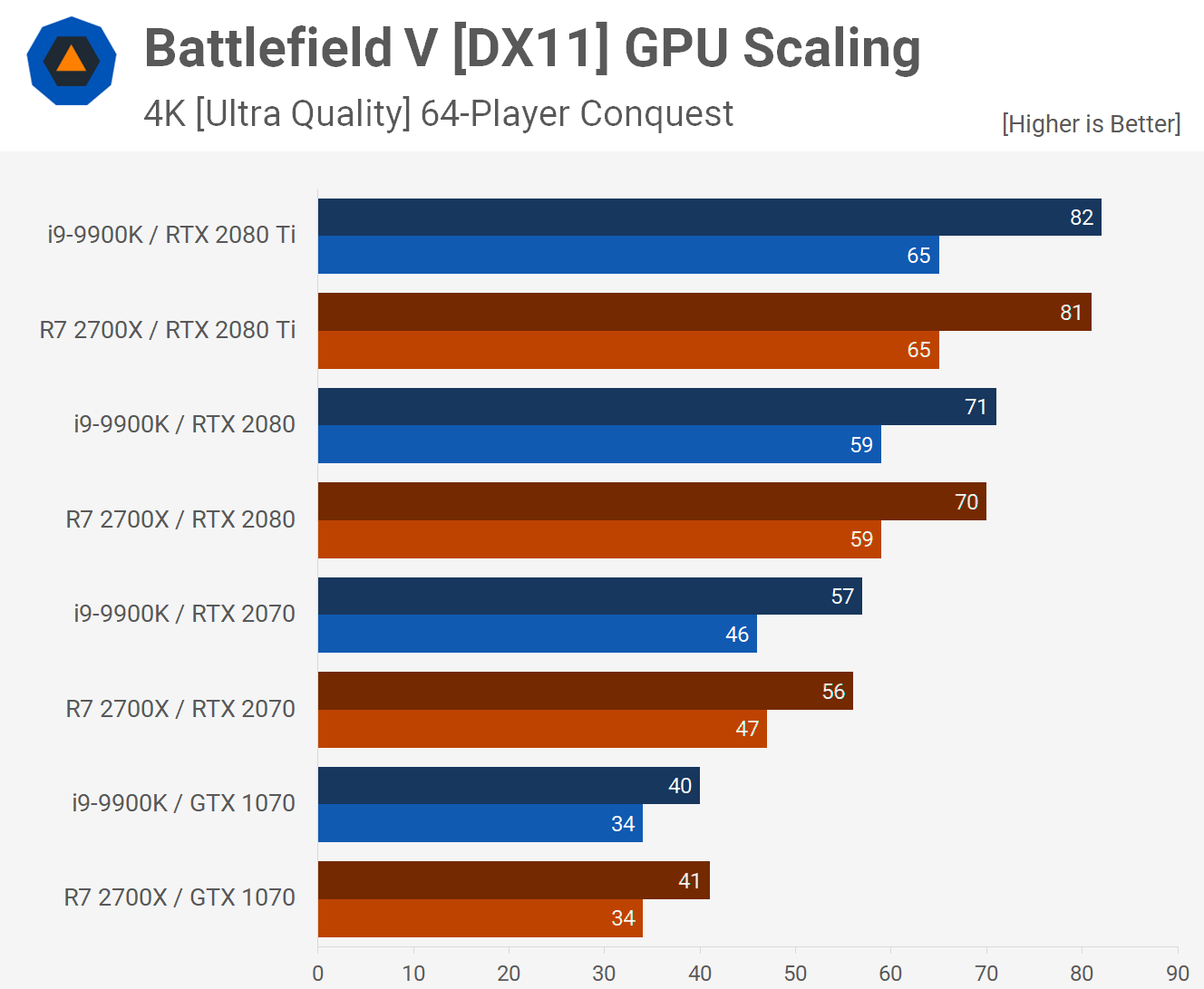

If you game at 4K with any video card, including the RTX 2080 Ti, you will never have a CPU bottleneck.

If you game at 1440p with any card slower than an RTX 2080, you will never have a CPU bottleneck.

If you game at 1080p with any card slower than an RTX 2070, you will never have a CPU bottleneck.

If you like to crank all the visual settings to ultra, you'll almost never have a CPU bottleneck with any card slower than a 2080 Ti.

If you use ray tracing features, you will never have a CPU bottleneck.

Now deduce whether you will have a CPU bottleneck or not ... if not, then the difference between any modern AMD and Intel CPU will be ZERO.

If you don't have a CPU bottleneck, your CPU makes almost no difference.

If your monitor refreshes at 120 Hz or less, then even if you DO have a CPU bottleneck, the display's refresh rate caps what you see at 120 fps or lower anyway. If your FPS is below that, then you don't have a CPU bottleneck.
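The bottleneck rules above can be sketched as a toy model. This is a simplification, assuming the frame rate is set by whichever of the CPU or GPU is slower and that the monitor can show at most its refresh rate; the function names and all FPS figures below are hypothetical inputs for illustration, not benchmark results, and real games are messier (frame pacing, engine limits, V-Sync behavior).

```python
from typing import Optional


def bottleneck(cpu_fps: float, gpu_fps: float) -> str:
    """Name the component that limits the frame rate in this toy model."""
    if cpu_fps < gpu_fps:
        return "CPU"
    if gpu_fps < cpu_fps:
        return "GPU"
    return "balanced"


def displayed_fps(cpu_fps: float, gpu_fps: float,
                  refresh_hz: Optional[float] = None) -> float:
    """Frames per second the player actually sees (toy model, hypothetical).

    cpu_fps: frames/s the CPU could prepare if the GPU were infinitely fast
    gpu_fps: frames/s the GPU could render if the CPU were infinitely fast
    refresh_hz: monitor refresh rate; None means no display cap
    """
    rendered = min(cpu_fps, gpu_fps)  # the slower component sets the pace
    if refresh_hz is None:
        return rendered
    return min(rendered, refresh_hz)  # the monitor can't show more than this


if __name__ == "__main__":
    # GPU-bound: upgrading the CPU from 160 to 200 fps of headroom changes nothing.
    print(bottleneck(160, 90), displayed_fps(160, 90))  # GPU 90
    print(bottleneck(200, 90), displayed_fps(200, 90))  # GPU 90
    # CPU-bound at 140 fps, but a 60 Hz monitor only shows 60.
    print(bottleneck(140, 180), displayed_fps(140, 180, refresh_hz=60))  # CPU 60
```

In this model a CPU upgrade only shows up on screen when the CPU is the slower component and its frame rate is also below the refresh rate.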

I find it hard to believe that people on this forum struggle so hard to understand this, when TechSpot is actually pretty good about including a variety of graphs showing what performance is like with lesser cards or at higher resolutions. Do you guys just discard all that to focus on the artificially induced CPU-bottleneck numbers that represent only about 0.001% of all gaming setups? (Sorry, rhetorical question: I'm addressing this response to the public in general, not specifically to you, Danny101.)

At 4K, even with a 2080 Ti, the $200 Ryzen 7 2700X (last-gen AMD) performs the same as a $550 Core i9-9900K ... does anyone want to waste $350 on 4K gaming? Anyone? Anyone? Bueller? Bueller?

As far as the grasping-at-straws "latency factor" goes, the difference between 150 fps and 165 fps isn't going to meaningfully affect your latency. Besides, we now have latency reduction as a BUILT-IN feature of modern GPU drivers ...
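To put the 150 vs 165 fps difference in numbers: at a steady frame rate, the time between frames is 1000 / FPS milliseconds. The sketch below assumes frame time is the only thing changing; it is only one part of total input-to-display latency, alongside input polling, game logic, driver queueing and monitor response. The helper name `frame_time_ms` is illustrative.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds between consecutive frames at a steady frame rate."""
    return 1000.0 / fps


for fps in (60, 150, 165):
    print(f"{fps} fps -> {frame_time_ms(fps):.2f} ms per frame")

# How much shorter each frame gets when going from 150 to 165 fps.
saved = frame_time_ms(150) - frame_time_ms(165)
print(f"150 -> 165 fps saves {saved:.2f} ms per frame")
```

This prints about 16.67, 6.67 and 6.06 ms per frame, and a saving of about 0.61 ms. By the same arithmetic, going from 60 to 150 fps cuts the frame-time component by about 10 ms, so higher FPS does lower this part of latency, but with rapidly diminishing returns at the high end.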

Now, don't even get me going on how average FPS isn't even the deciding factor as far as quality of gaming experience and smooth gameplay are concerned. 120 fps with 60 fps 1% lows is inferior to 90 fps with 75 fps 1% lows, because the 1% lows track the slowest frames, which is where stutter shows up.

Average FPS alone doesn't tell you what gameplay quality and smoothness are like. There's more to how well you experience a game than the average FPS.
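Here is a sketch of how average FPS and 1% lows can both be computed from the same frame-time log, showing how a higher average can hide stutter. It assumes one common definition of the 1% low (the average frame rate across the slowest 1% of frames); benchmarking tools differ, and some use the 99th-percentile frame time instead. Both traces and the function names are made up for illustration.

```python
import math
from typing import Sequence


def average_fps(frame_times_ms: Sequence[float]) -> float:
    """Frames rendered divided by total elapsed time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms: Sequence[float]) -> float:
    """Average frame rate over the slowest 1% of frames (at least one frame)."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, math.ceil(len(slowest_first) / 100))
    worst = slowest_first[:count]
    return 1000.0 * len(worst) / sum(worst)


# Hypothetical 1000-frame traces (milliseconds per frame).
# Mostly fast 8 ms frames, with ten 40 ms hitches (stutter).
stutter_trace = [8.0] * 990 + [40.0] * 10
# Slower 11 ms frames that only occasionally stretch to 13 ms.
steady_trace = [11.0] * 990 + [13.0] * 10

for name, trace in (("stutter", stutter_trace), ("steady", steady_trace)):
    print(f"{name}: avg {average_fps(trace):.1f} fps, "
          f"1% low {one_percent_low_fps(trace):.1f} fps")
```

With these made-up traces, the stutter trace averages about 120 fps but its 1% low is 25 fps, while the steady trace averages about 91 fps with a 1% low near 77 fps: the same pattern as the 120/60 vs 90/75 comparison.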

Steve Burke from Gamers Nexus now recommends against the i5-9600K because, while its average FPS numbers are good, its poor 1% lows in some games cause stutter that ruins gameplay. He notes that the Ryzen 5 3600 doesn't have this issue and considers it a better gaming processor than the 9600K.

Actually, I DO know why there is such a complete lack of clarity about what a CPU does and does not contribute to gaming. When Ryzen first hit the scene, it offered 100% more cores than Intel's flagship mainstream desktop part and whooped it at a whole lot of things. Intel's equivalent 8-core CPU was an HEDT part that cost $700 for the chip alone, almost double the AMD offering.

Before this point, every enthusiast was well aware that gaming performance was dictated by the GPU, not the CPU (which it still is), with the exception of artificially induced bottlenecks that didn't represent real life ... this was well known, especially the "not real life" part.

Intel needed a new marketing (propaganda) strategy in light of Ryzen, so they emphasized the importance of the CPU to gaming (to cast the widest net possible) and incentivized reviewers to focus their CPU comparisons with Ryzen on artificially bottlenecked gaming instead of CPU work.

You can still see the effect today, with some reviewers taking workstation-targeted CPUs, doing 75% of their testing on bottlenecked gaming, and barely testing the CPU in the applications it was designed for. 12-, 16- and 18-core CPUs are not designed for gaming; that is not their main purpose, yet the reviews still focus almost entirely on bottlenecked gaming that isn't even real world ...

Don't underestimate Intel's ability to make people think the way they want them to think. They fool their investors constantly with paid-for headlines.

They didn't hire an army of tech writers for nothing ... (they actually did that)