My post is addressed to Tim, the writer of this article, and also to the forum.

Since your articles about DLSS have generated a lot of controversy, I think you should give us more basic information about what you are testing and how, such as:

1. How many of the tested games are sponsored, paid for, or supported by Nvidia in any way, including unofficial, behind-the-scenes Nvidia support?

I want to know whether a game is sponsored, publicly or behind the scenes, by any corporation, such as Nvidia, AMD or Intel. Cyberpunk 2077 is the most prominent example. Nvidia is so in bed with the CP2077 developers, without officially admitting it, that CDPR could be considered an Nvidia subsidiary. I want to know whether DLSS is so "superior" especially in games that are in fact "sponsored" or "supported" by Nvidia, as with Cyberpunk 2077, Portal, etc. (with Nvidia doing all the work for "free" for those developers). Because, in reality, customers are paying revoltingly higher prices for this "free" DLSS support from Nvidia.

2. What video cards did you use for this test? Why only one Nvidia card? Why no AMD card? Did you find any games running FSR2 better on AMD cards than on Nvidia? Or did you limit yourself to testing FSR and DLSS only on this Nvidia card? I ask because many games are sponsored by Nvidia, and Nvidia cards may also run FSR worse than their AMD counterparts. I find it odd that you neither mentioned this nor took it into account.

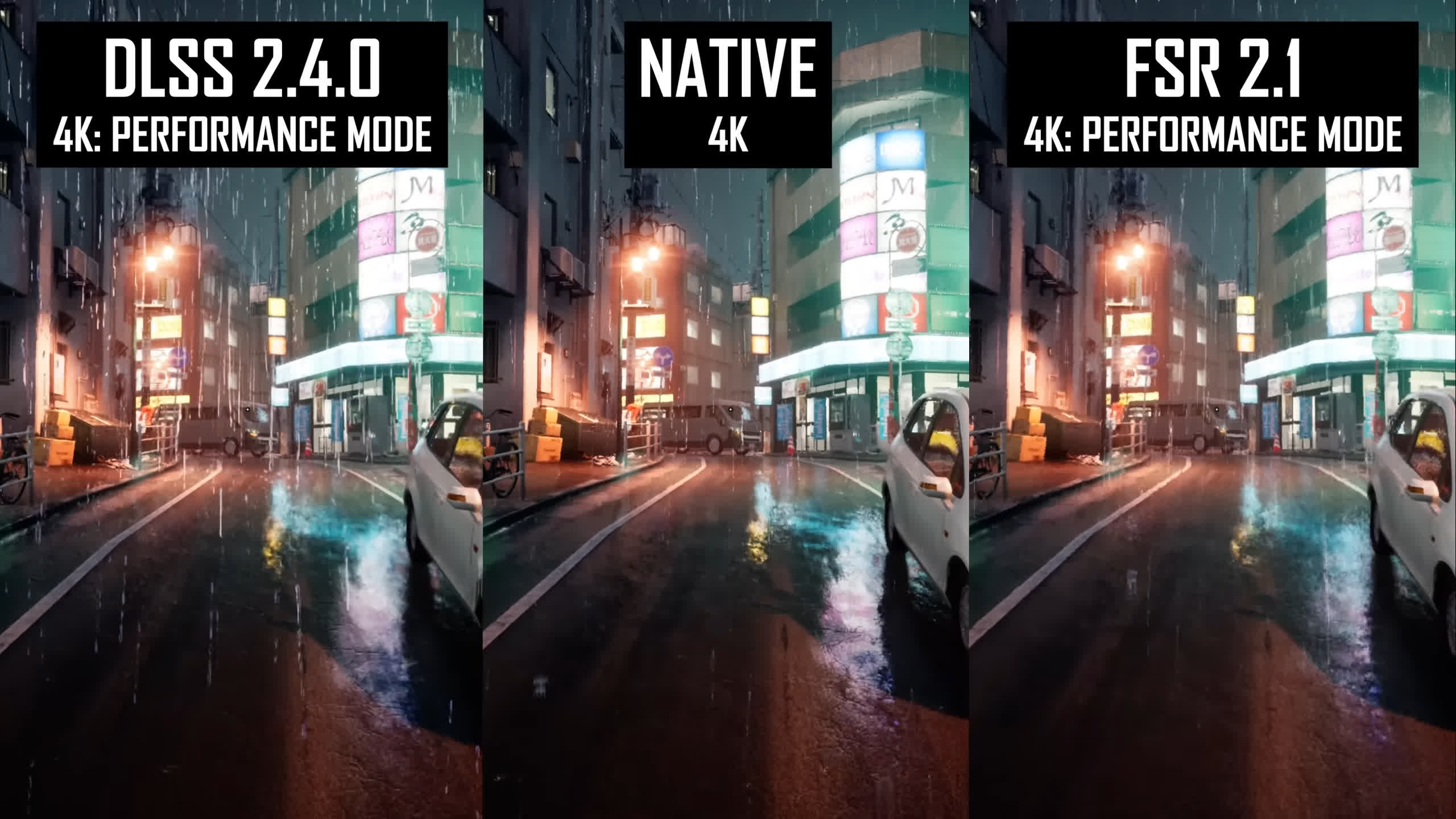

3. Quoting: "4K using Quality mode upscaling, 4K using Performance mode upscaling, and 1440p using Quality mode upscaling".

Can you specify exactly on which Nvidia video cards DLSS2 runs better than native?

Because many Nvidia video cards, such as those with 10GB of VRAM or less, have a serious VRAM limitation and cannot run 2K or 4K at high or ultra settings without degraded image quality or, worse, stuttering.

8GB vs 16GB VRAM: RTX 3070 vs Radeon 6800

This narrows the claims of near-universal DLSS "superiority" on Nvidia video cards to only the 4080 and 4090, and for some games to the 4070 (Ti), 3080 12GB, and 3090 (Ti).

Hogwarts Legacy clearly showed degraded image quality with less than 10-12GB of VRAM, so even if DLSS is claimed to be very good, in reality the gameplay experience is poor.

Can you show us on a 3070 how superior DLSS2 is versus AMD FSR2 versus native at 4K high and ultra settings in Hogwarts Legacy? The Last of Us could be another example worth checking and clarifying. And run the same games on the AMD video cards that compete with it on price.

4. Can you mention, or at least estimate, the price cost of Nvidia DLSS2 and DLSS3 versus AMD FSR2 (and soon FSR3), which is available on both AMD and Nvidia cards, so we get a better and more objective picture of whether it is really worth the price difference? This matters a lot when you can benefit from something open, easier and almost free to implement like FSR2 (soon FSR3) instead of having to pay extra for Nvidia's proprietary, closed DLSS.

5. The share of Nvidia 1xxx cards on the Steam charts is high.

So, can you show us how superior DLSS2 is on a 1080 (Ti)? Or on a 1660 Ti?

And show us how good FSR2 is on the same Nvidia 1xxx cards.

Quoting your own statement:

"Over the past few weeks we've been looking at DLSS 2 and FSR 2 to determine which upscaling technology is superior in terms of performance but also in terms of visual quality using a large variety of games. On the latter, the conclusion was that DLSS is almost universally superior when it comes to image quality, extending its lead at lower render resolutions."

Thus, we want to see how "superior" DLSS2 is versus FSR on GTX 1xxx video cards. If it is not superior, then that is essential to know.

Can you show us a graph of how many FPS FSR2 adds and how many FPS DLSS2 adds? Or its "superior" quality? Even if DLSS adds 0 FPS and 0 quality, you have to mention it, not disregard it.

That way we can form a better-informed opinion about the claim that "DLSS is almost universally superior when it comes to image quality, extending its lead at lower render resolutions."

Because on Nvidia's own GTX 1xxx cards, AMD FSR2 is infinitely more "superior" in ANY game (any positive FSR2 gain is more than Nvidia DLSS2's 0).

6. Why did you choose to mention only the strong points of DLSS2 in your latest articles and fail to mention even one of its major weak points?

Do you at least acknowledge the irony that FSR2 works on more Nvidia cards than Nvidia's own DLSS versions, which work only on some Nvidia video cards, mostly because Nvidia intentionally chose a market-fragmentation, dark-pattern business model? Why do you choose to stay silent about this instead of saying it loudly, and mentioning the weak points of DLSS every time you mention its superiority?

Otherwise it seems that you limited yourself to writing a PR advertorial for Nvidia DLSS2's "superior" quality.

I find these five or six issues to be really concerning points in this article, and that is why, especially because of point 6, I consider this article low quality at the very least. Can we consider an article consistent when it shows only the strong points of a product or technology but fails to mention its weak points? Is DLSS technology as superior, or as perfect, as Nvidia PR claims? You make it very hard to tell when tons of articles, including this one, write almost only about the strong points of Nvidia's superior DLSS while being hit by Nvidia Amnesia and DO NOT acknowledge or mention Nvidia's weak points, which are often evident.

A piece of advice: it is better to present both the strengths and the weaknesses of a product or technology, and the same for the competing product, and to let readers form their own conclusions instead of leading them to your own narrative.

I did not read or hear you say anything about the strong points of FSR2, even though you compare it against DLSS2 in this article and in the previous one. I want to know the strong and weak points of both DLSS2 and FSR2. Failing to cover both of them and focusing only on DLSS2's "strong" points shows a lack of consistency.

A picture is worth a thousand words.

How do you think your praise of DLSS2's "superiority" would look next to a graph showing a 0 (ZERO) FPS increase from DLSS2 on GTX 1xxx versus 50-75% more FPS than native with AMD FSR?
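For clarity, such a graph would presumably express each upscaler's gain relative to native FPS. The formula below is the usual percentage uplift; the FPS figures in the example are hypothetical, chosen only to illustrate the arithmetic, and are not measured results:

$$
\text{uplift} = \frac{\text{FPS}_{\text{upscaled}} - \text{FPS}_{\text{native}}}{\text{FPS}_{\text{native}}} \times 100\%
$$

For example, a hypothetical GTX 1xxx card going from 40 FPS native to 60 FPS with FSR2 gives $\frac{60 - 40}{40} \times 100\% = 50\%$, while DLSS2, which does not run on that card, stays at $0\%$.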

Overall, I find AMD FSR very good, because it is available on all AMD, Intel and Nvidia video cards, and on the latest consoles too. FSR is available even on older-generation Nvidia cards for which Nvidia DLSS is not available.

And I suggest that Tim acknowledge the following arguments in his articles comparing Nvidia and AMD video cards.

I am quoting Haiart, a user from another forum, who very clearly pointed out the weak points of DLSS as deliberately planned obsolescence and as an Nvidia dark-pattern business model:

"It is obvious that NVIDIA could have made DLSS3 work with Ampere and Turing, or DLSS 2 work on GTX 1xxx. NVIDIA didn't make it available because of market segmentation. If you truly believe that NVIDIA couldn't, this also means that AMD is more technically advanced for being able to make it work not only on AMD, but on NVIDIA and Intel hardware too across all their generations."

As I said in a previous post, I'll prepare an interesting post about the "triple standards" of many hardware reviewers who praise certain strengths of hardware components they get for free to review (others are paid, while others are blackmailed), yet at the same time blatantly disregard the huge issues or limitations of the same components, just to suit their narrative, or rather the manufacturer's narrative.

The tragedy is that more and more of these "reviewers" are hit by this Intel, AMD and especially Nvidia Amnesia, and fail to apply the same skewed "standards" or methodology when reviewing or comparing hardware from the competition.

Thus, unfortunately, many hardware reviewers have gradually become second-class PR tools for the big tech corporations instead of formulating an HONEST, personal, intelligently argued opinion. And some of them have become so delusional that they claim to like it instead of fighting against it.

P.S. About this DLSS "drama": I decided to take a stand because too much DLSS is shoved in front of readers' and users' eyes by Nvidia PR and by its most passionate reviewers and users, yet quite often their arguments lack consistency or do not apply the same standards or methodology to competing products.