Intel is said to be working on a Broadwell chip that will pack a whopping 18 processing cores. The chip, which would feature the company's highest core count to date, won’t arrive until sometime in 2015, according to a report from VR-Zone.

Instead of just speeding up the cores, Intel will simply pile more of them on each die. That may not immediately be useful unless you actually have software designed to take advantage of multiple cores, but I digress.

The 18-core Broadwell-EP or Broadwell-EX Xeon chip will be based on the company’s pending 14-nanometer process, although there will be multiple variants. For example, one is expected to be an eight- to 10-core high-performance desktop/workstation part, while another with 12 to 16 cores will target enterprise servers.

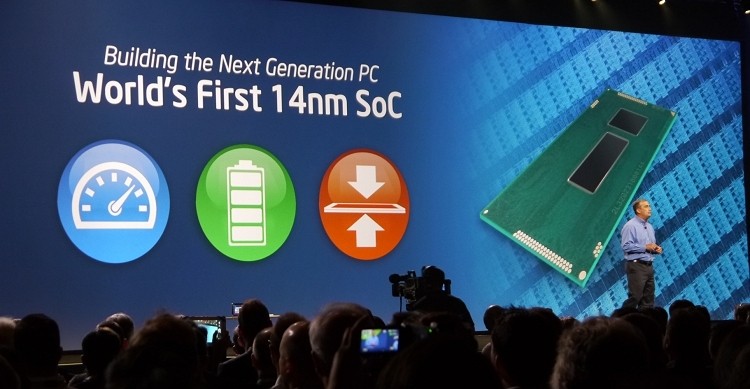

The first consumer Broadwell chips are expected sometime in the first half of next year as the second processor family built on the Haswell microarchitecture. In keeping with Intel’s tick-tock development model, Broadwell is the "tick": it keeps the existing microarchitecture and shrinks the manufacturing process to the aforementioned 14 nanometers.

During a public demonstration at IDF back in September, CEO Brian Krzanich said the chip would allow systems to provide a 30 percent improvement in power use compared to Haswell.

In related news, CPU World is reporting that Intel is also planning to bring Broadwell to mobile devices. With a TDP of as low as 4.5 watts, such a chip could be a perfect fit for tablet use. Naturally, Intel declined to comment on the matter.

https://www.techspot.com/news/55074-future-intel-broadwell-chip-could-pack-up-to-18-cores.html