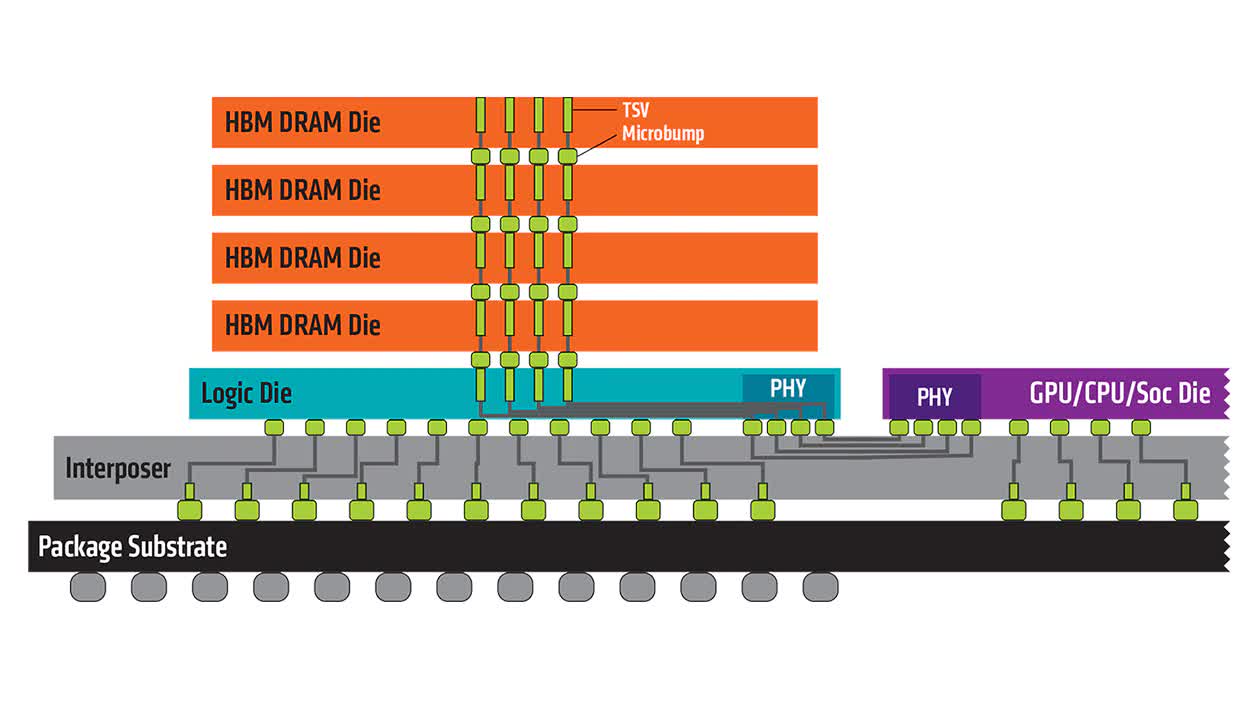

In context: The first iteration of high-bandwidth memory (HBM) was somewhat limited, allowing speeds of only up to 128 GB/s per stack. It also came with one major caveat: graphics cards that used HBM1 were capped at 4 GB of memory due to physical limitations. Over time, however, HBM manufacturers such as SK Hynix and Samsung have addressed HBM's shortcomings.

HBM2 doubled potential speeds to 256 GB/s per stack and maximum capacity to 8 GB. In 2018, HBM2 received a minor update called HBM2E, which further increased capacity limits to 24 GB and brought another speed increase, eventually hitting 460 GB/s per stack at its peak.

When HBM3 rolled out, the speed nearly doubled again, allowing for a maximum of 819 GB/s per stack. Even more impressive, capacities increased nearly threefold, from 24 GB to 64 GB. Like HBM2, HBM3 saw a mid-life upgrade, HBM3E, which increased the theoretical speeds up to 1.2 TB/s per stack.

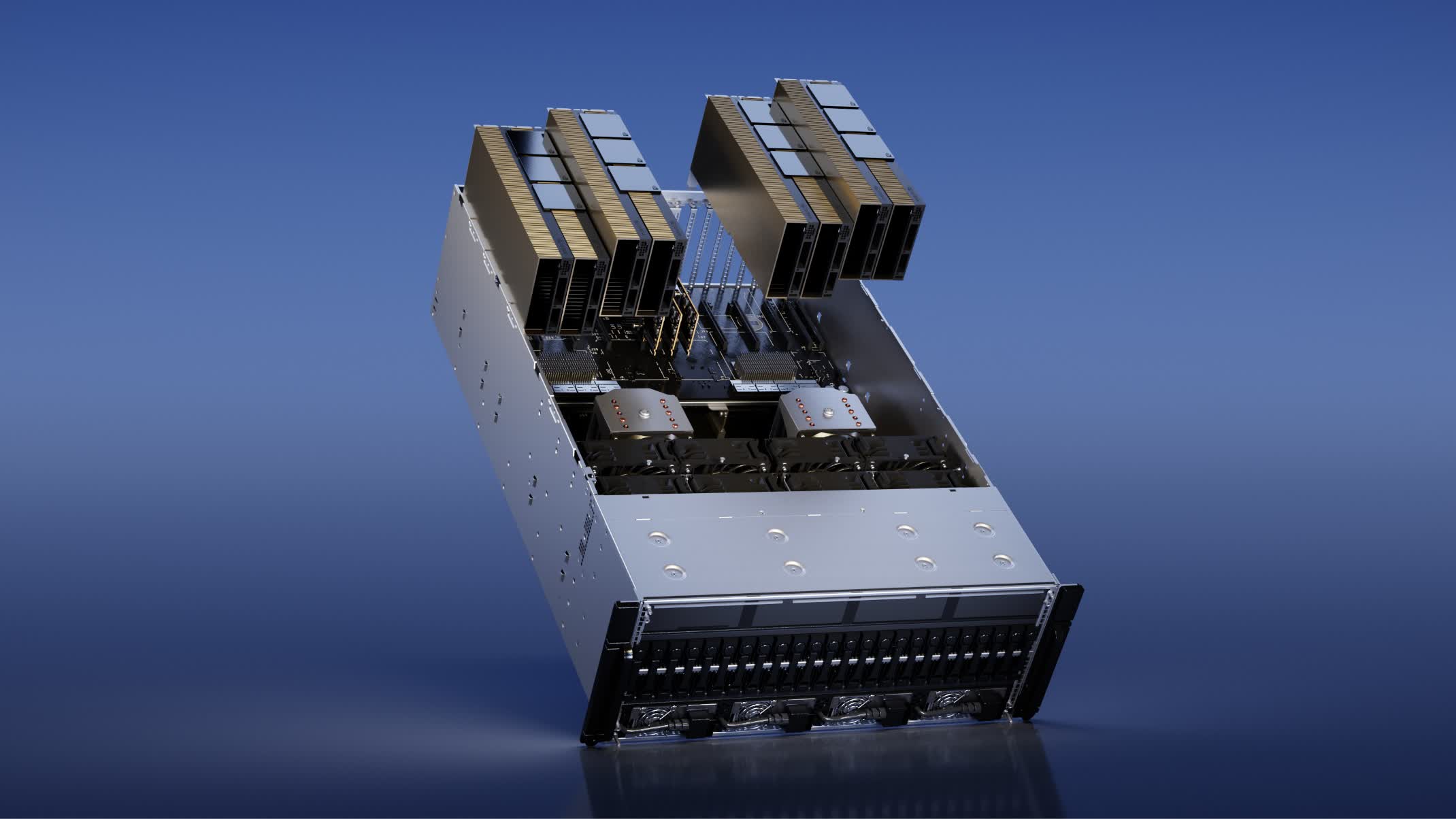

Along the way, HBM was gradually replaced in consumer-grade graphics cards by more affordable GDDR memory. High-bandwidth memory instead became a standard in data centers, with manufacturers of workplace-focused cards opting to use the much faster interface.

Throughout the various updates and improvements, HBM retained the same 1024-bit (per stack) interface in all its iterations. According to a report out of Korea, this may finally change when HBM4 reaches the market. If the claims prove true, the memory interface will double from 1024-bit to 2048-bit.

Jumping to a 2048-bit interface could theoretically double transfer speeds again. Unfortunately, memory manufacturers might be unable to maintain the same per-pin transfer rates with HBM4 as with HBM3E, which would reduce the gain. However, a wider memory interface would allow manufacturers to use fewer stacks in a card.
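As a rough illustration of why interface width and transfer rate both matter, peak per-stack bandwidth can be approximated as the bus width multiplied by the per-pin data rate, divided by eight to convert bits to bytes. The sketch below is a back-of-the-envelope calculation, not a vendor formula: the function name is hypothetical, the 6.4 Gb/s pin rate is an assumption back-derived from the 819 GB/s HBM3 figure above, and the HBM4 rates are purely illustrative since none have been announced.

```python
def stack_bandwidth_gbs(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Approximate peak bandwidth of one stack in GB/s.

    bus_width_bits: interface width of the stack (e.g. 1024).
    pin_rate_gbps:  data rate per pin in Gb/s (assumed value, see lead-in).
    """
    return bus_width_bits * pin_rate_gbps / 8  # bits -> bytes


# HBM3: 1024-bit interface at an assumed 6.4 Gb/s per pin.
hbm3 = stack_bandwidth_gbs(1024, 6.4)

# Hypothetical HBM4 that keeps the same pin rate on a 2048-bit interface.
hbm4_same_rate = stack_bandwidth_gbs(2048, 6.4)

# Hypothetical HBM4 whose pin rate drops by half: the wider bus only breaks even.
hbm4_half_rate = stack_bandwidth_gbs(2048, 3.2)

print(f"HBM3:                 {hbm3:.1f} GB/s")
print(f"HBM4 (same pin rate): {hbm4_same_rate:.1f} GB/s")
print(f"HBM4 (half pin rate): {hbm4_half_rate:.1f} GB/s")
```

Under these assumptions, doubling the width doubles bandwidth only if the pin rate holds; any drop in pin rate eats into that gain proportionally.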

For instance, Nvidia's flagship H100 AI card currently uses six 1024-bit known good stacked dies, which allows for a 6144-bit interface. If the memory interface doubled to 2048-bit, Nvidia could theoretically halve the number of stacks to three and achieve the same performance. Of course, it is unclear which path manufacturers will take, as HBM4 is almost certainly years away from mass production.
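The stack-count arithmetic in this example can be sketched in a few lines. This is only an illustration of the article's numbers: the helper name is hypothetical, and equal performance from fewer stacks additionally assumes the per-pin transfer rate stays the same.

```python
def stacks_needed(total_bus_bits: int, per_stack_bits: int) -> int:
    """Number of stacks required to reach a total interface width (rounded up)."""
    return -(-total_bus_bits // per_stack_bits)  # ceiling division


# Total interface width from the article's example: six 1024-bit stacks.
total_bits = 6 * 1024

print(total_bits)                       # total width in bits
print(stacks_needed(total_bits, 1024))  # stacks at 1024 bits each
print(stacks_needed(total_bits, 2048))  # stacks at 2048 bits each
```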

Both SK Hynix and Samsung believe they will be able to achieve a "100% yield" with HBM4 when they begin to manufacture it. Only time will tell if the reports hold water, so take the news with a grain of salt.

https://www.techspot.com/news/100174-hbm4-could-finally-double-memory-bandwidth-2048-bit.html