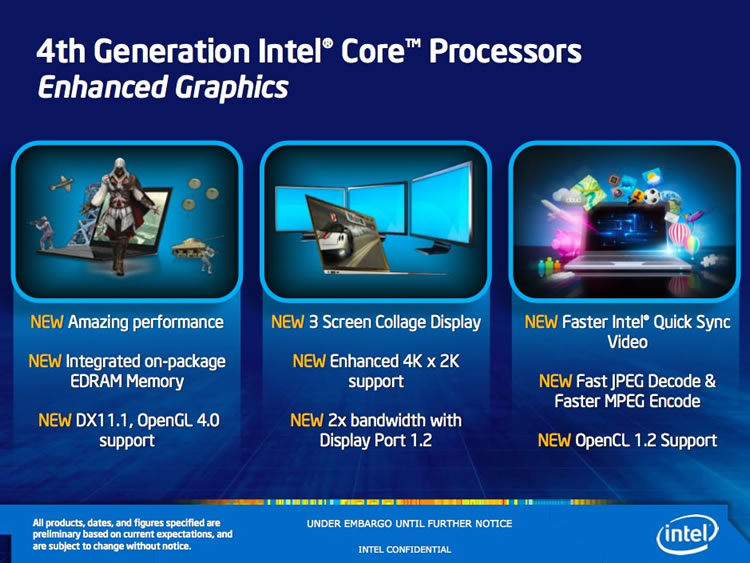

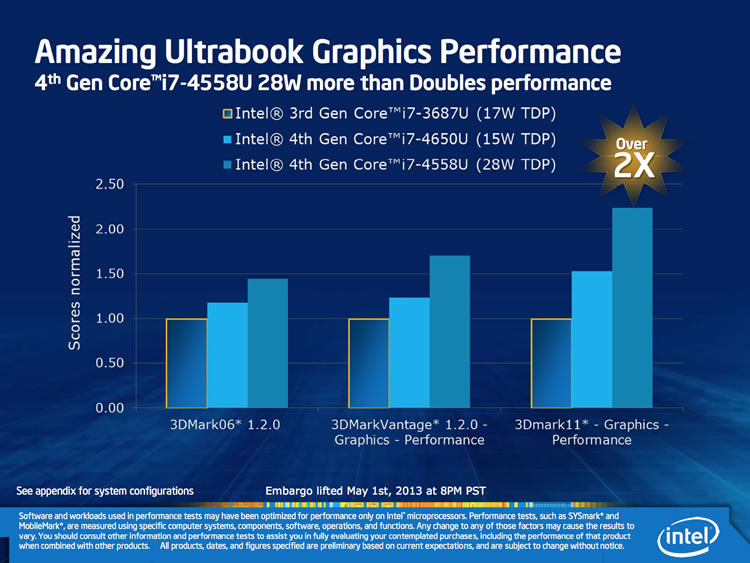

Intel has disclosed new information about its upcoming Haswell processors, detailing the graphics specs and potential performance improvements versus today's integrated HD graphics. Intel is giving the new graphics engine a brand name, "Iris", and touting 2x to 3x the performance of HD 4000 graphics.

In the past few months we've come to learn a lot about Haswell. As with Ivy Bridge, efficiency and power consumption have taken priority over raw processing power, and thus Haswell is expected to be only marginally faster than the current generation of Intel Core CPUs. On the graphics front, however, it's a different story.

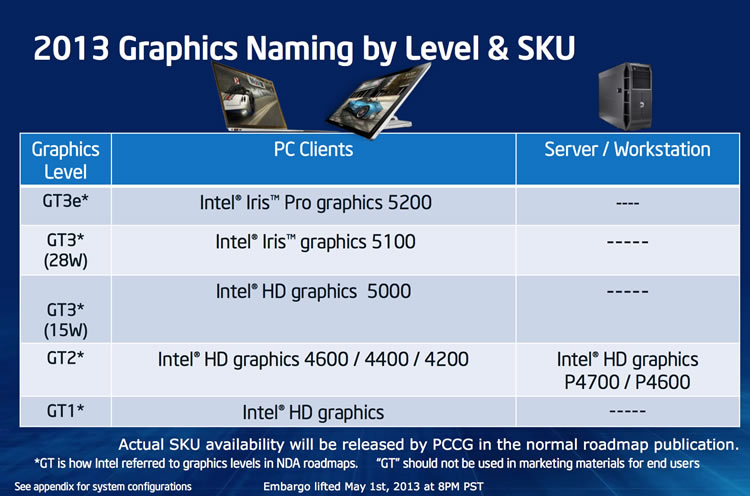

There will be five different graphics configurations, in order of performance: Iris Pro 5200, Iris 5100, HD 5000, HD 4600/4400/4200 and HD graphics. This may become even more confusing than the current line-up, although for the most part performance-oriented desktop and laptop CPUs simply ship with HD 4000 (the fastest integrated graphics from Intel), while smaller ultrabooks and tablets get the more specialized configurations.

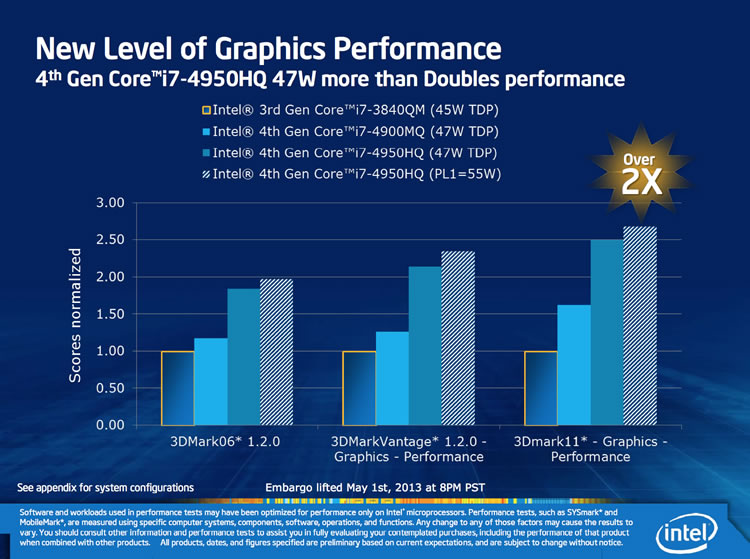

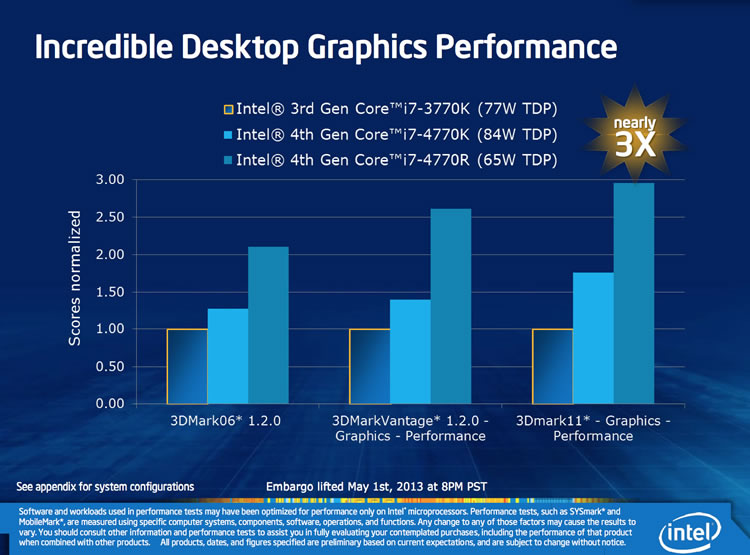

Here's what Intel is expecting from Iris (don't miss our extended note about performance further below):

Three times the performance of HD 4000 sounds like an amazing feat for a single generational jump. As before, this has the potential to keep eating into the budget discrete GPU market. It's no coincidence that AMD and Nvidia have nearly retired their sub-$100 graphics cards, since integrated graphics have been able to cope with typical workloads such as HD video playback, usually only falling short when it comes to gaming.

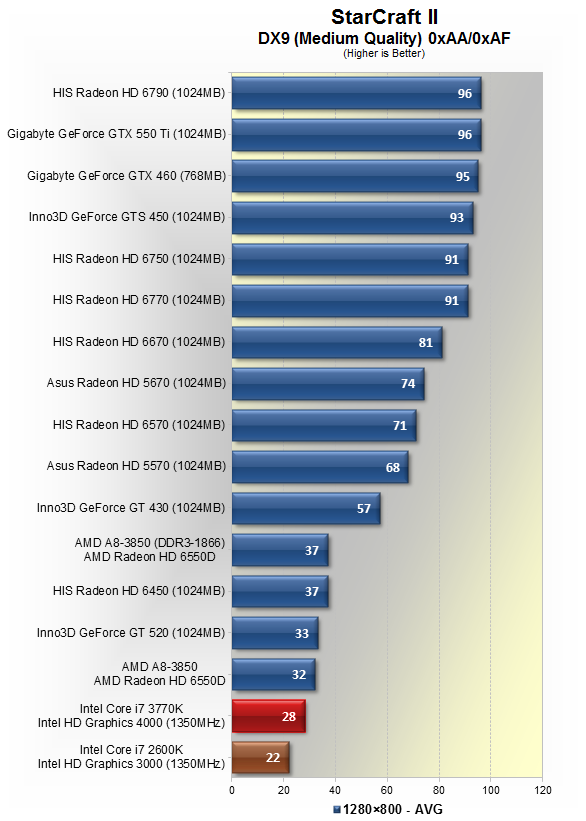

When we reviewed Ivy Bridge about a year ago, we tested the Core i7-3770K's on-die GPU performance. The results were disappointing to say the least, but if Intel is able to double or even triple performance, it's going to be a very interesting landscape for PC gaming moving forward.

Starcraft 2 running at 1280x800 (medium quality) on HD 4000. More games here.