No, it isn't. Digital Foundry has done dozens of tests of games running on PCs vs consoles, and they found the consoles to perform about the same as an RTX 2070 Super on average (which performs the same as an RX 6600 XT), or like an RTX 2080 at most in a few absolute best-case scenarios. And those are tests running actual, released games in real time with a framerate counter on the screen. Any "console optimization" or "Windows performance penalty" (which is complete nonsense to begin with) is already accounted for in those comparisons.

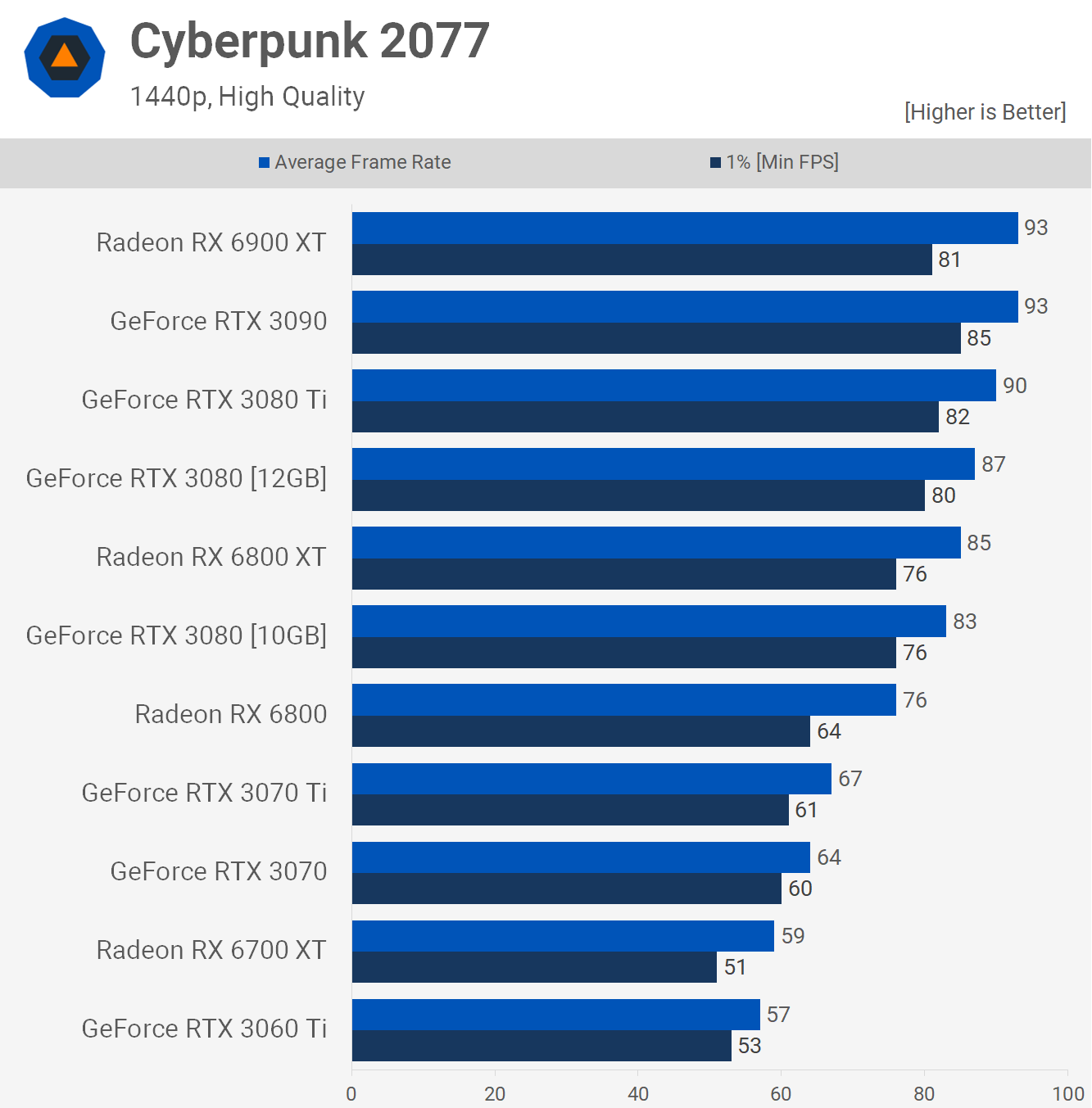

Complete and utter nonsense with no source. You can see from reviews all over the web, including right here on TechSpot, that even a 6700 XT already runs the game significantly better than the PS5 does at 1440p (which is the resolution the PS5 uses in performance mode).

Also, using one single game (a questionable port with VRAM issues) to compare PC and consoles, instead of the myriad of tests done by DF, is just hilarious.