A hot potato: There were plenty of Super Bowl commercials yesterday, including one involving a Tesla. But this wasn't a promotion from the electric vehicle company; it was a 30-second criticism filled with simulated child deaths from an organization dedicated to banning the automaker's Full Self-Driving (FSD) software.

The Super Bowl ad, aired in Washington, DC, Austin, Tallahassee, Albany, Atlanta, and Sacramento, was the work of The Dawn Project, an organization calling for software in critical computer-controlled systems to be replaced with unhackable alternatives that never fail.

The ad shows a Tesla Model 3 with FSD engaged (according to The Dawn Project) running down a child-size mannequin dressed as a child on a crosswalk, swerving into oncoming traffic, hitting a stroller, going past stopped school buses, ignoring 'do not enter' signs, and driving on the wrong side of the road. The voiceover then claims Tesla is endangering the public with its "deceptive marketing" and "woefully inept engineering."

Exactly. This will greatly increase public awareness that a Tesla can drive itself (supervised for now).

— Elon Musk (@elonmusk) February 12, 2023

CEO Elon Musk didn't seem concerned by the commercial, tweeting that it would greatly increase public awareness that Teslas can drive themselves (with supervision). He also replied with a laughing emoji to a Tesla fan account that claimed the ad was fake.

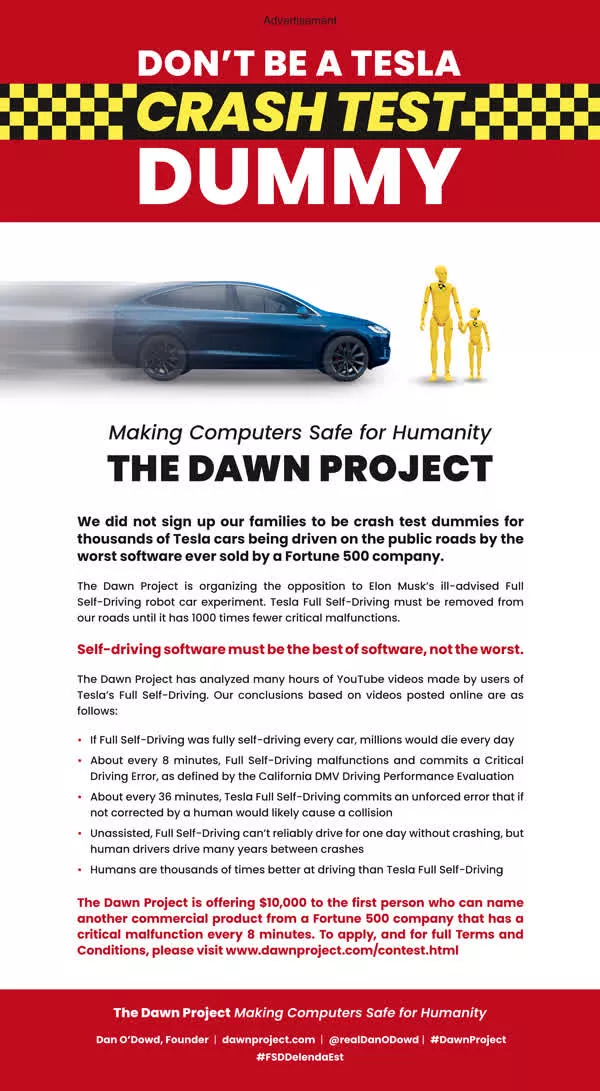

This isn't the first time The Dawn Project has taken out a public notice slamming Tesla. Back in January 2022, it paid for a full-page ad in the New York Times claiming FSD software malfunctions and commits critical driving errors every 8 minutes. The ad demanded FSD be removed from public roads until it has "1,000 times fewer critical malfunctions," and offered $10,000 to the first person who could name "another commercial product from a Fortune 500 company that has a critical malfunction every 8 minutes."

The following August, The Dawn Project released a video that again showed four child-size mannequins wearing children's clothes being hit by Tesla vehicles. Tesla sent a cease-and-desist letter on August 11 to Dan O'Dowd, founder of The Dawn Project, demanding the video be taken down. It also asked O'Dowd to issue a public retraction and send all material from the video to Tesla within 24 hours of receiving the letter. O'Dowd responded in a blog post that called Musk "just another crybaby hiding behind his lawyer's skirt."

O'Dowd is also the CEO of Green Hills Software, which develops operating systems and programming tools for embedded systems. Its real-time OS and other safety software are used in BMW's iX vehicles and other cars from various automakers.

Detractors have called into question The Dawn Project's testing methodology in these ads, while others note that one of Green Hills Software's customers is the Intel-owned Mobileye, which makes chips for self-driving software.

It's been a mixed year so far for Tesla. While its director of software admitted that the famous 2016 Autopilot demo video was staged, and Apple co-founder Steve Wozniak slammed the company and Musk over claims they robbed him and his family, Tesla's Autopilot system was exonerated after NTSB investigators found it was not engaged at the time of a fatal crash in 2021.

https://www.techspot.com/news/97587-super-bowl-ad-shows-alleged-fsd-enabled-tesla.html