Bottom line: Nvidia sees the race to develop generative AIs like ChatGPT and Midjourney as the iPhone moment for artificial intelligence. More importantly, the company seems well-positioned to capitalize on the surging demand for GPUs and AI accelerators, and this has sent its valuation close to $1 trillion this week. Its latest financial report paints a strong outlook, but it's also a reminder that pleasing gamers isn't a priority for the company. And it probably won't be for as long as the tech industry is obsessed with AI.

Almost every business out there is trying to jump on the AI train, and this is making Nvidia and its investors happier than ever. So happy, in fact, that the company feels confident it can soon become the first chipmaker to be valued at more than $1 trillion, thanks to the enormous demand for data center GPUs and AI accelerators. Investors have sent the share price soaring, and it's hovering around $384 (up more than 25 percent from Tuesday) as of this writing.

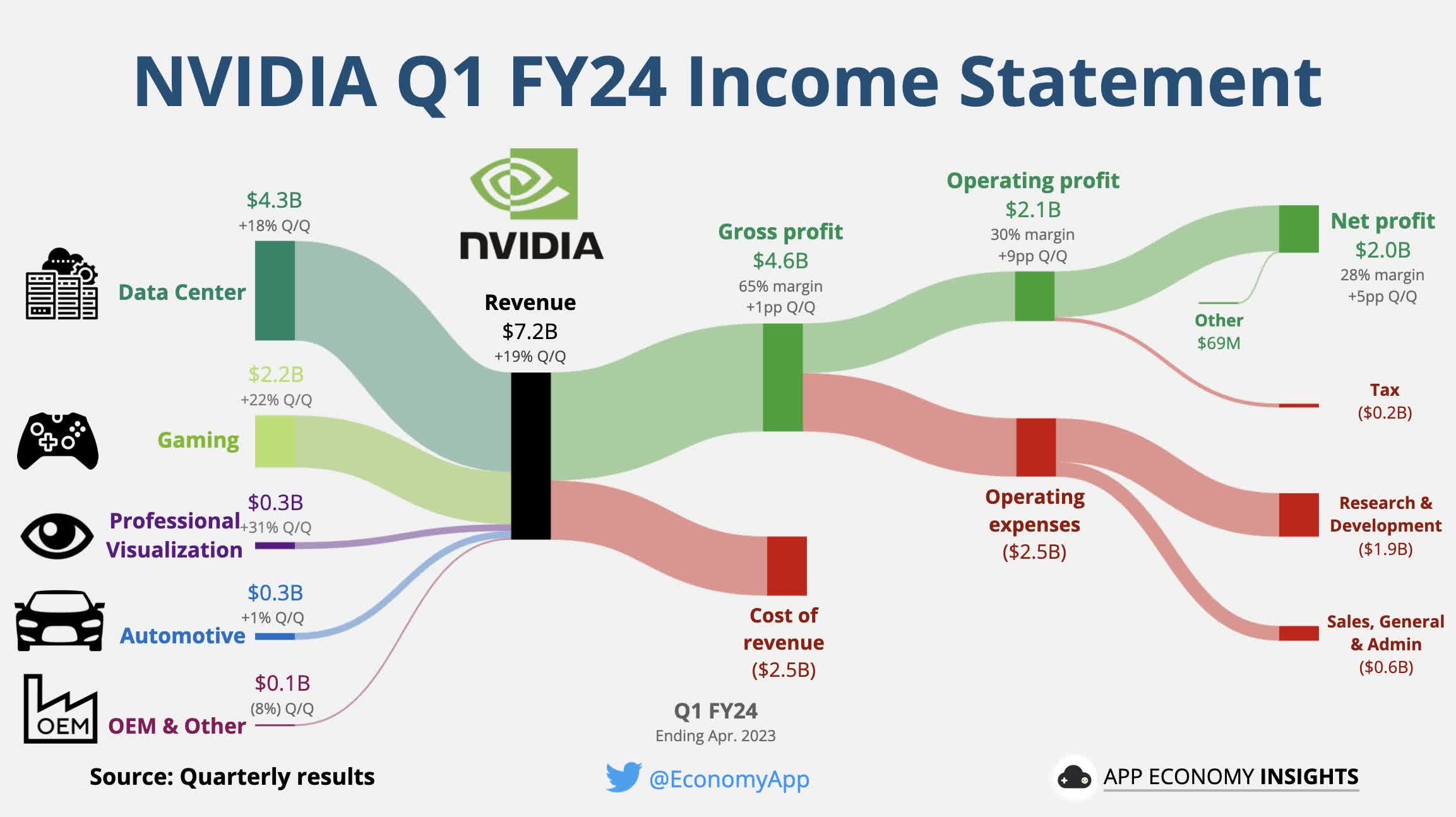

AI is one of the main reasons Nvidia's data center business grew 14 percent during the first three months of this year compared to the same period last year. For reference, Intel's Data Center and AI Group recorded a staggering 39 percent drop, and AMD's data center division delivered flat revenue compared to the same quarter in 2022.

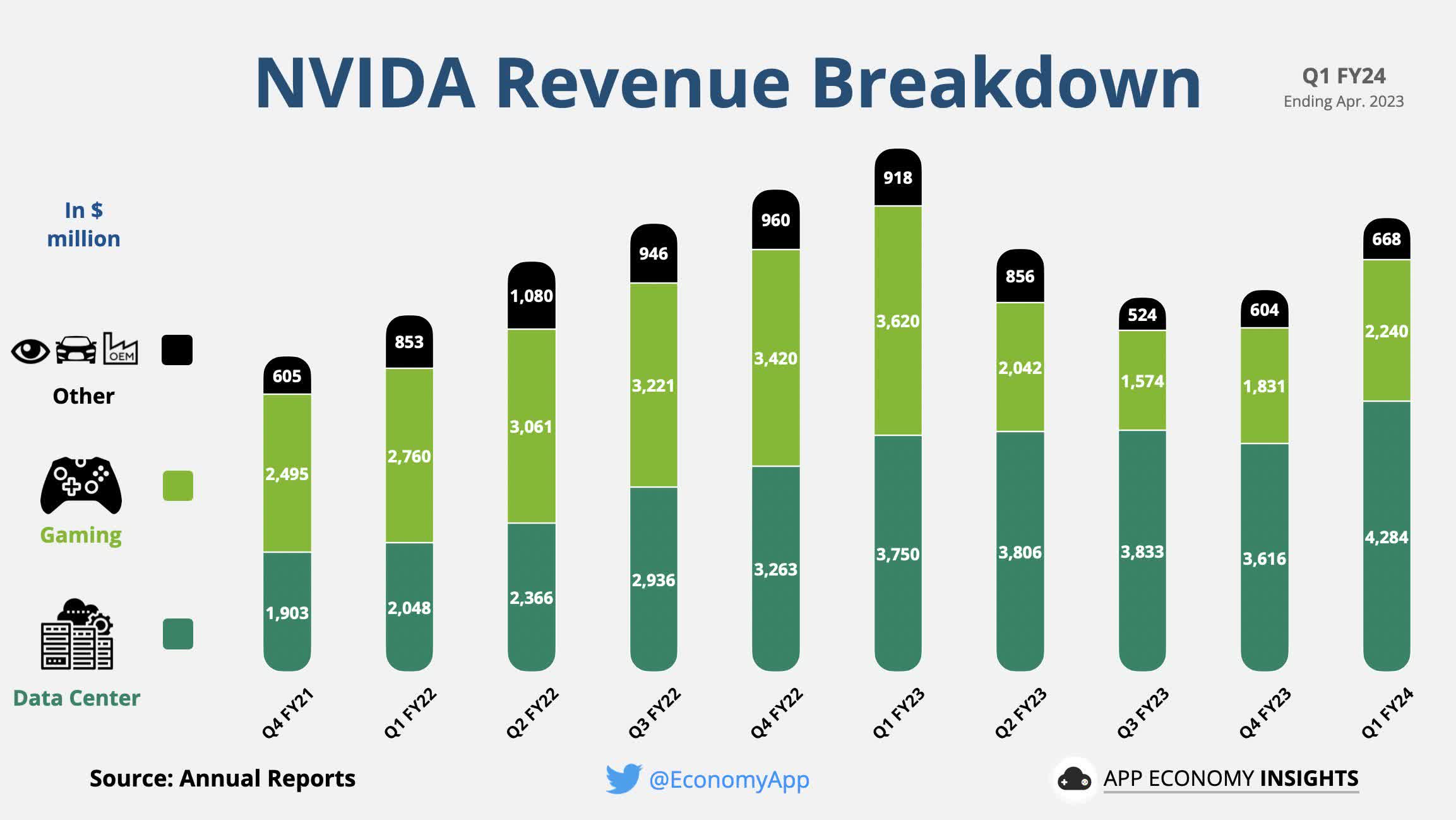

As you can see from the graph above, the data center business is a big deal for Team Green, as it has accounted for more than half of the company's revenue for almost a year now. The company recorded $4.28 billion in sales to enterprise customers, which is also above the $3.9 billion figure expected by analysts.

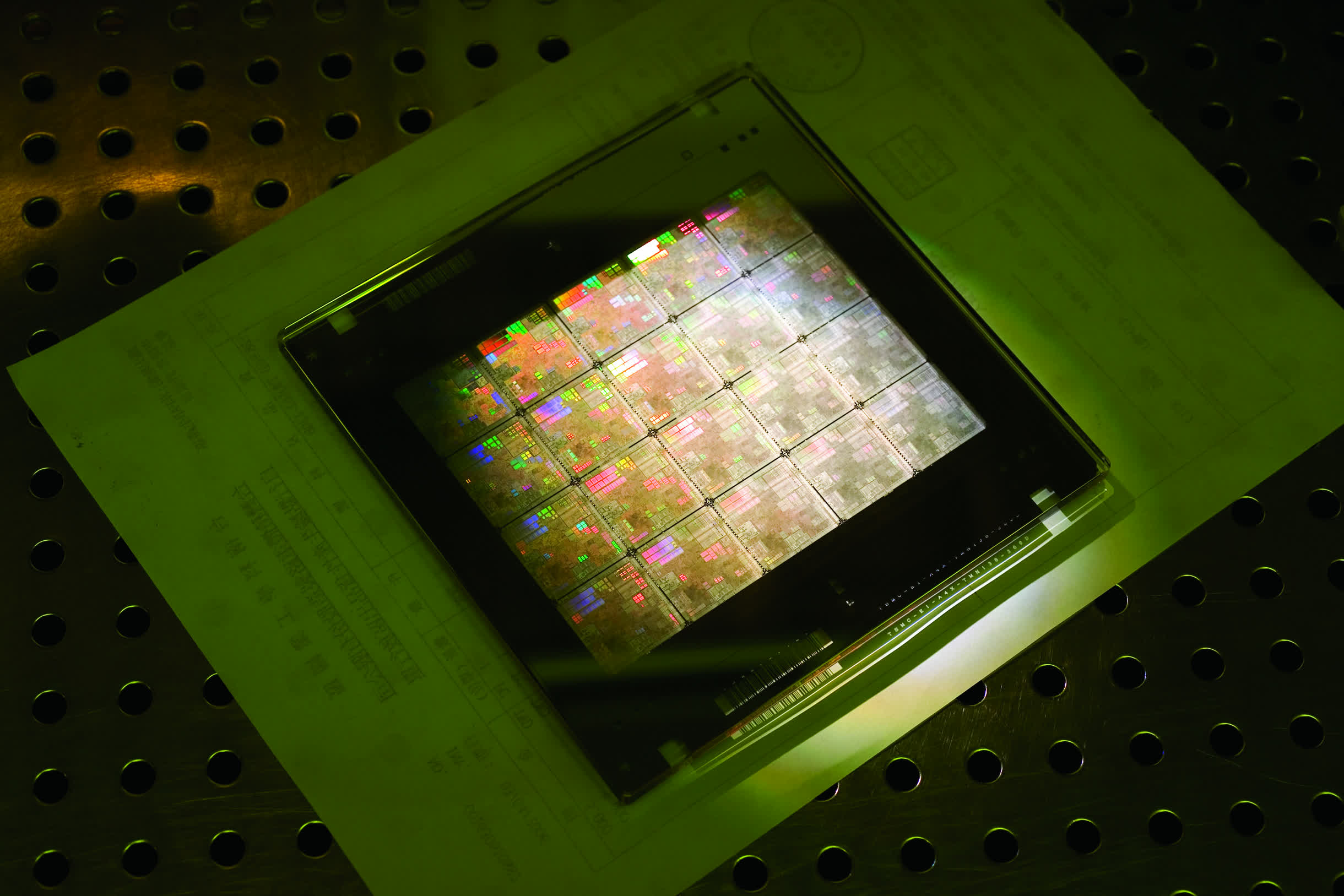

This partly explains why Nvidia has shifted some production capacity away from GeForce RTX 4090 GPUs to make more Hopper-based H100 enterprise GPUs. Companies like Microsoft, Oracle, OpenAI, Twitter, Amazon, and Google are all buying large amounts of the latter product to train and run generative AIs. To put things into perspective, the RTX 4090 sells for around $1,600, while used H100 cards sell for over $40,000 on eBay. This makes even Intel's 4th-gen Xeon Scalable Sapphire Rapids CPUs look cheap at $17,000.

Some companies like Microsoft, Meta, Amazon, and Google are investing in custom silicon for their AI efforts, but that won't curb their appetite for Nvidia GPUs anytime soon. During an investor call Wednesday, Nvidia CEO Jensen Huang explained that the company has put 15 years of investment into hardware and software development, which placed it in the right position at the right time to capitalize on a large investment cycle from companies big and small working on AI-based services.

Jensen is no doubt happy about generative AIs becoming the "primary workload of most of the world's data centers," but gamers are understandably less joyful about Nvidia's strategy with the RTX 40 series graphics cards. Between the high prices and low VRAM amounts on some of the new models, PC enthusiasts aren't exactly rushing to upgrade. That prompted Nvidia to announce an RTX 4060 Ti variant with a larger frame buffer, and we're also seeing small price drops on existing models.

Nvidia's gaming revenue for the first quarter of this fiscal year was $2.24 billion, a 38 percent year-over-year drop. The company blames the overall economic climate and the relatively slow rollout of RTX 40 series GPUs for the decline. However, our own Steven Walton took a look at the recently launched RTX 4060 Ti and found it to be overpriced at $400. Higher-end models cost $600 and $800, so they aren't exactly appetizing options for gamers even though they do offer more value for the money.

Also read: Is Nvidia now a software stock? The competitive advantage of CUDA

One thing is for sure: the AI frenzy is transforming the tech industry, and Nvidia stands to benefit the most thanks to the CUDA software stack, which is exclusive to its hardware offerings. Rivals have so far failed to create a true alternative and convince others in the industry to use it, though companies like AMD and Intel have certainly tried with toolkits like ROCm and oneAPI.

As a result, Nvidia is optimistic about the future and expects to generate around $11 billion in revenue in the current fiscal quarter. This would be a 64 percent increase year-over-year and mark a new record in terms of quarterly revenue for the Jensen-powered company. We'll have to wait and see.

https://www.techspot.com/news/98823-ai-chip-boom-propelling-nvidia-new-heights-gamers.html