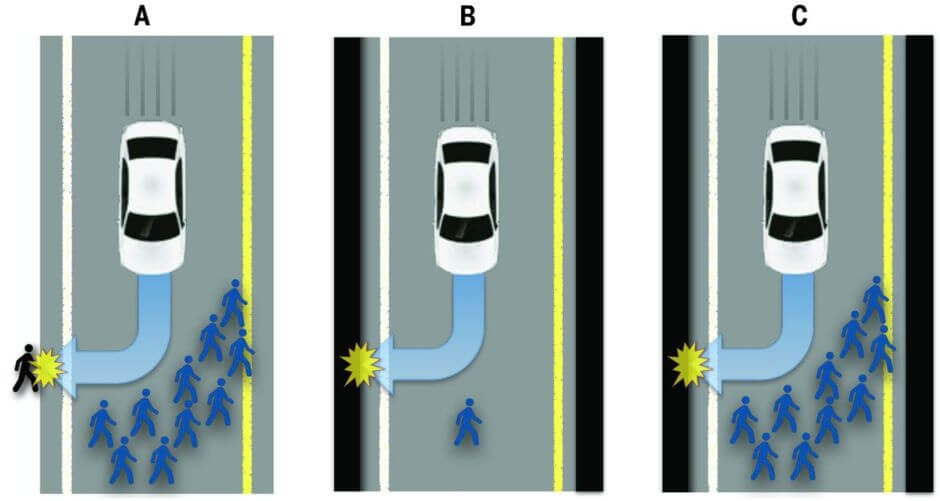

One of the more interesting moral questions posed by self-driving vehicles concerns self-sacrifice. Specifically, if a situation arose where an autonomous car could save numerous lives by sacrificing its occupants, should it choose the lesser of two evils and kill its passengers?

According to a recent study in Science, “The social dilemma of autonomous vehicles,” 75 percent of participants said self-driving cars should be programmed to prioritize minimizing the number of public deaths in such situations, even if it means those inside the car perish.

While the statement sounds noble, many respondents added that if a car was designed to act in this fashion, they wouldn’t buy it, preferring instead to ride in a vehicle with an AI that protects the lives of its passengers above all else. Essentially, those surveyed say a self-driving car should definitely sacrifice its occupants for the sake of the greater good, just not when it’s their own lives or the lives of their children at stake.

Additionally, most people said self-driving vehicles should not put their passengers at risk if it meant only one or two pedestrians were guaranteed to live. So when autonomous vehicles become the norm, remember to walk around in large groups.

Researchers say that if both self-sacrificing and self-preserving autonomous cars went on sale, even the most altruistic among us would probably start putting themselves first.

“Even if you started off as one of the noble people who were willing to buy a self-sacrificing car, once you realize that most people are buying self-protective ones then you’re going to really reconsider why you’re putting yourself at risk to shoulder the burdens of the collective when no one else will,” said co-author Iyad Rahwan.

One way to avoid choosing between AVs that will save you and ones that may kill you is introducing government regulations that legally force manufacturers to program vehicles to take the self-sacrificing “utilitarian approach.” However, the survey participants said they were much less likely to purchase any autonomous vehicles if such regulations appeared.

The crux of this issue could be that while many people would sacrifice themselves when behind the wheel to save a large number of pedestrians, they don’t want to be in a situation where the choice is taken away from them. What if, for example, the autonomous vehicle makes a mistake and kills its passengers needlessly?

It’s worth remembering, though, that it will be a long time – decades, even – before self-driving vehicles populate our roads, and by then we’ll have hopefully solved some of the moral and social dilemmas posed by the vehicles. They may improve congestion, pollution, and road safety, but it seems the cars will bring with them some new problems of their own.

https://www.techspot.com/news/65333-would-you-self-driving-vehicle-kill-you.html