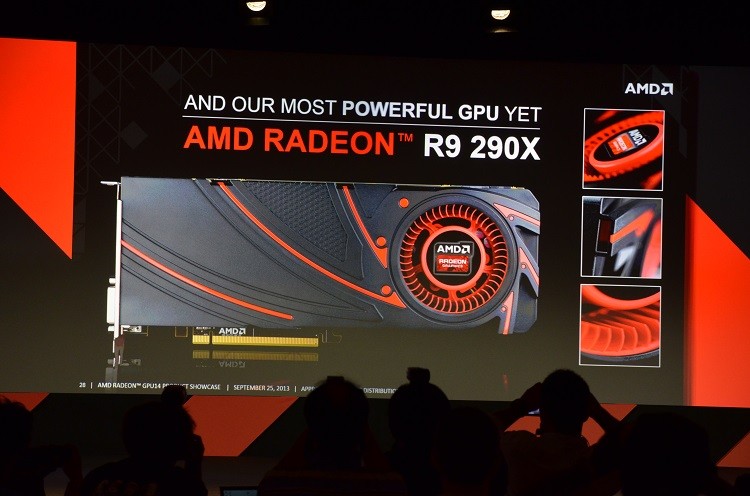

For the last few days, AMD has been hosting its GPU14 Tech Day event in Hawaii, gearing tech media up for the reveal of its next-generation graphics card. We've seen a few leaks surrounding this card, known as the Radeon R9 290X, and AMD has been pushing this announcement as the next big thing for PC gamers. But not until today have we officially heard the specifics of this graphics card, and what it will mean for the gaming market.

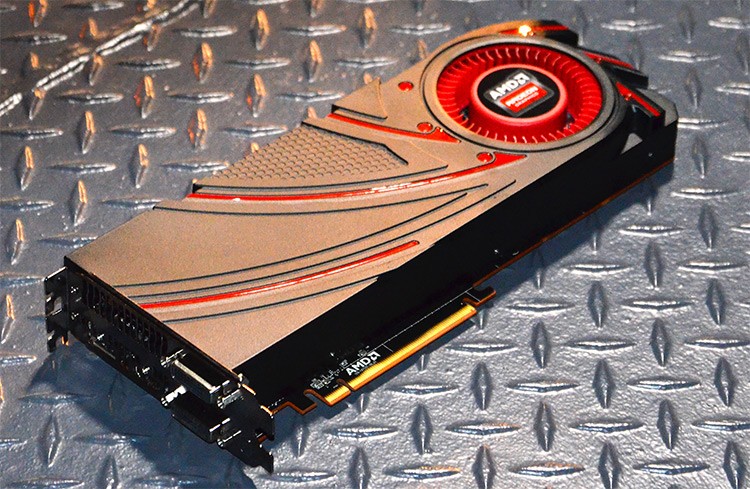

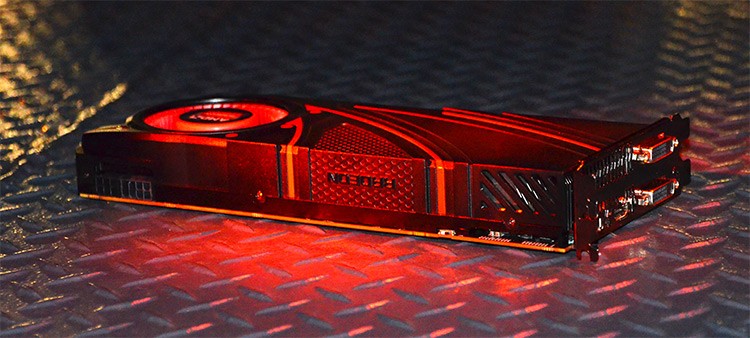

First up we have photos of the actual card, as seen at Tuesday's reception on the USS Missouri, accompanied by EA DICE for Battlefield 4. The design is fairly standard compared with other recent GPUs, with AMD once again opting for an air cooler at the end of the card that pushes air across the GPU core itself. The front panel sees dual DVI ports, plus an HDMI port and a DisplayPort, and for power there are 8-pin and 6-pin PCIe power connectors.

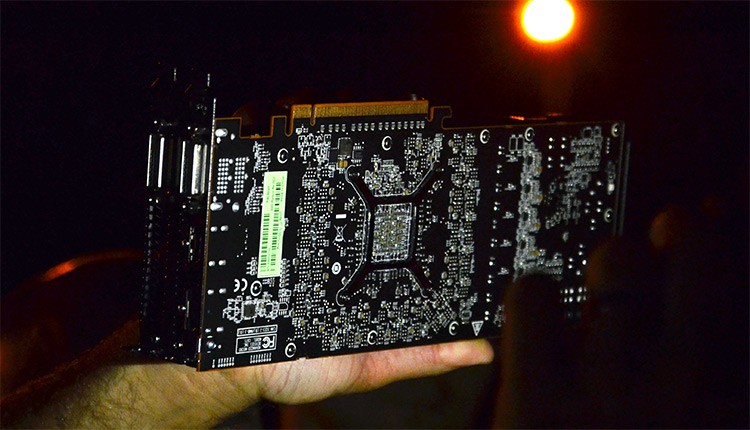

Perhaps most interesting, though, is the lack of a CrossFire port along the top of the card. Speculation at the event is that AMD will be using PCI Express 3.0 for connectivity between two GPUs, ditching their proprietary connector that added extra bandwidth. PCIe 3.0 is already found on many modern motherboards, and it supports 8 GT/s per lane (roughly 15.75 GB/s in each direction through an x16 slot), compared to 5 GT/s per lane (8 GB/s) for PCIe 2.0. This extra bandwidth should allow AMD to ditch the connector at the top, instead pushing the necessary frame information through PCIe 3.0.
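For anyone who wants to check those PCIe figures, here is a rough back-of-the-envelope sketch. It assumes the standard line encodings (8b/10b for PCIe 2.0, 128b/130b for PCIe 3.0) and ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The function and variable names are ours, purely for illustration.

```python
def pcie_bandwidth_gb_per_s(rate_gt_per_s, payload_bits, total_bits, lanes=16):
    """Approximate usable bandwidth in one direction, in GB/s.

    rate_gt_per_s: raw transfer rate per lane in GT/s
    payload_bits / total_bits: line-encoding efficiency (e.g. 8/10 or 128/130)
    lanes: number of lanes in the slot (16 for an x16 graphics slot)
    """
    # Each transfer carries one bit per lane; encoding overhead reduces the usable share.
    usable_gbit_per_lane = rate_gt_per_s * payload_bits / total_bits
    # Sum over all lanes, then convert gigabits to gigabytes.
    return usable_gbit_per_lane * lanes / 8


# PCIe 2.0: 5 GT/s per lane with 8b/10b encoding
pcie2_x16 = pcie_bandwidth_gb_per_s(5.0, 8, 10)
# PCIe 3.0: 8 GT/s per lane with 128b/130b encoding
pcie3_x16 = pcie_bandwidth_gb_per_s(8.0, 128, 130)

print(f"PCIe 2.0 x16: {pcie2_x16:.2f} GB/s per direction")
print(f"PCIe 3.0 x16: {pcie3_x16:.2f} GB/s per direction")
print(f"Speed-up: {pcie3_x16 / pcie2_x16:.2f}x")
```

The takeaway is that PCIe 3.0 nearly doubles the per-direction bandwidth of an x16 slot, partly from the higher transfer rate and partly from the more efficient encoding.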

There's 4GB of memory aboard this card, 5 TFLOPS of compute power, 300 GB/s of memory bandwidth, and the ability to process 4 billion triangles per second. The GPU itself packs a whopping 6 billion transistors, and is built to support 4K Ultra HD resolutions.

The R9 290X will be available alongside Battlefield 4 in an exclusive bundle, although prices and a launch window have yet to be announced.

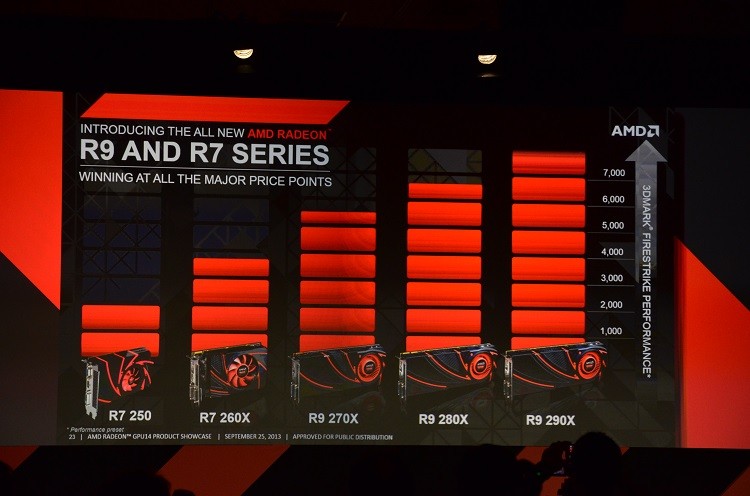

AMD also launched new R7 and R9 card lines, ranging from low-budget cards to enthusiast GPUs across many price points. The R7 250 is a sub-$89 budget card with 1GB of GDDR5 memory, the R7 260X is a 2GB card for $139, the R9 270X is a 2GB card for $199, and there will also be an R9 280X with 3GB of memory for $299. We also heard about an R9 290 later in the demonstration, but this wasn't detailed.

To support 4K displays, AMD has proposed a new VESA standard that will be embedded in 4K displays, allowing them to work out of the box with various devices. It will be supported in the Catalyst Control Panel, and should resolve issues with screen tearing at high resolutions.

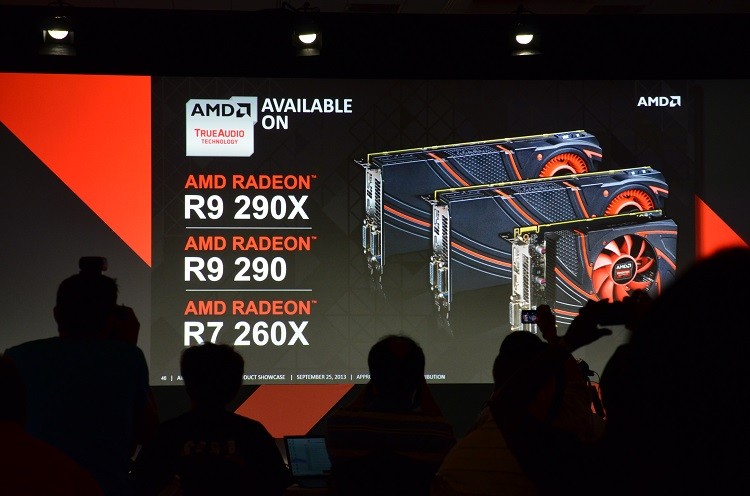

AMD TrueAudio will be a major part of Graphics Core Next 2.0-based graphics cards, available in the R9 290X, R9 290 and R7 260X. AMD is calling this technology a revolution for audio designers and game developers, similar to programmable shaders. We listened to an "AstoundSound" demo from GenAudio at the GPU14 Tech Day, and it sounded absolutely incredible in 7.1 surround sound.

TrueAudio appears to be dedicated DSP technology on AMD's latest chips, which plugins can access to reduce GPU and CPU load. It was mentioned that certain plugins, such as AstoundSound, will be available for PC, Xbox One and PlayStation developers, enhancing how we hear in-game sounds. TrueAudio will help greatly with reverberation technology, normally a CPU- and memory-intensive task, as audio tasks can be offloaded to the DSP.

Also announced today at AMD's GPU14 Tech Day:

Revolutionary 'Mantle' API unveiled to optimize GPU performance