This is the first part of an investigation into game streaming, a topic we've been asked about before, particularly after our CPU reviews, but one we've purposely left out because it's too complex to explore all the nitty-gritty details of streaming alongside everything else in those reviews.

In the coming weeks we'll dedicate a couple of articles to game streaming and provide you with a definitive answer on what sort of setup is best and which quality settings make the most sense to use. Today's investigation is focused on streaming quality settings: we want to find out which encoding settings deliver the best balance between quality and performance, and how each of the popular encoding modes differs in terms of results.

A bit of backstory before we get into the results. One of the key things we want to figure out first is whether software encoding on the CPU or hardware-accelerated encoding on the GPU is the better approach. This is really one of the key battles: if GPU encoding is the way to go, which CPU you need for streaming becomes largely irrelevant, whereas if CPU encoding is better, your choice of processor becomes a major factor in the level of quality, not just in terms of stream consistency but also game performance on your end.

Over the last few months in particular, GPU encoding has become more interesting because Nvidia updated their hardware encoding engine in their new GPU architecture, Turing.

While a lot of the focus went into improving HEVC compatibility and performance, which isn't really relevant for game streaming at the moment, Turing's new engine is also supposed to bring 15% better H.264 quality compared to the older engine in Pascal (GTX 10 series). So that's something we'll look into, and see how Turing stacks up against x264 software encoding.

On the GPU side, we'll be using the RTX 2080 for Turing encoding, a Titan X Pascal for Pascal encoding, and we'll also see how AMD stacks up with Vega 64.

The second part of the investigation involves software encoding with x264, using a variety of presets. We're going to leave a CPU comparison with software encoding for a separate article; in this one we're more interested in how each preset impacts performance and quality.

All testing was done with the Core i7-8700K overclocked to 4.9 GHz and 16 GB of DDR4-3000 memory, which is our current recommended platform for high-end gaming. In the future we'll see how the 9900K fares along with AMD Ryzen CPUs.

For capturing this footage we're using the latest version of OBS, set to record at 1080p 60 FPS with a constant bitrate of 6000 kbps. These are the maximum recommended quality settings for Twitch. If you were just recording gameplay for other purposes we'd recommend using a higher bitrate, but for streaming to Twitch you'll need to keep it to 6 Mbps or lower unless you are a Partner.
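To put that bitrate in perspective, here is a rough sketch of how few bits the encoder has to work with per pixel at these settings. The function name is ours, purely for illustration, and bits per pixel is only a crude yardstick, since real encoders spread bits unevenly across frames and regions.

```python
def bits_per_pixel(bitrate_kbps: float, width: int, height: int, fps: float) -> float:
    """Average number of encoded bits available per pixel, per frame."""
    bits_per_second = bitrate_kbps * 1000
    pixels_per_second = width * height * fps
    return bits_per_second / pixels_per_second


# The capture settings used in this article: 1080p, 60 FPS, 6000 kbps.
twitch_1080p60 = bits_per_pixel(6000, 1920, 1080, 60)
print(f"{twitch_1080p60:.4f} bits per pixel")  # prints 0.0482 bits per pixel
```

At roughly 0.05 bits per pixel, every preset is heavily constrained, which is why the encoder's efficiency matters so much in the comparisons that follow.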

We're testing with two games here: Assassin's Creed Odyssey, a highly GPU- and CPU-demanding title that CPU encoding struggles with, and Forza Horizon 4, which is less CPU demanding but is a fast-paced title that low-bitrate encoding can have issues with. Both titles present a bit of a worst-case scenario for game streaming, but in different ways.

We'll start with GPU encoding, because this is something that has been known to be rather terrible for a long time now. The key bit of interest here is to see how Turing has managed to improve things compared to past GPU encoding offerings, which were pretty much unusable next to CPU encoding options.

For Nvidia cards we used the NVENC option in OBS, and set it to use the High Quality preset at 6 Mbps. There are a few other preset options but High Quality produces, as the name suggests, the highest quality output. For AMD's Vega 64 we tried a range of encoding options, both in terms of preset and bitrate, without much luck as you'll see in the comparisons shortly.

Putting Turing and Pascal's NVENC implementations side by side, there honestly isn't that much of a difference at 6 Mbps. Both suffer from serious macroblocking effects, and in general there is a complete lack of detail to the image. In Forza Horizon 4 in particular, blocking is very noticeable on the road and looks terrible. Turing's encoder is perhaps a little sharper and in some situations is less blocky, but really both are garbage and if you want to stream games, this isn't the sort of quality that will impress your viewers.

AMD's encoder is even worse in that when your GPU utilization is up near 100%, the encoder completely craps its dacks and can't render more than about 1 frame per second, which wasn't an issue with the Nvidia cards.

I was able to get the encoder working with a frame limiter enabled, which brought GPU utilization down to around 60% in Forza Horizon 4, but even with the 'Quality' encoding preset, the output Vega 64 produced was worse than even Nvidia's Pascal cards.

With AMD's encoder out of the question right from the beginning, let's look at how Nvidia's NVENC compares to software x264 encoded on the CPU. In the slower moving Assassin's Creed Odyssey benchmark, NVENC even using the High Quality preset is noticeably worse than x264's veryfast preset, particularly for fine detail, even when both are limited to just 6 Mbps.

Veryfast x264 isn't amazing by any stretch, but the level of blocking and the lack of detail to Turing's NVENC implementation is terrible in comparison.

In the faster moving Forza Horizon 4 benchmark, Turing's NVENC does outperform x264 veryfast in some areas. NVENC again probably has slightly worse blocking, but veryfast really struggles with moving fine detail. With this level of motion, NVENC is approximately equal to x264's "faster" preset. There is no doubt, however, that x264's "fast" preset is significantly better than NVENC in fast motion, and completely smokes it when there is slow or no motion.

These results are perhaps a little surprising considering Nvidia claims their new Turing NVENC engine for H.264 encoding is around the mark of x264 fast encoding or even slightly better at 6000 kbps for 1080p 60 FPS streaming. But from what I observed, especially in Assassin's Creed Odyssey, software encoding was much better.

When looking purely at software x264 encoding presets, there are noticeable differences between each of veryfast, faster, fast and medium. In the slower moving Assassin's Creed Odyssey - and ignoring the clear performance issues with some presets for now - veryfast and faster don't deliver a great level of quality, with a lot of smearing, blocking in some areas, and a lack of fine detail particularly for objects in motion.

These two presets really should be reserved just for those that want to stream casually, because the presentation when capped to 6 Mbps isn't great.

The fast preset is the minimum I'd consider using for a quality game stream, particularly if you value image quality for your viewers. It provides a noticeable quality jump over faster, to the point where blurred fine detail now has definition.

Medium is a noticeable improvement again, but the gap between fast and medium is smaller than the gap between faster and fast. And as we'll see in a moment, good luck using the medium preset on the same system the game is running on. I did also check out the slow preset but at this point we're into diminishing returns for a massive performance hit.

For faster motion in Forza Horizon 4, again I'd completely dismiss the veryfast preset immediately because it's worse than NVENC for this type of content. Unfortunately the tight bitrate limit of 6000 kbps prevents any preset from doing true justice to the source material, but once again medium gets the closest and provides an improvement over fast.

The faster preset looks terrible, so again I'd suggest fast as the absolute bare minimum for this type of content. Really I'd recommend medium at a higher bitrate, but hey, Twitch has set the limit to 6 Mbps so it's basically the best we can do.
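If you'd like to compare presets on your own footage, one way is to re-encode a high-quality recording offline. The sketch below only builds ffmpeg command lines; it is not how we captured our footage (we used OBS), and the file names are hypothetical. It assumes an ffmpeg build with libx264, and it approximates constant bitrate by capping the maximum rate, which may not match OBS's CBR behaviour exactly.

```python
import shlex

# x264 presets discussed in this article, fastest first.
X264_PRESETS = ("veryfast", "faster", "fast", "medium", "slow")


def x264_command(source: str, preset: str, output: str, bitrate_kbps: int = 6000) -> list:
    """Build an ffmpeg argument list for a 1080p60 x264 encode at a capped bitrate."""
    if preset not in X264_PRESETS:
        raise ValueError(f"unexpected preset: {preset}")
    rate = f"{bitrate_kbps}k"
    return [
        "ffmpeg", "-i", source,
        "-c:v", "libx264", "-preset", preset,
        "-b:v", rate, "-maxrate", rate,       # target and cap at the same rate
        "-bufsize", f"{2 * bitrate_kbps}k",   # rate-control buffer (assumed: 2x bitrate)
        "-s", "1920x1080", "-r", "60",
        "-an",                                # drop audio, we only compare video
        output,
    ]


# "gameplay_source.mkv" is a hypothetical high-bitrate recording.
for preset in ("veryfast", "faster", "fast", "medium"):
    cmd = x264_command("gameplay_source.mkv", preset, f"out_{preset}.mp4")
    print(shlex.join(cmd))
```

Encoding offline removes the real-time pressure, so this only tells you about image quality at each preset, not whether your system can keep up while the game is running.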

Performance

But image quality is only one part of the equation, of course. The other is performance, and when you're streaming your gameplay from the very same computer you are playing on, it's important that both your gameplay experience and the performance of the stream are adequate.

We'll start here by looking at GPU encoding and see how that affects performance...

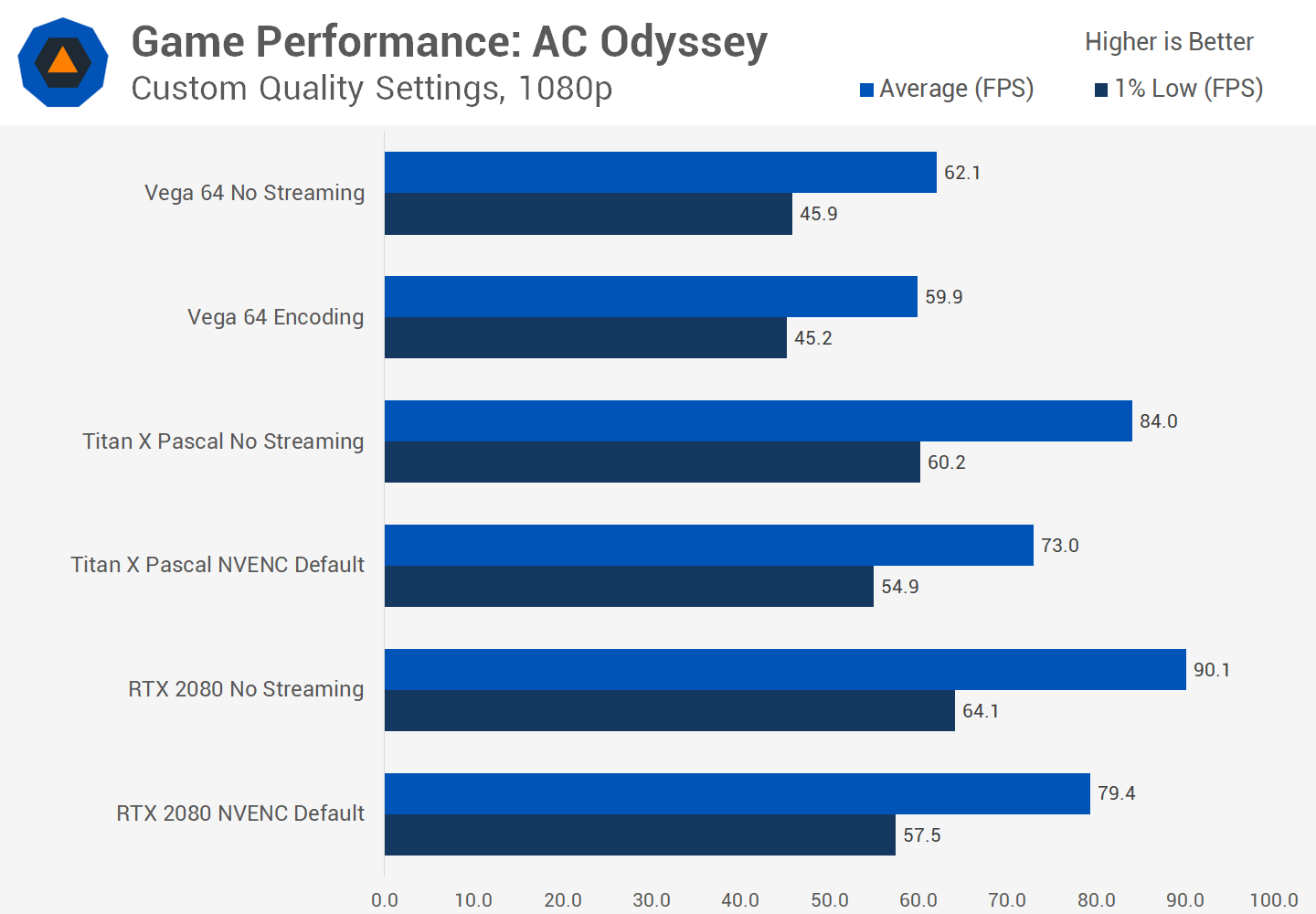

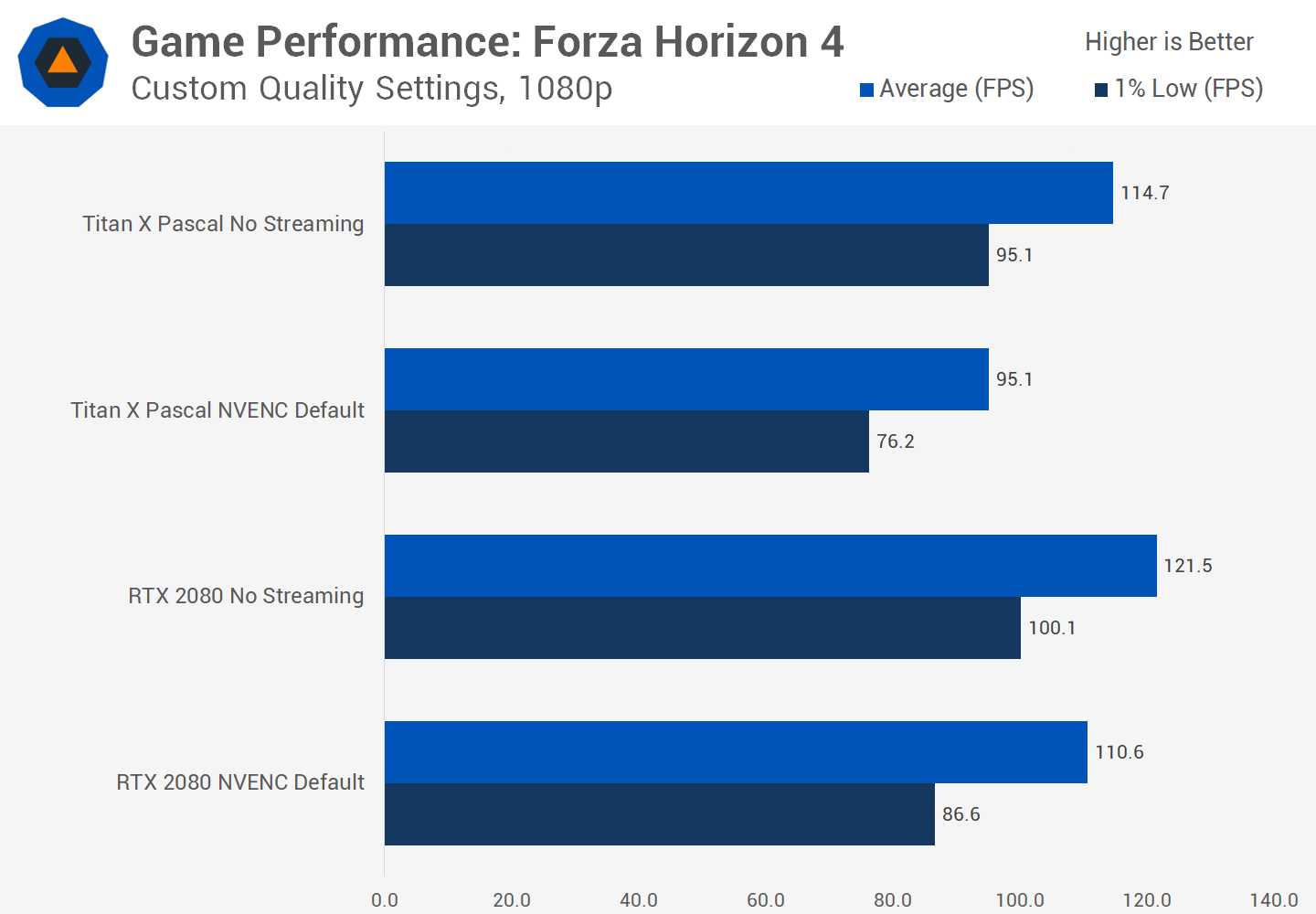

Enabling either Pascal or Turing's NVENC engine reduces the game's frame rate by around 10 to 20%, depending on the game, compared to not capturing the game at all. The more GPU limited the game is, the more of an impact NVENC will have, which is why Forza Horizon 4 is impacted more heavily than the heavy CPU user in Assassin's Creed Odyssey.

The good news, though, is while you'll be running the game at a lower framerate while NVENC is working, the stream itself will have perfect performance with no dropped frames, even if the game is using 100% of the GPU. AMD's encoding engine doesn't impact the game's framerate nearly as much, but it drops about 90% of the frames when the GPU is being heavily used, making it useless as we already discussed earlier.

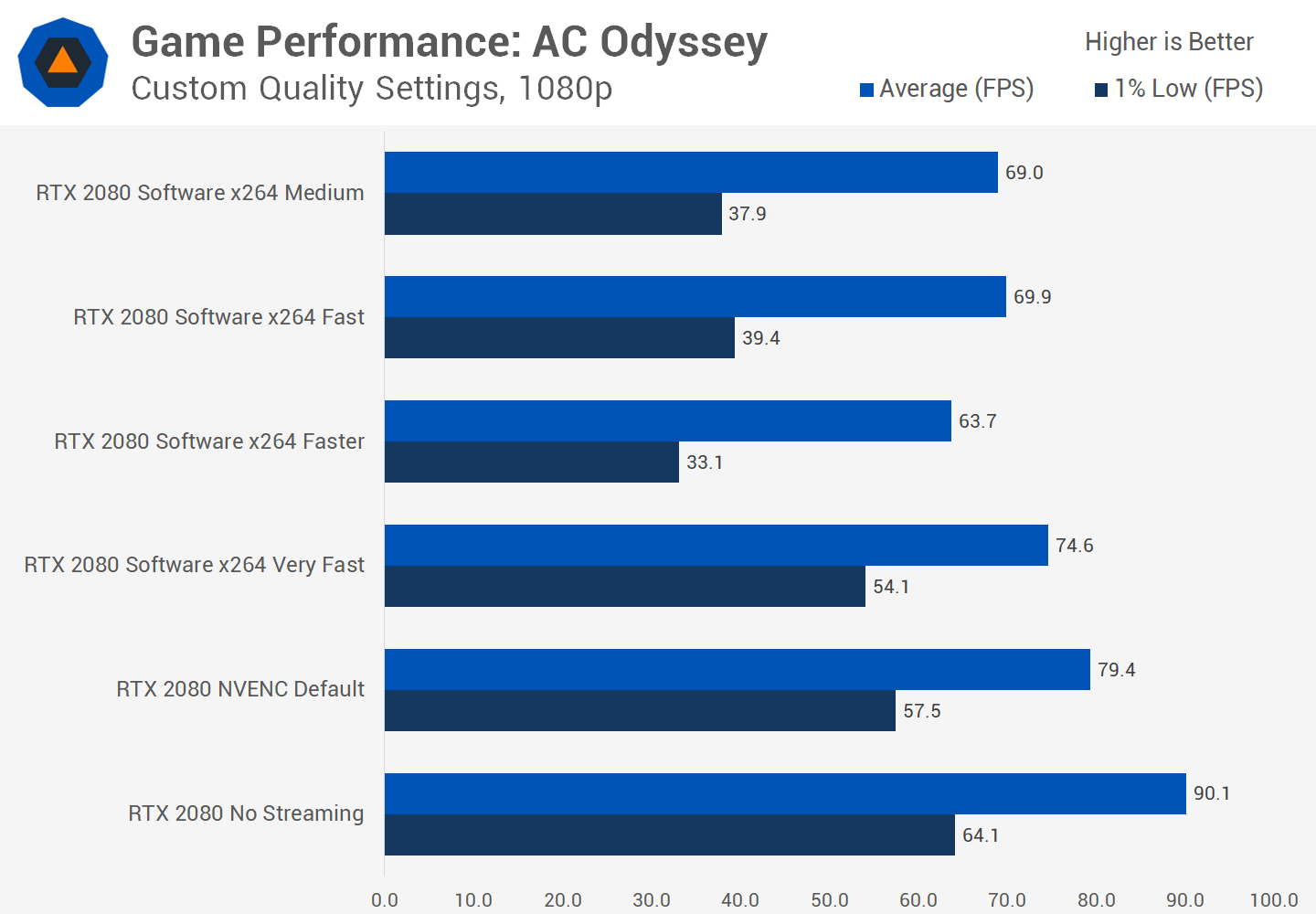

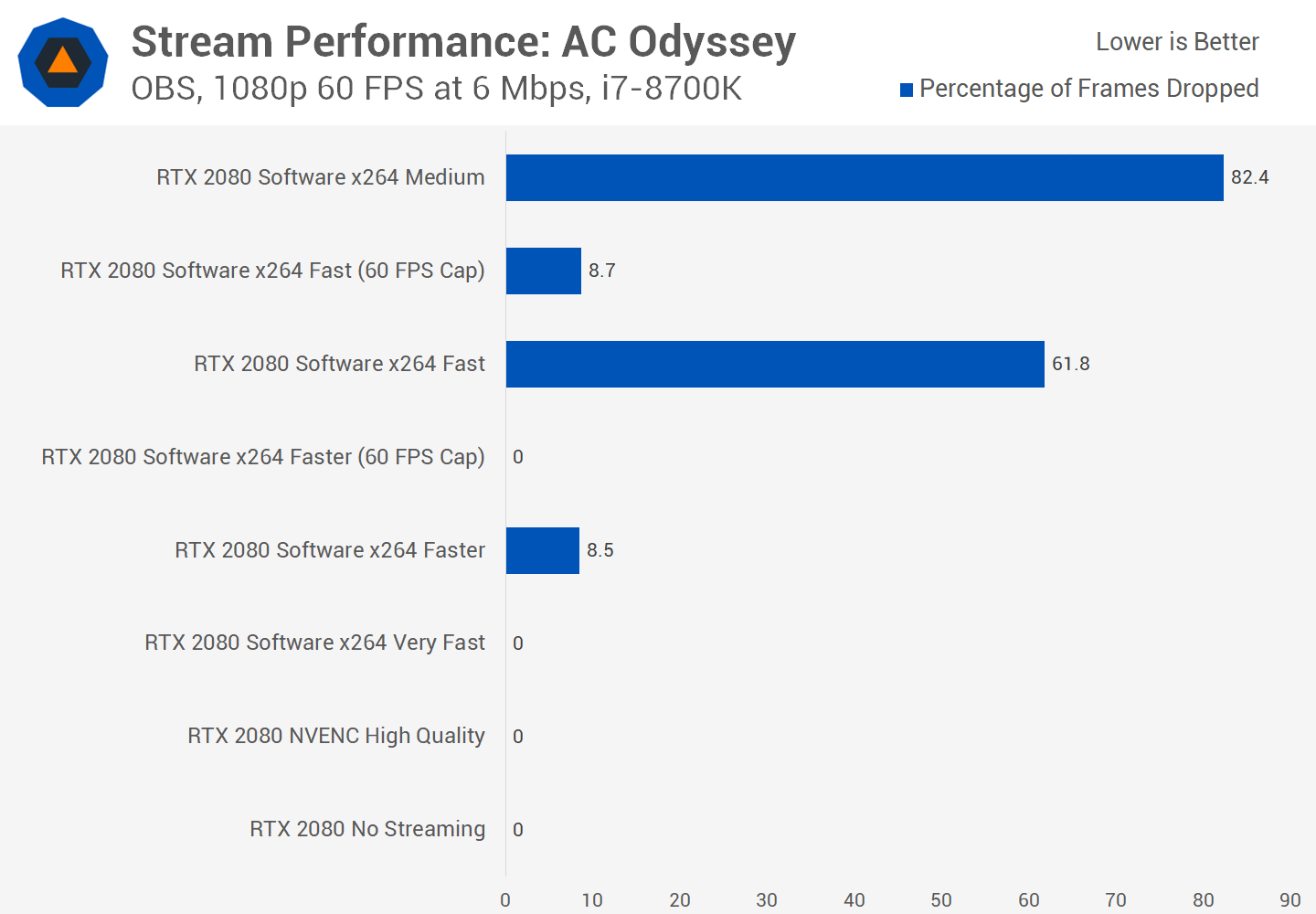

Software encoding performance depends on the type of game you're playing. In the case of Assassin's Creed Odyssey, which heavily utilizes both the CPU and GPU, streaming using the CPU will have a noticeable effect on frame rate, and high quality encoding presets will struggle to keep up.

With the Core i7-8700K and the RTX 2080 playing Odyssey using our custom quality preset, we were only able to encode the game using the x264 veryfast preset without suffering from frame drops in the stream output. Encoding with x264 veryfast also reduced the frame rate by 17%, which was a larger reduction than simply using NVENC. Veryfast encoding is better visually than NVENC for this type of game, so the performance hit is worth it.

However, moving to even the 'faster' preset introduces frame drops into the stream output. With a frame drop rate of 8.5%, the output is stuttery and hard to watch. Meanwhile, game performance has dropped from 90 FPS on average to just 63 FPS, with a 1% low only just above 30 FPS. It's clear this preset is choking the system. And it gets worse with fast and medium, which see frame drop rates of 62 and 82 percent respectively. Interestingly, game performance is slightly better with these presets than with faster, but I suspect that's due to the encoder being overwhelmed, which leaves the game slightly more CPU headroom for rendering.
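To make those percentages a little more tangible, here's a quick back-of-the-envelope sketch using the numbers above. The helper names are ours, and it assumes dropped frames are spread evenly across each second of a 60 FPS stream, which won't be strictly true in practice.

```python
def percent_drop(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, as a percentage."""
    return (before - after) / before * 100


def dropped_frames_per_second(drop_rate_percent: float, stream_fps: int = 60) -> float:
    """Average stream frames lost each second at a given drop rate."""
    return stream_fps * drop_rate_percent / 100


# Average game frame rate falling from 90 FPS to 63 FPS with the faster preset.
print(f"Average FPS reduction with faster: {percent_drop(90, 63):.0f}%")

# Dropped-frame rates measured in Assassin's Creed Odyssey.
for preset, rate in [("faster", 8.5), ("fast", 62), ("medium", 82)]:
    lost = dropped_frames_per_second(rate)
    print(f"{preset}: about {lost:.0f} of 60 stream frames lost each second")
```

Seen this way, even the 8.5% figure means several missing frames every second, which lines up with how stuttery the output looked.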

One strategy to improve performance might be to cap the game to 60 FPS, as those watching your stream will be limited to 60 FPS anyway. But with this cap in place, the story isn't much better: the fast preset still sees 9% of all frames dropped, while the faster preset just scrapes in with no frame drops, but with a 1% low in the game of around 40 FPS. The only option to use fast would be to reduce the visual quality and try again, but for this article we weren't really interested in optimizing Assassin's Creed specifically for streaming with our hardware.

With the 8700K limited to veryfast streaming or GPU streaming in this title, it will be interesting to see how other CPUs stack up in part 2 of this investigation. But certainly with the 8700K - a high-end, popular gaming CPU - what we've shown here is a typical scenario for streaming in a title that heavily utilizes the CPU and GPU. Those with lower-tier CPUs and in particular, lower core count Intel CPUs, will run into this veryfast limit more often.

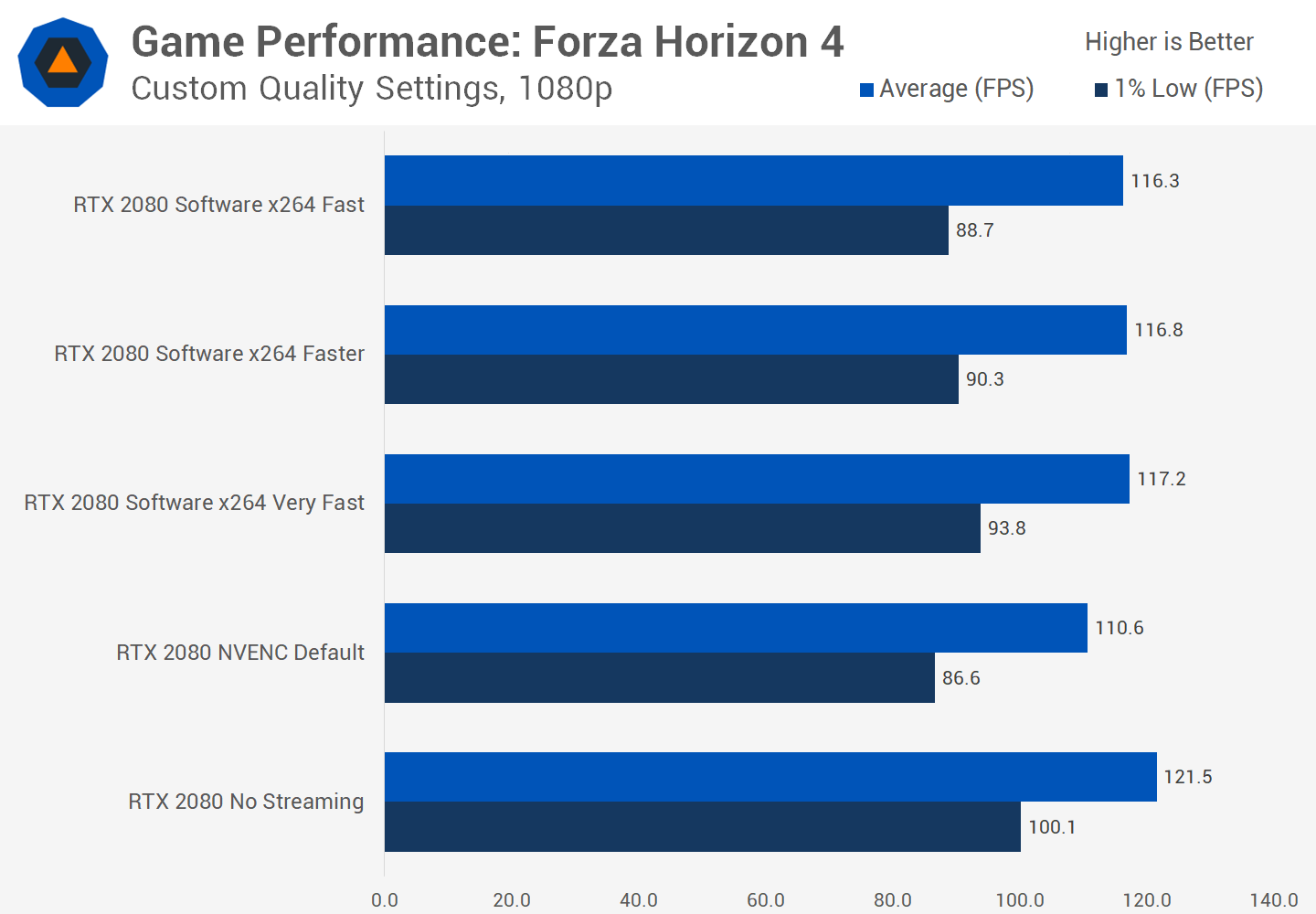

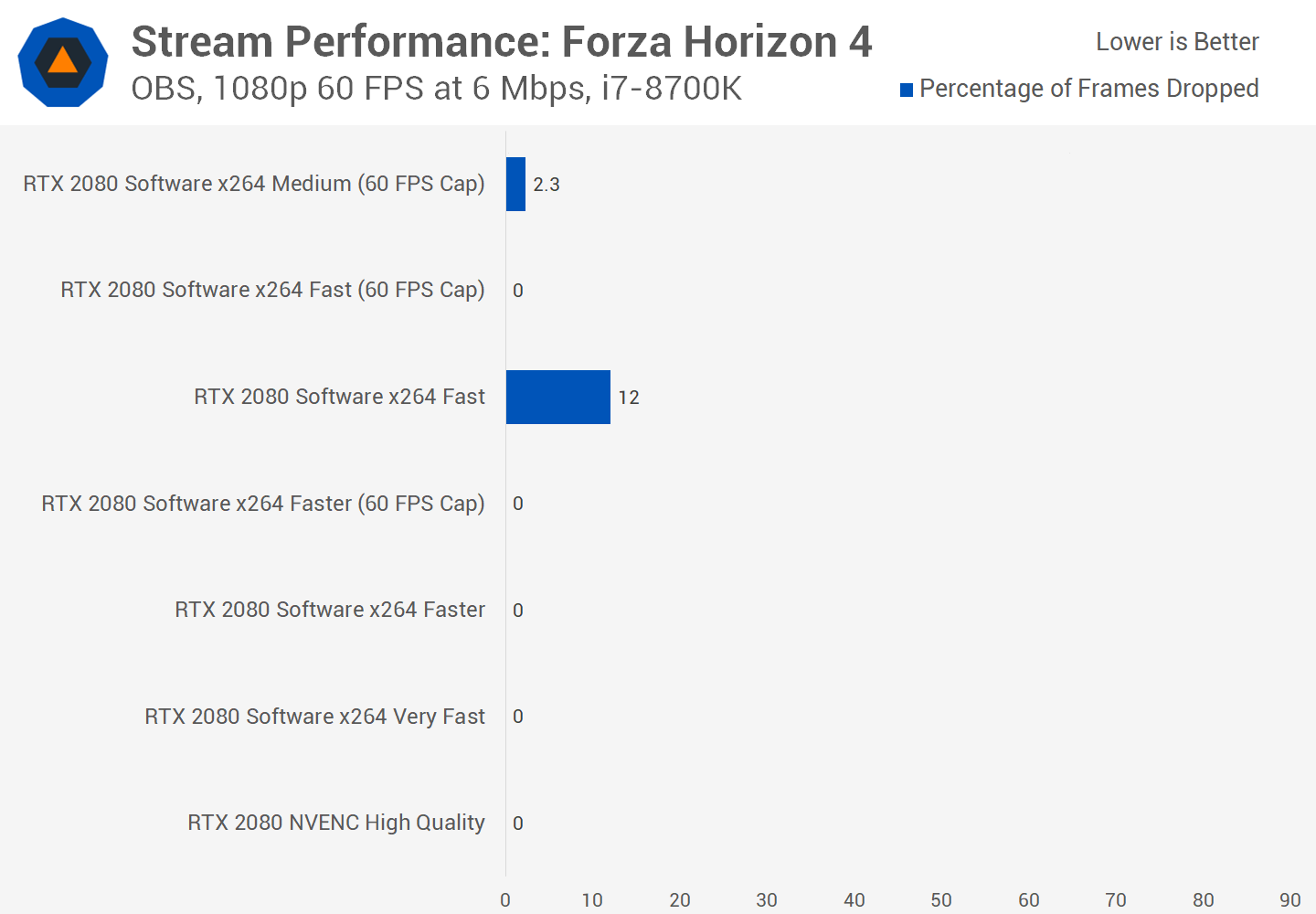

As for Forza Horizon 4, which is far less demanding on the CPU, it's an interesting situation because software encoding on the CPU actually delivers higher game performance than hardware accelerated GPU encoding. This is because there is plenty of CPU headroom to encode on the CPU without eating into GPU performance.

Using the x264 veryfast preset impacted game performance by 6% looking at 1% lows, but the difference between veryfast and fast was only a further 5% drop despite the massive increase in CPU power required to encode using the fast preset.

On the stream side, we saw no frame drops with the veryfast and faster presets; however, moving to fast saw a 12% frame drop rate for the encoded stream, which caused unpleasant stuttering. Considering we are running the game at nearly 120 FPS, though, we can quite easily implement a 60 FPS cap to reduce the game's stress on the CPU. With that cap in place, the fast preset becomes usable with zero frame drops in the output. The cap also opens up the option of medium preset encoding, although with the 8700K we still saw around 2% of all frames dropped with the 60 FPS cap in place, which isn't ideal. If we wanted to go with medium encoding we'd have to look at reducing the game's CPU load through quality setting tweaks.
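The way we've been picking presets boils down to a simple rule: use the slowest preset that doesn't drop frames. Here's a minimal sketch of that rule applied to the drop rates reported in this article. The function and dictionary names are ours, and presets we didn't quote a figure for in a given scenario are simply left out.

```python
# x264 presets covered here, ordered from lowest to highest quality.
PRESETS = ["veryfast", "faster", "fast", "medium"]


def best_usable_preset(drop_rates: dict, max_drop_percent: float = 0.0):
    """Return the highest-quality measured preset whose dropped-frame rate is
    at or below max_drop_percent, or None if no measured preset qualifies."""
    for preset in reversed(PRESETS):
        rate = drop_rates.get(preset)
        if rate is not None and rate <= max_drop_percent:
            return preset
    return None


# Dropped-frame percentages reported in this article (Core i7-8700K).
measurements = {
    "Odyssey, uncapped": {"veryfast": 0.0, "faster": 8.5, "fast": 62.0, "medium": 82.0},
    "Odyssey, 60 FPS cap": {"faster": 0.0, "fast": 9.0},
    "Forza, uncapped": {"veryfast": 0.0, "faster": 0.0, "fast": 12.0},
    "Forza, 60 FPS cap": {"fast": 0.0, "medium": 2.0},
}

for scenario, rates in measurements.items():
    print(f"{scenario}: {best_usable_preset(rates)}")
```

Note this only looks at the stream side. It ignores what the encode does to your own game performance, such as the roughly 40 FPS 1% low we saw with faster in the capped Odyssey run, so treat it as a starting point, not a verdict.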

Wrap Up (Until Part 2 of this Series)

So with all that testing done, there are a few interesting takeaways here. The key finding for GPU buyers at the moment is that Turing's GPU encoding engine for H.264 isn't significantly improved compared to Pascal and certainly doesn't turn GPU encoding into a viable option for streaming.

The only time I'd suggest using NVENC is with fast paced, high motion games with a system that cannot CPU encode using the x264 faster preset or better. Games with less motion should be encoded using the veryfast x264 preset rather than NVENC, and veryfast should be achievable on most PCs that have been built with streaming in mind.

On the AMD front, their encoding engine needs plenty of work to be even considered. It doesn't work with high GPU loads and when it does work, the output quality is terrible.

CPU encoding is obviously a trickier story, as the level of x264 encoding you can manage will depend on your CPU and, crucially, the type of game you are playing. With our 8700K system we ranged from being stuck with veryfast encoding in a CPU demanding game, to being able to use the fast or even medium preset with a steady 60 FPS game output at decent quality settings in a less CPU demanding title.

But what streamers should be aiming for is to use the fast preset at a minimum. That's the first preset where the output quality is decent enough at 6 Mbps for Twitch streaming, and while it's not fantastic for fast motion scenes, fast is much better than either the faster or veryfast presets, yet it's still achievable on decent hardware. Medium is also worth trying for those with top-end systems, while I really wouldn't bother with any of the even slower presets.

While it's nice to be able to game and stream on the one PC, this advice really only applies to casual or part-time streamers. Anyone who is streaming professionally or full time should use a second, dedicated stream capture PC with a decent capture card and CPU. This then fully offloads the encoding work, allowing you to comfortably use the medium preset or slower for the best quality streams, without impacting your game performance.

We've now discovered what the optimal presets are from a quality perspective. In the second part of this series on game streaming, we'll investigate which CPUs are capable of encoding at these presets, so stay tuned for that.

Masthead credit: Sean Do