What just happened? Nvidia has announced first-quarter results for fiscal 2026, and judging by the early numbers, the April downturn looks to be well in the rearview mirror. Nvidia reported revenue of $44.1 billion for the three-month period ending April 27, 2025, an increase of 12 percent quarter over quarter and up a whopping 69 percent from the same period a year earlier.

For comparison, analysts polled by LSEG were expecting $43.31 billion for the quarter. Non-GAAP diluted earnings per share were $0.81.

Nvidia's data center division continued to impress, netting $39.1 billion in revenue. That is up 10 percent over the previous quarter and 73 percent from a year ago, and is thanks almost exclusively to the AI revolution.

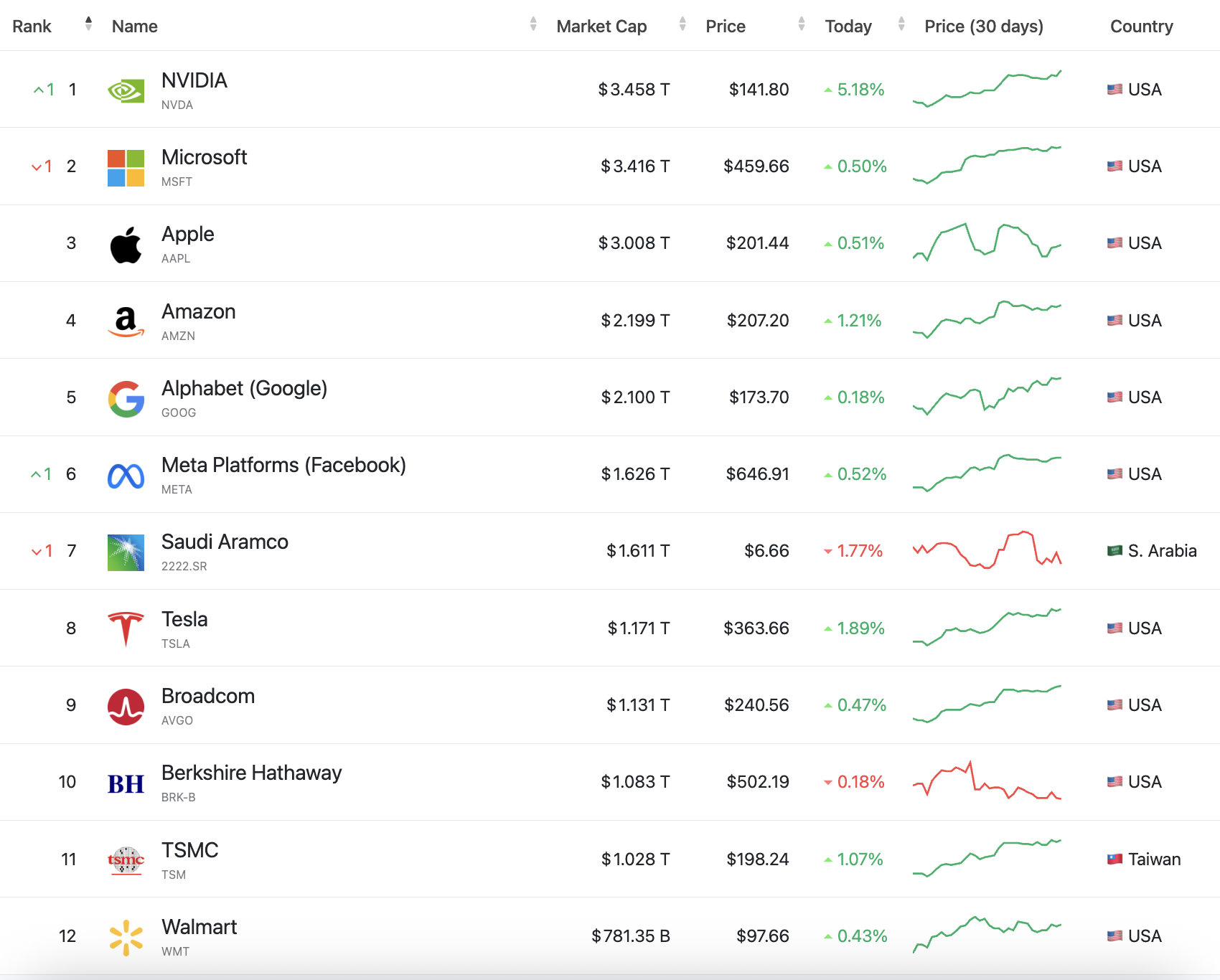

Source: Largest companies by marketcap

Revenue from gaming reached a record $3.8 billion, up 48 percent quarter over quarter and 42 percent year over year. This division looks likely to hold firm in the coming quarters as Nintendo gears up for the launch of its Switch 2 console, which is powered by hardware from Nvidia and launches on June 5.

Nvidia CEO Jensen Huang said countries around the world are recognizing AI as essential infrastructure, just like electricity and the Internet, adding that he is proud that Nvidia is standing at the center of the transformation.

Nvidia will pay a quarterly cash dividend of $0.01 per share on July 3 to shareholders of record on June 11.

Looking ahead to the current quarter, Nvidia expects to bring in $45 billion in revenue (plus or minus two percent). The chipmaker initially expected to generate an extra $8 billion from the sale of H20 products exported to China, but new US law now requires an expensive license to do so.

Nvidia incurred a $4.5 billion charge in the first quarter for the same reason, and saw its non-GAAP gross margin fall from 71.3 percent to 61.0 percent as a result. Earnings per share would have been $0.96 had it not been for the new law.

Shares in Nvidia are up nearly four percent in early morning trading.
